What Is Continuous Integration? A Technical Guide for Developers

Learn what continuous integration (CI) is and how it works. This guide breaks down CI pipelines, best practices, and essential tools for developers.

Continuous Integration (CI) is a software development practice where developers frequently merge their code changes into a central version control repository. Following each merge, an automated build and test sequence is triggered. The primary goal is to provide rapid feedback by catching integration errors as early as possible. This practice avoids the systemic issues of "integration hell," where merging large, divergent feature branches late in a development cycle leads to complex conflicts and regressions.

Every git push to the main or feature branch initiates a pipeline that compiles the code, runs a suite of automated tests (unit, integration, etc.), and reports the results. This automated feedback loop allows developers to identify and fix bugs quickly, often within minutes of committing the problematic code.

What Continuous Integration Really Means

Technically, CI is a workflow automation strategy designed to mitigate the risks of merging code from multiple developers. Without CI, developers might work in isolated feature branches for weeks. When the time comes to merge, the git merge or git rebase operation can result in a cascade of conflicts that are difficult and time-consuming to resolve. The codebase may enter a broken state for days, blocking further development.

CI fundamentally changes this dynamic. Developers integrate small, atomic changes frequently—often multiple times a day—into a shared mainline, typically main or develop.

The moment a developer pushes code to a shared Git repository, a CI server (e.g., Jenkins, GitLab Runner, GitHub Actions runner) is notified of the change, typically via a webhook. It then executes a predefined pipeline script. This script orchestrates a series of jobs: it spins up a clean build environment (often a Docker container), clones the repository, installs dependencies (npm install, pip install -r requirements.txt), compiles the code, and runs a battery of tests. If any step fails, the pipeline halts and immediately notifies the team.
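The halt-on-first-failure behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular CI server is implemented: the `run_pipeline` function and the demo step names are hypothetical, and a real pipeline would invoke its actual build tool and test runner instead of the placeholder commands.

```python
import subprocess
import sys
from typing import List, Tuple


def run_pipeline(steps: List[Tuple[str, List[str]]]) -> Tuple[bool, List[str]]:
    """Run each (name, command) step in order and stop at the first failure.

    Returns (passed, names_of_steps_that_ran).
    """
    executed = []
    for name, command in steps:
        executed.append(name)
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            # A non-zero exit code fails the step and halts the pipeline.
            print(f"[FAIL] {name}: exit code {result.returncode}")
            return False, executed
        print(f"[ OK ] {name}")
    return True, executed


if __name__ == "__main__":
    # Hypothetical steps standing in for install, test, and package commands.
    demo_steps = [
        ("install", [sys.executable, "-c", "print('installing dependencies')"]),
        ("test", [sys.executable, "-c", "import sys; sys.exit(1)"]),
        ("package", [sys.executable, "-c", "print('never reached')"]),
    ]
    passed, executed = run_pipeline(demo_steps)
    print("green" if passed else "red", executed)
```

Because the `test` step exits with a non-zero code, the `package` step never runs. That is the property that keeps a defective change from progressing.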

The Power Of The Feedback Loop

The immediate, automated feedback loop is the core technical benefit of CI. A developer knows within minutes if their latest commit has introduced a regression. Because the changeset is small and the context is fresh, debugging and fixing the issue is far faster than dissecting weeks of accumulated changes. This disciplined practice is engineered to achieve specific technical goals:

- Reduce Integration Risk: Merging small, atomic commits dramatically reduces the scope and complexity of code conflicts, making them trivial to resolve.

- Improve Code Quality: Automated test suites act as a regression gate, catching bugs the moment they are introduced and preventing them from propagating into the main codebase.

- Increase Development Velocity: By automating integration and testing, developers spend less time on manual debugging and merge resolution, freeing them up to focus on building features.

To implement CI effectively, teams must adhere to a set of core principles that define the practice beyond just the tooling.

Core Principles Of Continuous Integration At A Glance

| Principle | Description | Technical Goal |
|---|---|---|
| Maintain a Single Source Repository | All source code, build scripts (Dockerfile, Jenkinsfile), and infrastructure-as-code definitions (terraform, ansible) reside in a single version control system. | Establish a canonical source of truth, enabling reproducible builds and auditable changes for the entire system. |
| Automate the Build | The process of compiling source code, linking libraries, and packaging the application into a deployable artifact (e.g., a JAR file, a Docker image) is fully scripted and repeatable. | Ensure build consistency across all environments and eliminate "works on my machine" issues. |
| Make the Build Self-Testing | The build script is instrumented to automatically execute a comprehensive suite of tests (unit, integration, etc.) against the newly built artifact. | Validate the functional correctness of every code change and prevent regressions from being merged into the mainline. |
| Commit Early and Often | Developers integrate their work into the mainline via short-lived feature branches and pull requests multiple times per day. | Minimize the delta between branches, which keeps integrations small, reduces conflict complexity, and accelerates the feedback loop. |
| Keep the Build Fast | The entire CI pipeline, from code checkout to test completion, should execute in under 10 minutes. | Provide rapid feedback to developers, allowing them to remain in a productive state and fix issues before context-switching. |
| Everyone Can See the Results | The status of every build is transparent and visible to the entire team, typically via a dashboard or notifications in a chat client like Slack. | Promote collective code ownership and ensure that a broken build (red status) is treated as a high-priority issue for the whole team. |

These principles create a system where the main branch is always in a stable, passing state, ready for deployment at any time.

Why CI Became an Essential Practice

To understand the necessity of Continuous Integration, one must consider the software development landscape before its adoption—a state often referred to as "merge hell." In this paradigm, development teams practiced branch-based isolation. A developer would create a long-lived feature branch (feature/new-checkout-flow) and work on it for weeks or months.

This isolation led to a high-stakes, high-risk integration phase. When the feature was "complete," merging it back into the main branch was a chaotic and unpredictable event. The feature branch would have diverged so significantly from main that developers faced a wall of merge conflicts, subtle logical bugs, and broken dependencies. Resolving these issues was a manual, error-prone process that could halt all other development activities for days.

This wasn't just inefficient; it was technically risky. The longer the branches remained separate, the greater the semantic drift between them, increasing the probability of a catastrophic merge that could destabilize the entire application.

The Origins of a Solution

The concept of frequent integration has deep roots in software engineering, but it was crystallized by the Extreme Programming (XP) community. While Grady Booch is credited with first using the term in 1994, it was Kent Beck and his XP colleagues who defined CI as the practice of integrating multiple times per day to systematically eliminate the "integration problem." For a deeper dive, you can explore a comprehensive history of CI to see how these concepts evolved.

They posited that the only way to make integration a non-event was to make it a frequent, automated, and routine part of the daily workflow.

A New Rule for Rapid Feedback

One of the most impactful heuristics to emerge from this movement was the "ten-minute build," championed by Kent Beck. His reasoning was pragmatic: if the feedback cycle—from git push to build result—takes longer than about ten minutes, developers will context-switch to another task. This delay breaks the flow of development and defeats the purpose of rapid feedback. A developer who has already moved on is far less efficient at fixing a bug than one who is notified of the failure while the code is still fresh in their mind.

This principle forced teams to optimize their build processes and write efficient test suites. Continuous Integration was not merely a new methodology; it was a pragmatic engineering solution to a fundamental bottleneck in collaborative software development. It transformed integration from a feared, unpredictable event into a low-risk, automated background process.

Anatomy of a Modern CI Pipeline

Let's dissect the technical components of a modern CI pipeline. This automated workflow is a sequence of stages that validates source code and produces a tested artifact. While implementations vary, the core architecture is designed for speed, reliability, and repeatability.

The process is initiated by a git push command from a developer's local machine to a remote repository hosted on a platform like GitHub or GitLab.

This push triggers a webhook, an HTTP POST request sent from the Git hosting service to a predefined endpoint on the CI server. The webhook payload contains metadata about the commit (author, commit hash, branch name). The CI server (Jenkins, GitLab CI, GitHub Actions) receives this payload, parses it, and queues a new pipeline run based on a configuration file checked into the repository (e.g., Jenkinsfile, .gitlab-ci.yml, .github/workflows/main.yml).
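To make the webhook step concrete, here is a hedged Python sketch of what a receiving endpoint might do with the request body. The field names (`ref`, `after`, `pusher.name`) and the `sha256=<hexdigest>` signature format are modeled on GitHub's push event. Other hosting services use different names, so treat them as assumptions. `PushEvent`, `verify_signature`, and `parse_push_event` are hypothetical helpers, not part of any CI server's API.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass
class PushEvent:
    branch: str
    commit: str
    author: str


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check an HMAC-SHA256 signature of the form 'sha256=<hexdigest>'."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature_header)


def parse_push_event(body: bytes) -> PushEvent:
    """Extract the metadata a CI server needs to queue a pipeline run."""
    payload = json.loads(body)
    ref = payload["ref"]  # e.g. "refs/heads/main"
    prefix = "refs/heads/"
    branch = ref[len(prefix):] if ref.startswith(prefix) else ref
    return PushEvent(
        branch=branch,
        commit=payload["after"],          # hash of the newest pushed commit
        author=payload["pusher"]["name"],
    )
```

Verifying the signature before parsing matters because the endpoint is reachable over HTTP. Without that check, anyone who discovers the URL could queue pipeline runs.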

The Build and Test Sequence

The CI runner first provisions a clean, ephemeral environment for the build, typically a Docker container specified in the pipeline configuration. This isolation ensures that each build is reproducible and not contaminated by artifacts from previous runs.

The runner then executes the steps defined in the pipeline script:

- Compile and Resolve Dependencies: The build agent fetches all required libraries and packages from a repository manager like Nexus or Artifactory (or public ones like npm or Maven Central) using a dependency manifest (package.json, pom.xml). It then compiles the source code into an executable artifact. This step fails if there are compilation errors or missing dependencies.

- Execute Unit Tests: This is the first validation gate, designed for speed. The pipeline executes unit tests using a framework like JUnit or Jest. These tests run in memory and validate individual functions and classes in isolation, providing feedback on the core logic in seconds. A code coverage tool like JaCoCo or Istanbul is often run here to ensure test thoroughness.

- Perform Static Analysis: The pipeline runs static analysis tools (linters) like SonarQube, ESLint, or Checkstyle. These tools scan the source code—without executing it—to detect security vulnerabilities (e.g., SQL injection), code smells, stylistic inconsistencies, and potential bugs. This stage provides an early quality check before more expensive tests are run.
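As an illustration of the unit-test gate in the list above, the following sketch uses Python's built-in `unittest` module rather than JUnit or Jest. The `apply_discount` function is a hypothetical piece of application logic invented for the example. The point is only that each test exercises one function in isolation, with no network, disk, or database involved.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(999, 0), 999)

    def test_invalid_percent_is_rejected(self):
        # Invalid input should fail loudly rather than produce a wrong price.
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```

Saved as a test module, this would typically be run with `python -m unittest`. The command exits with a non-zero status when any test fails, which is what lets a pipeline treat the suite as a gate.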

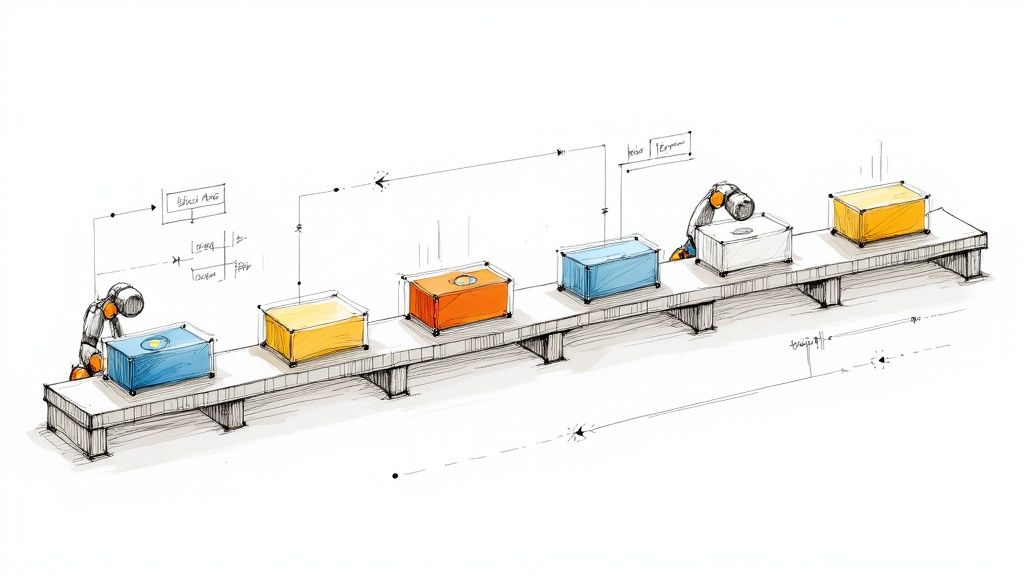

This visual breaks down the core stages—building, testing, and integrating—that form the backbone of any solid CI pipeline.

As you can see, each stage acts as a quality gate. A failure at any stage halts the pipeline and reports an error, preventing defective code from progressing.

The Verdict: Green or Red

If the code passes these initial stages, the pipeline proceeds to more comprehensive testing. Integration tests are executed next. These tests verify the interactions between different components or services. For example, they might spin up a test database in a separate container and confirm that the application can correctly read and write data.
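A read-and-write check of this kind might look like the sketch below. To keep it self-contained, an in-memory SQLite database stands in for the containerized test database, which is a simplifying assumption. `UserRepository` is a hypothetical data-access class, not something from a specific framework.

```python
import sqlite3
from typing import Optional


class UserRepository:
    """Thin data-access layer under test (hypothetical)."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users "
            "(id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )

    def add(self, email: str) -> int:
        cursor = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return cursor.lastrowid

    def find_email(self, user_id: int) -> Optional[str]:
        row = self.conn.execute(
            "SELECT email FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def test_round_trip() -> None:
    """Write a row through the repository and read it back from the database."""
    conn = sqlite3.connect(":memory:")  # fresh, isolated database for each run
    try:
        repo = UserRepository(conn)
        user_id = repo.add("dev@example.com")
        assert repo.find_email(user_id) == "dev@example.com"
        assert repo.find_email(user_id + 1) is None
    finally:
        conn.close()
```

Unlike a unit test, this exercises the real SQL and the real database driver together, so it can catch schema or query mistakes that a mocked repository would hide.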

The entire pipeline operates on a binary outcome. If every stage, from compilation to the final integration test, completes successfully, the build is marked as 'green' (pass). This signals that the new code compiles, passes every automated check, and is safe to merge into the mainline.

Conversely, if any stage fails, the pipeline immediately stops and the build is flagged as 'red' (fail). The CI server sends a notification via Slack, email, or other channels, complete with logs and error messages that pinpoint the exact point of failure.
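As a rough sketch of what such a notification could contain, the function below builds a JSON body with a single `text` field, the shape accepted by Slack incoming webhooks. The function name, message layout, and the choice to include only the tail of the log are assumptions made for illustration. Actually sending the request is left out.

```python
import json
from typing import List


def build_failure_notification(
    branch: str, commit: str, stage: str, log_lines: List[str], tail: int = 5
) -> str:
    """Return a JSON body with a 'text' field summarising a red build."""
    shown = log_lines[-tail:]  # the end of the log usually holds the error
    excerpt = "\n".join(shown)
    text = (
        f"Build FAILED on {branch} at {commit[:7]}\n"
        f"Stage: {stage}\n"
        f"Last {len(shown)} log lines:\n{excerpt}"
    )
    return json.dumps({"text": text})
```

Naming the failing stage and quoting the last log lines means a developer can often start diagnosing the failure from the message itself, before opening the CI dashboard.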

This immediate, precise feedback is the core value proposition of CI. It allows developers to diagnose and fix regressions within minutes. To optimize this process, it's crucial to follow established CI/CD pipeline best practices. This rapid cycle ensures the main codebase remains stable and deployable.

Best Practices for Implementing Continuous Integration

Effective CI implementation hinges more on disciplined engineering practices than on specific tools. Adopting these core habits transforms your pipeline from a simple build automator into a powerful quality assurance and development velocity engine.

It begins with a single source repository. All assets required to build and deploy the project—source code, Dockerfiles, Jenkinsfiles, Terraform scripts, database migration scripts—must be stored and versioned in one Git repository. This practice eliminates ambiguity and ensures that any developer can check out a single repository and reproduce the entire build from a single, authoritative source.

Next, the build process must be fully automated. A developer should be able to trigger the entire build, test, and package sequence with a single command on their local machine (e.g., ./gradlew build). The CI server simply executes this same command. Any manual steps in the build process introduce inconsistency and are a primary source of "works on my machine" errors.

Make Every Build Self-Testing

A build artifact that has been compiled but not tested is an unknown quantity. It might be syntactically correct, but its functional behavior is unverified. For this reason, every automated build must be self-testing. This means embedding a comprehensive suite of automated tests directly into the build script.

A successful green build should be a strong signal of quality, certifying that the new code not only compiles but also functions as expected and does not introduce regressions. This test suite is the safety net that makes frequent integration safe.

Commit Frequently and Keep Builds Fast

To avoid "merge hell," developers must adopt the practice of committing small, atomic changes to the main branch at least daily. This ensures that the delta between a developer's local branch and the main branch is always small, making integrations low-risk and easy to debug.

This workflow is only sustainable if the feedback loop is fast. A build that takes an hour to run encourages developers to batch their commits, defeating the purpose of CI. The target for the entire pipeline execution time should be under 10 minutes. Achieving this requires careful optimization of test suites, parallelization of build jobs, and effective caching of dependencies and build layers. Explore established best practices for continuous integration to learn specific optimization techniques.
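One common way to make dependency caching effective is to derive the cache key from the contents of the dependency manifests, so the cache is reused until a manifest actually changes. The sketch below shows the idea under stated assumptions: `cache_key` is a hypothetical helper, and real CI platforms provide their own built-in mechanisms for computing such keys.

```python
import hashlib
from typing import Dict


def cache_key(prefix: str, manifest_contents: Dict[str, bytes]) -> str:
    """Derive a cache key that changes only when a dependency manifest changes."""
    digest = hashlib.sha256()
    for name in sorted(manifest_contents):  # sorted so the key is order-independent
        digest.update(name.encode("utf-8"))
        digest.update(b"\0")  # separator so adjacent fields cannot run together
        digest.update(manifest_contents[name])
        digest.update(b"\0")
    return f"{prefix}-{digest.hexdigest()[:16]}"
```

A pipeline would look up a stored dependency directory under this key and run a full install only on a miss. Commits that do not touch the manifests then skip the slowest part of the setup.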

A broken build on the main branch is a "stop-the-line" event. It becomes the team's highest priority. No new work should proceed until the build is fixed. This collective ownership prevents the accumulation of technical debt and ensures the codebase remains in a perpetually stable state.

As software architect Martin Fowler notes, the effort required for integration is non-linear. Merging a change set that is twice as large often requires significantly more than double the effort to resolve. Frequent, small integrations are the key to managing this complexity. You can dig deeper into his thoughts on the complexities of software integration and how CI provides a direct solution.

Choosing Your Continuous Integration Tools

Selecting the right CI tool is a critical architectural decision that directly impacts developer workflow and productivity. The market offers a wide range of solutions, from highly customizable self-hosted servers to managed SaaS platforms. The optimal choice depends on factors like team size, technology stack, security requirements, and operational capacity.

CI tools can be broadly categorized as self-hosted or Software-as-a-Service (SaaS). A self-hosted tool like Jenkins provides maximum control over the build environment, security policies, and network configuration. This control is essential for organizations with strict compliance needs but comes with the operational overhead of maintaining, scaling, and securing the CI server and its build agents.

In contrast, SaaS solutions like GitHub Actions or CircleCI abstract away the infrastructure management. Teams can define their pipelines and let the provider handle the provisioning, scaling, and maintenance of the underlying build runners.

Self-Hosted Power vs. SaaS Simplicity

A significant technical differentiator is the pipeline configuration method. Legacy CI tools often relied on web-based UIs for configuring build jobs. This "click-ops" approach is difficult to version, audit, or replicate, making it a brittle and opaque way to manage CI at scale.

Modern CI systems have standardized on "pipeline as code." This paradigm involves defining the entire build, test, and deployment workflow in a YAML or Groovy file (e.g., .gitlab-ci.yml, Jenkinsfile) that is stored and versioned alongside the application code in the Git repository. This makes the CI process transparent, version-controlled, and easily auditable.

The level of integration with the source code management (SCM) system is another critical factor. Platforms such as GitLab CI and GitHub Actions offer a seamless experience because the CI/CD components are tightly integrated with the SCM. This native integration simplifies setup, permission management, and webhook configuration, reducing the friction of getting started.

This integration advantage is a key driver of tool selection. A study on CI tool adoption trends revealed that the project migration rate between CI tools peaked at 12.6% in 2021, with many teams moving to platforms that offered a more integrated SCM and CI experience. This trend continues, with a current migration rate of approximately 8% per year, highlighting the ongoing search for more efficient, developer-friendly workflows.

Comparison of Popular Continuous Integration Tools

This table provides a technical comparison of the leading CI platforms, highlighting their core architectural and functional differences.

| Tool | Hosting Model | Configuration | Key Strength |
|---|---|---|---|
| Jenkins | Self-Hosted | UI or Jenkinsfile (Groovy) | Unmatched plugin ecosystem for ultimate flexibility and extensibility. Can integrate with virtually any tool or system. |
| GitHub Actions | SaaS | YAML | Deep, native integration with the GitHub ecosystem. A marketplace of reusable actions allows for composing complex workflows easily. |
| GitLab CI | SaaS or Self-Hosted | YAML | A single, unified DevOps platform that covers the entire software development lifecycle, from issue tracking and SCM to CI/CD and monitoring. |
| CircleCI | SaaS | YAML | High-performance build execution with advanced features like parallelism, test splitting, and sophisticated caching mechanisms for fast feedback. |

Ultimately, the "best" tool is context-dependent. A startup may benefit from the ease of use and generous free tier of GitHub Actions. A large enterprise with bespoke security needs may require the control and customizability of a self-hosted Jenkins instance. The objective is to select a tool that aligns with your team's technical requirements and operational philosophy.

How CI Powers the DevOps Lifecycle

Continuous Integration is not an isolated practice; it is the foundational component of a modern DevOps toolchain. It serves as the entry point to the entire software delivery pipeline. Without a reliable CI process, subsequent automation stages like Continuous Delivery and Continuous Deployment are built on an unstable foundation.

CI's role is to connect development with operations by providing a constant stream of validated, integrated software artifacts. It is the bridge between the "dev" and "ops" sides of the DevOps methodology.

It's crucial to understand the distinct roles CI and CD play in the automation spectrum.

Continuous Integration is the first automated stage. Its sole responsibility is to verify that code changes from multiple developers can be successfully merged, compiled, and tested. The output of a successful CI run is a versioned, tested build artifact (e.g., a Docker image, a JAR file) that is proven to be in a "good state."

From Integration to Delivery

Once CI produces a validated artifact, the Continuous Delivery/Deployment (CD) stages can proceed with confidence.

- Continuous Integration (CI): Automates the build and testing of code every time a change is pushed to the repository. The goal is to produce a build artifact that has passed all automated quality checks.

- Continuous Delivery (CD): This practice extends CI by automatically deploying every validated artifact from the CI stage to a staging or pre-production environment. The artifact is always in a deployable state, but the final promotion to the production environment requires a manual trigger (e.g., a button click). This allows for final manual checks like user acceptance testing (UAT).

- Continuous Deployment (CD): This is the ultimate level of automation. It extends Continuous Delivery by automatically deploying every change that passes all automated tests directly to the production environment without any human intervention. This enables a high-velocity release cadence where changes can reach users within minutes of being committed.
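The difference between the two CD variants comes down to a single decision: who promotes a validated artifact to production. The sketch below encodes that rule as a small function. The `Mode` enum and `should_deploy_to_production` are hypothetical names used only to illustrate the distinction.

```python
from enum import Enum


class Mode(Enum):
    CONTINUOUS_DELIVERY = "delivery"
    CONTINUOUS_DEPLOYMENT = "deployment"


def should_deploy_to_production(
    ci_passed: bool, mode: Mode, manually_approved: bool = False
) -> bool:
    """Decide whether an artifact is promoted to production."""
    if not ci_passed:
        return False  # a red build never leaves CI, whatever the mode
    if mode is Mode.CONTINUOUS_DEPLOYMENT:
        return True  # every green build ships without human intervention
    return manually_approved  # continuous delivery waits for a manual trigger
```

In both modes the CI result is checked first, which is why neither form of CD is reliable without a trustworthy CI stage in front of it.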

The progression is logical and sequential. You cannot have reliable Continuous Delivery without a robust CI process that filters out faulty code. CI acts as the critical quality gate, ensuring that only stable, well-tested code enters the deployment pipeline, making the entire software delivery process faster, safer, and more predictable.

Common Questions About Continuous Integration

As development teams adopt continuous integration, several technical and practical questions consistently arise. Clarifying these points is essential for a successful implementation.

So, What's the Real Difference Between CI and CD?

CI and CD are distinct but sequential stages in the software delivery pipeline.

Continuous Integration (CI) is the developer-facing practice focused on merging and testing code. Its primary function is to validate that new code integrates correctly with the existing codebase. The main output of CI is a proven, working build artifact. It answers the question: "Is the code healthy?"

Continuous Delivery/Deployment (CD) is the operations-facing practice focused on releasing that artifact.

- Continuous Delivery takes the artifact produced by CI and automatically deploys it to a staging environment. The code is always ready for release, but a human makes the final decision to deploy to production. It answers the question: "Is the code ready to be released?"

- Continuous Deployment automates the final step, pushing every passed build directly to production. It fully automates the release process.

In short: CI builds and tests the code; CD releases it.

How Small Should a Commit Actually Be?

There is no strict line count, but the guiding principle is atomicity. Each commit should represent a single, logical change. For example, "Fix bug #123" or "Add validation to the email field." A good commit is self-contained and has a clear, descriptive message explaining its purpose.

The technical goal is to create a clean, reversible history and simplify debugging. If a CI pipeline fails, an atomic commit allows a developer to immediately understand the scope of the change that caused the failure. When a commit contains multiple unrelated changes, pinpointing the root cause becomes significantly more difficult.

Committing large, multi-day work bundles in a single transaction is an anti-pattern that recreates the problems CI was designed to solve.

Can We Do CI Without Automated Tests?

Technically, you can set up a server that automatically compiles code on every commit. However, this is merely build automation, not Continuous Integration.

The core value of CI is the rapid feedback on code correctness provided by automated tests. A build that passes without tests only confirms that the code is syntactically valid (it compiles). It provides no assurance that the code functions as intended or that it hasn't introduced regressions in other parts of the system.

Implementing a CI pipeline without a comprehensive, automated test suite is not only missing the point but also creates a false sense of security, leading teams to believe their codebase is stable when it may be riddled with functional bugs.

At OpsMoon, we specialize in designing and implementing high-performance CI/CD pipelines that accelerate software delivery while improving code quality. Our DevOps experts can help you implement these technical best practices from the ground up.
