What Is DevOps Methodology: A Technical Guide

Learn what the DevOps methodology is, its core principles, and how it is implemented technically. Discover how to build a CI/CD pipeline and drive business results.

At its core, DevOps is a cultural and engineering discipline designed to unify software development (Dev) and IT operations (Ops). The primary objective is to radically shorten the software development lifecycle (SDLC) by implementing a highly automated, iterative process for building, testing, and releasing software. The outcome is faster, more reliable, and continuous delivery of high-quality applications.

Unpacking The DevOps Methodology

To fully grasp the DevOps methodology, one must first understand the limitations of traditional, siloed software delivery models. In legacy environments, development teams would write code and then "throw it over the wall" to a separate operations team responsible for deployment and maintenance. This handoff point was a primary source of friction, extended lead times, and a blame-oriented culture when incidents occurred.

DevOps systematically dismantles this wall by fostering a culture of shared responsibility. Developers, QA engineers, and operations specialists function as a single, cross-functional team, collaboratively owning the application lifecycle from conception to decommission.

Consider it analogous to a Formula 1 pit crew. Each member is a specialist, yet they operate as a cohesive unit with a singular objective: to service the car and return it to the track with maximum speed and safety.

From Silos to Synergy

This is not merely an organizational restructuring; it is a fundamental shift in mindset, reinforced by a suite of robust technical practices. Instead of individuals focusing on isolated segments of the pipeline, everyone owns the entire software delivery process. This collective ownership cultivates a proactive approach to problem-solving and quality engineering from the earliest stages of development.

For example, a developer's concern extends beyond writing functional code. They must also consider how that code will be containerized, deployed, monitored, and scaled in a production environment. Concurrently, operations engineers provide feedback early in the development cycle, contributing to the design of systems that are inherently more resilient, observable, and manageable.

The core idea behind DevOps is to create a collaborative culture that finally bridges the deep divide between development and operations. This synergy is what unlocks the speed, reliability, and continuous improvement that modern software delivery demands.

This collaborative culture is supercharged by pervasive automation. Repetitive, error-prone tasks such as code compilation, unit testing, and infrastructure provisioning are automated via CI/CD pipelines and Infrastructure as Code (IaC). This automation liberates engineers from manual toil, allowing them to focus on high-value activities like feature development and system architecture.

The technical and business objectives are explicit:

- Accelerated Time-to-Market: Reduce the lead time for changes, moving features from idea to production deployment rapidly.

- Improved Reliability: Decrease the change failure rate by integrating automated testing and quality gates throughout the pipeline.

- Enhanced Collaboration: Dismantle departmental barriers to create unified, cross-functional teams with shared goals.

- Greater Scalability: Engineer systems that can handle dynamic workloads through automated, on-demand infrastructure provisioning.

DevOps vs Traditional Waterfall Models

To put its technical advantages into perspective, let's contrast DevOps with the rigid, sequential Waterfall model.

| Attribute | DevOps Methodology | Traditional (Waterfall) Model |
|---|---|---|
| Team Structure | Cross-functional, integrated teams (Dev + Ops) | Siloed, separate departments |
| Responsibility | Shared ownership across the entire lifecycle | Handoffs between teams; "not my problem" |
| Release Cycle | Short, frequent, and continuous releases | Long, infrequent, and monolithic releases |
| Feedback Loop | Continuous and immediate | Delayed until the end of a phase |
| Risk Management | Small, incremental changes reduce deployment risk | Large, high-risk "big bang" deployments |
| Automation | Heavily automated testing and deployment | Manual, error-prone processes |
| Core Focus | Speed, reliability, and continuous improvement | Upfront planning and sequential execution |

As the comparison illustrates, DevOps represents a paradigm shift in software engineering. By integrating culture, process, and tools, it establishes a powerful feedback loop. This loop enables teams to release software not just faster, but with demonstrably higher quality and stability, which directly translates to improved business performance and customer satisfaction.

To truly understand what DevOps is, it's essential to examine its origins. It wasn't conceived in a boardroom but emerged from the shared technical frustrations of developers and operations engineers struggling with inefficient software delivery models.

For years, the dominant paradigm was the Waterfall model, a rigid, linear process where each phase must be fully completed before the next begins. This sequential flow created deep organizational silos and significant bottlenecks.

This structure meant developers might spend months writing code for a feature, only to hand the finished artifact "over the wall" to an operations team that had no involvement in its design. This fundamental disconnect was a formula for disaster, resulting in slow deployments, buggy releases, and a counterproductive culture of finger-pointing during production incidents.

The Rise of Agile and a Brand-New Bottleneck

The Agile movement emerged as a necessary antidote to Waterfall's inflexibility, championing iterative development and close collaboration during the software creation process. Agile was transformative for development teams, enabling them to build better software, faster. However, it addressed only one side of the equation.

While development teams adopted rapid, iterative cycles, operations teams were often still constrained by legacy, manual deployment practices. This created a new point of friction: a high-velocity development process colliding with a slow, risk-averse operations gatekeeper. The inherent conflict between "move fast" and "maintain stability" intensified.

It became clear that a more holistic approach was needed—one that extended Agile principles across the entire delivery lifecycle, from a developer's commit to a live customer deployment.

This critical need for a holistic solution—one that could marry development speed with operational stability—is what set the stage for DevOps. It grew from a simple desire to get teams on the same page, automate the grunt work, and treat the whole process of delivering software as one unified system.

The term "DevOps" gained traction around 2009, coined by Patrick Debois who organized the first DevOpsDays event. This conference was a landmark moment, bringing developers and operations professionals together to address the silo problem directly.

From 2010 to 2014, the movement grew rapidly, fueled by the rise of cloud computing and a new generation of automation tools. Foundational practices like Continuous Integration (CI) and Continuous Delivery (CD) became the technical bedrock for enabling faster, more reliable releases. By 2015, DevOps had transitioned from a niche concept to a mainstream strategy, with technologies like Git, Docker, and Kubernetes forming the core of the modern toolchain.

From A Niche Idea To A Mainstream Strategy

This evolution was not just about adopting new tools but represented a profound cultural and technical shift. The emergence of cloud computing provided the ideal environment for DevOps to flourish, offering on-demand, programmable infrastructure that could be managed as code—a practice now known as Infrastructure as Code (IaC).

This powerful combination of culture, process, and technology enables organizations to move away from slow, high-risk release cycles and toward a state of continuous delivery. Understanding the history of DevOps is key to appreciating why it is not merely a buzzword, but an essential engineering strategy for any organization needing to deliver software with speed, quality, and reliability.

Core Technical Principles And Cultural Pillars

To fully implement DevOps, it's necessary to move beyond organizational charts and embed its core principles into daily work. DevOps is a potent combination of cultural transformation and disciplined technical practices.

When integrated, these elements create a high-velocity engineering environment capable of shipping quality software rapidly. These foundations are not optional; they are the engine of a high-performing DevOps organization. The process begins with establishing the right culture, which then enables the technical implementation.

The Cultural Pillars Of DevOps

Before a single tool is configured, the culture must be established. This is the true bedrock of DevOps, transforming siloed specialists into a cohesive, high-performance team.

- Intense Collaboration: This goes beyond simple communication. It means development, operations, and security teams are embedded together, co-owning problems and solutions throughout the entire application lifecycle.

- Shared Ownership: The "not my problem" mindset is eliminated. Every team member—from the developer writing the initial code to the Site Reliability Engineer (SRE) monitoring it in production—is collectively accountable for the software's performance and stability.

- Blameless Post-mortems: When an incident occurs, the objective is never to assign blame. Instead, the team conducts a systematic root cause analysis to identify failures in the system, process, or technology. The focus is on implementing corrective actions to prevent recurrence.

This cultural evolution is ongoing. DevOps has expanded to integrate adjacent disciplines like security (DevSecOps) and data management (DataOps), prompting many organizations to re-evaluate traditional IT structures and create blended roles for greater efficiency. A DevOps maturity assessment can be an effective tool for benchmarking your current state and planning future improvements.

Key Technical Principles In Action

With a collaborative culture in place, you can implement the technical principles that introduce automation, consistency, and repeatability into your software delivery process. These are not buzzwords but concrete engineering disciplines with specific technical goals.

Infrastructure As Code (IaC)

Infrastructure as Code (IaC) is the practice of managing and provisioning IT infrastructure through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools. This means treating your servers, load balancers, databases, and network configurations as version-controlled software artifacts.

Tools like Terraform or Pulumi allow you to define your cloud architecture in declarative configuration files. This infrastructure code can then be versioned in Git, peer-reviewed, and tested, bringing unprecedented consistency and auditability to your environments.

Technical Example: A developer needs a new staging environment. Instead of filing a ticket and waiting for manual provisioning, they execute a single command: `terraform apply -var-file=staging.tfvars`. Terraform reads the declarative configuration and provisions the required virtual machines, configures the network security groups, and sets up a database instance. The result is an automated, repeatable replica of the production environment.
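The declarative idea behind IaC tools can be illustrated with a minimal sketch: compare the desired state against the actual state and derive the changes needed. This is not how Terraform is implemented; the resource names, fields, and the `plan` and `apply` helpers below are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    action: str  # "create", "update", or "delete"
    name: str    # resource name


def plan(desired: dict, actual: dict) -> list:
    """List the changes needed to move `actual` to `desired`."""
    changes = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            changes.append(Change("create", name))
        elif actual[name] != spec:
            changes.append(Change("update", name))
    for name in sorted(actual):
        if name not in desired:
            changes.append(Change("delete", name))
    return changes


def apply(desired: dict, actual: dict) -> dict:
    """Return the state after applying the plan (in memory only)."""
    return {name: dict(spec) for name, spec in desired.items()}


# Hypothetical staging definition; names and fields are illustrative.
desired_staging = {
    "web-vm": {"type": "vm", "size": "small", "count": 2},
    "staging-db": {"type": "database", "engine": "postgres"},
    "web-sg": {"type": "security_group", "ingress": [443]},
}
current_staging = {
    "web-vm": {"type": "vm", "size": "small", "count": 1},
    "old-cache": {"type": "cache"},
}

staging_plan = plan(desired_staging, current_staging)
for change in staging_plan:
    print(f"{change.action}: {change.name}")
```

Running `plan` again after `apply` yields an empty list, which is the property that makes declarative provisioning safe to repeat.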

Continuous Integration And Continuous Delivery (CI/CD)

The CI/CD pipeline is the automated workflow that serves as the backbone of DevOps. It is a sequence of automated steps that shepherd code from a developer's commit to production deployment with minimal human intervention.

- Continuous Integration (CI): The practice where developers frequently merge their code changes into a central repository (e.g., a Git `main` branch). Each merge automatically triggers a build and a suite of automated tests (unit, integration, etc.).

- Continuous Delivery (CD): An extension of CI where every code change that successfully passes all automated tests is automatically deployed to a testing or staging environment. The final deployment to production is often gated by a manual approval.

Technical Example: A developer pushes a new feature branch to a Git repository. A CI tool like GitLab CI immediately triggers a pipeline. The pipeline first builds the application into a Docker container. Next, it runs a series of tests against that container. If any test fails, the pipeline halts and sends an immediate failure notification to the developer via Slack, preventing defective code from progressing.
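The fail-fast behavior described above can be sketched in a few lines. This is a simplified model, not GitLab CI itself: the stage names, the `run_pipeline` function, and the `notify` callback (standing in for a Slack notification) are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], notify: Callable[[str], None]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that were actually executed.
    """
    executed = []
    for name, step in stages:
        executed.append(name)
        try:
            passed = step()
        except Exception as exc:  # a crashing stage counts as a failure
            passed = False
            notify(f"Stage '{name}' raised {type(exc).__name__}: {exc}")
        if not passed:
            notify(f"Pipeline halted at stage '{name}'")
            break
    return executed


# Hypothetical stages; real ones would build an image and run test suites.
messages: List[str] = []
stages: List[Stage] = [
    ("build", lambda: True),
    ("unit-tests", lambda: False),      # simulated failing test suite
    ("deploy-staging", lambda: True),   # never reached
]
ran = run_pipeline(stages, messages.append)
print(ran)       # ['build', 'unit-tests']
print(messages)  # ["Pipeline halted at stage 'unit-tests'"]
```

Because the loop breaks on the first failed stage, later stages such as deployment never see a build that did not pass its tests.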

The adoption of these principles shows just how fundamental DevOps has become. High-performing teams that embrace this methodology report efficiency gains of up to 50%, a clear sign of its impact. It also shows that DevOps isn't just about automation; it's about making the critical cultural shifts needed for modern IT to succeed.

Executing The DevOps Lifecycle Stages

The DevOps methodology is not a static philosophy; it is an active, cyclical process engineered for continuous improvement. This process, often visualized as an "infinite loop," moves an idea through development into production, where operational feedback immediately informs the next iteration.

Each stage is tightly integrated with the next, with automation serving as the connective tissue that ensures a seamless, high-velocity workflow. The objective is to transform a clumsy series of manual handoffs into a single, unified, and automated flow.

The Initial Spark: Planning and Coding

Every feature or bug fix begins with a plan. In a DevOps context, this means breaking down business requirements into small, actionable work items within an agile framework.

- Plan: Teams use project management tools like Jira or Azure Boards to define and prioritize work. Large epics are decomposed into smaller user stories and technical tasks, ensuring that business objectives are clearly understood before any code is written. This stage aligns developers, product owners, and stakeholders.

- Code: Developers pull a task from the backlog and write the necessary code. They use a distributed version control system, typically Git, to commit their changes to a feature branch in a shared repository hosted on a platform like GitHub or GitLab. This `git commit` and `git push` action is the catalyst that initiates the automated lifecycle.

The collaborative nature of DevOps is evident here. Code is often reviewed by peers through pull requests, where QA engineers and other developers provide feedback, ensuring quality and adherence to standards before the code is merged.

The Automation Engine: Build, Test, and Release

Once code is pushed to the repository, the CI/CD pipeline takes over. This is where the core automation of DevOps resides, transforming source code into a deployable artifact. Understanding what Continuous Integration and Continuous Delivery (CI/CD) entails is fundamental to implementing these automated workflows.

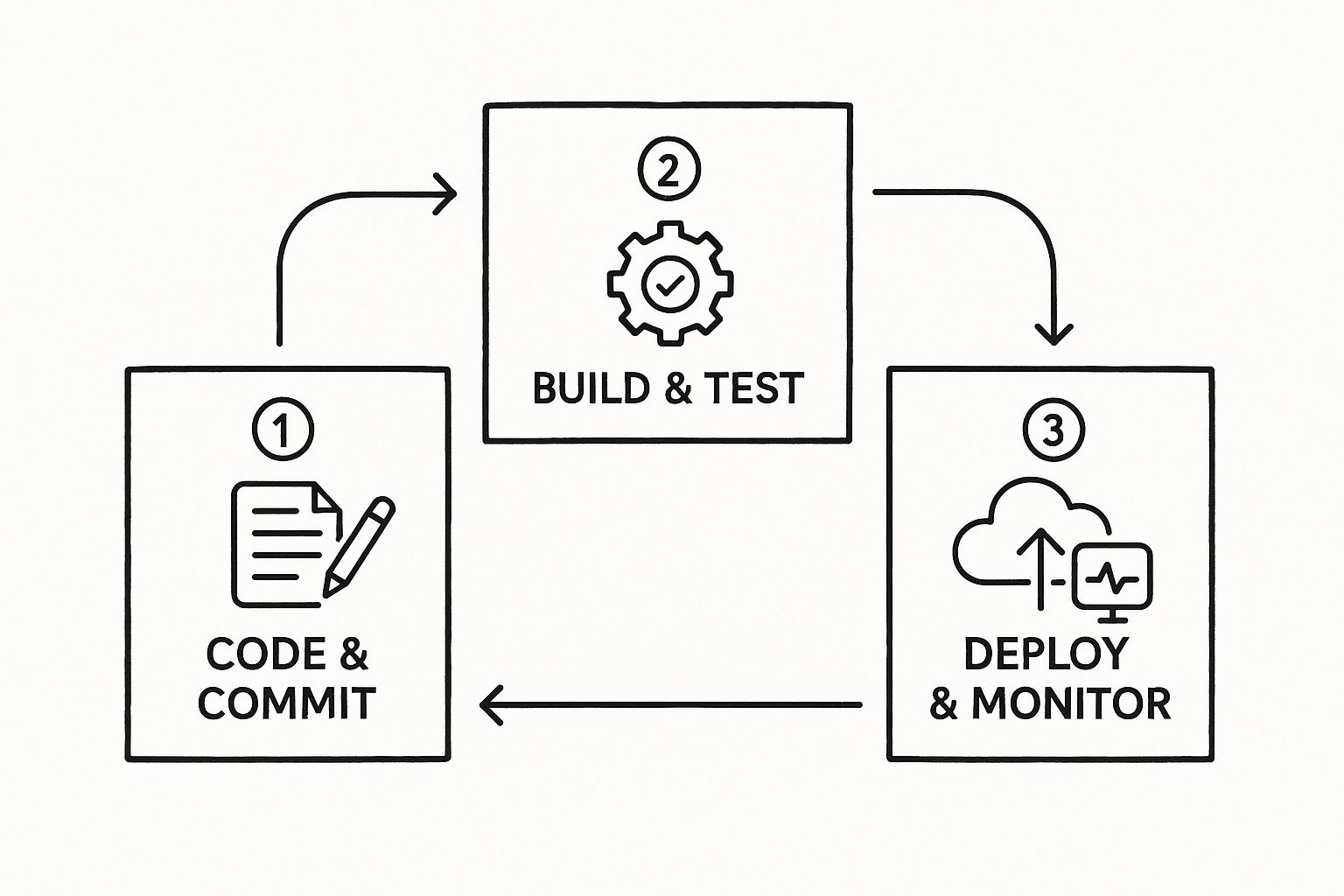

This infographic provides a high-level view of this automated, three-part flow that drives modern software delivery.

A code commit triggers a chain reaction of building, testing, and deployment actions, forming the continuous loop central to DevOps.

Let's break down these technical stages:

- Build: A CI server (like Jenkins or GitLab CI) detects the new commit and triggers a build job. Build tools like Maven (for Java) or npm (for Node.js) compile the source code, run linters, and package the application into a runnable artifact, such as a JAR file or, more commonly, a Docker image.

- Test: A successful build immediately initiates a series of automated test suites. This includes unit tests (Jest, JUnit), integration tests, and static code analysis (SonarQube). If any test fails, the pipeline stops, providing immediate feedback to the developer. This "fail-fast" approach prevents defects from propagating.

- Release: Once an artifact passes all automated tests, it is versioned and pushed to an artifact repository like Nexus or Artifactory. This creates an immutable, trusted artifact that is ready for deployment.
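One way to picture the "immutable, versioned artifact" from the Release stage is a tag derived from the artifact's content plus a write-once store. The sketch below is an in-memory stand-in, not the Nexus or Artifactory API; the tag format and the `ArtifactRepository` class are hypothetical.

```python
import hashlib


def artifact_tag(version: str, content: bytes) -> str:
    """Combine a human-readable version with a short content digest."""
    digest = hashlib.sha256(content).hexdigest()
    return f"{version}+sha256.{digest[:12]}"


class ArtifactRepository:
    """In-memory stand-in for an artifact repository with write-once tags."""

    def __init__(self) -> None:
        self._store = {}

    def publish(self, tag: str, content: bytes) -> None:
        # Refusing to overwrite is what makes a published tag trustworthy.
        if tag in self._store:
            raise ValueError(f"tag already published: {tag}")
        self._store[tag] = content

    def fetch(self, tag: str) -> bytes:
        return self._store[tag]


# Hypothetical build output; a real pipeline would read the built file.
build_output = b"compiled application bytes"
repo = ArtifactRepository()
tag = artifact_tag("1.4.2", build_output)
repo.publish(tag, build_output)
```

Publishing the same tag twice raises an error, so whatever is deployed under a given tag is exactly what passed the tests.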

Closing The Loop: Deploy, Operate, and Monitor

The final stages involve delivering the software to users and ensuring its operational health. This is where the "Ops" in DevOps becomes prominent, driven by the same principles of automation and collaboration.

Deployment is no longer a high-stakes, manual event but a repeatable, low-risk, automated process.

- Deploy: Using the tested artifact, an Infrastructure as Code tool like Terraform provisions or updates the target environment. Then, a configuration management tool like Ansible or a container orchestrator like Kubernetes deploys the new application version, often using strategies like blue-green or canary deployments to minimize downtime and limit the impact of a faulty release.

- Operate & Monitor: Once live, the application's health and performance are continuously monitored. Tools like Prometheus scrape metrics (CPU, memory, latency), log aggregators like Fluentd collect logs, and platforms like Grafana or Datadog visualize this data in real-time dashboards. Automated alerting notifies the team of anomalies or threshold breaches.
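The threshold-based alerting mentioned in the Operate & Monitor stage can be sketched as follows. This is a simplified illustration rather than Prometheus alerting rules; the metric names, thresholds, and sample values are made up for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    metric: str
    threshold: float


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def evaluate(samples, rules):
    """Return one alert message per rule whose threshold is exceeded."""
    alerts = []
    for rule in rules:
        value = samples.get(rule.metric)
        if value is not None and value > rule.threshold:
            alerts.append(f"{rule.metric}={value:g} exceeds {rule.threshold:g}")
    return alerts


# Hypothetical measurements from the last scrape window.
latencies_ms = [120, 95, 110, 480, 105, 98, 102, 130, 99, 101]
samples = {
    "latency_p95_ms": percentile(latencies_ms, 95),
    "error_rate": 3 / 200,  # 3 failed requests out of 200
}
rules = [Rule("latency_p95_ms", 300.0), Rule("error_rate", 0.05)]

alerts = evaluate(samples, rules)
print(alerts)  # ['latency_p95_ms=480 exceeds 300']
```

Here a single slow request pushes the 95th percentile over its limit, while the 1.5% error rate stays under its 5% threshold and raises no alert.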

This monitoring data is invaluable. It provides the quantitative feedback that flows directly back into the Plan phase, creating new tickets for performance improvements or bug fixes and completing the infinite loop. This cycle of continuous feedback and improvement is the engine that drives DevOps: delivering better software, faster and more reliably.

Building Your Essential DevOps Toolchain

While culture is the foundation of DevOps, a well-integrated toolchain is the technical engine that executes its principles. To achieve the goals of automation and velocity, you must assemble a set of tools that seamlessly cover every stage of the software delivery lifecycle.

This is not about collecting popular software; it is a strategic exercise in creating a cohesive, automated workflow. Each tool acts as a link in a chain, programmatically handing off its output to the next tool without requiring manual intervention. To make DevOps work, you need the right set of DevOps automation tools, each chosen for a specific job.

Core Components Of A Modern Toolchain

A robust DevOps toolchain is composed of specialized tools from different categories, integrated to form a single pipeline. A typical, highly effective toolchain includes the following components.

- Version Control: The single source of truth for all code and configuration. Git is the de facto standard, with platforms like GitLab or GitHub providing the centralized, collaborative repository.

- CI/CD Pipelines: The automation engine that orchestrates the build, test, and deployment workflow. GitLab CI, Jenkins, and GitHub Actions are leading choices for defining and executing these pipelines.

- Containerization: The technology for packaging an application and its dependencies into a lightweight, portable, and isolated unit. Docker is the industry standard for creating container images.

- Orchestration: The system for automating the deployment, scaling, and management of containerized applications. Kubernetes has become the dominant platform for container orchestration at scale.

- Configuration Management: The practice of ensuring that server and environment configurations are consistent and repeatable. Tools like Ansible use declarative or procedural definitions (playbooks) to automate infrastructure configuration and eliminate configuration drift.

- Monitoring & Observability: The tools required to understand the internal state of a system from its external outputs. A powerful open-source stack includes Prometheus for metrics collection, Grafana for visualization, and the ELK Stack (Elasticsearch, Logstash, Kibana) for log aggregation and analysis.

This diagram illustrates how these tools interoperate to create the continuous, automated flow that defines DevOps.

The toolchain functions as an infinite loop. Each tool completes its task and triggers the next, moving from planning and coding through to monitoring, with the data from monitoring providing feedback that initiates the next development cycle.

A Practical Toolchain In Action

Let’s trace a single code change through a technical toolchain to illustrate how these components are integrated.

- A developer finalizes a new feature and executes `git push` to send the code to a feature branch in a GitLab repository. This action triggers a webhook.

- GitLab CI receives the webhook and initiates the pipeline defined in a `.gitlab-ci.yml` file. It spins up a temporary runner environment.

- The first pipeline stage invokes Docker to build the application into a new, version-tagged container image based on a `Dockerfile` in the repository.

- Subsequent stages run automated tests against the newly built container. Upon successful test completion, the Docker image is pushed to a container registry (like GitLab's built-in registry or Docker Hub).

- The final pipeline stage executes an Ansible playbook. This playbook interfaces with the Kubernetes API server, instructing it to update the application's Deployment object with the new container image tag. Kubernetes then performs a rolling update, incrementally replacing old pods with new ones so that the service stays available throughout the rollout, provided the new pods pass their readiness checks.

- As soon as the new pods are live, a Prometheus instance, configured to scrape metrics from the application, begins collecting performance data. This data is visualized in Grafana dashboards, providing the team with immediate, real-time insight into the release's health (e.g., latency, error rates, resource consumption).

This entire sequence, from a `git push` command to a fully monitored production deployment, occurs automatically, often within minutes. This is the tangible result of a well-architected DevOps toolchain. The tight, API-driven integration between these tools is what enables the speed and reliability promised by the DevOps methodology.
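The rolling update in the deployment step can be modeled with a small simulation. This is a deliberate simplification: real Kubernetes rollouts are governed by the Deployment's `maxSurge` and `maxUnavailable` settings and by readiness probes, none of which are modeled here. The `rolling_update` function and version labels are hypothetical.

```python
def rolling_update(pods, new_version, max_unavailable=1):
    """Yield the pod list after each batch of pods has been replaced.

    At most `max_unavailable` pods are swapped per step, so the rest
    keep serving traffic while the batch restarts.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    current = list(pods)
    for start in range(0, len(current), max_unavailable):
        end = min(start + max_unavailable, len(current))
        for i in range(start, end):
            current[i] = new_version
        yield list(current)


# Four replicas running v1, replaced two at a time with v2.
steps = list(rolling_update(["v1", "v1", "v1", "v1"], "v2", max_unavailable=2))
for step in steps:
    print(step)
# ['v2', 'v2', 'v1', 'v1']
# ['v2', 'v2', 'v2', 'v2']
```

Smaller batches make the rollout slower but keep more capacity online at each step, which is the trade-off the `max_unavailable` parameter stands for in this sketch.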

Implementing such a workflow requires a deep understanding of pipeline architecture. For any team building or refining their automation, studying established CI/CD pipeline best practices is a critical step.

How DevOps Drives Measurable Business Value

While the technical achievements of DevOps are significant, its ultimate value is measured in tangible business outcomes. Adopting DevOps is not merely an IT initiative; it is a business strategy designed to directly impact revenue, profitability, and customer satisfaction.

This is the critical link for securing executive buy-in. When a CI/CD pipeline accelerates release frequency, it's not just a technical metric. It is a direct reduction in time-to-market, enabling the business to outpace competitors and capture market share.

Linking Technical Gains to Financial Performance

Every operational improvement achieved through DevOps has a corresponding business benefit. The pervasive automation and deep collaboration at its core are engineered to eliminate waste and enhance efficiency, with results that are clearly visible on a company's financial statements.

Consider the financial impact of downtime or a failed deployment. A high change failure rate is not just a technical problem; it erodes customer trust and can lead to direct revenue loss. DevOps directly mitigates these risks.

- Reduced Operational Costs: By automating infrastructure provisioning (IaC) and application deployments (CI/CD), you reduce the manual effort required from highly paid engineers. This frees them to focus on innovation and feature development rather than operational toil, leading to better resource allocation and a lower total cost of ownership (TCO).

- Increased Revenue and Profitability: Delivering features to market faster and more reliably creates new revenue opportunities. Concurrently, the enhanced stability and performance of the application improve customer loyalty and reduce churn, which directly protects existing revenue streams.

The data from industry reports like the DORA State of DevOps Report provides clear evidence. High-performing organizations that master DevOps practices achieve 46 times more frequent code deployments and recover from incidents 96 times faster than their lower-performing peers. These elite performers also report a 60% reduction in change failure rates and a 22% improvement in customer satisfaction.

Measuring What Matters Most

To demonstrate success, it is crucial to connect DevOps metrics to business objectives. While engineering teams track technical Key Performance Indicators (KPIs) like deployment frequency and lead time for changes, leadership needs to see the business impact. Frameworks like Objectives and Key Results (OKRs) provide a structured methodology for aligning engineering efforts with strategic company goals.

By focusing on metrics that matter to the business—like Mean Time to Recovery (MTTR) and customer retention—you can clearly demonstrate the immense value that the DevOps methodology provides. For instance, a lower MTTR doesn't just mean systems are back online faster; it means you're protecting revenue and brand reputation.
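As a rough illustration of how the metrics named above might be computed from deployment records, consider the sketch below. The `Deployment` record shape and the sample data are assumptions; real figures would come from your CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set once a failure is resolved


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def deployment_frequency(deploys, days):
    """Average number of deployments per day over the period."""
    return len(deploys) / days


def median_lead_time_hours(deploys):
    """Median time from commit to production deployment."""
    return median(hours(d.deployed_at - d.committed_at) for d in deploys)


def change_failure_rate(deploys):
    """Share of deployments that caused a failure in production."""
    return sum(d.failed for d in deploys) / len(deploys)


def mttr_hours(deploys):
    """Mean time from a failed deployment to restored service."""
    return mean(
        hours(d.restored_at - d.deployed_at)
        for d in deploys
        if d.failed and d.restored_at is not None
    )


# Hypothetical week of deployments.
t0 = datetime(2024, 1, 1, 9, 0)
day, hour = timedelta(days=1), timedelta(hours=1)
week = [
    Deployment(t0, t0 + 2 * hour),
    Deployment(t0 + day, t0 + day + 4 * hour, failed=True,
               restored_at=t0 + day + 4 * hour + timedelta(minutes=30)),
    Deployment(t0 + 2 * day, t0 + 2 * day + 1 * hour),
    Deployment(t0 + 3 * day, t0 + 3 * day + 3 * hour),
]

print(median_lead_time_hours(week))  # 2.5
print(change_failure_rate(week))     # 0.25
print(mttr_hours(week))              # 0.5
```

Tracking these numbers over time, rather than as one-off snapshots, is what lets a team show leadership whether delivery speed and stability are actually improving.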

Ultimately, DevOps drives business value by building a more resilient, agile, and efficient organization. It creates a powerful feedback loop where technical excellence leads to better business outcomes, which in turn justifies more investment in the people, processes, and tools that make it all possible.

This synergy is critical for any modern business. Integrating security early in this loop is a key part of it; you can dive deeper in our guide on DevOps security best practices at https://opsmoon.com/blog/devops-security-best-practices.

Got Questions About Implementing DevOps?

As you begin your DevOps implementation, certain technical and philosophical questions will inevitably arise. Let's address some of the most common ones with actionable, technical guidance.

What Is The Difference Between DevOps And Agile?

This is a critical distinction. While often used interchangeably, Agile and DevOps address different scopes of the software delivery process.

Agile is a project management methodology focused on the development phase. It organizes work into short, iterative cycles (sprints) to promote adaptive planning, evolutionary development, and rapid delivery of functional software. Its primary goal is to improve collaboration and efficiency between developers, testers, and product owners.

DevOps is a broader engineering and cultural methodology that encompasses the entire software delivery lifecycle. It extends Agile principles beyond development to include IT operations, security, and quality assurance. Its goal is to automate and integrate the processes between software development and IT teams so they can build, test, and release software faster and more reliably.

Here is a technical analogy:

- Agile optimizes the software factory—improving how developers build the car (the software) in collaborative, iterative sprints.

- DevOps builds and automates the entire supply chain, assembly line, and post-sale service network—from sourcing raw materials (planning) to delivering the car to the customer (deployment), monitoring its performance on the road (operations), and feeding that data back for future improvements.

In short, DevOps is not a replacement for Agile; it is a logical and necessary extension of it. You can't have a high-performing DevOps culture without a solid Agile foundation.

Is DevOps Just About Automation And Tools?

No. This is the most common and costly misconception. While tools and automation are highly visible components of DevOps, they are merely enablers of a deeper cultural shift.

At its core, DevOps is a cultural transformation centered on collaboration, shared ownership, and continuous improvement. Without that cultural shift, just buying a bunch of new tools is like buying a fancy oven when no one on the team knows how to bake. You'll just have expensive, unused equipment.

The tools exist to support and enforce the desired culture and processes. True DevOps success is achieved when teams adopt the philosophy first. A team that lacks psychological safety, operates in silos, and engages in blame will fail to achieve DevOps goals, no matter how sophisticated their GitHub Actions pipeline is.

How Can A Small Team Start Implementing DevOps?

You do not need a large budget or a dedicated "DevOps Team" to begin. In fact, a "big bang" approach is often counterproductive. The most effective strategy is to start small by identifying and automating your single most significant bottleneck.

Here is a practical, technical roadmap for a small team:

- Establish Git as the Single Source of Truth: This is the non-negotiable first step. All artifacts that define your system, including application code, infrastructure configuration (e.g., Terraform files), pipeline definitions (`.gitlab-ci.yml`), and documentation, must be stored and versioned in Git.

- Automate the Build and Unit Test Stage: Select a simple, integrated CI tool like GitLab CI or GitHub Actions. Your first objective is to create a pipeline that automatically triggers on every `git push`, compiles the application, and runs your existing unit tests. This establishes the initial feedback loop.

- Automate One Manual Deployment: Identify the most painful, repetitive manual process your team performs. Is it deploying to a staging server? Is it running database schema migrations? Isolate that one task and automate it with a script (e.g., a simple Bash script or an Ansible playbook) that can be triggered by your CI pipeline.

- Implement Basic Application Monitoring: You cannot improve what you cannot measure. Instrument your application with a library to expose basic health and performance metrics (e.g., using a Prometheus client library). Set up a simple dashboard to visualize response times and error rates. This initiates the critical feedback loop from operations back to development.
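To make the monitoring step more concrete, here is a minimal sketch of a counter rendered in the Prometheus text exposition format. It is a hand-rolled stand-in written for illustration, not the official Prometheus client library, which you would normally use instead; label-value escaping is omitted, and the metric name and labels are made up.

```python
class Counter:
    """A tiny labeled counter that renders Prometheus-style text output."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self._values = {}

    def inc(self, amount: float = 1.0, **labels: str) -> None:
        # Sort labels so the same label set always maps to the same key.
        key = tuple(sorted(labels.items()))
        self._values[key] = self._values.get(key, 0.0) + amount

    def render(self) -> str:
        lines = [
            f"# HELP {self.name} {self.help_text}",
            f"# TYPE {self.name} counter",
        ]
        for key, value in sorted(self._values.items()):
            label_str = ",".join(f'{k}="{v}"' for k, v in key)
            suffix = f"{{{label_str}}}" if label_str else ""
            lines.append(f"{self.name}{suffix} {value:g}")
        return "\n".join(lines) + "\n"


# Hypothetical request counter, incremented from the request handler.
requests_total = Counter("http_requests_total", "Total HTTP requests handled.")
requests_total.inc(status="200")
requests_total.inc(status="200")
requests_total.inc(status="500")

print(requests_total.render())
```

Serving this text from a `/metrics` endpoint is the usual way to let a Prometheus server scrape it, after which error rates can be derived from the `status` label.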

The goal is to generate momentum. Each small, iterative automation is a win. It reduces toil and demonstrates value, building the cultural and technical foundation for tackling the next bottleneck. It begins with a shared commitment, followed by a single, focused, and actionable step.
