A Technical Guide to Kubernetes CI/CD Pipelines

Build, deploy, and manage advanced Kubernetes CI/CD pipelines. This guide covers architectures, tools like Argo CD, and GitOps best practices for engineers.

In technical terms, Kubernetes CI/CD is the practice of leveraging a Kubernetes cluster as the execution environment for Continuous Integration and Continuous Delivery pipelines. This modern approach containerizes each stage of the CI/CD process—build, test, and deploy—into ephemeral pods. This contrasts sharply with legacy, VM-based CI/CD by utilizing Kubernetes' native orchestration for dynamic scaling, resource isolation, and high availability. For engineering leaders, this translates directly into faster, more reliable release cycles and empowers developers with a self-service, API-driven delivery model.

Why Kubernetes CI/CD Is the New Standard

In modern software delivery, speed and reliability are non-negotiable. Traditional CI/CD pipelines, often shackled to dedicated virtual machines, have become a notorious bottleneck. They are operationally rigid, difficult to scale horizontally, and require significant manual overhead for maintenance and dependency management—a monolithic architecture where a single point of failure can halt all development velocity.

Kubernetes completely inverts this model. It transforms the deployment environment from a fragile, imperative script-driven process into a declarative, self-healing ecosystem. Instead of providing a sequence of commands on how to deploy an application, you define its desired final state in a Kubernetes manifest (e.g., a Deployment.yaml file). The Kubernetes control plane then works relentlessly to converge the cluster's actual state with your declared state.
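As a minimal sketch of what "declaring the desired final state" looks like, the manifest below describes three replicas of a single container. The name my-app, the image reference, the port, and the /healthz path are hypothetical placeholders, not values from any real system.

```yaml
# Desired state only: no deployment steps are scripted here.
# The control plane reconciles the cluster toward this declaration.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                 # hypothetical application name
  labels:
    app: my-app
spec:
  replicas: 3                  # Kubernetes keeps three pods running
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.4.2   # hypothetical image
          ports:
            - containerPort: 8080
          readinessProbe:      # traffic is sent only once this check passes
            httpGet:
              path: /healthz   # assumed health endpoint
              port: 8080
            periodSeconds: 10
```

If a pod from this Deployment is deleted or its node fails, the controller creates a replacement to restore the declared replica count.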

This is the architectural equivalent of upgrading from a fixed assembly line to a distributed, intelligent robotics factory. The factory's control system understands the final product specification and autonomously orchestrates all necessary resources, tools, and self-correction routines to build it consistently, every time. This declarative control loop is the core technical advantage of a Kubernetes CI/CD pipeline. Before diving into pipeline specifics, a solid grasp of the underlying Kubernetes technology itself is foundational.

The Technical Drivers for Adoption

Several core technical advantages make Kubernetes the definitive platform for modern CI/CD:

- Declarative Infrastructure: The entire application environment, from Ingress rules and PersistentVolumeClaims to NetworkPolicies and Deployments, is defined as version-controlled code. This eliminates configuration drift and ensures every deployment is idempotent and auditable via Git history.

- Self-Healing and Resilience: Kubernetes' control plane continuously monitors the state of the cluster. It automatically restarts failed containers via kubelet, reschedules pods onto healthy nodes if a node fails, and uses readiness/liveness probes to manage application health, drastically reducing mean time to recovery (MTTR).

- Resource Efficiency and Scalability: CI/CD jobs run as pods, sharing the cluster's resource pool. The cluster autoscaler can provision or deprovision nodes based on pending pod requests, while the Horizontal Pod Autoscaler (HPA) can scale build agents or applications based on CPU/memory metrics. This model ends the financial waste of over-provisioned, static build servers.
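The autoscaling behavior described above can be sketched with a HorizontalPodAutoscaler. This example assumes a Deployment named my-app exists (a hypothetical name) and that a metrics source such as metrics-server is installed; the replica bounds and the 70% target are illustrative values only.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:              # the workload being scaled (hypothetical)
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # scale out above ~70% average CPU
```

The same pattern could target a pool of build agents instead of an application, provided the agents run under a scalable workload resource.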

This architectural shift has been decisive. Between 2020 and 2024, Kubernetes evolved from a niche option to the de facto standard for software delivery. CNCF survey data reports that 96% of organizations are using or evaluating Kubernetes, with the average organization running over 20 clusters. This operational scale has mandated the adoption of standardized, declarative CI/CD practices centered around powerful GitOps tools like Argo CD and Flux. This new paradigm is an essential component of effective cloud native application development.

Designing Your Kubernetes Pipeline Architecture

Architecting a Kubernetes CI/CD pipeline is a critical engineering decision. This choice dictates the security posture, scalability limits, and developer experience of your entire delivery platform. The decision is not merely about tool selection; it's about defining the control plane for how code moves from a git commit to a running application pod within your cluster.

Your architectural choice fundamentally boils down to two models: running the entire CI/CD workflow natively within the Kubernetes cluster or orchestrating it from an external SaaS platform via in-cluster agents.

Each approach has distinct technical trade-offs. The in-cluster model provides deep, native integration with the Kubernetes API server, enabling powerful, cluster-aware automations. Conversely, an external system often integrates more seamlessly with existing SCM platforms and developer workflows. Let's dissect the technical implementation of each to engineer an efficient delivery machine.

This map visualizes the core pillars of a solid Kubernetes CI/CD strategy, showing how it boosts speed, reliability, and scale.

As you can see, Kubernetes isn't just a bystander; it's the central control plane that makes faster deployments, more dependable applications, and massive operational scale possible.

In-Cluster Kubernetes Native Pipelines

This model treats the CI/CD pipeline as a first-class workload running natively inside Kubernetes. Your pipeline is a Kubernetes application. Tools designed for this paradigm, such as Tekton, use Custom Resource Definitions (CRDs) to define pipeline components—Tasks, Pipelines, and PipelineRuns—as native Kubernetes objects manageable via kubectl.

This architecture offers compelling technical advantages. Since the pipeline is Kubernetes-native, it can dynamically provision pods for each Task in a PipelineRun. This provides exceptional elasticity and isolation. When a job starts, a pod with the exact required CPU, memory, and ServiceAccount permissions is created. Upon completion, the pod is terminated, freeing up resources immediately and optimizing cost and cluster utilization.
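To make the Task and run objects concrete, here is a minimal sketch using the tekton.dev/v1 API. The task name, the golang image tag, the resource figures, and the ci-runner ServiceAccount are all assumptions chosen for illustration, and the script is a placeholder rather than a real test suite.

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: run-unit-tests          # hypothetical task name
spec:
  steps:
    - name: test
      image: golang:1.22        # assumed toolchain image
      computeResources:         # per-step CPU and memory for the pod
        requests:
          cpu: 500m
          memory: 512Mi
        limits:
          cpu: "1"
          memory: 1Gi
      script: |
        #!/bin/sh
        set -e
        # Placeholder command; a real task would check out and test code.
        go version
---
apiVersion: tekton.dev/v1
kind: TaskRun
metadata:
  generateName: run-unit-tests- # unique name per run; use "kubectl create"
spec:
  serviceAccountName: ci-runner # hypothetical ServiceAccount
  taskRef:
    name: run-unit-tests
```

Creating the TaskRun causes Tekton to schedule a pod for the Task; once the steps finish, the pod completes and its resources are released.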

This native approach means your pipeline automatically inherits core Kubernetes features like scheduling, resource management via ResourceQuotas, and high availability. It also simplifies security contexts, as NetworkPolicies and RBAC roles can be applied to pipeline pods just like any other workload.

For teams building a cloud-native platform from scratch, this model offers the tightest possible integration. The entire CI/CD system is managed declaratively through YAML manifests and kubectl, creating a consistent operational model with the rest of your applications.

External CI Systems with In-Cluster Runners

The second major architecture is a hybrid model. An external CI/CD platform—such as GitHub Actions, GitLab CI, or CircleCI—orchestrates the pipeline, but the actual compute happens inside your cluster. In this configuration, the external CI service delegates jobs to agents or runners deployed as pods within your cluster.

This is a prevalent architecture, especially for teams with existing investments in a specific CI/CD platform. The external tool manages the high-level workflow definition (e.g., .github/workflows/main.yml), handles triggers, and provides the user interface. The in-cluster runners execute the container-native tasks, like building Docker images with Kaniko or applying manifests with kubectl apply.

- GitHub Actions uses self-hosted runners, managed by the actions-runner-controller, which you deploy into your cluster. This controller listens for job requests from GitHub and creates ephemeral pods to execute them.

- GitLab CI provides a dedicated GitLab Runner that can be installed via a Helm chart. It can be configured to use the Kubernetes executor, which dynamically creates a new pod for each CI job.
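From the workflow author's side, routing a job to in-cluster runners is mostly a matter of the runs-on value. The sketch below assumes a runner scale set was installed under the name arc-runner-set; that name is hypothetical and must match whatever your own runner installation uses.

```yaml
# .github/workflows/main.yml
name: build
on:
  push:
    branches: [main]
jobs:
  build:
    # Assumption: "arc-runner-set" is the name of a runner scale set
    # deployed in your cluster; GitHub-hosted runners are not used.
    runs-on: arc-runner-set
    steps:
      - uses: actions/checkout@v4
      - name: Show where the job is running
        run: |
          echo "Running on $(hostname)"
          uname -a
```

The workflow definition, triggers, and logs stay in GitHub, while the steps themselves execute in a pod inside the cluster.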

This model creates a clean separation of concerns between the orchestration plane (the SaaS CI tool) and the execution plane (your Kubernetes cluster). It offers developers a familiar UI while leveraging Kubernetes for scalable, isolated build environments. The primary technical challenge is securely managing credentials (KUBECONFIG files, cloud provider keys) and network access between the external system and the in-cluster runners.

Regardless of the model, integrating the top CI/CD pipeline best practices is critical for building a robust and secure system.

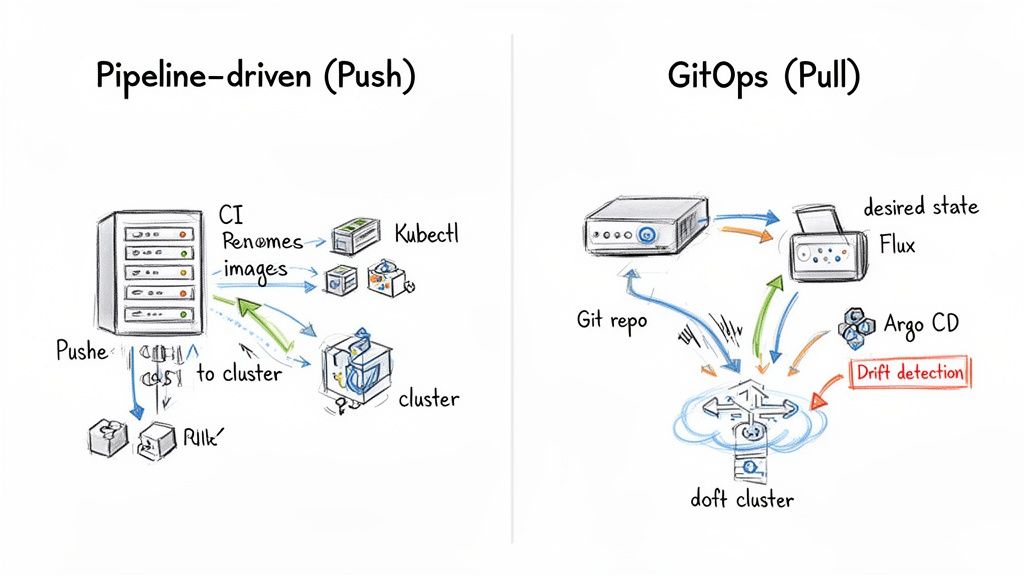

When architecting a Kubernetes CI/CD pipeline, the most fundamental decision is the deployment model: a pipeline-driven push model or a GitOps-based pull model. This is not just a tool choice; it's a philosophical decision between an imperative, command-based system and a declarative, reconciliation-based one.

This decision profoundly impacts your system's security posture, resilience to configuration drift, and operational complexity. The path you choose will directly determine development velocity, operational security, and the system's ability to scale without collapsing under its own weight.

The Traditional Push-Based Pipeline Model

The pipeline-driven approach is the classic, imperative model. A CI server, like Jenkins or GitLab CI, executes a sequence of scripted commands. A git merge to the main branch triggers a pipeline that builds a container image, pushes it to a registry, and then runs commands like kubectl apply -f deployment.yaml or helm upgrade --install to push the changes directly to the Kubernetes cluster.
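A push-based pipeline of this shape might look like the following GitLab CI sketch. It is illustrative only: the kubectl image reference and the my-app Deployment are hypothetical, registry authentication for Kaniko is omitted, and the deploy job assumes a KUBECONFIG file variable has been configured in the project's CI/CD settings. A kubectl apply -f deployment.yaml step would follow the same pattern.

```yaml
# .gitlab-ci.yml
stages:
  - build
  - deploy

variables:
  IMAGE: "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"

build-image:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  script:
    # Registry credentials for Kaniko are omitted for brevity.
    - /kaniko/executor
      --context "$CI_PROJECT_DIR"
      --dockerfile "$CI_PROJECT_DIR/Dockerfile"
      --destination "$IMAGE"

deploy:
  stage: deploy
  image:
    name: registry.example.com/tools/kubectl:1.30   # hypothetical kubectl image
    entrypoint: [""]
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
  script:
    # The CI job talks to the cluster API directly, using the
    # credentials in the assumed KUBECONFIG file variable.
    - kubectl set image deployment/my-app my-app="$IMAGE"
    - kubectl rollout status deployment/my-app --timeout=120s
```

Note that the deploy job is the component holding cluster credentials, which is the property the rest of this section examines.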

In this model, the CI tool is the central actor and holds highly privileged credentials—often a kubeconfig file with cluster-admin permissions—with direct API access to your clusters. While this setup is straightforward to implement initially, it creates a significant security vulnerability. The CI system becomes a single, high-value target; a compromise of the CI server means a compromise of all your production clusters.

This model is also highly susceptible to configuration drift. If an engineer applies a manual hotfix using kubectl patch deployment my-app --patch '...' to resolve an incident, the pipeline has no awareness of this change. The live state of the cluster now deviates from the configuration defined in Git, creating an inconsistent and unreliable environment.

The Modern Pull-Based GitOps Model

GitOps inverts the control flow entirely. Instead of an external CI pipeline pushing changes, an agent running inside the cluster continuously pulls the desired state from a Git repository. Tools like Argo CD or Flux are implemented as Kubernetes controllers that constantly monitor and reconcile the live state of the cluster with the declarative manifests in a designated Git repository.

This is a fully declarative workflow where the Git repository becomes the undisputed single source of truth for the system's state. To deploy a change, an engineer simply updates a YAML file (e.g., changing an image: tag), commits, and pushes to Git. The in-cluster GitOps agent detects the new commit, pulls the updated manifest, and uses the Kubernetes API to make the cluster's state converge with the new declaration.

With GitOps, the cluster effectively manages itself. The CI server's role is reduced to building and publishing container images to a registry. It no longer requires—and should never have—direct credentials to the Kubernetes API server. This drastically reduces the attack surface and enhances the security posture.

The pull model enables powerful capabilities. The GitOps agent can instantly detect configuration drift (e.g., a manual kubectl change) and either raise an alert or, more powerfully, automatically revert the unauthorized change, enforcing the state defined in Git. This self-healing property ensures environment consistency and complete auditability, as every change to the system is tied directly to a Git commit hash.
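The reconciliation and auto-revert behavior can be expressed in an Argo CD Application resource. In this sketch the repository URL, path, and namespaces are hypothetical; the syncPolicy fields are the part that matters.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-app
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/my-app-config.git  # hypothetical repo
    targetRevision: main
    path: overlays/production                                  # hypothetical path
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: my-app
  syncPolicy:
    automated:
      prune: true      # delete resources that were removed from Git
      selfHeal: true   # revert manual changes made directly in the cluster
    syncOptions:
      - CreateNamespace=true
```

With selfHeal disabled, Argo CD would still report the application as out of sync after a manual change, but it would leave the revert to an operator.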

The shift to GitOps is no longer a niche trend; it's becoming the standard for mature Kubernetes operations. Platform teams embracing this model report a 3.5× higher deployment frequency, cementing its place as the go-to for modern delivery. For more on this, check out the detailed platform engineering data on how GitOps is shaping the future of Kubernetes delivery on fairwinds.com.

To make the differences crystal clear, let's break down how these two models stack up against each other on the key technical points.

Pipeline-Driven CI/CD vs. GitOps: A Technical Comparison

| Aspect | Pipeline-Driven (e.g., Jenkins, GitLab CI) | GitOps (e.g., Argo CD, Flux) |
|---|---|---|
| Deployment Trigger | Push-based. CI pipeline is triggered by a Git commit and actively pushes changes to the cluster via kubectl or Helm commands. | Pull-based. An in-cluster agent detects a new commit in the Git repo and pulls the changes into the cluster. |
| Source of Truth | The pipeline script and its execution logs. The Git repo only holds the initial configuration. | The Git repository is the single source of truth for the desired state of the entire system. |
| Security Model | High risk. The CI system requires powerful, often cluster-admin level, credentials to the Kubernetes API. | Low risk. The CI system has no access to the cluster. The in-cluster agent has limited, pull-only permissions via a ServiceAccount. |
| Configuration Drift | Prone to drift. Manual kubectl changes go undetected, leading to inconsistencies between Git and the live state. | Actively prevents drift. The agent constantly reconciles the cluster state, automatically reverting or alerting on unauthorized changes. |
| Rollbacks | Manual/scripted. Requires re-running a previous pipeline job or manually executing kubectl apply with an older configuration. | Declarative and fast. Simply execute git revert <commit-hash>, and the agent automatically rolls the cluster back to the previous state. |
| Operational Model | Imperative. You define how to deploy with a sequence of steps (e.g., run script A, run script B). | Declarative. You define what the end state should look like in Git, and the agent's reconciliation loop figures out how to get there. |

Ultimately, while push-based pipelines are familiar, the GitOps model provides a more secure, reliable, and scalable foundation for managing Kubernetes applications. It brings the same rigor and auditability of Git that we use for application code directly to our infrastructure and operations.

A Technical Review of Kubernetes CI/CD Tools

Selecting the right tool for your Kubernetes CI/CD pipeline is a critical architectural decision. It directly influences your team's workflow, security posture, and release velocity. The ecosystem is dense, with each tool built around a distinct operational philosophy.

The tools generally fall into two categories: Kubernetes-native tools that operate as controllers inside the cluster and external platforms that integrate with the cluster via agents. Understanding the technical implementation of each is key. A native tool like Argo CD communicates directly with the Kubernetes API server using Custom Resource Definitions, while an external system like GitHub Actions requires a secure bridge (a runner) to execute commands within your cluster. Let's perform a technical breakdown of the major players.

Kubernetes-Native Tools: The In-Cluster Operators

These tools are designed specifically for Kubernetes. They run as controllers or operators inside the cluster and use Custom Resource Definitions (CRDs) to extend the Kubernetes API. This is architecturally significant because it allows you to manage CI/CD workflows using the same declarative kubectl and Git-based patterns used for standard resources like Deployments or Services.

- Argo CD & Argo Workflows: Argo CD is the dominant tool for GitOps-style continuous delivery. It operates as a controller that continuously reconciles the cluster's live state against declarative manifests in a Git repository. Its application-centric model and intuitive UI provide excellent visibility into deployment status, history, and configuration drift. Its companion project, Argo Workflows, is a powerful, Kubernetes-native workflow engine ideal for defining and executing complex CI jobs as a series of containerized steps within a DAG (Directed Acyclic Graph).

- Flux: As a CNCF graduated project, Flux is another cornerstone of the GitOps ecosystem, known for its minimalist, infrastructure-as-code philosophy. Unlike Argo CD's monolithic UI, Flux is a composable set of specialized controllers (the GitOps Toolkit) that you manage primarily through kubectl and YAML manifests. This makes it highly extensible and a preferred choice for platform teams building fully automated, API-driven delivery systems.

- Tekton: For teams wanting to build a CI/CD system entirely on Kubernetes, Tekton provides the low-level building blocks. It offers a set of powerful, flexible CRDs like Task (a sequence of containerized steps) and Pipeline (a graph of tasks) to define every aspect of a CI process. Since each Task runs in its own ephemeral pod, with each step as a container in that pod, Tekton provides strong isolation and scalability, making it an excellent foundation for secure, bespoke CI platforms that operate exclusively within the cluster boundary.
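As an illustration of Flux's composable controllers, the sketch below pairs a GitRepository (handled by the source controller) with a Kustomization (handled by the kustomize controller). The repository URL, path, and namespaces are hypothetical, and the intervals are arbitrary example values.

```yaml
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: my-app-config
  namespace: flux-system
spec:
  interval: 1m                  # how often to poll Git for new commits
  url: https://github.com/example-org/my-app-config   # hypothetical repo
  ref:
    branch: main
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: my-app
  namespace: flux-system
spec:
  interval: 10m                 # how often to re-reconcile the cluster
  sourceRef:
    kind: GitRepository
    name: my-app-config
  path: ./overlays/production   # hypothetical path inside the repo
  prune: true                   # remove resources deleted from Git
  targetNamespace: my-app
```

Because both objects are ordinary Kubernetes resources, they can themselves be stored in Git and inspected with kubectl like any other workload.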

External Integrators: The Hybrid Approach

These are established CI/CD platforms that have adapted to Kubernetes. They orchestrate pipelines externally but use agents or runners to execute jobs inside the cluster. This model is well-suited for organizations already standardized on platforms like GitHub or GitLab that want to leverage Kubernetes as a scalable and elastic backend for their build infrastructure.

- GitHub Actions: The default CI tool for the GitHub ecosystem, Actions uses self-hosted runners to connect to your cluster. You deploy a runner controller (e.g., actions-runner-controller), which then launches ephemeral pods to execute the steps defined in your .github/workflows YAML files. This provides a straightforward mechanism to bridge a git push event in your repository to command execution inside your private cluster network.

- GitLab CI: Similar to GitHub Actions, GitLab CI utilizes a GitLab Runner that can be installed into your cluster via a Helm chart. When configured with the Kubernetes executor, it dynamically provisions a new pod for each job, effectively turning Kubernetes into an elastic build farm. The tight integration with the GitLab SCM, container registry, and security scanning tools makes it a compelling all-in-one DevOps platform.

- Jenkins X: This is not your traditional Jenkins. Jenkins X is a complete, opinionated CI/CD solution built from the ground up for Kubernetes. It automates the setup of modern CI/CD practices like GitOps and preview environments, orchestrating powerful cloud-native tools like Tekton and Helm under the hood. It offers an accelerated path to a fully functional, Kubernetes-native CI/CD system.

For a broader market analysis, see our guide to the best CI/CD tools available today.

Kubernetes CI/CD Tool Feature Matrix

This matrix provides a technical comparison of the most popular tools for building CI/CD pipelines on Kubernetes, helping you map their core features to your team's specific requirements.

| Tool | Primary Model | Key Features | Best For |
|---|---|---|---|
| Argo CD | GitOps (Pull-based) | Application-centric UI, drift detection, multi-cluster management, declarative rollouts via Argo Rollouts. | Teams that need a user-friendly and powerful continuous delivery platform with strong visualization. |
| Flux | GitOps (Pull-based) | Composable toolkit (source, kustomize, helm controllers), command-line focused, strong automation. | Platform engineers building automated infrastructure-as-code delivery systems from Git. |
| Tekton | In-Cluster CI (Event-driven) | Kubernetes-native CRDs (Task, Pipeline), extreme flexibility, strong isolation and security context. | Building custom, secure, and highly scalable CI systems that run entirely inside Kubernetes. |
| GitHub Actions | External CI (Push-based) | Massive community marketplace, deep GitHub integration, self-hosted runners for Kubernetes. | Teams already using GitHub for source control who need a flexible and easy-to-integrate CI solution. |
| GitLab CI | External CI (Push-based) | All-in-one platform, integrated container registry, auto-scaling Kubernetes runners. | Organizations looking for a single, unified platform for the entire software development lifecycle. |
| Jenkins X | In-Cluster CI (Opinionated) | Automated GitOps setup, preview environments, integrates Tekton and other cloud-native tools. | Teams wanting a fast path to modern, Kubernetes-native CI/CD without building it all from scratch. |

The optimal choice depends on your team's existing toolchain, operational philosophy (GitOps vs. traditional CI), and whether you prefer an all-in-one platform or a more composable, build-it-yourself architecture.

Implementing Advanced Deployment Strategies

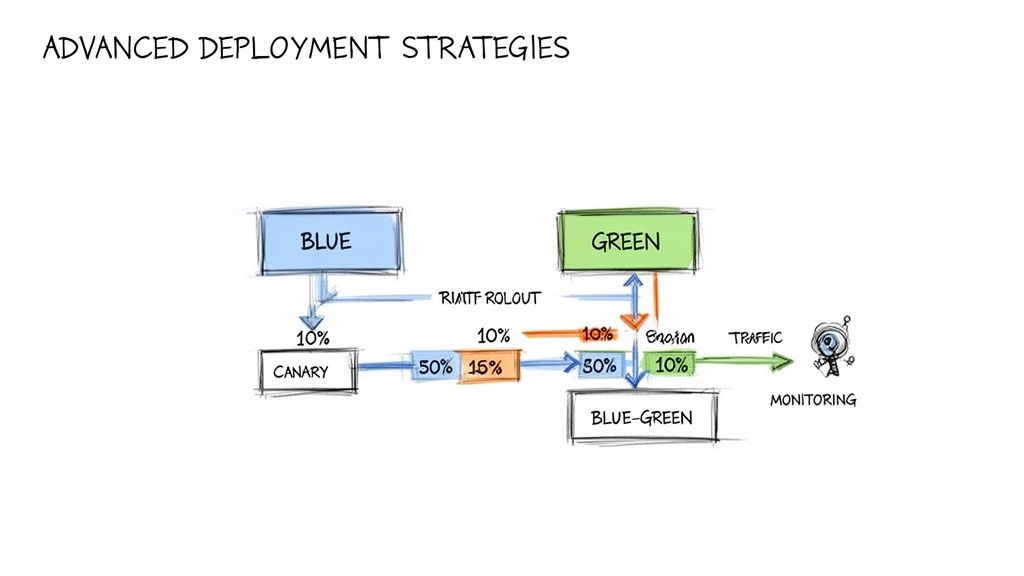

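With a functional Kubernetes CI/CD pipeline in place, the next step is to evolve beyond the default RollingUpdate strategy. A rolling update replaces pods incrementally, but it offers no fine-grained traffic control and no metric-based promotion gate, so a faulty release can still reach every user. The objective is to achieve zero-downtime releases with automated quality gates and rollback capabilities.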

This requires advanced deployment strategies built on intelligent traffic shaping, real-time performance analysis, and automated failure recovery. Kubernetes-native tools like Argo Rollouts and Flagger are controllers that extend Kubernetes with CRDs (Rollout and Canary, respectively) that replace or wrap the standard Deployment object to manage these more sophisticated release methodologies.

Blue-Green Deployments for Instant Rollbacks

A blue-green deployment minimizes risk by maintaining two identical production environments, designated "blue" (current version) and "green" (new version).

Initially, the Kubernetes Service selector points to the pods of the blue environment, which serves all live traffic. The CI/CD pipeline deploys the new application version to the green environment. Here, the new version can be comprehensively tested (e.g., via integration tests, smoke tests) against production infrastructure without affecting users.

Once the green environment is validated, the release is executed by updating the Service selector to point to the green pods. All user traffic is instantly routed to the new version.

The key benefit is near-instantaneous rollback. If post-release monitoring detects an issue, you can immediately revert by updating the Service selector back to the blue environment, which is still running the last known good version. This eliminates downtime associated with complex rollback procedures.
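In plain Kubernetes terms, the cutover and the rollback are both a one-line change to the Service selector. The sketch below assumes the two environments are Deployments whose pods carry a version label of blue or green; the label key, names, and ports are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
    version: blue     # change to "green" to cut over; back to "blue" to roll back
  ports:
    - port: 80
      targetPort: 8080
# An imperative equivalent of the cutover would be:
#   kubectl patch service my-app \
#     -p '{"spec":{"selector":{"app":"my-app","version":"green"}}}'
```

In a GitOps setup the same switch would be made by committing the selector change rather than patching the live object.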

Canary Releases for Gradual Exposure

A canary release is a more gradual and data-driven strategy. Instead of a binary traffic switch, the new version is exposed to a small subset of user traffic—for example, 5%. This initial user group acts as the "canary," providing early feedback on the new version's performance and stability in a real production environment.

Tools like Argo Rollouts or Flagger automate this process by integrating with a service mesh (like Istio, Linkerd) or an ingress controller (like NGINX, Traefik) to precisely control traffic splitting. They continuously query a metrics provider (like Prometheus) to analyze key Service Level Indicators (SLIs).

- Automated Analysis: The tool executes Prometheus queries (e.g., sum(rate(http_requests_total{status_code=~"^5.*"}[1m]))) to measure error rates and latency for the canary version.

- Progressive Delivery: If the SLIs remain within predefined thresholds, the tool automatically increases the traffic weight to the canary in stages (10%, 25%, 50%) until it handles 100% of traffic and is promoted to the stable version.

- Automated Rollback: If at any point an SLI threshold is breached (e.g., error rate exceeds 1%), the tool immediately aborts the rollout and shifts all traffic back to the stable version, preventing a widespread incident.
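The three behaviors above can be sketched with Argo Rollouts as a Rollout plus an AnalysisTemplate. Everything specific here is an assumption for illustration: the Prometheus address, the metric name and labels, the image, the 1% threshold, and the pause durations. The query also measures the whole my-app service rather than only canary pods, which a production setup would likely refine.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: error-rate
spec:
  metrics:
    - name: error-rate
      interval: 1m
      failureLimit: 1                      # abort after more than one failed measurement
      successCondition: result[0] < 0.01   # error ratio must stay under 1%
      provider:
        prometheus:
          address: http://prometheus.monitoring.svc:9090   # assumed address
          query: |
            sum(rate(http_requests_total{app="my-app",status_code=~"5.."}[1m]))
            /
            sum(rate(http_requests_total{app="my-app"}[1m]))
---
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: my-app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.5.0   # hypothetical image
  strategy:
    canary:
      analysis:                # background analysis during the rollout
        templates:
          - templateName: error-rate
        startingStep: 1        # begin once the first weight is set
      steps:
        - setWeight: 5
        - pause: {duration: 5m}
        - setWeight: 10
        - pause: {duration: 5m}
        - setWeight: 25
        - pause: {duration: 5m}
        - setWeight: 50
        - pause: {duration: 5m}
```

Without a trafficRouting section, the weights are approximated by the ratio of canary to stable pods, so exact percentages such as 5% generally require a service mesh or ingress integration.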

This methodology significantly limits the blast radius of a faulty release. A potential bug impacts only a small percentage of users, and the automated system can self-correct before it becomes a major outage.

Securing and Observing Your Pipeline

An advanced deployment strategy is incomplete without integrating security and observability directly into the Kubernetes CI/CD workflow—a practice known as DevSecOps.

For security, this involves adding automated gates at each stage:

- Image Scanning: Integrate tools like Trivy or Clair into the CI pipeline to scan container images for Common Vulnerabilities and Exposures (CVEs). A high-severity CVE should fail the build.

- Secrets Management: Never store plaintext secrets (API keys, database passwords) in Git. Use a dedicated secrets management solution such as HashiCorp Vault, which injects credentials into pods at runtime, or Sealed Secrets, which lets you commit only encrypted secrets that the cluster decrypts.

- Policy Enforcement: Use an admission controller like OPA Gatekeeper to enforce cluster-wide policies via ConstraintTemplates, such as blocking deployments from untrusted container registries or requiring specific pod security contexts.
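As a sketch of the registry policy mentioned in the last item, the ConstraintTemplate below defines a hypothetical K8sAllowedRepos kind, and the constraint that follows applies it to Pods. The template name, the Rego logic, and the registry prefix are illustrative assumptions; this version checks only regular containers, not init containers.

```yaml
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: k8sallowedrepos
spec:
  crd:
    spec:
      names:
        kind: K8sAllowedRepos
      validation:
        openAPIV3Schema:
          type: object
          properties:
            repos:
              type: array
              items:
                type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8sallowedrepos

        # Flag every container whose image matches no allowed prefix.
        violation[{"msg": msg}] {
          container := input.review.object.spec.containers[_]
          not allowed(container.image)
          msg := sprintf("image %v is not from an allowed registry", [container.image])
        }

        allowed(image) {
          startswith(image, input.parameters.repos[_])
        }
---
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sAllowedRepos
metadata:
  name: only-trusted-registries
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
  parameters:
    repos:
      - "registry.example.com/"   # hypothetical trusted registry prefix
```

Including the trailing slash in the prefix avoids accidentally matching a lookalike host such as registry.example.com.attacker.io.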

On the observability front, Kubernetes‑native CI/CD is becoming a critical financial and reliability lever. Mature platform teams are now defining Service Level Objectives (SLOs) and using real-time telemetry from their observability platform to programmatically gate or roll back deployments based on performance metrics.

However, a word of caution: analysts project that by 2026, around 70% of Kubernetes clusters could become "forgotten" cost centers if organizations fail to implement disciplined lifecycle management and observability within their CI/CD processes. You can explore more of these observability trends and their financial impact on usdsi.org.

Knowing When to Partner with a DevOps Expert

Building a production-grade Kubernetes CI/CD platform is a significant engineering challenge. While many teams can implement a basic pipeline, recognizing the need for expert guidance can prevent the accumulation of architectural technical debt. The decision to engage an expert is typically driven by specific technical inflection points that exceed an in-house team's experience.

Clear triggers often signal the need for external expertise. A common one is the migration of a complex monolithic application to a cloud-native architecture. This is far more than a "lift and shift"; it requires deep expertise in containerization patterns, the strangler fig pattern for service decomposition, and strategies for managing stateful applications in Kubernetes. Architectural missteps here can lead to severe performance, security, and cost issues.

Another sign is the transition to a sophisticated, multi-cloud GitOps strategy. Managing deployments and configuration consistently across AWS (EKS), GCP (GKE), and Azure (AKS) introduces significant complexity in identity federation (e.g., IAM roles for Service Accounts), multi-cluster networking, and maintaining a single source of truth without creating operational silos.

Assessing Your Team's DevOps Maturity

Attempting to scale a platform engineering function without sufficient senior talent can lead to stagnation. If your team lacks hands-on experience implementing advanced deployment strategies like automated canary analysis with a service mesh, or if they struggle to secure pipelines with tools like OPA Gatekeeper and Vault, this indicates a critical capability gap. Proceeding without this expertise often leads to brittle, insecure systems that are operationally expensive to maintain.

Use this technical checklist to assess your team's current maturity:

- Pipeline Automation: Is the entire workflow from git commit to production deployment fully automated, or do manual handoffs (e.g., for approvals, configuration changes) still exist?

- Security Integration: Are automated security gates (Static Application Security Testing (SAST), Dynamic Application Security Testing (DAST), image vulnerability scanning) integrated as blocking steps in every pipeline run?

- Observability: Can your team correlate a failed deployment directly to specific performance metrics (e.g., p99 latency, error rate SLOs) in your monitoring platform within minutes?

- Disaster Recovery: Do you have a documented and, critically, tested runbook for recovering your CI/CD platform and cluster state in a catastrophic failure scenario?

If you answered "no" to several of these questions, an expert partner could provide immediate value. Specialized expertise helps you bypass common architectural pitfalls that can take months or even years to refactor.

By strategically engaging expert help, you ensure your Kubernetes CI/CD strategy becomes a true business accelerator rather than an operational bottleneck. For teams seeking a clear architectural roadmap, a CI/CD consultant can provide the necessary strategy and execution horsepower.

Got questions about getting CI/CD right in Kubernetes? Let's tackle a few of the big ones we hear all the time.

Can I Still Use My Old Jenkins Setup for Kubernetes CI/CD?

Yes, but its architecture must be adapted for a cloud-native environment. Simply deploying a traditional Jenkins controller on a Kubernetes cluster is suboptimal as it doesn't leverage Kubernetes' strengths.

A more effective approach is the hybrid model: maintain the Jenkins controller externally but configure it to use the Kubernetes plugin. This allows Jenkins to dynamically provision ephemeral build agents as pods inside the cluster for each pipeline job. This gives you the familiar Jenkins UI and plugin ecosystem combined with the scalability and resource efficiency of Kubernetes. For a more modern, Kubernetes-native experience, consider migrating to Jenkins X.

What's the Real Difference Between Argo CD and Flux?

Both are leading CNCF GitOps tools, but they differ in philosophy and architecture.

Argo CD is an application-centric, all-in-one solution. It provides a powerful web UI that offers developers and operators a clear, visual representation of application state, deployment history, and configuration drift. It is often preferred by teams that prioritize ease of use and high-level visibility for application delivery.

Flux is a composable, infrastructure-focused toolkit. It is a collection of specialized controllers (the GitOps Toolkit) designed to be driven programmatically via kubectl and declarative YAML. It excels in highly automated, infrastructure-as-code environments and is favored by platform engineering teams building custom, API-driven automation.

How Should I Handle Secrets in a Kubernetes Pipeline?

Storing plaintext secrets in a Git repository is a critical security vulnerability. A dedicated secrets management solution is non-negotiable.

- HashiCorp Vault: This is the industry-standard external secrets manager. It provides a central, secure store for secrets and can dynamically inject them into pods at runtime using a sidecar injector or a CSI driver, ensuring credentials are never written to disk.

- Sealed Secrets: This is a Kubernetes-native solution. It consists of a controller running in the cluster and a CLI tool (kubeseal). Developers encrypt a standard Secret manifest into a SealedSecret CRD, which can be safely committed to a public Git repository. Only the in-cluster controller holds the private key required to decrypt it back into a native Secret.
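For the Sealed Secrets flow, the object that ends up in Git looks roughly like the sketch below. The secret name, namespace, and key are hypothetical, and the encrypted value is a placeholder because real ciphertext is produced by kubeseal against your own cluster's public key.

```yaml
# Produced by a command along the lines of:
#   kubectl create secret generic db-credentials \
#     --namespace my-app --from-literal=password='...' \
#     --dry-run=client -o yaml | kubeseal --format yaml > sealed-db-credentials.yaml
apiVersion: bitnami.com/v1alpha1
kind: SealedSecret
metadata:
  name: db-credentials
  namespace: my-app
spec:
  encryptedData:
    password: REPLACE_WITH_KUBESEAL_OUTPUT   # placeholder, not real ciphertext
  template:                                  # metadata for the resulting Secret
    metadata:
      name: db-credentials
      namespace: my-app
```

Once applied, the in-cluster controller decrypts the object and creates an ordinary Secret named db-credentials that pods can reference as usual.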

The fundamental principle is to keep plaintext secrets out of your application configuration repositories entirely. This separation dramatically reduces your attack surface: even if your Git repository is compromised, your most sensitive credentials remain protected. This practice is a cornerstone of any robust Kubernetes CI/CD security strategy.
