A Technical Guide to Modern Web Application Architecture

Learn to design scalable, secure systems with expert insights on patterns, layers, and best practices.

Web application architecture is the structural blueprint that defines how a system's components—the client-side (frontend), the server-side (backend), and the database—interact. This framework dictates the flow of data, the separation of concerns, and the operational characteristics of the application, such as its performance, scalability, and maintainability.

The Blueprint for Digital Experiences

A well-engineered architecture is a strategic asset. It directly impacts key non-functional requirements and determines whether an application can handle traffic surges, mitigate security vulnerabilities, and accommodate future feature development. A suboptimal architectural choice can result in a brittle, slow, and unmaintainable system, accumulating significant technical debt.

Core Engineering Goals

Every architectural decision involves trade-offs between competing technical goals. The objective is to design a system that optimally balances these priorities based on business requirements.

- Scalability: This refers to the system's ability to handle increased load. Horizontal scaling (scaling out) involves adding more machines to the resource pool, while vertical scaling (scaling up) means increasing the capacity (CPU, RAM) of existing machines. Modern architectures heavily favor horizontal scaling due to its elasticity and fault tolerance.

- Performance: Measured by latency and throughput, performance is the system's responsiveness under a specific workload. This involves optimizing everything from database query execution plans to client-side rendering times and network overhead.

- Security: This is the practice of designing and implementing controls across all layers of the application to protect data integrity, confidentiality, and availability. This includes secure coding practices, infrastructure hardening, and robust authentication/authorization mechanisms.

- Maintainability: This quality attribute measures the ease with which a system can be modified to fix bugs, add features, or refactor code. High maintainability is achieved through modularity, low coupling, high cohesion, and clear documentation.

Modern web applications increasingly leverage client-side processing to deliver highly interactive user experiences. Industry projections have suggested that by 2025 over 95% of new digital products would be cloud-native, and many of these products adopt patterns like Single-Page Applications (SPAs). The SPA style shifts significant rendering logic to the client's browser, which reduces server-side rendering work and lowers perceived latency by fetching data asynchronously via APIs instead of reloading whole pages. For a deeper dive, see this resource on the evolution of web development on GeeksforGeeks.org.

A superior architecture is one that defers critical and irreversible decisions. It maximizes optionality, allowing the system to adapt to new technologies and evolving business requirements without necessitating a complete rewrite.

The Fundamental Building Blocks

At a high level, nearly every web application is composed of three fundamental tiers. Understanding the specific function and technologies of each tier is essential for deconstructing and designing any web system.

Let's dissect these core components. The table below outlines each component, its technical function, and common implementation technologies.

Core Components of a Web Application

| Component | Primary Role | Key Technologies |
|---|---|---|
| Client (Presentation Tier) | Renders the UI, manages client-side state, and initiates HTTP requests to the server. | HTML, CSS, JavaScript (e.g., React, Vue, Angular) |
| Server (Application Tier) | Executes business logic, processes client requests, enforces security rules, and orchestrates data access. | Node.js, Python (Django, Flask), Java (Spring), Go |
| Database (Data Tier) | Provides persistent storage for application data, ensuring data integrity, consistency, and durability. | PostgreSQL, MySQL, MongoDB |

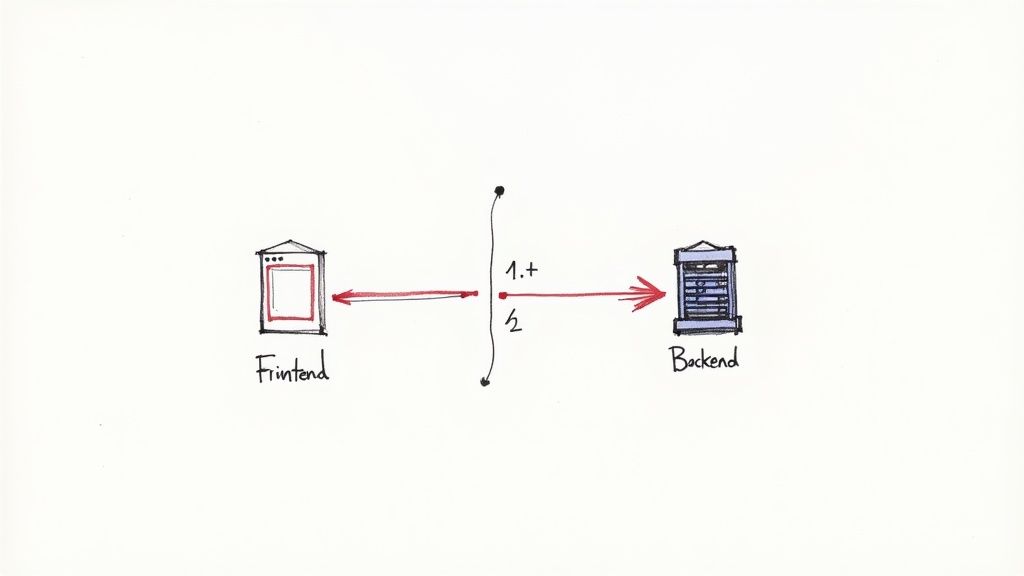

These tiers are in constant communication via a well-defined protocol, typically HTTP. A user action on the client triggers an asynchronous JavaScript call (e.g., using fetch or axios) to an API endpoint on the server. The server processes the request, which may involve executing business logic and performing CRUD (Create, Read, Update, Delete) operations on the database. It then sends a response, usually in JSON format, back to the client, which updates the UI accordingly. This request-response cycle is the fundamental operational loop of the web.
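
The request-response cycle described above can be sketched in a few lines. This is a minimal illustration rather than a production server: the `/tasks` route, the `tasks` table, and the `handle_request` function are hypothetical names, an in-memory SQLite database stands in for the data tier, and a direct function call stands in for the browser's `fetch` request.

```python
import json
import sqlite3

# Data tier: an in-memory SQLite database stands in for PostgreSQL or MySQL.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE tasks (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")


def handle_request(method, path, body=""):
    """Application tier: map an HTTP-style request to a CRUD operation.

    Returns a (status_code, json_body) pair. The /tasks route is hypothetical.
    """
    if path != "/tasks":
        return 404, json.dumps({"error": "not found"})
    if method == "POST":  # Create
        title = json.loads(body)["title"]
        cursor = db.execute("INSERT INTO tasks (title) VALUES (?)", (title,))
        db.commit()
        return 201, json.dumps({"id": cursor.lastrowid, "title": title})
    if method == "GET":  # Read
        rows = db.execute("SELECT id, title FROM tasks ORDER BY id").fetchall()
        return 200, json.dumps([{"id": r[0], "title": r[1]} for r in rows])
    return 405, json.dumps({"error": "method not allowed"})


# Client tier: a browser would issue these as asynchronous HTTP calls.
status, payload = handle_request("POST", "/tasks", json.dumps({"title": "Write docs"}))
status_get, listing = handle_request("GET", "/tasks")
```

The client only ever sees status codes and JSON, so the storage engine behind `handle_request` could change without the presentation tier noticing.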

The Evolution from Monoliths to Microservices

To comprehend modern architectural patterns, one must understand the engineering challenges they were designed to solve. The trajectory of web application architecture is a direct response to increasing demands for complexity, scale, and development velocity.

Initially, the web consisted of static HTML files served from a web server like Apache. A request for a URL mapped directly to a file on the server's disk. This model was simple and performant for static content but lacked the ability to generate dynamic, user-specific experiences.

The Rise of Dynamic Content and the Monolith

The mid-1990s saw the advent of server-side scripting languages like PHP, ColdFusion, and ASP. This enabled server-side rendering (SSR), where the server would execute code to query a database and dynamically generate an HTML page for each request. For over a decade, this was the dominant paradigm for web applications.

This capability led to the prevalence of the monolithic architecture. In this model, the entire application (presentation logic, business logic, and data access layer) is contained within a single, tightly coupled codebase. The entire application is developed, tested, deployed, and scaled as a single unit.

This approach offers initial simplicity, making it suitable for small projects and startups focused on rapid prototyping. However, as the application and the development team grow, this model's limitations become significant liabilities.

A monolith exhibits high internal coupling. A change in one module can have unforeseen ripple effects across the entire system, making maintenance and feature development increasingly risky and time-consuming as the codebase expands.

The Breaking Point and the Need for Change

For scaling organizations, large monolithic applications introduce severe engineering bottlenecks.

- Deployment Bottlenecks: A bug in a minor feature can block the release of the entire application. This leads to infrequent, high-risk "big bang" deployments and forces teams into rigid, coordinated release cycles.

- Scaling Challenges: If a single function, such as a payment processing module, experiences high traffic, the entire monolith must be scaled. This is resource-inefficient, as you replicate components that are not under load.

- Technology Lock-in: The entire application is built on a single technology stack. Adopting a new language or framework for a specific task that is better suited for it requires a massive, often infeasible, refactoring effort.

These challenges created a strong impetus for a new architectural paradigm that would allow for decoupling and independent evolution of system components. This push for agility, independent scalability, and technological heterogeneity led to the rise of distributed systems and, most notably, the microservices pattern. Exploring various microservices architecture design patterns is crucial for successfully implementing these complex systems.

Deconstructing the Modern Architectural Layers

To architect a robust system, we must dissect a modern web application into its logical layers. This layered approach enforces a "separation of concerns," a core principle of software engineering where each module addresses a distinct responsibility. When a user request is initiated, it propagates through these layers to deliver the final output.

This separation is the foundation of any maintainable and scalable web application architecture. Let's examine the three primary layers: presentation, business, and persistence.

The Presentation Layer (Client-Side)

This layer encompasses all code executed within the user's browser. Its primary function is to render the user interface and manage local user interactions. In modern applications, this is a sophisticated client-side application in its own right.

The core mechanism is the programmatic manipulation of the Document Object Model (DOM), a tree-like representation of the HTML document. Modern JavaScript frameworks like React, Vue, and Angular excel at this. They implement a declarative approach to UI development, managing the application's "state" (data that can change over time) and efficiently re-rendering the DOM only when the state changes. This is what enables fluid, desktop-like experiences.

This capability was unlocked by the standardization of DOM Level 1 in 1998, which provided a platform- and language-neutral interface for programs to dynamically access and update the content, structure, and style of documents. This paved the way for Asynchronous JavaScript and XML (AJAX), which lets web pages update content without a full page reload. You can find a detailed timeline in this full history of the web's evolution on matthewgerstman.com.

The Business Layer (Server-Side)

When the presentation layer needs to perform an action that requires authority or persistent data (e.g., processing a payment), it sends an API request to the business layer, or backend. This is the application's core, where proprietary business rules are encapsulated and executed.

This layer's responsibilities are critical:

- Processing Business Logic: Implementing the algorithms and rules that define the application's functionality, such as validating user permissions, calculating financial data, or processing an order workflow.

- Handling API Requests: Exposing a well-defined set of endpoints (APIs) that the client communicates with, typically using a RESTful or GraphQL interface over HTTP/S with JSON payloads.

- Coordinating with Other Services: Interacting with third-party services (e.g., a payment gateway like Stripe) or other internal microservices through their APIs.

The business layer is typically developed using languages and runtimes such as Node.js, Python, Go, or Java. It is often designed to be stateless, meaning it retains no client-specific session data between requests. This statelessness is a key enabler for horizontal scalability, as any server instance can process a request from any client.

The Persistence Layer (Data Storage)

The business layer requires a mechanism to store and retrieve data durably, which is the function of the persistence layer. This layer includes not just the database itself but all components involved in managing the application's long-term state.

The choice of database technology is a critical architectural decision with long-term consequences for performance, scalability, and data integrity.

The persistence layer is the authoritative system of record. Its design, including the data model and access patterns, directly dictates the application's performance characteristics and its ability to scale under load.

Selecting the right database for the job is paramount. A relational database like PostgreSQL enforces a strict schema and provides ACID (Atomicity, Consistency, Isolation, Durability) guarantees, making it ideal for transactional data. In contrast, a NoSQL document database like MongoDB offers a flexible schema, which is advantageous for storing unstructured or semi-structured data like user profiles or product catalogs.

Beyond the primary database, this layer typically includes:

- Caching Systems: An in-memory data store like Redis is used to cache frequently accessed data, such as query results or session information. This dramatically reduces latency and offloads read pressure from the primary database.

- Data Access Patterns: These define how the application queries and manipulates data. Using an Object-Relational Mapper (ORM) can abstract away raw SQL, but it's crucial to understand the queries it generates to avoid performance pitfalls like the N+1 problem, where one query fetches a list and one additional query is then issued for every item in it.
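
The N+1 problem is easier to recognize with a query counter in place. The sketch below uses Python's built-in `sqlite3` module and raw SQL rather than an ORM; the `authors` and `posts` tables and the `run` helper are hypothetical, chosen only to make the number of round trips visible.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
    INSERT INTO authors VALUES (1, 'Ada'), (2, 'Lin');
    INSERT INTO posts VALUES (1, 1, 'Intro'), (2, 1, 'Indexes'), (3, 2, 'Caching');
""")

query_count = 0


def run(sql, params=()):
    """Execute a query and count it, so round trips can be compared."""
    global query_count
    query_count += 1
    return db.execute(sql, params).fetchall()


def titles_n_plus_one():
    # One query for the authors, then one more query per author.
    result = {}
    for author_id, name in run("SELECT id, name FROM authors ORDER BY id"):
        rows = run(
            "SELECT title FROM posts WHERE author_id = ? ORDER BY id", (author_id,)
        )
        result[name] = [title for (title,) in rows]
    return result


def titles_single_join():
    # A single JOIN returns the same data in one round trip.
    result = {}
    rows = run(
        "SELECT a.name, p.title FROM authors a "
        "JOIN posts p ON p.author_id = a.id ORDER BY a.id, p.id"
    )
    for name, title in rows:
        result.setdefault(name, []).append(title)
    return result


query_count = 0
slow = titles_n_plus_one()
queries_n_plus_one = query_count  # 1 query for authors + 1 per author

query_count = 0
fast = titles_single_join()
queries_join = query_count
```

Both functions return the same mapping, but the first issues a number of queries that grows with the number of authors. Most ORMs offer an eager-loading option that produces something closer to the second form.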

Together, these three layers form a cohesive system. A user interaction on the presentation layer triggers a request to the business layer, which in turn interacts with the persistence layer to read or write data, ultimately returning a response that updates the user's view.

Comparing Core Architectural Patterns

Selecting an architectural pattern is a foundational engineering decision that profoundly impacts development velocity, operational complexity, cost, and team structure. Each pattern represents a distinct philosophy on how to organize and deploy code, with a unique set of trade-offs.

A pragmatic architectural choice requires a deep understanding of the practical implications of the most common patterns: the traditional Monolith, the distributed Microservices model, and the event-driven Serverless approach.

The Monolithic Approach

A monolithic architecture structures an application as a single, indivisible unit. All code for the user interface, business logic, and data access is contained within one codebase, deployed as a single artifact.

For a standard e-commerce application, this means the modules for user authentication, product catalog management, and order processing are all tightly coupled within the same process. Its primary advantage is simplicity, particularly in the initial stages of a project.

- Unified Deployment: A new release is straightforward; the entire application artifact is deployed at once.

- Simplified Development: In early stages, end-to-end testing and debugging can be simpler as there are no network boundaries between components.

- Lower Initial Overhead: There is no need to manage a complex distributed system, reducing the initial operational burden.

However, this simplicity erodes as the application scales. A change in one module requires re-testing and re-deploying the entire system, increasing risk and creating a development bottleneck. Scaling is also inefficient; if only one module is under heavy load, the entire application must be scaled, leading to wasted resources.

The Microservices Approach

Microservices architecture decomposes a large application into a suite of small, independent services. Each service is organized around a specific business capability—such as an authentication service, a product catalog service, or a payment service—and runs in its own process.

These services communicate with each other over a network using lightweight protocols, typically HTTP-based APIs. This pattern directly addresses the shortcomings of the monolith. The payment service can be updated and deployed without affecting the user service. Crucially, each service can be scaled independently based on its specific resource needs, enabling fine-grained, cost-effective scaling.

Key Insight: Microservices trade upfront architectural simplicity for long-term scalability and development agility. The initial operational complexity is higher, but this is offset by gains in team autonomy, fault isolation, and deployment flexibility for large-scale applications.

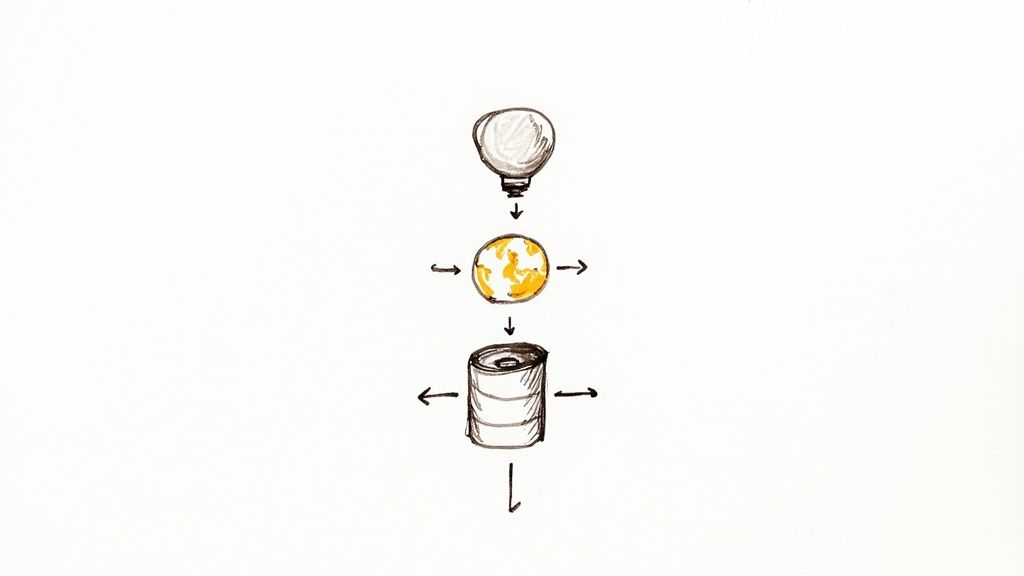

The Serverless Approach

Serverless architecture, most commonly realized as Function-as-a-Service (FaaS), represents a higher level of abstraction. Instead of managing servers or containers, you deploy code in the form of stateless functions that are triggered by events. These events can be HTTP requests, messages on a queue, or file uploads to a storage bucket.

The cloud provider dynamically provisions and manages the infrastructure required to execute the function. You are billed only for the compute time consumed during execution, often with millisecond precision. This pay-per-use model can be extremely cost-effective for applications with intermittent or unpredictable traffic. The trade-offs include potential vendor lock-in and increased complexity in local testing and debugging.
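
As a rough illustration of what an event-triggered function looks like, the sketch below follows the `(event, context)` handler convention used by some FaaS platforms. The shape of the `event` dictionary (a JSON string under a `body` key) and the response fields are assumptions for this example; real providers each define their own event formats.

```python
import json


def handler(event, context=None):
    """Stateless function: everything it needs arrives in the event.

    The event layout here is an assumption modeled on HTTP-triggered functions.
    """
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation: the platform would normally construct the event itself.
response = handler({"body": json.dumps({"name": "Ada"})})
```

Because the function keeps no state between invocations, the platform is free to run zero, one, or many copies of it depending on incoming events.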

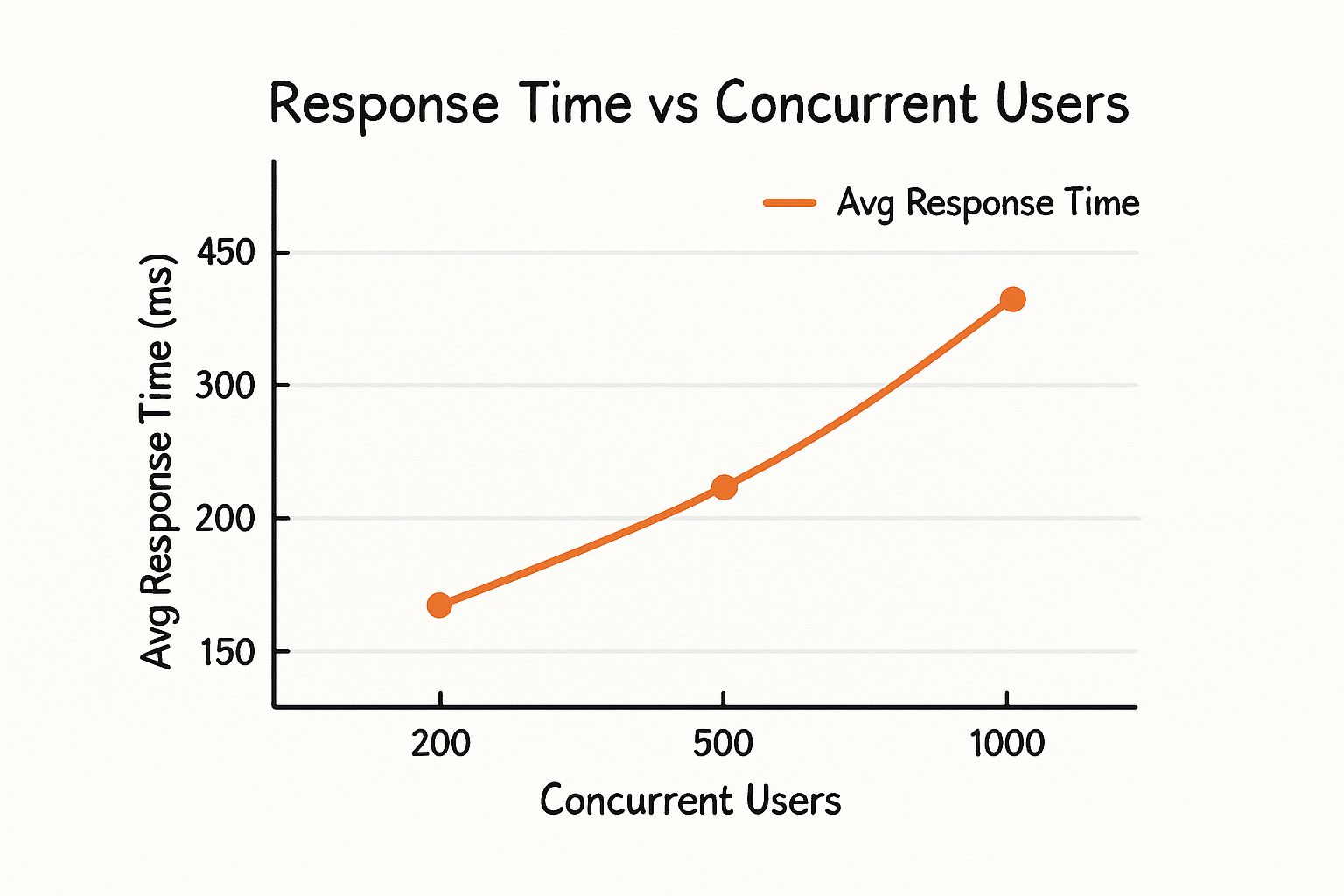

This infographic illustrates how response times can degrade as concurrent load increases—a critical factor in architectural selection.

As shown, an architecture that is not designed for horizontal scaling will see response times climb sharply as load approaches its capacity, leading to poor user experience and potential system failure.

A Head-to-Head Comparison

The choice between these patterns is not about finding the "best" one but about aligning the pattern's characteristics with your project's technical and business requirements. The following table provides a comparative analysis.

Comparison of Architectural Patterns

| Attribute | Monolith | Microservices | Serverless |
|---|---|---|---|
| Deployment | Simple; single unit | Complex; independent services | Simple; function-based |
| Scalability | Low; all or nothing | High; granular | High; automatic |
| Dev Velocity | Fast initially, slows over time | Slower initially, fast at scale | Fast for small functions |
| Operational Cost | High fixed cost at scale | High initial, efficient later | Pay-per-use; can be low |
| Fault Isolation | Poor; one failure can crash all | Excellent; contained failures | Excellent; isolated functions |

This table provides a high-level summary. The final decision must consider your team's expertise, business goals, and the application's projected growth trajectory.

Automating the deployment pipeline is critical for all these architectures. For technical guidance, refer to our guide on CI/CD pipeline best practices. To further explore design principles, this article on 10 Essential Software Architecture Patterns is an excellent resource.

Actionable Best Practices for Modern System Design

A sound architectural blueprint is necessary but not sufficient. Its successful implementation depends on adhering to proven engineering principles that ensure resilience, security, and performance. This section provides a practical checklist for translating architectural diagrams into robust, production-ready systems.

Design for Failure

In any distributed system, component failures are inevitable. A robust web application architecture anticipates and gracefully handles these failures. The objective is to build a self-healing system where the failure of a non-critical component does not cause a cascading failure of the entire application.

Implement patterns like the Circuit Breaker, which monitors for failures. When the number of failures exceeds a threshold, the circuit breaker trips, stopping further calls to the failing service and preventing it from being overwhelmed. This allows the failing service time to recover. Also, implement retries with exponential backoff for transient network issues, where the delay between retries increases exponentially to avoid overwhelming a struggling service.
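
A minimal sketch of both ideas follows. The `CircuitBreaker` class, its parameter names, and the `backoff_delays` helper are hypothetical and simplified for illustration (for example, the breaker is not thread-safe and the backoff has no jitter); mature resilience libraries handle considerably more edge cases.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock          # injectable so the timeout can be tested
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def backoff_delays(retries, base=0.5, factor=2.0, cap=30.0):
    """Delays (in seconds) between retries, growing exponentially up to a cap."""
    return [min(cap, base * factor ** attempt) for attempt in range(retries)]
```

A caller would wrap each outbound request in `breaker.call(...)` and sleep for the successive values of `backoff_delays(...)` between retries of transient errors. In practice a small random jitter is usually added to each delay so that many clients do not retry in lockstep.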

Architect for Horizontal Scalability

Design your system for growth from day one. Horizontal scalability is the practice of increasing capacity by adding more machines to your resource pool, as opposed to vertical scaling (adding more power to a single machine). It is the preferred approach for cloud-native applications due to its elasticity and fault tolerance.

Key techniques include:

- Load Balancing: Use a load balancer (e.g., Nginx, HAProxy, or a cloud provider's service) to distribute incoming traffic across multiple server instances using algorithms like Round Robin, Least Connections, or IP Hash.

- Stateless Application Layers: Ensure your application servers do not store client session data locally. Externalize state to a shared data store like Redis or a database. This allows any server to handle any request, making scaling out and in trivial.

- Database Read Replicas: For read-heavy workloads, create one or more read-only copies of your primary database. Direct read queries that can tolerate slight replication lag to these replicas, so the primary mainly handles writes and the reads that must see the latest data.
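
To make two of the load-balancing algorithms named above concrete, here is a small sketch of Round Robin and Least Connections selection. The class names and the `app-1`, `app-2` server labels are hypothetical; real load balancers such as Nginx or HAProxy implement these strategies with health checks, weights, and concurrency control that this example omits.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Send each new request to the server with the fewest active requests."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties resolve to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
rr_order = [rr.pick() for _ in range(4)]

lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()    # both idle, so the first server is chosen
second = lc.acquire()   # app-1 is busy, so app-2 is chosen
lc.release(first)       # app-1 finishes its request
third = lc.acquire()    # app-1 is idle again
```

Either strategy only works cleanly when the application servers are stateless, since consecutive requests from one client may land on different instances.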

Implement Robust Security at Every Layer

Security must be an integral part of the architecture, not an afterthought. A "defense in depth" strategy, which involves implementing security controls at every layer of the stack, is essential for protecting against threats.

Focus on these fundamentals:

- Input Validation: Validate and sanitize all user-supplied data on the server side, and pair this with parameterized queries and context-aware output encoding, to prevent injection attacks like SQL Injection and Cross-Site Scripting (XSS).

- Secure Authentication: Implement standard, battle-tested authentication protocols like OAuth 2.0 and OpenID Connect. Use signed JSON Web Tokens (JWTs) to carry identity claims between parties, and verify the signature and expiry of each token before trusting it.

- Principle of Least Privilege: Ensure that every component and user in the system has only the minimum set of permissions required to perform its function.
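
The input-handling point can be illustrated with a short comparison of string-built SQL and a parameterized query, using Python's built-in `sqlite3` module. The `users` table and both lookup functions are hypothetical, and the email check is deliberately simplistic; it stands in for whatever validation rules an application actually needs.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, is_admin INTEGER)")
db.executemany(
    "INSERT INTO users (email, is_admin) VALUES (?, ?)",
    [("ada@example.com", 1), ("lin@example.com", 0)],
)


def find_user_unsafe(email):
    # VULNERABLE: user input is spliced directly into the SQL text.
    sql = f"SELECT id, email FROM users WHERE email = '{email}'"
    return db.execute(sql).fetchall()


def find_user_safe(email):
    # Validate shape first (a deliberately simple check for illustration).
    if not isinstance(email, str) or "@" not in email or len(email) > 254:
        raise ValueError("invalid email")
    # Parameter binding keeps the input as data, never as SQL.
    return db.execute(
        "SELECT id, email FROM users WHERE email = ?", (email,)
    ).fetchall()


malicious = "x@y' OR '1'='1"
leaked = find_user_unsafe(malicious)   # the injected OR clause matches every row
blocked = find_user_safe(malicious)    # treated as a literal string, matches nothing
```

The malicious string passes the simple format check, which is why validation alone is not enough: the parameterized query is what prevents the injected clause from being executed.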

For a comprehensive guide on integrating security into your development lifecycle, review these DevOps security best practices.

Optimize the Persistence Layer

The database is frequently the primary performance bottleneck in a web application. A single unoptimized query can degrade the performance of the entire system.

A well-indexed query can execute orders of magnitude faster than its unindexed counterpart. Proactive query analysis and indexing provide one of the highest returns on investment for performance optimization.
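
The effect of an index on a query plan can be observed directly. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` because SQLite ships with Python; other databases expose similar tools with different output formats. The `orders` table and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
db.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql, params):
    """Return the planner's description of how it would run the query."""
    rows = db.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, unused, detail); the detail column is readable text.
    return " | ".join(row[3] for row in rows)


plan_before = plan(QUERY, (42,))   # full table scan: every row is examined
db.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
plan_after = plan(QUERY, (42,))    # index search: only matching rows are visited
```

Printing `plan_before` and `plan_after` shows the planner switching from a scan of the whole table to a search that uses `idx_orders_customer_id`.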

Prioritize these actions:

- Database Indexing: Use your database's query analyzer (e.g., `EXPLAIN ANALYZE` in PostgreSQL) to identify slow queries. Create indexes on columns used in `WHERE` clauses, `JOIN` conditions, and `ORDER BY` clauses to accelerate data retrieval.

- Multi-Layer Caching: Implement caching at various levels of your application. This can include caching database query results, API responses, and fully rendered HTML fragments. This significantly reduces the load on backend systems.

- Asynchronous Communication: For long-running tasks like sending emails or processing large files, do not block the main request thread. Use a message queue like RabbitMQ or Kafka to offload the task to a background worker process. The application can then respond immediately to the user, improving perceived performance.
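
The asynchronous hand-off described in the last item can be sketched with Python's standard library. Here `queue.Queue` and a background thread stand in for a message broker such as RabbitMQ or Kafka and a separate worker process; the `handle_signup` function and the task format are hypothetical.

```python
import queue
import threading

task_queue = queue.Queue()   # stand-in for a message broker
sent = []                    # records what the worker has processed


def worker():
    """Background worker: pulls tasks off the queue until told to stop."""
    while True:
        task = task_queue.get()
        if task is None:          # sentinel value signals shutdown
            task_queue.task_done()
            break
        sent.append(f"welcome email to {task['email']}")  # the slow work goes here
        task_queue.task_done()


def handle_signup(email):
    """Request handler: enqueue the slow task and respond immediately."""
    task_queue.put({"type": "send_welcome_email", "email": email})
    return {"status": 202, "message": "signup accepted"}


thread = threading.Thread(target=worker, daemon=True)
thread.start()

response = handle_signup("ada@example.com")  # returns without waiting for the email
task_queue.join()                            # for the demo only: wait for the worker
task_queue.put(None)
thread.join()
```

The handler returns a 202-style response as soon as the task is queued. With a real broker, the queue also survives application restarts, which an in-process queue like this one does not.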

Sustaining a clean architecture over time requires actively managing technical debt. Explore these strategies for tackling technical debt to keep your system maintainable.

Common Questions About Web Application Architecture

Even with a firm grasp of architectural patterns and best practices, specific implementation questions often arise during a project. Addressing these common technical dilemmas is key to making sound architectural decisions.

When Should I Choose Microservices Over a Monolith?

This is a critical decision that defines a project's trajectory. A monolith is generally the pragmatic choice for Minimum Viable Products (MVPs), projects with inherently coupled business logic, or small development teams. The initial simplicity of development and deployment allows for rapid iteration.

Conversely, a microservices architecture should be strongly considered for large, complex applications that require high scalability and team autonomy. If the product roadmap involves multiple independent teams shipping features concurrently, or if you need the flexibility to use different technology stacks for different business domains (polyglot persistence/programming), microservices provide the necessary decoupling. The initial operational overhead is significant, but it is justified by the long-term benefits of independent deployability and improved fault isolation.

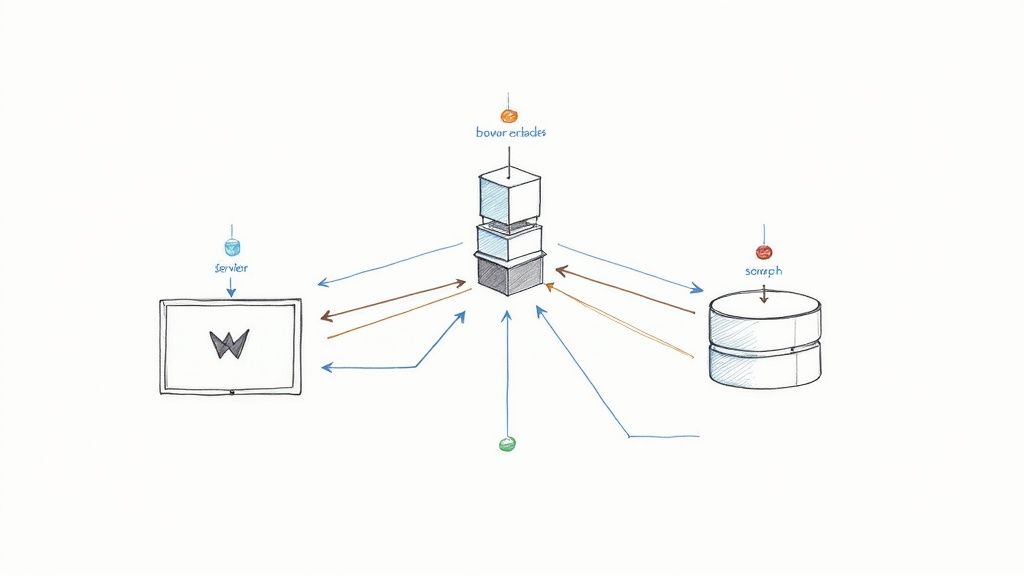

What Is the Role of an API Gateway in a Distributed System?

An API Gateway acts as a single entry point for all client requests to a backend system, particularly one based on microservices. It sits between the client applications and the backend services.

Its primary functions include:

- Request Routing: It intelligently routes incoming requests to the appropriate downstream microservice.

- Cross-Cutting Concerns: It centralizes the implementation of concerns that apply to multiple services, such as authentication, authorization, rate limiting, and logging. This prevents code duplication in the microservices themselves.

- Response Aggregation: It can invoke multiple microservices and aggregate their responses into a single, unified response for the client, simplifying client-side logic.

By acting as this intermediary, an API Gateway decouples clients from the internal structure of the backend and provides a centralized point for security and policy enforcement.
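
A toy version of these responsibilities might look like the following. The `ApiGateway` class, the token set, the path prefixes, and the fixed-window rate limiter are all assumptions made for illustration; production gateways use real token verification, more sophisticated rate-limiting algorithms, and network calls to the downstream services.

```python
import time


class ApiGateway:
    def __init__(self, routes, valid_tokens, rate_limit=5, window=60.0,
                 clock=time.monotonic):
        self.routes = routes              # path prefix -> downstream service callable
        self.valid_tokens = valid_tokens
        self.rate_limit = rate_limit      # max requests per client per window
        self.window = window
        self.clock = clock
        self._windows = {}                # client_id -> (window_start, request_count)

    def handle(self, path, token, client_id):
        # Cross-cutting concern 1: authentication, checked once for all services.
        if token not in self.valid_tokens:
            return 401, "unauthorized"

        # Cross-cutting concern 2: fixed-window rate limiting per client.
        now = self.clock()
        start, count = self._windows.get(client_id, (now, 0))
        if now - start >= self.window:
            start, count = now, 0
        if count >= self.rate_limit:
            return 429, "rate limit exceeded"
        self._windows[client_id] = (start, count + 1)

        # Request routing: forward to the first service whose prefix matches.
        for prefix, service in self.routes.items():
            if path.startswith(prefix):
                return 200, service(path)
        return 404, "no route"


gateway = ApiGateway(
    routes={
        "/users": lambda path: f"user-service handled {path}",
        "/orders": lambda path: f"order-service handled {path}",
    },
    valid_tokens={"secret-token"},
    rate_limit=2,
)
```

Clients call `gateway.handle(...)` with a single entry point in mind, while the mapping from path prefix to service stays private to the backend.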

An API Gateway is not merely a reverse proxy; it is a strategic control plane. It abstracts the complexity of a distributed backend, enhancing security, manageability, and the developer experience for frontend teams.

How Does Serverless Differ from Containerization?

This distinction is about the level of abstraction. Both serverless computing (e.g., AWS Lambda) and containerization (e.g., Docker and Kubernetes) are modern deployment models, but they operate at different layers of the infrastructure stack.

Serverless (FaaS) abstracts away all infrastructure management. You deploy code as event-triggered functions, and the cloud provider automatically handles provisioning, scaling, and execution. The billing model is based on actual execution time, making it highly cost-effective for event-driven workloads or applications with sporadic traffic.

Containerization, using tools like Docker, packages an application with all its dependencies into a standardized unit called a container. You are still responsible for deploying and managing the lifecycle of these containers, often using an orchestrator like Kubernetes. Containers provide greater control over the execution environment, which is beneficial for complex applications with specific OS-level dependencies.

Why Is a Stateless Architecture Better for Scalability?

A stateless application design is a prerequisite for effective horizontal scaling. The principle is that the application server does not store any client-specific session data between requests. Each request is treated as an independent transaction, containing all the information necessary for its processing.

This is critical because it means any server in a cluster can process a request from any client at any time. Session state is externalized to a shared data store, such as a distributed cache like Redis or a database. This decoupling of compute and state allows you to dynamically add or remove server instances in response to traffic fluctuations without disrupting user sessions, which is the essence of elastic horizontal scalability.
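
The following sketch shows why externalized session state lets any instance serve any request. The `SessionStore` class is an in-memory stand-in for a shared store such as Redis, and `AppServer`, `login`, and `add_to_cart` are hypothetical names for this example.

```python
import uuid


class SessionStore:
    """In-memory stand-in for a shared store such as Redis."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        return self._data.get(session_id)

    def set(self, session_id, value):
        self._data[session_id] = value


class AppServer:
    """A stateless server instance: it holds a store handle, not session data."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def login(self, user):
        session_id = uuid.uuid4().hex
        self.store.set(session_id, {"user": user, "cart": []})
        return session_id

    def add_to_cart(self, session_id, item):
        session = self.store.get(session_id)
        if session is None:
            raise KeyError("unknown session")
        session["cart"].append(item)
        self.store.set(session_id, session)
        return {"served_by": self.name, "cart": list(session["cart"])}


store = SessionStore()
server_a = AppServer("app-1", store)
server_b = AppServer("app-2", store)

session_id = server_a.login("ada")                   # login handled by app-1
reply_b = server_b.add_to_cart(session_id, "book")   # next request lands on app-2
reply_a = server_a.add_to_cart(session_id, "pen")    # and the one after on app-1
```

Because neither server keeps the cart in its own memory, instances can be added or removed between requests without losing the user's session.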
