Mastering Software Release Cycles

Dive deep into modern software release cycles. This technical guide covers agile vs DevOps, CI/CD pipelines, automation best practices, and key DORA metrics.

A software release cycle is the sequence of stages that transforms source code from a developer's machine into a feature in a user's hands. It’s the entire automated or manual process for building, testing, and deploying software. A well-defined cycle isn't just a process; it's a critical engineering system that dictates your organization's delivery velocity, product quality, and competitive agility.

What Are Software Release Cycles

At its core, a software release cycle is the technical and procedural bridge between a git commit and production deployment. This is a critical engineering function that imposes structure on how features are planned, built, tested, and shipped. Without a well-defined cycle, engineering teams operate in a state of chaos, characterized by missed deadlines, production incidents, and a high change failure rate.

A mature release process establishes a predictable cadence for the entire organization. It provides concrete answers to key questions like, "When will feature X be deployed?" and "What is the rollback plan for this update?" This predictability is invaluable, enabling the synchronization of engineering velocity with marketing campaigns, sales enablement, and customer support training.

From Monoliths to Micro-Updates

Historically, software was released in large, infrequent batches known as "monolithic" releases. Teams would spend months, or even years, developing a massive update, culminating in a high-stakes, "big bang" deployment. This approach, inherent to the Waterfall methodology, was slow, incredibly risky, and provided almost no opportunity to react to customer feedback. A single critical bug discovered late in the cycle could delay the entire release for weeks.

Today, the industry has shifted dramatically toward smaller, high-frequency releases. This evolution is driven by methodologies like Agile and the engineering culture of DevOps, which prioritize velocity and iterative improvement. Instead of one major release per year, high-performing teams now deploy code multiple times a day.

This is a fundamental paradigm shift in engineering.

A well-managed release cycle transforms software delivery from a high-risk event into a routine, low-impact business operation. The goal is to make releases so frequent and reliable that they become boring.

The Strategic Value of a Defined Cycle

Implementing a formal software release cycle provides tangible engineering and business benefits. It creates a framework for continuous improvement and operational excellence. A structured approach enables teams to:

- Improve Product Quality: By integrating dedicated testing phases (like Alpha and Beta) and automated quality gates, you systematically identify and remediate bugs before they impact the user base.

- Increase Development Velocity: A repeatable, automated process eliminates manual toil. This frees up engineers from managing deployments to focus on writing code and solving business problems.

- Enhance Predictability and Planning: Business stakeholders get a clear view of the feature pipeline, allowing for coordinated go-to-market strategies across the company.

- Mitigate Deployment Risk: Deploying small, incremental changes is inherently less risky than a monolithic release. The blast radius of a potential issue is minimized, and Mean Time To Recovery (MTTR) is significantly reduced.

Before comparing release models, it helps to break down the key stages of a modern release cycle. The table below gives a technical overview of each stage.

Key Stages of a Modern Release Cycle

| Stage | Primary Objective | Key Activities & Tooling |
|---|---|---|
| Development | Translate requirements into functional code. | Coding, peer reviews (pull requests), unit testing (JUnit, pytest), git commit. |
| Testing | Validate code stability, functionality, and performance. | Integration testing, automated end-to-end testing (Cypress), static code analysis (SonarQube). |
| Staging/Pre-Production | Validate the release artifact in a production-mirror environment. | Final QA validation, smoke testing, user acceptance testing (UAT), stakeholder demos. |
| Deployment/Release | Promote the tested artifact to the live production environment. | Canary releases, blue-green deployments, feature flag management (LaunchDarkly). |
| Post-Release | Monitor application health and impact of the release. | Observability (Prometheus, Grafana), error tracking (Sentry), log analysis (ELK Stack). |

These stages form the technical backbone of software delivery. Now, let's explore how different methodologies orchestrate these stages to ship code.

A Technical Breakdown of Release Phases

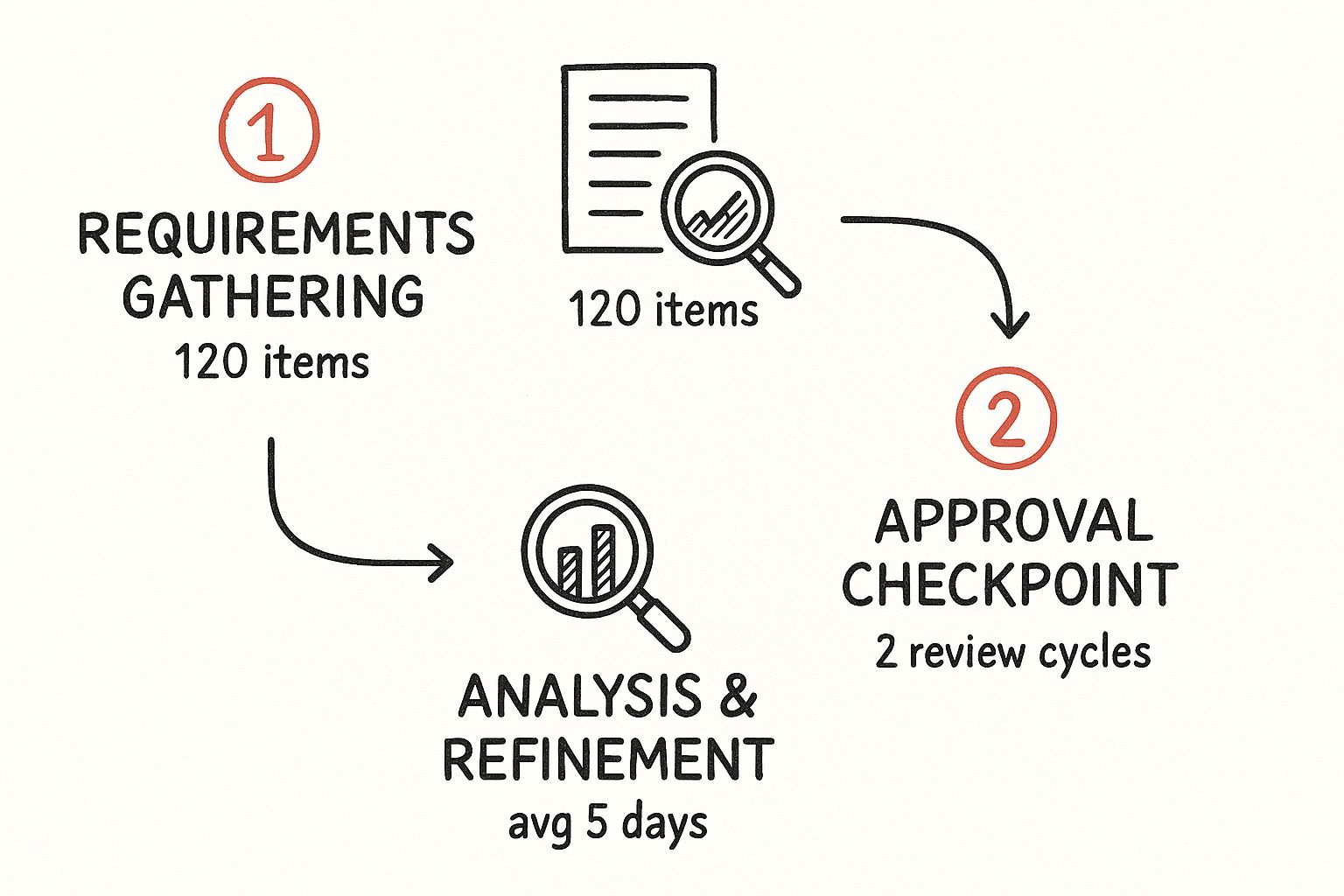

To fully understand software release cycles, one must follow the artifact's journey from a developer's first line of code to its execution in a production environment. This is a sequence of distinct, technically driven phases, each with specific goals, tooling, and quality gates.

For engineering and operations teams, optimizing these phases is the key to shipping reliable software on a predictable schedule. The process begins before a single line of code is written, with rigorous planning and requirements definition. This upfront work establishes the scope and success criteria for the subsequent development effort.

The flow below illustrates a typical planning process, from a backlog of ideas to the approved user stories that initiate development.

This funneling process ensures that engineering resources are focused on validated, high-value business objectives.

Pre-Alpha: The Genesis of a Feature

The Pre-Alpha phase translates a user story into functional code. It commences with sprint planning, where product owners and developers define and commit to a scope of work. Once development begins, version control becomes the central hub of activity.

Many teams employ a branching strategy such as GitFlow. A dedicated feature branch is created from the develop branch for each task (e.g., feature/user-authentication). This practice isolates new, unstable code from the stable integration branch, preventing developers from disrupting each other's work. Developers commit their code to these feature branches, often multiple times a day.

Alpha and Beta: Internal Validation and Real-World Feedback

Once a feature is "code complete," it enters the Alpha phase. The developer merges their feature branch into a develop or integration branch via a pull request, which triggers a series of automated checks. A core component of modern development is a robust Continuous Integration pipeline, which you can learn more about in our guide on what is continuous integration. This CI pipeline automatically executes unit tests and integration tests to provide immediate feedback on code quality and detect regressions.

The primary goal here is internal validation. Quality Assurance (QA) engineers execute automated end-to-end tests using frameworks like Cypress or Selenium. These tools simulate user workflows in a browser, verifying that critical paths through the application remain functional.

Next, the Beta phase exposes the software to a limited, external audience, serving as its first real-world validation. A tight feedback loop is critical, often facilitated by tools that capture bug reports, crash data (e.g., Sentry), and user suggestions directly from the application. This User Acceptance Testing (UAT) provides invaluable data on how the software performs under real-world network conditions and usage patterns—scenarios that are impossible to fully replicate in-house.

A well-structured UAT process can uncover up to 60% of critical bugs that automated and internal QA tests might miss. Why? Because real users interact with software in wonderfully unpredictable ways.

Release Candidate: Locking and Stabilizing

After Beta feedback is triaged and addressed, the feature graduates to the Release Candidate (RC) phase. This milestone is typically marked by a "code freeze," a policy prohibiting new features or non-critical changes from being merged into the release branch. The team's sole focus becomes stabilization.

A new release branch is created from the develop branch (e.g., release/v1.2.0). The team executes a final, exhaustive suite of regression tests against this RC build. If any show-stopping bugs are found, fixes are committed directly to the release branch and then merged back into develop to prevent regression. The objective is to produce a build artifact that is identical to what will be deployed to production. During this stage, solid strategies for managing project scope creep are vital to prevent last-minute changes from destabilizing the release.

General Availability: Deployment and Monitoring

Finally, the release reaches General Availability (GA). The stable release candidate branch is merged into the main branch and tagged with a version number (e.g., v1.2.0). This tagged commit becomes the immutable source of truth for the production release. The focus now shifts to deployment strategy.

Common deployment patterns include:

- Blue-Green Deployment: Two identical production environments ("Blue" and "Green") are maintained. If Blue is live, the new version is deployed to the idle Green environment. After verification, a load balancer or router redirects all traffic to Green. This provides near-zero downtime and a simple rollback mechanism: redirect traffic back to Blue.

- Canary Release: The new version is rolled out to a small subset of users (e.g., 1%). The team monitors performance metrics and error rates for this cohort. If the metrics remain healthy, the release is gradually rolled out to the entire user base.
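The canary pattern above depends on assigning each user to the same cohort on every request. The sketch below shows one common way to do that with a stable hash. The function names, the salt value, and the 10,000-bucket granularity are illustrative assumptions, not the behavior of any particular load balancer or service mesh.

```python
import hashlib


def in_canary(user_id: str, rollout_percent: float, salt: str = "release-v1.2.0") -> bool:
    """Return True if this user should be served the canary build.

    The salt (hypothetical here) ties bucketing to one release, so a
    different release puts different users in the early cohort.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Map the first 4 bytes of the hash onto 10,000 buckets (0.01% steps).
    bucket = int.from_bytes(digest[:4], "big") % 10_000
    return bucket < rollout_percent * 100


def pick_backend(user_id: str, rollout_percent: float) -> str:
    """Choose which deployment serves the request."""
    return "canary" if in_canary(user_id, rollout_percent) else "stable"
```

Because the bucket for a given user never changes within a release, raising `rollout_percent` from 1 to 10 only adds users to the canary cohort. Nobody who already has the new version is moved back to the old one.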

Post-release monitoring is an active, ongoing part of the cycle. Teams use observability platforms with tools like Prometheus for metrics collection and Grafana for visualization, tracking application health, resource utilization, and error rates in real time. This immediate feedback loop is critical for validating the release's stability or alerting the on-call team to an incident before it impacts the entire user base.
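The monitoring feedback loop can also be turned into an automated gate. The sketch below compares the error rate of the new cohort against the stable one and returns a verdict. The thresholds (`min_requests`, `max_ratio`, `abs_floor`) are hypothetical defaults chosen for illustration. In practice the request and error counts would come from your metrics system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CohortStats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(
    stable: CohortStats,
    canary: CohortStats,
    min_requests: int = 500,   # assumed minimum sample before judging
    max_ratio: float = 2.0,    # assumed tolerance relative to stable
    abs_floor: float = 0.01,   # assumed error rate that is always acceptable
) -> str:
    """Return "wait", "promote", or "rollback" for the canary cohort."""
    if canary.requests < min_requests:
        return "wait"  # not enough traffic yet to draw a conclusion
    allowed = max(stable.error_rate * max_ratio, abs_floor)
    return "promote" if canary.error_rate <= allowed else "rollback"
```

The absolute floor stops a very quiet stable cohort (zero errors) from forcing a rollback over a single canary error.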

Comparing Release Methodologies

The choice of methodology for your software release cycles is a foundational engineering decision. It dictates team culture, technology stack, and the velocity at which you can deliver value. These are not merely project management styles; they are distinct engineering philosophies.

Each methodology—Waterfall, Agile, and DevOps—provides a different framework for building software and managing change, impacting everything from inter-team communication protocols to the toolchains engineers use daily.

The Waterfall Blueprint: Linear and Locked-In

The Waterfall model represents the traditional, sequential approach to software development. It's a rigid, linear process where each phase must be fully completed before the next begins. Requirements are gathered and signed off upfront, followed by a stepwise progression through design, implementation, verification, and deployment.

This rigidity imposes significant technical constraints, often leading to monolithic architectures. Since the requirements are fixed early on, there is little to no room for adaptation, making it a poor fit for products in dynamic markets. Its primary use today is in projects with immutable scope, such as those in heavily regulated government or aerospace sectors.

- Team Structure: Characterized by functional silos. Requirements analysts, designers, developers, QA testers, and operations engineers work in separate teams with formal handoffs between stages.

- Architecture: Naturally encourages monolithic applications. The long development cycle makes it prohibitively expensive and difficult to refactor the architecture once development is underway.

- Tooling: Emphasizes comprehensive documentation and heavyweight project management tools like Microsoft Project. Testing is typically a manual, end-of-cycle phase.

For a side-by-side look at the strategic differences, this guide comparing Waterfall vs Agile methodologies breaks things down nicely.

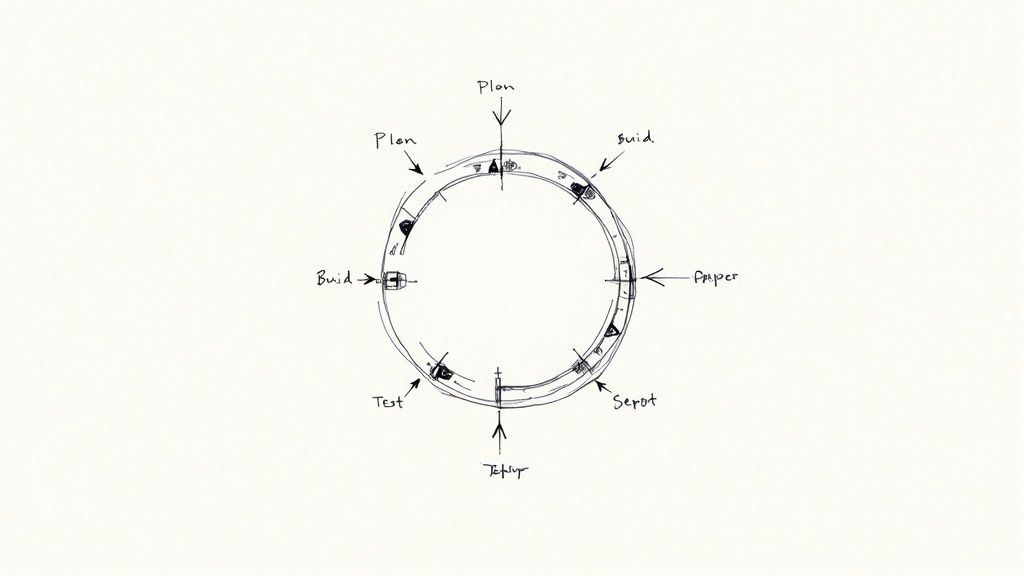

Agile: Built for Iteration and Adaptation

Agile methodologies, such as Scrum and Kanban, emerged as a direct response to the inflexibility of Waterfall. Scrum decomposes development into short, iterative cycles called sprints, typically lasting 1 to 4 weeks, while Kanban manages work as a continuous flow with limits on work in progress.

At the conclusion of each sprint, the team delivers a potentially shippable increment of the product. The methodology is built around feedback loops and champions cross-functional teams where developers, testers, and product owners collaborate continuously. This adaptability makes Agile an excellent fit for microservices architectures, where individual services can be developed, tested, and deployed independently.

The core technical advantage of Agile is its tight feedback loop. By building and shipping in small increments, teams can rapidly validate technical decisions and user assumptions, drastically reducing the risk of investing months in building a feature that provides no value.

DevOps: Fusing Agile Culture with Automation

DevOps represents the logical extension of Agile principles, aiming to eliminate the final silo between development ("Dev") and operations ("Ops"). The overarching goal is to merge software development and IT operations into a single, seamless, and highly automated workflow.

The engine of DevOps is the CI/CD pipeline (Continuous Integration/Continuous Delivery or Deployment). This automated pipeline orchestrates the entire release process—from a developer's code commit to building, testing, and deploying the artifact. This high degree of automation is what enables elite teams to confidently deploy new code to production multiple times per day. We break down the finer points in our guide on continuous deployment vs continuous delivery.

Looking forward, the integration of AI is set to further accelerate these cycles. By 2025, AI-powered tools are expected to simplify complex tech stacks and make development more accessible through low-code/no-code platforms. While Agile remains the dominant approach, with roughly 63% of teams using it, the underlying DevOps principles of speed and automation are becoming universal.

Technical Comparison of Release Methodologies

To analyze these models from a technical standpoint, a direct comparison is useful. The table below outlines key engineering differences.

| Attribute | Waterfall | Agile | DevOps |
|---|---|---|---|
| Architecture | Monolithic, tightly coupled systems | Often microservices, loosely coupled components | Microservices, serverless, container-based |
| Release Frequency | Infrequent (months or years) | Frequent (weeks) | On-demand (multiple times per day) |
| Testing Approach | Separate, end-of-cycle phase (often manual) | Continuous testing integrated into each sprint | Fully automated, continuous testing in the CI/CD pipeline |
| Team Structure | Siloed, functional teams (Dev, QA, Ops) | Cross-functional, self-organizing teams | Single, unified team with shared ownership (Dev + Ops) |
| Risk Management | Risk is high; identified late in the process | Risk is low; addressed incrementally in each iteration | Risk is minimized through automation, monitoring, and rapid rollback |
| Toolchain | Project management, documentation tools | Collaboration tools, sprint boards (Jira, Trello) | CI/CD, IaC, monitoring, container orchestration (Jenkins, Terraform) |

Ultimately, your choice of methodology is a strategic decision that directly impacts your ability to compete. Migrating from Waterfall to Agile provides flexibility, but embracing a DevOps culture and toolchain is what delivers the velocity and reliability required by modern software products.

Putting Best Practices Into Action

Theoretical knowledge is valuable, but building a high-performance release process requires implementing specific, actionable engineering practices. These are battle-tested methods that differentiate elite teams by enabling them to ship code faster, safer, and more reliably.

This is where we move from concepts to the tangible disciplines of software engineering. Every detail, from versioning schemes to infrastructure management, directly impacts delivery speed and system stability. The objective is to engineer a system where releasing software is a routine, low-risk, automated activity, not a high-stress, all-hands-on-deck event.

Versioning with Semantic Precision

A foundational practice is implementing a standardized versioning scheme. Semantic Versioning (SemVer) is the de facto industry standard, providing a universal language for communicating the nature of changes in a release. Its MAJOR.MINOR.PATCH format instantly conveys the impact of an update.

The specification is as follows:

- MAJOR version (X.0.0): Incremented for incompatible API changes. This signals a breaking change that will require consumers of the API to update their code.

- MINOR version (1.X.0): Incremented when new functionality is added in a backward-compatible manner.

- PATCH version (1.0.X): Incremented for backward-compatible bug fixes.

Adopting SemVer reduces ambiguity. When a dependency updates from 2.1.4 to 2.1.5, you can expect a backward-compatible bug fix. A change to 2.2.0 signals new, non-breaking features, while a jump to 3.0.0 is a clear warning to consult the release notes and audit your code for required changes. These signals are only as reliable as the maintainers' adherence to the specification, so your own test suite remains the final check.
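To make the upgrade rules concrete, here is a minimal sketch that parses two MAJOR.MINOR.PATCH strings and reports what kind of change separates them. It deliberately handles only the three-number core and ignores pre-release and build metadata. The names `Version`, `parse`, and `classify_upgrade` are illustrative, not part of any library.

```python
import re
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


# Three dot-separated non-negative integers without leading zeros.
_CORE = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def parse(text: str) -> Version:
    """Parse a plain MAJOR.MINOR.PATCH string into a comparable tuple."""
    match = _CORE.fullmatch(text)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return Version(*(int(part) for part in match.groups()))


def classify_upgrade(old: str, new: str) -> str:
    """Return "major", "minor", "patch", or "not an upgrade"."""
    a, b = parse(old), parse(new)
    if b <= a:
        return "not an upgrade"
    if b.major != a.major:
        return "major"   # breaking change: read the release notes
    if b.minor != a.minor:
        return "minor"   # new backward-compatible functionality
    return "patch"       # backward-compatible bug fix
```

A check like this can sit in a dependency-update job, for example to auto-merge patch upgrades while routing major upgrades to a human reviewer.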

Decouple Deployment from Release with Feature Flags

One of the most powerful techniques in modern software delivery is to separate the deployment of code from its release to users. This means you can merge code into the main branch and deploy it to production servers while it remains invisible to users. This is achieved through feature flagging.

A feature flag is essentially a conditional statement (if/else) in your code that controls the visibility of a new feature. This allows you to toggle functionality on or off for specific user segments—or everyone—without requiring a new deployment. Tools like LaunchDarkly or Flagsmith are designed to manage these flags at scale.

By using feature flags, teams can safely deploy unfinished work, perform canary releases to small groups of users (like "beta testers" or "customers in Canada"), and instantly flip a "kill switch" to disable a buggy feature if it causes trouble.

This fundamentally changes the risk profile of deployments. Pushing code to production becomes a routine technical task. The business decision to release a feature becomes a separate, controlled action that can be executed at the optimal time.
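As a rough illustration of the conditional at the heart of a feature flag, the sketch below keeps flags in an in-memory dictionary. Hosted tools such as LaunchDarkly or Flagsmith expose their own SDKs, so the `Flag` class and `is_enabled` function here are hypothetical stand-ins, not their APIs.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Flag:
    enabled: bool = False                    # False acts as the kill switch
    segments: frozenset[str] = frozenset()   # empty means "everyone"


def is_enabled(
    flags: dict[str, Flag],
    name: str,
    user_segments: frozenset[str] = frozenset(),
) -> bool:
    """Decide whether a user sees the feature guarded by `name`."""
    flag = flags.get(name)
    if flag is None or not flag.enabled:
        return False            # unknown or switched-off flags hide the feature
    if not flag.segments:
        return True             # released to all users
    return bool(flag.segments & user_segments)


# The new code path is deployed, but only beta testers can reach it.
flags = {"new-checkout": Flag(enabled=True, segments=frozenset({"beta-testers"}))}
if is_enabled(flags, "new-checkout", frozenset({"beta-testers"})):
    pass  # render the new checkout flow here
```

Setting `flags["new-checkout"].enabled = False` hides the feature for every user at once, with no redeployment.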

Automate Everything: Infrastructure and Security

A truly robust release process requires automation that extends beyond the application layer to the underlying infrastructure. Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure (servers, databases, networks) through machine-readable definition files. Using tools like Terraform or AWS CloudFormation, you define your entire environment in version-controlled code.

This enforces consistency across all environments—development, staging, and production—eliminating the "it works on my machine" class of bugs. For a deeper dive into building a resilient automation backbone, our guide on CI/CD pipeline best practices is an excellent resource.

Security must also be an integrated, automated part of the pipeline. The DevSecOps philosophy embeds security practices directly into the development lifecycle. This involves running automated security tools on every code commit.

- Static Application Security Testing (SAST): Tools like SonarQube or Snyk scan source code for known vulnerabilities and security anti-patterns.

- Dynamic Application Security Testing (DAST): Tools probe the running application, simulating attacks to identify vulnerabilities like SQL injection or cross-site scripting.

By automating these checks within the CI pipeline (e.g., via Jenkins or GitHub Actions), you "shift security left," catching and remediating vulnerabilities early when they are significantly cheaper and easier to fix. This proves that high velocity and strong security are not mutually exclusive.

Finding Your Optimal Release Cadence

"How often should we release?" is a critical question for any engineering organization, and there is no single correct answer. The optimal release cadence is a dynamic equilibrium between market demand, technical capability, and business risk tolerance. Achieving this balance is key to maintaining a competitive edge without compromising product stability.

Your ideal release frequency is not static; it is a variable influenced by several key factors. Releasing too frequently can lead to developer burnout and a high change failure rate, eroding user trust. Releasing too slowly means delayed value delivery, loss of market share to more agile competitors, and unmet customer needs.

Technical and Business Drivers of Your Cadence

Ultimately, your release frequency is constrained by your technical architecture and operational maturity, but it is driven by business and market requirements. A large, monolithic application with complex interdependencies and a manual testing process cannot technically support daily deployments. Conversely, a decoupled microservices architecture with a mature CI/CD pipeline and high test coverage can.

When determining your cadence, you must evaluate these core factors:

- Architectural Limitations: Is your application designed for independent deployability? A tightly coupled monolith requires extensive regression testing for even minor changes, inherently slowing the release cycle.

- Team Capacity and Maturity: What is your team's proficiency with automation, testing, and DevOps practices? A high-performing team can sustain a much faster release tempo.

- Market Expectations: In a fast-paced B2C market, users expect a continuous stream of new features and fixes. In a conservative B2B enterprise environment, customers may prefer less frequent, predictable updates that they can plan and train for.

- Risk Tolerance: What is the business impact of a production incident? For an e-commerce site, a minor bug may be an annoyance. For software controlling a medical device, the consequences can be catastrophic.

The objective isn't merely to release faster; it's to build a system that enables you to release at the speed the business requires while maintaining high standards of quality and stability. Automation is the core enabler of this capability.

Contrasting Industry Standards

Software release cycles vary dramatically across industries, largely due to differing user expectations and regulatory constraints. In the gaming industry, weekly updates are standard practice as of 2025, keeping players engaged with new content. Contrast this with healthcare or finance, where software releases often occur on a quarterly schedule to accommodate rigorous compliance and security validation processes.

This disparity is almost always a reflection of automation maturity. Organizations with sophisticated CI/CD pipelines can deploy up to 200 times more frequently than those reliant on manual processes. You can get a deeper look at these deployment frequency trends on eltegra.ai.

Striking the Right Balance

Determining your optimal cadence is an iterative process. Begin by establishing a baseline. Measure your current state using key DORA metrics like Deployment Frequency and Change Failure Rate. If your failure rate is high, you must invest in improving test automation and CI/CD practices before attempting to increase release velocity.

If your system is stable, experiment by increasing the release frequency for a single team or service. A common mistake is enforcing a "one-size-fits-all" release schedule across the entire organization. A superior strategy is to empower individual teams to determine the cadence that best suits their specific service's architecture and risk profile. This allows you to innovate rapidly where it matters most while ensuring stability for critical core services.

The Dollars and Cents Behind a Release

A software release cycle is an economic activity as much as it is a technical one. Every decision, from the chosen development methodology to the CI/CD toolchain, has a direct financial impact. A release is not just a technical milestone; it is a significant business investment.

A substantial portion of a project's budget is consumed during the initial development phases. Data indicates that design and implementation can account for over 63% of a project's total cost. This represents a major capital expenditure before any revenue is generated.

How Your Methodology Hits Your Wallet

The structure of your release cycle directly influences cash flow. The Waterfall model requires a massive upfront capital investment. Because each phase is sequential, the majority of the budget is spent long before the product is launched. This is a high-risk financial model; if the product fails to gain market traction, the entire investment is lost.

Agile, by contrast, aligns with an operational expenditure model. Investment is made in smaller, self-contained sprints. This approach distributes the cost over time, creating a more predictable financial outlook and significantly reducing risk. A return on investment can be realized on individual features much sooner, providing the financial agility modern businesses require.

The Real ROI of Automation and Upkeep

As software complexity increases, so do the associated costs, particularly in Quality Assurance. QA expenses have been observed to increase by up to 26% as digital products become more sophisticated. You can dig into more software development trends and stats over at Manektech.com. This is where investment in test automation yields a clear and significant return.

View test automation as a direct cost-reduction strategy. It identifies defects early in the development cycle when they are exponentially cheaper to fix. This shift reduces manual QA hours and prevents the need for expensive, reputation-damaging hotfixes post-release.

Expenditure does not cease at launch. Post-release maintenance is a significant, recurring operational cost that is often underestimated.

- Annual Maintenance: As a rule of thumb, ongoing maintenance costs approximately 15-20% of the initial development budget annually. For a $1 million project, this translates to $150,000 to $200,000 per year in operational expenses.
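The rule of thumb is simple enough to check in a few lines. This sketch assumes a flat yearly rate with no inflation or growth in scope, which is a simplification. The function names are illustrative.

```python
def annual_maintenance_range(
    initial_budget: float, low: float = 0.15, high: float = 0.20
) -> tuple:
    """Yearly maintenance cost range under the 15-20% rule of thumb."""
    return initial_budget * low, initial_budget * high


def total_cost_of_ownership(initial_budget: float, years: int, rate: float) -> float:
    """Initial build plus `years` of maintenance at a flat yearly rate."""
    return initial_budget * (1 + rate * years)
```

For the $1 million project above, five years of maintenance at these rates adds $750,000 to $1,000,000 on top of the original build cost.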

Here, the business case for modern DevOps practices becomes undeniable. Investments in CI/CD pipelines, automated testing, and Infrastructure as Code can be directly correlated to reduced waste, lower maintenance costs, and faster time-to-market, providing a clear path to improved financial performance.

Got Technical Questions? We've Got Answers

When implementing modern software delivery practices, several recurring technical challenges arise. Addressing these correctly is critical for any team striving to improve its release process. Let's tackle some of the most common questions from engineers and technical leaders.

What Is the Difference Between Continuous Delivery and Continuous Deployment?

This is a fundamental concept, and the distinction is subtle but critical.

Continuous Delivery ensures that your codebase is always in a deployable state. Every change committed to the main branch is automatically built, tested, and packaged into a release artifact. However, the final step of deploying this artifact to production requires a manual trigger. This provides a human gate for making the final business decision on when to release.

Continuous Deployment is the next level of automation. It removes the manual trigger. If an artifact successfully passes all automated tests and quality gates in the pipeline, it is automatically deployed to production without human intervention. The choice between the two depends on your organization's risk tolerance and the maturity of your automated testing suite.
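The difference comes down to one branch at the end of the pipeline. The sketch below models stages as plain functions that return True on success. `run_pipeline`, `auto_deploy`, and `approved` are hypothetical names rather than settings from any specific CI system.

```python
from __future__ import annotations

from typing import Callable, Iterable

Stage = Callable[[], bool]


def run_pipeline(
    stages: Iterable[tuple[str, Stage]],
    auto_deploy: bool,
    approved: bool = False,
) -> str:
    """Run stages in order, then decide whether production is updated.

    auto_deploy=True models Continuous Deployment (no human gate).
    auto_deploy=False models Continuous Delivery (a person must approve).
    """
    for name, stage in stages:
        if not stage():
            return f"failed:{name}"     # a red stage always stops the release
    if auto_deploy or approved:
        return "deployed"
    return "awaiting-approval"          # artifact is ready, release is a human decision


stages = [("build", lambda: True), ("test", lambda: True)]
print(run_pipeline(stages, auto_deploy=False))  # Continuous Delivery
print(run_pipeline(stages, auto_deploy=True))   # Continuous Deployment
```

In both modes a failing stage blocks the release. The only thing that changes is who, or what, makes the final call after a green build.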

How Do You Handle Database Migrations in an Automated Release?

Managing database schema changes within a CI/CD pipeline is a high-stakes operation. An incorrect approach can lead to data corruption or application downtime.

The non-negotiable first step is to bring database schema changes under version control. Schema migration tools like Flyway or Liquibase are designed for this purpose, allowing you to manage, version, and apply schema changes programmatically.

The core principle is to always ensure backward compatibility.

The golden rule is simple: Never deploy code that relies on a database change before that migration has actually run. Always deploy the database change first, then the application code that needs it. This little sequence is your best defense against errors.

For non-trivial schema changes (e.g., renaming a column), an expand-and-contract pattern is often employed to achieve zero-downtime migrations. This involves multiple deployments: 1) Add the new column/table (expand). 2) Deploy code that writes to both old and new schemas. 3) Backfill data from the old schema to the new. 4) Deploy code that reads only from the new schema and, once that is verified, stops writing to the old one. 5) Finally, deploy a change to remove the old schema (contract). This phased approach ensures the application remains functional throughout the migration process.
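The phases can be walked through end to end with Python's built-in sqlite3 module. This is a sketch under stated assumptions: the `users` table and the rename from `fullname` to `display_name` are invented for illustration, and each function stands in for what would be a separate deployment or migration script (for example, one managed by Flyway or Liquibase).

```python
import sqlite3


def expand(db: sqlite3.Connection) -> None:
    # Phase 1: add the new column; existing code keeps working untouched.
    db.execute("ALTER TABLE users ADD COLUMN display_name TEXT")


def dual_write(db: sqlite3.Connection, user_id: int, name: str) -> None:
    # Phase 2: application code writes to both old and new columns.
    db.execute(
        "UPDATE users SET fullname = ?, display_name = ? WHERE id = ?",
        (name, name, user_id),
    )


def backfill(db: sqlite3.Connection) -> None:
    # Phase 3: copy rows that were never touched by the dual-write code.
    db.execute("UPDATE users SET display_name = fullname WHERE display_name IS NULL")


def contract(db: sqlite3.Connection) -> None:
    # Phase 5: drop the old column. A table rebuild is used here because
    # older SQLite versions lack ALTER TABLE ... DROP COLUMN.
    db.executescript(
        """
        CREATE TABLE users_new (id INTEGER PRIMARY KEY, display_name TEXT);
        INSERT INTO users_new (id, display_name) SELECT id, display_name FROM users;
        DROP TABLE users;
        ALTER TABLE users_new RENAME TO users;
        """
    )


def demo() -> list:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, fullname TEXT)")
    db.executemany(
        "INSERT INTO users (id, fullname) VALUES (?, ?)", [(1, "Ada"), (2, "Grace")]
    )
    expand(db)
    dual_write(db, 1, "Ada Lovelace")
    backfill(db)
    contract(db)
    # Phase 4 in miniature: reads now use only the new column.
    return db.execute("SELECT id, display_name FROM users ORDER BY id").fetchall()
```

On a production database each phase would ship separately, with time in between to confirm that no running application version still depends on the old column.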

What Key Metrics Measure Release Cycle Health?

"If you can't measure it, you can't improve it." To objectively assess the performance of your release process, focus on the four key DORA metrics. These have become the industry standard for measuring the effectiveness of a software delivery organization.

- Deployment Frequency: How often does your organization successfully release to production? Elite performers deploy on-demand, multiple times a day.

- Lead Time for Changes: How long does it take to get a commit from a developer's workstation into production? Elite performers achieve this in less than one hour.

- Change Failure Rate: What percentage of deployments to production result in a degraded service and require remediation (e.g., a hotfix or rollback)? Elite performers have a rate under 15%.

- Time to Restore Service: When an incident or defect that impacts users occurs, how long does it take to restore service? Elite performers recover in less than one hour.

Tracking these four metrics provides a balanced view of both throughput (speed) and stability (quality), offering a data-driven framework for continuous improvement.
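A baseline for the four metrics can be computed from a simple deployment log. The sketch below assumes a hypothetical `Deployment` record with commit, deploy, and restore timestamps. As a simplification, it measures time to restore from the moment of the failed deployment rather than from when the incident was detected.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False                    # degraded service, needed remediation
    restored_at: Optional[datetime] = None  # when service was healthy again


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_summary(deployments: list[Deployment], window_days: int) -> dict[str, float]:
    """Summarize the four DORA metrics over a reporting window."""
    if not deployments or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_times = [_hours(d.deployed_at - d.committed_at) for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [
        _hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deployments) / window_days,
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours": median(restores) if restores else 0.0,
    }
```

Medians are used instead of means so that one unusually slow change or long outage does not dominate the picture.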
