Mastering Software Quality Assurance Processes: A Technical Guide

A technical guide to essential software quality assurance processes. Learn to build quality into your SDLC with actionable strategies and proven methodologies.

Software quality assurance isn't a procedural checkbox; it's an engineering discipline. It is a systematic approach focused on preventing defects throughout the software development lifecycle (SDLC), not merely detecting them at the end.

This represents a fundamental paradigm shift. Instead of reactively debugging a near-complete application, you architect the entire development process to minimize the conditions under which bugs can be introduced.

Building Quality In, Not Bolting It On

Historically, QA was treated as a final validation gate before a release. A siloed team received a feature drop and was tasked with identifying all its flaws. This legacy model is inefficient, costly, and incompatible with modern high-velocity software delivery methodologies like CI/CD.

A deeply integrated approach is required, where quality is a shared responsibility, engineered into every stage of the SDLC. This is the core principle of modern software quality assurance processes.

Quality cannot be "added" to a product post-facto; it must be built in from the first commit.

The Critical Difference Between QA and QC

To implement this effectively, it's crucial to understand the technical distinction between Quality Assurance (QA) and Quality Control (QC). These terms are often conflated, but they represent distinct functions.

- Quality Control (QC) is reactive and product-centric. It involves direct testing and inspection of the final artifact to identify defects. Think of it as executing a test suite against a compiled binary.

- Software Quality Assurance (SQA) is proactive and process-centric. It involves designing, implementing, and refining the processes and standards that prevent defects from occurring. It's about optimizing the SDLC itself to produce higher-quality outcomes.

Consider an automotive assembly line. QC is the final inspector who identifies a scratch on a car's door before shipment. SQA is the team that engineers the robotic arm's path, specifies the paint's chemical composition, and implements a calibration schedule to ensure such scratches are never made.

QC finds defects after they're created. SQA engineers the process to prevent defect creation. This proactive discipline is the foundation of high-velocity, high-reliability software engineering.

Why Proactive SQA Matters

A process-first SQA focus yields significant technical and business dividends. A defect identified during the requirements analysis phase—such as an ambiguous acceptance criterion—can be rectified in minutes with a conversation.

If that same logical flaw persists into production, the cost to remediate it is commonly cited as up to 100x greater. This cost encompasses not just developer time for patching and redeployment, but also potential data corruption, customer churn, and brand reputation damage.

This isn't merely about reducing rework; it's about increasing development velocity. By building upon a robust foundation of clear standards, automated checks, and well-defined processes, development teams can innovate with greater confidence. Ultimately, rigorous software quality assurance processes produce systems that are reliable, predictable, and earn user trust through consistent performance.

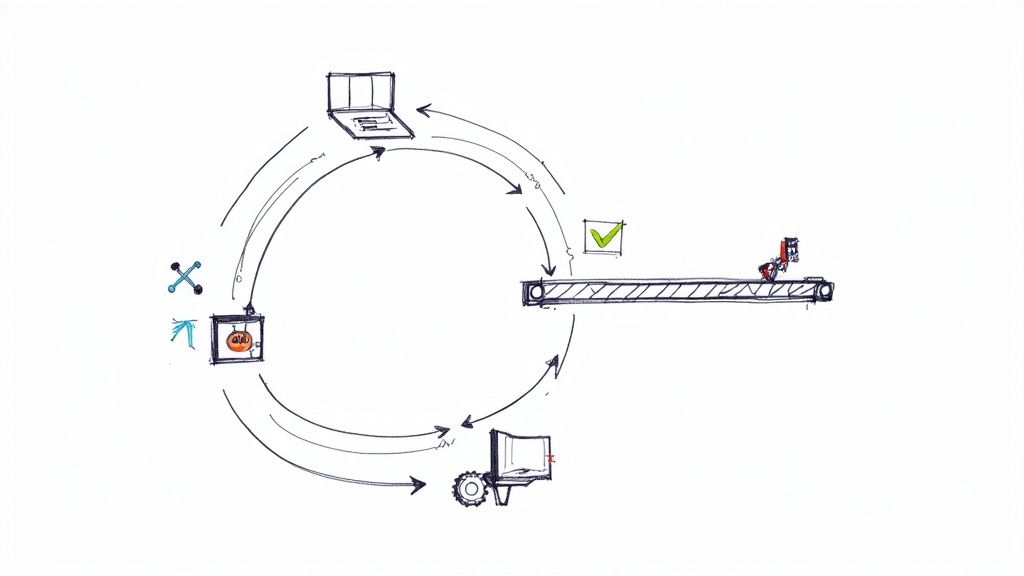

The Modern SQA Process Lifecycle

A mature software quality assurance process is not a chaotic pre-release activity but a systematic, multi-phase lifecycle engineered for predictability and precision. Each phase builds upon the outputs of the previous one, methodically transforming an abstract requirement into a tangible, high-quality software artifact. The objective is to embed quality into the development workflow, from initial design to post-deployment monitoring.

This lifecycle is governed by a proactive engineering mindset. It commences long before code is written and persists after deployment, establishing a continuous feedback loop that drives iterative improvement. Let's deconstruct the technical phases of this modern SQA process.

Proactive Requirements Analysis

The entire lifecycle is predicated on the quality of its inputs, making QA's involvement in requirements analysis non-negotiable. The primary goal is to eliminate ambiguity before it can propagate downstream as a defect. QA engineers collaborate with product managers and developers to rigorously scrutinize user stories and technical specifications.

Their core function is to define clear, objective, and testable acceptance criteria. A requirement like "user login should be fast" is untestable and therefore useless. QA transforms it into a specific, verifiable statement: "The /api/v1/login endpoint must return a 200 OK status with a JSON Web Token (JWT) in the response body within 300ms at the 95th percentile (p95) under a simulated load of 50 concurrent users." This precision eradicates guesswork and provides a concrete engineering target.
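A criterion this precise can be turned directly into an executable check. The following is a minimal sketch, assuming the latency samples (in milliseconds) have already been collected by a load tool. The function names `p95` and `meets_login_slo` and the choice of the nearest-rank percentile method are illustrative assumptions, not part of the requirement itself.

```python
from typing import Sequence


def p95(samples_ms: Sequence[float]) -> float:
    """Return the 95th percentile of the samples (nearest-rank method)."""
    if not samples_ms:
        raise ValueError("at least one latency sample is required")
    ordered = sorted(samples_ms)
    # Nearest rank = ceil(0.95 * n), computed with integers to avoid float error.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_login_slo(samples_ms: Sequence[float], threshold_ms: float = 300.0) -> bool:
    """True when the p95 latency is within the threshold from the criterion."""
    return p95(samples_ms) <= threshold_ms
```

With 100 samples, the check passes when at most five of them exceed 300 ms, which is exactly what "within 300ms at p95" promises.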

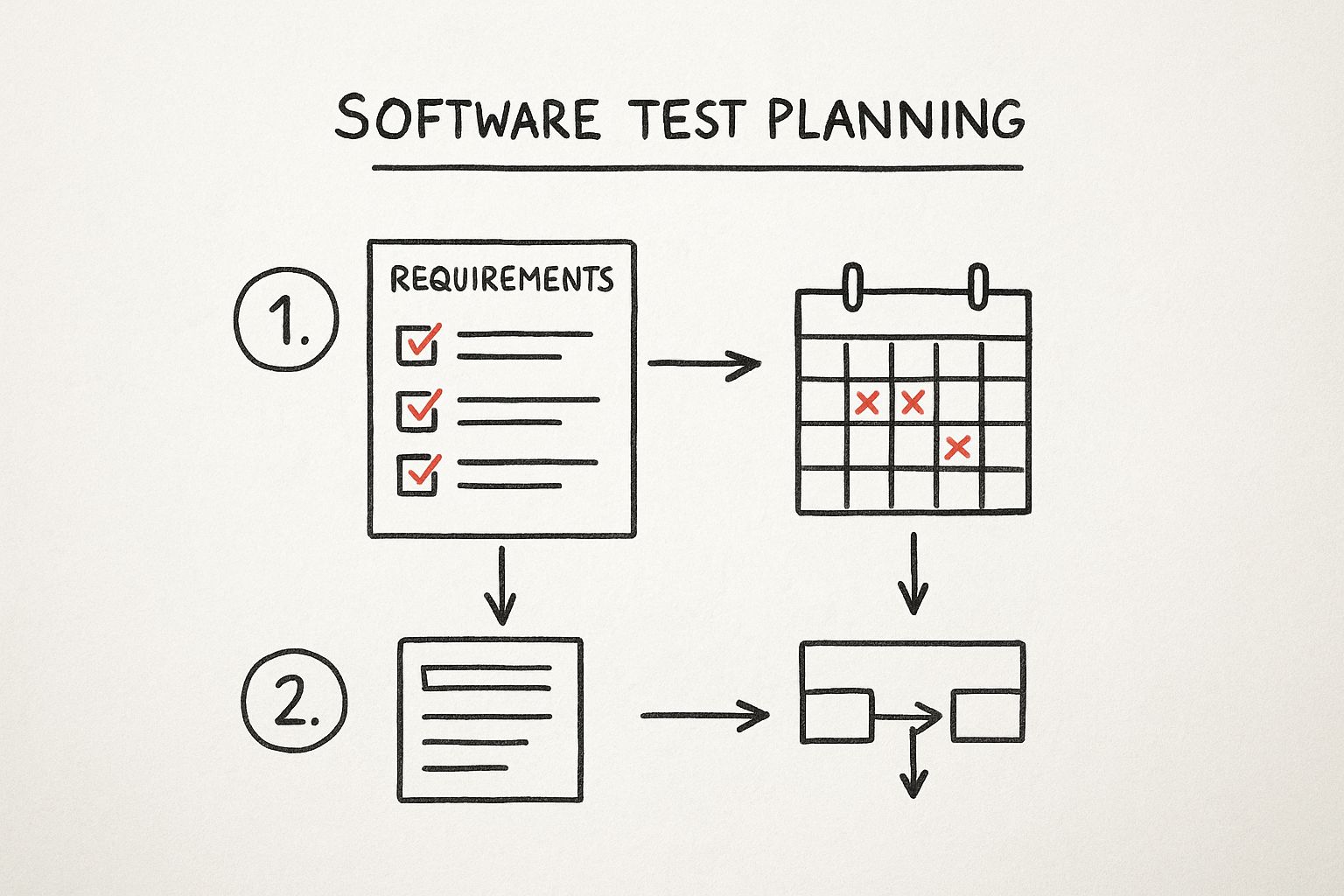

Strategic Test Planning

With validated requirements, the next phase is to architect a comprehensive test strategy. This moves beyond ad-hoc test cases to a risk-based approach, concentrating engineering effort on areas with the highest potential impact or failure probability. The primary artifact produced is the Master Test Plan.

This document codifies the testing scope and approach, detailing:

- Objectives and Scope: Explicitly defining which user stories, features, and API endpoints are in scope, and just as critically, which are out of scope for the current cycle.

- Risk Analysis: Identifying high-risk components (e.g., payment gateways, data migration scripts, authentication services) that require more extensive test coverage.

- Resource and Environment Allocation: Specifying the necessary infrastructure, software versions (e.g., Python 3.9, PostgreSQL 14), and seed data required for test environments.

- Schedules and Deliverables: Aligning testing milestones with the overall project timeline, ensuring integration into the broader software release lifecycle.

Strategic planning provides a clear, executable roadmap for the entire quality effort.

A well-structured plan, with clear dependencies and timelines, is essential for an organized and effective testing phase.

Systematic Test Design and Environment Provisioning

This phase translates the high-level strategy into executable test cases and scripts. Effective test design prioritizes robustness, reusability, and maintainability. This includes writing explicit steps, defining precise expected outcomes (e.g., "expect HTTP status 201 Created"), and employing design patterns like the Page Object Model (POM) in UI automation to decouple test logic from UI implementation, reducing test fragility.
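The Page Object Model mentioned above can be sketched in a few lines. This is an illustration under stated assumptions: `FakeDriver` is a hypothetical stand-in for a real browser driver so that the example runs without a browser, and the selectors and the `LoginPage` class are invented for the example.

```python
from typing import Dict, List


class FakeDriver:
    """Hypothetical stand-in for a browser driver; records what the page does."""

    def __init__(self) -> None:
        self.fields: Dict[str, str] = {}
        self.clicked: List[str] = []

    def type_into(self, selector: str, text: str) -> None:
        self.fields[selector] = text

    def click(self, selector: str) -> None:
        self.clicked.append(selector)


class LoginPage:
    """Page object: the only place that knows the login page's selectors."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def log_in(self, username: str, password: str) -> None:
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def test_login_submits_credentials() -> None:
    # The test expresses intent ("log in") and never touches a selector.
    driver = FakeDriver()
    LoginPage(driver).log_in("alice", "s3cret")
    assert driver.fields[LoginPage.USERNAME] == "alice"
    assert driver.clicked == [LoginPage.SUBMIT]
```

If the UI changes a selector, only `LoginPage` needs to be updated; every test that calls `log_in` keeps working, which is the fragility reduction the pattern is meant to deliver.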

Concurrently, consistent test environments are provisioned. Modern teams leverage Infrastructure as Code (IaC) using tools like Terraform or configuration management tools like Ansible. This practice ensures that every test environment—from a developer's local Docker container to the shared staging server—is an identical, reproducible clone of the production configuration, eliminating the "it works on my machine" class of defects.

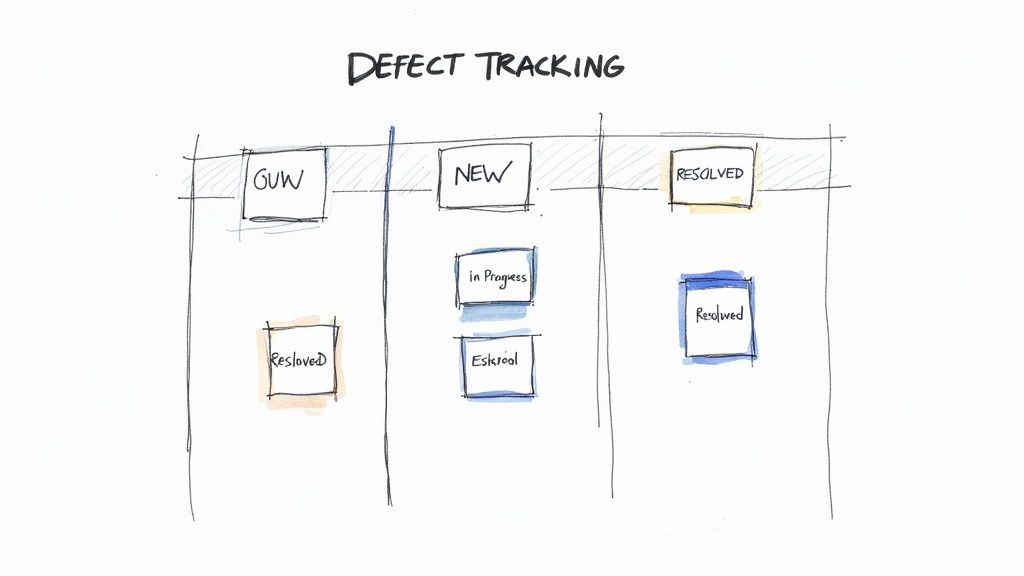

Rigorous Test Execution and Defect Management

Execution is the phase where planned tests are run against the application under test (AUT). This is a methodical process, not an exploratory one. Testers execute test cases systematically, whether manually or through automated suites integrated into a CI/CD pipeline.

When an anomaly is detected, a detailed defect report is logged in a tracking system like Jira. A high-quality bug report is a technical document containing:

- A clear, concise title summarizing the fault.

- Numbered, unambiguous steps to reproduce the issue.

- Expected result vs. actual result.

- Supporting evidence: screenshots, HAR files, API request/response payloads, and relevant log snippets (e.g., `tail -n 100 /var/log/app.log`).

This level of detail is critical for minimizing developer time spent on diagnosis, directly reducing the Mean Time To Resolution (MTTR). The global software quality automation market is projected to reach USD 58.6 billion in 2025, a testament to the industry's investment in optimizing this process.

A great defect report isn't an accusation; it's a collaboration tool. It provides the development team with all the necessary information to replicate, understand, and resolve a bug efficiently, turning a problem into a quick solution.
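The report fields listed above can be captured as a small data structure with a completeness check. This is a hypothetical sketch: the class name `DefectReport`, its field names, and the rules in `problems` are assumptions made for illustration and do not correspond to any tracker's actual schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DefectReport:
    """Hypothetical structure mirroring the report fields listed above."""

    title: str
    steps_to_reproduce: List[str]
    expected_result: str
    actual_result: str
    evidence: List[str] = field(default_factory=list)  # e.g. log or HAR file paths

    def problems(self) -> List[str]:
        """Return reasons the report is not yet actionable (empty means OK)."""
        issues = []
        if not self.title.strip():
            issues.append("title is empty")
        if not self.steps_to_reproduce:
            issues.append("no steps to reproduce")
        if self.expected_result.strip() == self.actual_result.strip():
            issues.append("expected and actual results are identical")
        if not self.evidence:
            issues.append("no supporting evidence attached")
        return issues
```

A check like this could run before a report is submitted, so that incomplete reports are caught by the reporter rather than by the developer trying to reproduce the fault.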

Test Cycle Closure and Retrospectives

Upon completion of the test execution phase, the cycle is formally closed. This involves analyzing the collected data to generate a Release Readiness Report. This report summarizes key metrics like code coverage trends, pass/fail rates by feature, and the number and severity of open defects. It provides stakeholders with the quantitative data needed to make an informed go/no-go decision for the release.
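As a rough illustration of how such a report could be derived from raw results, the sketch below computes pass rates by feature and a simple go/no-go signal. The function names, the input shape (a feature name paired with a pass flag), and the 95% default threshold are all assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def pass_rate_by_feature(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Map each feature to the percentage of its test results that passed."""
    totals: Dict[str, int] = defaultdict(int)
    passed: Dict[str, int] = defaultdict(int)
    for feature, ok in results:
        totals[feature] += 1
        passed[feature] += int(ok)
    return {feature: 100.0 * passed[feature] / totals[feature] for feature in totals}


def is_release_ready(
    pass_rates: Dict[str, float],
    open_critical_defects: int,
    min_pass_rate: float = 95.0,  # assumed threshold; tune per project
) -> bool:
    """Go only if every feature clears the bar and no critical defect is open."""
    return open_critical_defects == 0 and all(
        rate >= min_pass_rate for rate in pass_rates.values()
    )
```

In practice the final decision still belongs to stakeholders; a function like this only makes the quantitative inputs explicit and repeatable.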

The process doesn't end with the report. The team conducts a retrospective to analyze the SQA process itself. What were the sources of test flakiness? Did a gap in the test plan allow a critical bug to slip through? The insights from this meeting are used to refine the process for the next development cycle, ensuring the software quality assurance process itself is a system subject to continuous improvement.

Your Engineering Guide To QA Testing Types

Building robust software requires systematically verifying its behavior through a diverse array of testing types. A comprehensive quality assurance process leverages a portfolio of testing methodologies, each designed to validate a specific aspect of the system. Knowing which technique to apply at each stage of the SDLC is a hallmark of a mature engineering organization.

These testing types can be broadly categorized into two families: functional and non-functional.

Functional testing answers the question: "Does the system perform its specified functions correctly?" Non-functional testing addresses the question: "How well does the system perform those functions under various conditions?"

Dissecting Functional Testing

Functional tests are the foundation of any SQA strategy. They verify the application's business logic against its requirements, ensuring that inputs produce the expected outputs. This is achieved through a hierarchical approach, starting with granular checks and expanding to cover the entire system.

The functional testing hierarchy is often visualized as the "Testing Pyramid":

- Unit Tests: Written by developers, these tests validate the smallest possible piece of code in isolation—a single function, method, or class. They are executed via frameworks like JUnit or PyTest, run in milliseconds, and provide immediate feedback within the CI pipeline. They form the broad base of the pyramid.

- Integration Tests: Once units are verified, integration tests check the interaction points between components. This could be the communication between two microservices via a REST API, or an application's ability to correctly read from and write to a database. Understanding API testing is paramount here, as APIs are the connective tissue of modern software.

- System Tests: These are end-to-end (E2E) tests that validate the complete, integrated application. They simulate real user workflows in a production-like environment to ensure all components function together as a cohesive whole to meet the specified business requirements.

- User Acceptance Testing (UAT): The final validation phase before release. Here, actual end-users or product owners execute tests to confirm that the system meets their business needs and is fit for purpose in a real-world context.
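To make the base of the pyramid concrete, here is a minimal unit test sketch using Python's built-in `unittest` module. The function under test, `apply_discount`, is a hypothetical example invented for illustration; the same tests could be written as plain `test_` functions for PyTest.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a whole-number percentage discount, rounded down."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    """Each test exercises one behavior of one function, with no I/O."""

    def test_applies_percentage(self) -> None:
        self.assertEqual(apply_discount(20_000, 25), 15_000)

    def test_zero_percent_is_a_no_op(self) -> None:
        self.assertEqual(apply_discount(999, 0), 999)

    def test_rejects_out_of_range_percent(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(1_000, 101)
```

Because nothing here touches a network, database, or filesystem, the suite runs in milliseconds, which is what makes unit tests suitable as the first check on every commit.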

Exploring Critical Non-Functional Testing

A feature that functionally works but takes 30 seconds to load is, from a user's perspective, broken. While functional tests confirm the application's correctness, non-functional tests ensure its operational viability, building user trust and system resilience.

Non-functional testing is what separates a merely functional product from a truly reliable and delightful one. It addresses the critical "how" questions—how fast, how secure, and how easy is it to use?

Critical non-functional testing disciplines include:

- Performance Testing: A category of testing focused on measuring system behavior under load. It includes Load Testing (simulating expected user traffic), Stress Testing (pushing the system beyond its limits to identify its breaking point), and Spike Testing (evaluating the system's response to sudden, dramatic increases in load).

- Security Testing: A non-negotiable practice involving multiple tactics. SAST (Static Application Security Testing) analyzes source code for known vulnerabilities. DAST (Dynamic Application Security Testing) probes the running application for security flaws. This often culminates in Penetration Testing, where security experts attempt to ethically exploit the system.

- Usability Testing: This focuses on the user experience (UX). It involves observing real users as they interact with the software to identify points of confusion, inefficient workflows, or frustrating UI elements.
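The idea behind load testing can be sketched with the standard library alone. This is a simplified illustration, not a replacement for a dedicated load tool: `run_load` is a hypothetical helper, and `request` stands for whatever callable issues one request against the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_load(request: Callable[[], None], users: int, requests_per_user: int) -> List[float]:
    """Call `request` from `users` concurrent workers; return latencies in ms."""

    def one_user() -> List[float]:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            request()
            latencies.append((time.perf_counter() - start) * 1000.0)
        return latencies

    # One thread per simulated user, all started together.
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(one_user) for _ in range(users)]
        return [ms for future in futures for ms in future.result()]
```

The returned samples can then be summarized (for example as a p95 value) and compared against the latency targets defined in the acceptance criteria. Raising `users` well beyond the expected level turns the same harness into a crude stress test.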

The Role of Automated Regression Testing in CI/CD

Every code change, whether a new feature or a refactoring, introduces the risk of inadvertently breaking existing functionality. This is known as a regression.

Manually re-testing the entire application after every commit is logistically infeasible in a CI/CD environment. This is why automated regression testing is a cornerstone of modern SQA.

A regression suite is a curated set of automated tests (a mix of unit, API, and key E2E tests) that cover the application's most critical functionalities. This suite is configured to run automatically on every code commit or pull request. If a test fails, the CI build is marked as failed, blocking the defective code from being merged or deployed. It serves as an automated safety net that enables high development velocity without sacrificing stability.

Comparison of Key Software Testing Types

This table provides a technical breakdown of key testing types, their objectives, typical execution points in the SDLC, and common tooling.

| Testing Type | Primary Objective | When It's Performed | Example Tools |
|---|---|---|---|
| Unit Testing | Verify a single, isolated piece of code (function/method). | During development, in the CI pipeline on commit. | JUnit, NUnit, PyTest, Jest |
| Integration Testing | Ensure different software modules work together correctly. | After unit tests pass, in the CI pipeline. | Postman, REST Assured, Supertest |
| System Testing | Validate the complete and fully integrated application. | On a dedicated staging or QA environment. | Selenium, Cypress, Playwright |
| UAT | Confirm the software meets business needs with real users. | Pre-release, after system testing is complete. | User-led, manual validation |
| Performance Testing | Measure system speed, stability, and scalability. | Staging/performance environment, post-build. | JMeter, Gatling, k6 |
| Security Testing | Identify and fix security vulnerabilities. | Continuously throughout the SDLC. | OWASP ZAP, SonarQube, Snyk |
| Regression Testing | Ensure new code changes do not break existing features. | On every commit or pull request in CI/CD. | Combination of automation tools |

Understanding these distinctions allows for the construction of a strategic, multi-layered quality assurance process that validates all aspects of software reliability and performance.

Integrating QA Into Your CI/CD Pipeline

In modern software engineering, release velocity is a key competitive advantage. The traditional model, where QA is a distinct phase following development, is an inhibitor to this velocity, creating a bottleneck in any DevOps workflow.

To achieve high-speed delivery, quality assurance must be integrated directly into the Continuous Integration and Continuous Deployment (CI/CD) pipeline. This transforms the pipeline from a mere code delivery mechanism into an automated quality assurance engine that provides a rapid feedback loop.

This practice is the technical implementation of the "Shift-Left" philosophy. The core principle is to move testing activities as early as possible in the development lifecycle. Detecting a bug via a failing unit test on a developer's local machine is trivial to fix. Detecting the same bug in production is a high-cost, high-stress incident.

The Technical Blueprint For Pipeline Integration

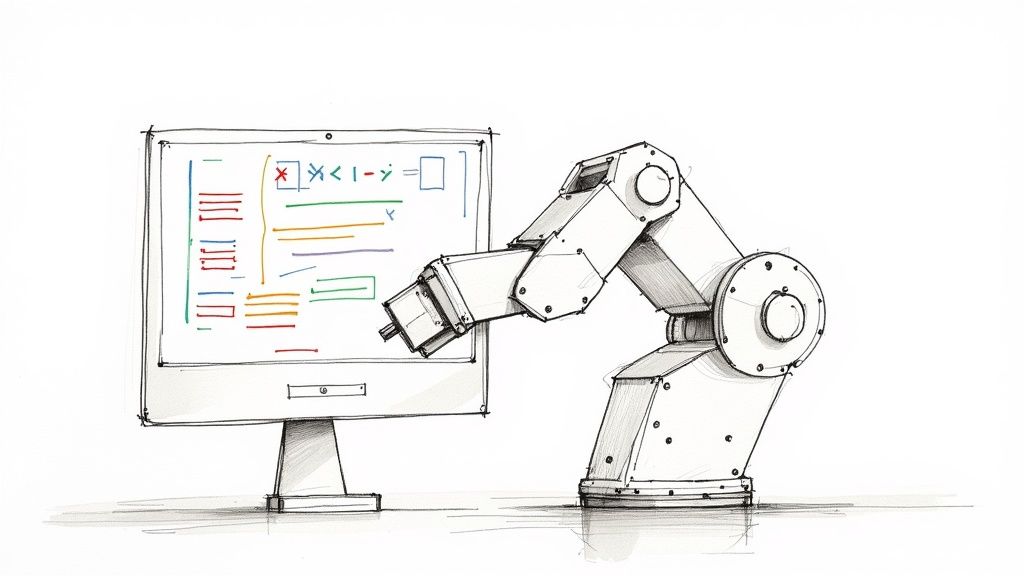

Embedding QA into a CI/CD pipeline involves automating various types of tests at specific trigger points. When a developer commits code, the pipeline automatically orchestrates a sequence of validation stages. It acts as an automated gatekeeper, preventing defective code from progressing toward production.

This continuous, automated validation makes quality a prerequisite for every change, not a final inspection. This is the fundamental mechanism for achieving both speed and stability.

Tools like Jenkins are commonly used as the orchestration engine for these automated workflows. A pipeline dashboard in such a tool provides a clear, stage-by-stage visualization of the build, test, and deployment process, offering immediate insight into the health of any pending release.

Building Automated Quality Gates

Integrating tests is not just about execution; it's about establishing automated quality gates.

A quality gate is a codified, non-negotiable standard within the pipeline. It is an automated decision point that enforces a quality threshold. If the code fails to meet this bar, the pipeline halts progression. This concept is central to shipping code with high velocity and safety.

If the predefined standards are not met, the gate fails, the build is marked as 'failed', and the code is rejected. Here is a step-by-step breakdown of a typical CI/CD pipeline with integrated quality gates:

- Code Commit Triggers the Build: A developer pushes code to a Git repository like GitHub. A configured webhook triggers a build job on a CI server (e.g., Jenkins or GitLab CI). The server clones the repository and initiates the build process.

- Unit & Integration Tests Run: The pipeline's first quality gate is the execution of fast-running tests: the automated unit and integration test suites. These verify the code's internal logic and component interactions. A single test failure causes the build to fail immediately, providing rapid feedback to the developer.

- Automated Deployment to Staging: Upon passing the initial gate, the pipeline packages the application (e.g., into a Docker container) and deploys it to a dedicated staging environment. This environment should be a high-fidelity replica of production.

- API & E2E Tests Kick Off: With the application running in staging, the pipeline triggers the next set of gates. Automated testing frameworks like Selenium or Cypress execute end-to-end (E2E) tests that simulate complex user journeys. Concurrently, API-level tests are executed to validate service contracts and endpoint behaviors.

This layered testing strategy ensures that every facet of the application is validated automatically. The specific structure of these pipelines often depends on the release strategy, so understanding the difference between continuous deployment and continuous delivery is crucial for proper implementation.

The advent of cloud-based testing platforms enables massive parallelization of these tests across numerous browsers and device configurations without managing physical infrastructure. By engineering these automated quality gates, you create a resilient system that facilitates rapid code releases without compromising stability or user trust.
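The gate-by-gate flow described in this section can be modeled in a few lines. This is a conceptual sketch only: real pipelines are defined in the CI server's own configuration format, and `run_pipeline` and the `Gate` type are hypothetical names introduced for illustration.

```python
from typing import Callable, List, Tuple

Gate = Tuple[str, Callable[[], bool]]  # (stage name, check returning True on pass)


def run_pipeline(gates: List[Gate]) -> Tuple[bool, List[str]]:
    """Run gates in order and halt at the first failure, as a quality gate would.

    Returns whether the build passed and the names of the stages that ran.
    """
    executed: List[str] = []
    for name, check in gates:
        executed.append(name)
        if not check():
            return False, executed  # later stages never run
    return True, executed
```

For example, a pipeline of unit tests, a staging deployment, and E2E tests in which the deployment check fails would report the build as failed and show that the E2E stage was never reached. Ordering the cheapest, fastest checks first keeps that failure feedback as quick as possible.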

Measuring The Impact Of Your SQA Processes

In engineering, what is not measured cannot be improved. Without quantitative data, any effort to enhance software quality assurance processes is based on anecdote and guesswork. It is essential to move beyond binary pass/fail results and analyze the key performance indicators (KPIs) that reveal process effectiveness, justify resource allocation, and drive data-informed improvements.

Metrics provide objective evidence of an SQA strategy's health. Tracking the right KPIs transforms abstract quality goals into concrete, actionable insights that guide engineering decisions.

Evaluating Test Suite Health

The entire automated quality strategy hinges on the reliability and efficacy of the test suite. If the engineering team does not trust the test results, the data is useless. Two primary metrics provide a clear signal on the health of your testing assets.

- Code Coverage: This metric quantifies the percentage of the application's source code that is executed by the automated test suite. While 100% coverage is not always a practical or meaningful goal, a low or declining coverage percentage indicates significant blind spots in the testing strategy.

- Flakiness Rate: A "flaky" test exhibits non-deterministic behavior—it passes and fails intermittently without any underlying code changes, often due to race conditions, environment instability, or poorly written assertions. A high flakiness rate erodes trust in the CI pipeline and leads to wasted developer time investigating false positives.

A healthy test suite is characterized by high, targeted code coverage and a flakiness rate approaching zero. This fosters team-wide confidence in the build signal.
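One way to estimate the flakiness rate from CI history is sketched below. It rests on an assumption worth stating plainly: a failure is counted as "false" when the same test also passed on the same commit in another run. The `Run` tuple shape and the function name are hypothetical.

```python
from typing import Iterable, Tuple

Run = Tuple[str, str, bool]  # (test name, commit SHA, passed?)


def flakiness_rate(runs: Iterable[Run]) -> float:
    """Percentage of all runs that failed although the same test passed on the same commit."""
    history = list(runs)
    if not history:
        return 0.0
    # (test, commit) pairs that passed at least once.
    passed_somewhere = {(test, sha) for test, sha, ok in history if ok}
    false_failures = sum(
        1 for test, sha, ok in history if not ok and (test, sha) in passed_somewhere
    )
    return 100.0 * false_failures / len(history)
```

A test that fails consistently on a commit is treated as a genuine failure, not a flaky one, so this heuristic only detects flakiness for tests that are retried or re-run on the same code.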

Mastering Defect Management Metrics

Defect metrics are traditional but powerful indicators of quality. They provide insight not just into the volume of bugs, but also into the team's efficiency at detecting and resolving them before they impact users.

- Defect Density: This measures the number of confirmed defects per unit of code size, typically expressed as defects per thousand lines of code (KLOC). A high defect density in a specific module can be a strong indicator of underlying architectural issues or excessive complexity.

- Defect Leakage: This critical metric tracks the percentage of defects that were not caught by the SQA process and were instead discovered in production (often reported by users). A high leakage rate is a direct measure of the ineffectiveness of the pre-release quality gates.

- Mean Time To Resolution (MTTR): This KPI measures the average time elapsed from when a defect is reported to when a fix is deployed to production. A low MTTR reflects an agile and efficient engineering process.

Monitoring these metrics helps identify weaknesses in both the codebase and the development process. The objective is to continuously drive defect density and leakage down while reducing MTTR.
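The three defect metrics above reduce to simple arithmetic. The following sketch shows one possible way to compute them; the function names and input shapes are assumptions for illustration, and each team will need to decide what counts as a "confirmed defect" or a "resolution" in its own tracker.

```python
from datetime import datetime, timedelta
from typing import Sequence, Tuple


def defect_density(defects: int, lines_of_code: int) -> float:
    """Confirmed defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def defect_leakage(found_in_production: int, found_before_release: int) -> float:
    """Percentage of all known defects that escaped to production."""
    total = found_in_production + found_before_release
    return 100.0 * found_in_production / total if total else 0.0


def mean_time_to_resolution(defects: Sequence[Tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - reported) over the given defects."""
    if not defects:
        raise ValueError("at least one resolved defect is required")
    total = sum((resolved - reported for reported, resolved in defects), timedelta())
    return total / len(defects)
```

For instance, 30 defects in a 60,000-line module give a density of 0.5 per KLOC, and 5 production defects against 45 caught before release give a leakage of 10%.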

Gauging Pipeline and Automation Efficiency

In a DevOps context, the performance and stability of the CI/CD pipeline are directly proportional to the team's ability to deliver value. Effective software quality assurance processes must act as an accelerator, not a brake.

An efficient pipeline is a quality multiplier. It provides rapid feedback, enabling developers to iterate faster and with greater confidence. The goal is to make quality checks a seamless and nearly instantaneous part of the development workflow.

Pipeline efficiency can be measured with these key metrics:

- Test Execution Duration: The total wall-clock time required to run the entire automated test suite. Increasing duration slows down the feedback loop for developers and can become a significant bottleneck.

- Automated Test Pass Rate: The percentage of automated tests that pass on their first run for a new build. A chronically low pass rate can indicate either systemic code quality issues or an unreliable (flaky) test suite.

For teams aiming for elite performance, mastering best practices for continuous integration is a critical next step.

Connecting SQA To Business Impact

Ultimately, quality assurance activities must demonstrate tangible business value. This means translating engineering metrics into financial terms that resonate with business stakeholders. This is especially critical given that 40% of large organizations allocate over a quarter of their IT budget to testing and QA, according to recent software testing statistical analyses. Demonstrating a clear return on this investment is paramount.

Metrics that bridge this gap include:

- Cost per Defect: This calculates the total cost of finding and fixing a single bug, factoring in engineering hours, QA resources, and potential customer impact. This powerfully illustrates the cost savings of early defect detection ("shift-left").

- ROI of Test Automation: This metric compares the cost of developing and maintaining the automation suite against the savings it generates (e.g., reduced manual testing hours, prevention of costly production incidents). A positive ROI provides a clear business case for automation investments.
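The ROI calculation can be expressed as a one-line formula. The sketch below assumes that savings and cost are measured over the same period and in the same currency; how those inputs are estimated is left to the team, and the function name is illustrative.

```python
def automation_roi(savings: float, cost: float) -> float:
    """ROI = (savings - cost) / cost, as a fraction (0.5 means a 50% return)."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (savings - cost) / cost
```

As a worked example with made-up figures, an automation suite that costs 100,000 to build and maintain and saves 150,000 in manual testing and incident costs yields an ROI of 0.5, or 50%. A negative result means the suite has not yet paid for itself.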

Essential SQA Performance Metrics and Formulas

This table summarizes the key performance indicators (KPIs) crucial for tracking the effectiveness and efficiency of your software quality assurance processes.

| Metric | Formula / Definition | What It Measures |
|---|---|---|
| Code Coverage | (Lines of Code Executed by Tests / Total Lines of Code) * 100 | The percentage of your codebase exercised by automated tests, revealing potential testing gaps. |
| Flakiness Rate | (Number of False Failures / Total Test Runs) * 100 | The reliability and trustworthiness of your automated test suite. |
| Defect Density | Total Defects / Size of Codebase (e.g., in KLOC) | The concentration of bugs in your code, highlighting potentially problematic modules. |
| Defect Leakage | (Bugs Found in Production / Total Bugs Found) * 100 | The effectiveness of your pre-release testing at catching bugs before they reach customers. |
| MTTR | Average time from bug report to resolution | The efficiency and responsiveness of your development team in fixing reported issues. |
| Test Execution Duration | Total time to run all automated tests | The speed of your CI/CD feedback loop; a key indicator of pipeline efficiency. |
| ROI of Test Automation | (Savings from Automation - Cost of Automation) / Cost of Automation | The financial value and business justification for your investment in test automation. |

By integrating these metrics into dashboards and regular review cycles, you can transition from a reactive "bug hunting" culture to a proactive, data-driven quality engineering discipline.

SQA In The Real World: Your Questions Answered

Implementing a robust SQA process requires navigating practical challenges. Theory is one thing; execution is another. Here are technical answers to common questions engineers and managers face during implementation.

How Do You Structure A QA Team In An Agile Framework?

The legacy model of a separate QA team acting as a gatekeeper is an anti-pattern in Agile or Scrum environments. It creates silos and bottlenecks. The modern, effective approach is to make quality a shared responsibility of the entire team.

The most effective structure is embedding QA engineers directly within each cross-functional development team. This organizational design has significant technical benefits:

- Tighter Collaboration: The QA engineer participates in all sprint ceremonies, from planning and backlog grooming to retrospectives. They can identify ambiguous requirements and challenge untestable user stories before development begins.

- Faster Feedback Loops: Developers receive immediate feedback on their code within the same sprint, often through automated tests written in parallel with feature development. This reduces the bug fix cycle time from weeks to hours.

- Shared Ownership: When the entire team—developers, QA, and product—is collectively accountable for the quality of the deliverable, a proactive culture emerges. The focus shifts from blame to collaborative problem-solving.

In this model, the QA engineer's role evolves from a manual tester to a "quality coach" or Software Development Engineer in Test (SDET). They empower developers with better testing tools, contribute to the test automation framework, and champion quality engineering best practices across the team.

What Is The Difference Between A Test Plan And A Test Strategy?

These terms are not interchangeable in a mature SQA process; they represent documents of different scope and longevity.

A Test Strategy is a high-level, long-lived document that defines an organization's overarching approach to testing. It's the "constitution" of quality for the engineering department. A Test Plan is a tactical, project-specific document that details the testing activities for a particular release or feature. It's the "battle plan."

The Test Strategy is static and foundational. It answers questions like:

- What are our quality objectives and risk tolerance levels?

- What is our standard test automation framework and toolchain?

- What is our policy on different test types (e.g., target code coverage for unit tests)?

A Test Plan, conversely, is dynamic and scoped to a single project or sprint. It specifies the operational details:

- What specific features, user stories, and API endpoints are in scope for testing?

- What are the explicit entry and exit criteria for this test cycle?

- What is the resource allocation (personnel) and schedule for testing activities?

- What specific test environments (and their configurations) are required?

How Should We Manage Test Data Effectively?

Ineffective test data management (TDM) is a primary cause of flaky and unreliable automated tests. Using production data for testing is a major security risk and introduces non-determinism. A disciplined TDM strategy is essential for stable test automation.

Proper TDM involves several key technical practices:

- Data Masking and Anonymization: Use automated tools to scrub production database copies of all personally identifiable information (PII) and other sensitive data. This creates a safe, realistic, and compliant dataset for staging environments.

- Synthetic Data Generation: For testing edge cases or scenarios not present in production data, use libraries and tools to generate large volumes of structurally valid but artificial data. This is crucial for load testing and testing new features with no existing data.

- Database Seeding Scripts: Every automated test run must start from a known, consistent state. This is achieved through scripts (e.g., SQL scripts, application-level seeders) that are executed as part of the test setup (or `beforeEach` hook) to wipe and populate the test database with a predefined dataset.
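The seeding practice can be illustrated with an in-memory SQLite database and `unittest`, whose `setUp` method plays the role of a `beforeEach` hook. This is a minimal sketch: the `users` table, its rows, and the `seed` helper are hypothetical and exist only for the example.

```python
import sqlite3
import unittest

# Hypothetical seed script: drops, recreates, and fills the table.
SEED_SQL = """
DROP TABLE IF EXISTS users;
CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL);
INSERT INTO users (id, email) VALUES (1, 'alice@example.test');
INSERT INTO users (id, email) VALUES (2, 'bob@example.test');
"""


def seed(conn: sqlite3.Connection) -> None:
    """Wipe and repopulate the test database with the predefined dataset."""
    conn.executescript(SEED_SQL)


class UserTableTest(unittest.TestCase):
    def setUp(self) -> None:
        # Runs before every test, so each test starts from the same state.
        self.conn = sqlite3.connect(":memory:")
        seed(self.conn)

    def tearDown(self) -> None:
        self.conn.close()

    def test_delete_removes_one_row(self) -> None:
        self.conn.execute("DELETE FROM users WHERE id = 1")
        count = self.conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
        self.assertEqual(count, 1)

    def test_starts_from_known_state(self) -> None:
        # Unaffected by the deletion in the other test.
        count = self.conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
        self.assertEqual(count, 2)
```

Because each test reseeds its own database, the tests can run in any order without influencing one another, which removes one common source of flakiness.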

Treating test data as a critical asset, version-controlled and managed with the same rigor as application code, is fundamental to achieving a stable and trustworthy automation pipeline.
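As a small illustration of the masking practice described above, the sketch below replaces an email address with a deterministic pseudonym. It is an assumption-laden example, not a compliance-grade solution: the `mask_email` helper, the salt handling, and the `example.test` domain are all hypothetical, and salted hashing is pseudonymization rather than full anonymization.

```python
import hashlib


def mask_email(email: str, salt: str) -> str:
    """Replace an email with a stable pseudonym derived from a salted hash.

    The same input always maps to the same output, so references between
    tables that share the address remain consistent after masking.
    """
    normalized = email.strip().lower()
    digest = hashlib.sha256((salt + normalized).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.test"
```

The salt should be kept out of the test environment; without it, the mapping cannot be recomputed from a guessed address.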
