Mastering Site Reliability Engineering Principles

Learn essential site reliability engineering principles to build resilient systems. This guide covers SLOs, error budgets, automation, and incident response.

At its core, Site Reliability Engineering (SRE) applies a software engineering mindset to solve infrastructure and operations problems. The objective is to build highly reliable and scalable software systems by automating operational tasks, defining reliability with quantitative metrics, and striking a data-driven balance between deploying new features and ensuring system stability.

Why Modern Systems Need SRE

As digital services scale in complexity and users expect zero downtime, traditional operational models are no longer viable. The classic paradigm—where a development team "throws code over the wall" to a siloed operations team—creates a critical bottleneck. This forces a false choice: either decelerate innovation or accept declining system reliability.

Site Reliability Engineering (SRE) was conceived to resolve this conflict. It reframes operations not as a manual, reactive chore, but as a proactive software engineering challenge. Instead of merely firefighting when systems break, SRE focuses on engineering systems that are inherently resilient to failure.

The Origin of SRE

The discipline was established at Google in 2003 to manage explosive system growth. The siloed structure of development and operations teams was leading to frequent outages and significant delays in feature releases. To address this, a team led by Ben Treynor Sloss began applying engineering principles to operations, aggressively automating repetitive work and building sophisticated monitoring platforms.

This new methodology proved highly effective, dramatically improving system reliability and setting a new industry standard. For a deeper historical context, LogRocket has a great overview of SRE's origins.

This fundamental shift in mindset is the key to SRE. It creates a sustainable, data-driven framework where development velocity and operational stability are aligned, not opposed. Adopting this discipline provides significant technical and business advantages:

- Improved System Reliability: By treating reliability as a core feature with quantifiable goals—not an afterthought—SRE makes systems more resilient and consistently available.

- Faster Innovation Cycles: Data-driven error budgets provide a quantitative framework for risk assessment, allowing teams to release features confidently without guessing about the potential impact on stability.

- Reduced Operational Cost: Ruthless automation eliminates manual toil, freeing up engineers to focus on high-value projects that deliver lasting architectural improvements.

Defining Reliability With SLOs And Error Budgets

In Site Reliability Engineering, reliability is not a qualitative goal; it's a number that is tracked, measured, and agreed upon. This is where the core site reliability engineering principles are implemented, built on two foundational concepts: Service Level Objectives (SLOs) and Error Budgets.

These are not abstract terms. They are practical, quantitative tools that provide a shared, data-driven language to define, measure, and manage service stability. Instead of pursuing the economically unfeasible goal of 100% uptime, SRE focuses on what users actually perceive and what level of performance is acceptable.

From User Happiness To Hard Data

The process begins by identifying the user-critical journeys. Is it API response time? Is it the success rate of a file upload? This defines what needs to be measured.

This brings us to the Service Level Indicator (SLI). An SLI is a direct, quantitative measurement of a specific aspect of your service's performance that correlates with user experience.

Common SLIs used in production environments include:

- Availability: The proportion of valid requests served successfully. Typically expressed as a ratio: `(successful_requests / total_valid_requests) * 100`.

- Latency: The time taken to service a request, measured in milliseconds (ms). It is crucial to measure this at specific percentiles (e.g., 95th, 99th, 99.9th) to understand the experience of the majority of users, not just the average.

- Error Rate: The percentage of requests that fail with a specific error class, such as HTTP 5xx server errors. Calculated as `(failed_requests / total_requests) * 100`.

- Throughput: The volume of requests a system handles, often measured in requests per second (RPS). This is a key indicator for capacity planning.
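As a rough illustration, the four SLIs above can be computed from a list of request records. This is a minimal sketch, not a production pipeline: the `Request` type, the sample data, and the choice to exclude 4xx responses from "valid" requests are all assumptions made for the example, and the percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    status: int        # HTTP status code returned to the client
    latency_ms: float  # time taken to serve the request


def availability_pct(requests):
    """Successful requests as a percentage of valid requests.

    Assumption: 4xx responses are client mistakes and are excluded from the
    set of valid requests; any remaining status below 500 is a success.
    """
    valid = [r for r in requests if not 400 <= r.status < 500]
    successful = [r for r in valid if r.status < 500]
    return 100.0 * len(successful) / len(valid)


def error_rate_pct(requests):
    """Requests that failed with a 5xx status, as a percentage of all requests."""
    failed = [r for r in requests if r.status >= 500]
    return 100.0 * len(failed) / len(requests)


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def throughput_rps(requests, window_seconds):
    """Requests handled per second over the observation window."""
    return len(requests) / window_seconds


# Hypothetical sample: seven fast successes, two server errors, one 404.
sample = (
    [Request(200, ms) for ms in (50, 60, 70, 80, 90, 100, 110)]
    + [Request(500, 900), Request(503, 1200), Request(404, 20)]
)
latencies = [r.latency_ms for r in sample]

print(round(availability_pct(sample), 2))   # 7 of 9 valid requests succeeded
print(error_rate_pct(sample))               # 2 of 10 requests returned 5xx
print(percentile(latencies, 50), percentile(latencies, 95))
print(throughput_rps(sample, window_seconds=5))
```

Note how the median latency looks healthy while the 95th percentile is dominated by the slow failures, which is why percentiles matter more than averages.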

Once you are instrumenting and collecting SLIs, you can define a Service Level Objective (SLO). An SLO is a specific target value or range for an SLI, measured over a defined compliance period (e.g., a rolling 30 days). This is the internal goal your team formally commits to achieving.

SLO Example: “Over a rolling 28-day period, the 95th percentile (p95) latency for the `/api/v1/checkout` endpoint will be less than 300ms, as measured from the load balancer.”

This statement is technically precise and powerful. It transforms ambiguous user feedback like "the site feels slow" into a concrete, measurable engineering target that aligns engineers, product managers, and stakeholders.
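An SLO like the one quoted above can be written down as data and checked mechanically. The sketch below is one possible shape, assuming latency samples have already been collected for the compliance window; the `LatencySLO` type and the sample values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencySLO:
    endpoint: str
    percentile: int      # e.g. 95 for p95
    threshold_ms: float  # observed percentile must stay below this
    window_days: int     # rolling compliance period


def observed_percentile(latencies_ms, pct):
    """Nearest-rank percentile of the collected latency samples."""
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def is_met(slo, latencies_ms):
    """True when the observed percentile is under the SLO threshold."""
    return observed_percentile(latencies_ms, slo.percentile) < slo.threshold_ms


checkout_slo = LatencySLO("/api/v1/checkout", percentile=95,
                          threshold_ms=300, window_days=28)

# Hypothetical windows: one slow request in twenty, then two in twenty.
mostly_fast = [100.0] * 19 + [900.0]
too_many_slow = [100.0] * 18 + [900.0] * 2

print(is_met(checkout_slo, mostly_fast))    # p95 is a fast request
print(is_met(checkout_slo, too_many_slow))  # p95 is now a slow request
```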

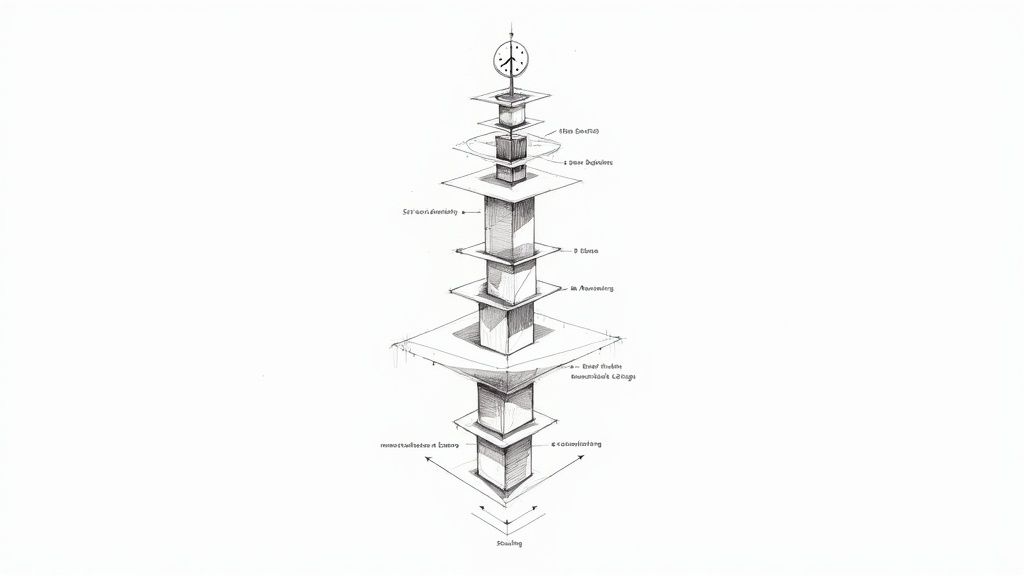

The image above illustrates the hierarchical relationship between these concepts, showing how specific indicators inform the broader objective for a service.

As you can see, SLIs are the granular, raw data points that serve as the building blocks for defining what success looks like in your SLO.

To further clarify these concepts, let's compare SLIs, SLOs, and their contractual cousin, SLAs.

SLI vs SLO vs SLA Explained

While the acronyms are similar, their functions are distinct. SLIs are the raw measurements, SLOs are the internal engineering targets based on those measurements, and SLAs are the external, contractual promises made to customers.

| Metric | What It Measures | Primary Audience | Consequence of Failure |
|---|---|---|---|
| SLI | A direct, quantitative measure of a service's behavior (e.g., p99 latency, error rate). | Internal Engineering & Product Teams | Informs SLOs; no direct consequence. |
| SLO | An internal target for an SLI over time (e.g., 99.95% availability over 30 days). | Internal Engineering & Product Teams | Triggers an Error Budget policy violation and freezes risky changes. |
| SLA | A formal, contractual agreement with customers about service performance. | External Customers & Legal Teams | Financial penalties, service credits, or contract termination. |

Understanding this hierarchy is critical. You cannot set a meaningful SLO without a well-defined SLI, and you should never commit to an SLA that is stricter than your internal SLOs.

The Power Of The Error Budget

This is where the SRE model becomes truly actionable. The moment you define an SLO, you implicitly create an Error Budget. It is the mathematical inverse of your objective—the precise, quantifiable amount of unreliability you are willing to tolerate.

If your availability SLO is 99.95%, your error budget is the remaining 0.05%. This is not an acceptance of failure; it is a budget for risk. The error budget becomes the currency for innovation.

This concept is central to how Google's SRE teams operate. SLOs serve as the north star for reliability, while error budgets determine the pace of feature deployment. For example, a 99.9% uptime SLO translates to an error budget of approximately 43.2 minutes of downtime per 30-day period (0.1% of 43,200 minutes). Once that budget is consumed, a pre-agreed policy is enacted: all non-essential feature deployments are frozen until the budget is replenished.
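The arithmetic behind a time-based error budget is easy to check. A 30-day window contains 43,200 minutes, so a 99.9% target leaves 43.2 minutes; a figure of about 43.8 minutes corresponds to an average calendar month (365/12 days). The helper below is a small sketch with a hypothetical function name.

```python
def error_budget_minutes(slo_pct, window_days):
    """Minutes of full downtime an availability SLO tolerates over a window."""
    if not 0 < slo_pct < 100:
        raise ValueError("slo_pct must be between 0 and 100 (exclusive)")
    window_minutes = window_days * 24 * 60
    return window_minutes * (100 - slo_pct) / 100


for slo in (99.0, 99.9, 99.95, 99.99):
    print(f"{slo}% over 30 days -> {error_budget_minutes(slo, 30):.2f} min")

# Average calendar month instead of a fixed 30-day window.
print(f"{error_budget_minutes(99.9, 365 / 12):.2f} min")
```

Each additional "nine" cuts the budget by a factor of ten, which is why the choice of target has such a large effect on how much risk a team can take.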

The error budget empowers development teams with data-driven autonomy. If the budget is healthy, the team can ship a new feature, run a performance experiment, or perform risky infrastructure maintenance. If a series of incidents exhausts the budget, the team’s sole priority shifts to hardening the system and restoring the SLO.
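The policy described above can be expressed as a small decision function. This sketch assumes a request-based budget (allowed failures out of total requests) and a simple two-state policy; the function names and the traffic numbers are illustrative, and a real policy would be whatever the team has agreed in advance.

```python
def budget_remaining(slo, total_requests, failed_requests):
    """Fraction of the error budget still unspent (negative if overspent).

    `slo` is a fraction such as 0.999, so (1 - slo) is the tolerated
    failure ratio for the window.
    """
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction between 0 and 1 (exclusive)")
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


def release_policy(remaining):
    """Freeze non-essential releases once the budget is exhausted."""
    return "freeze" if remaining <= 0 else "ship"


# Hypothetical window: 1,000,000 requests against a 99.9% SLO,
# which tolerates roughly 1,000 failed requests.
healthy = budget_remaining(0.999, 1_000_000, failed_requests=400)
exhausted = budget_remaining(0.999, 1_000_000, failed_requests=1_200)

print(round(healthy, 2), release_policy(healthy))
print(round(exhausted, 2), release_policy(exhausted))
```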

This creates a self-regulating system that programmatically balances innovation against stability. You can dive deeper into the nuances of service reliability engineering in our comprehensive guide.

In Site Reliability Engineering, toil is the primary adversary.

Toil is defined as manual, repetitive, automatable, tactical work that has no enduring engineering value and scales linearly with service growth. Restarting a server, manually provisioning a database, or running a script to clear a cache are all examples. Performing such a task once is an operation. Performing it weekly is toil, and it is an inefficient use of skilled engineering resources.

A core SRE principle is the imperative to automate repetitive tasks. This is not merely for convenience; it is about systematically freeing engineers to focus on high-leverage work: building, innovating, and solving complex architectural problems.

This relentless drive for automation goes beyond time savings. Every manual intervention is an opportunity for human error, introducing inconsistencies that can cascade into major outages. Automation enforces configuration consistency, hardens systems against configuration drift, and makes every process deterministic, auditable, and repeatable.

The goal is to treat operations as a software problem. The solution is not more engineers performing manual tasks; it's better automation code.

The 50% Rule for Engineering

How do you ensure you are systematically reducing toil? A foundational guideline from SRE teams at Google is the "50% Rule."

The principle is straightforward: SRE teams must cap time spent on operational work (toil and on-call duties) at 50%. The remaining 50% (or more) must be allocated to engineering projects that provide long-term value, such as building automation tools, enhancing monitoring systems, or re-architecting services for improved reliability.

This rule is not arbitrary; it establishes a self-correcting feedback loop. If toil consumes more than 50% of the team's time, it is a signal that the system is unstable or lacks sufficient automation. It becomes the team's top engineering priority to automate that toil away. This mechanism forces investment in permanent solutions over getting trapped in a reactive cycle of firefighting.
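Tracking the 50% cap only requires a rough breakdown of where time went. The sketch below assumes hours are logged per category and that the categories named `toil` and `on_call` count as operational work; both the category names and the sample week are hypothetical.

```python
OPERATIONAL = ("toil", "on_call")  # assumed category names for ops work
CAP = 0.5                          # the 50% ceiling on operational time


def operational_fraction(hours_by_category):
    """Share of logged hours spent on operational work."""
    total = sum(hours_by_category.values())
    if total == 0:
        return 0.0
    ops = sum(h for name, h in hours_by_category.items() if name in OPERATIONAL)
    return ops / total


def over_cap(hours_by_category):
    """True when operational work exceeds the cap and should be automated away."""
    return operational_fraction(hours_by_category) > CAP


# Hypothetical 40-hour week for one engineer.
week = {"toil": 14, "on_call": 10, "automation": 10, "monitoring": 6}

print(operational_fraction(week))  # 24 of 40 hours were operational
print(over_cap(week))
```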

From Manual Tasks to Self-Healing Systems

Eliminating toil is a systematic process. It begins with quantifying where engineering time is being spent, identifying the most time-consuming manual tasks, and prioritizing automation efforts based on their potential return on investment.
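One simple way to rank automation candidates by return on investment is the payback period: how many months of saved toil it takes to recover the time spent building the automation. The task names and estimates below are made up for illustration, and payback period is only one of several reasonable ranking criteria.

```python
from dataclasses import dataclass


@dataclass
class ManualTask:
    name: str
    minutes_per_run: float
    runs_per_month: float
    hours_to_automate: float  # estimated one-off engineering cost

    @property
    def hours_saved_per_month(self):
        return self.minutes_per_run * self.runs_per_month / 60

    @property
    def payback_months(self):
        return self.hours_to_automate / self.hours_saved_per_month


def prioritize(tasks):
    """Shortest payback first: the quickest wins go to the top of the list."""
    return sorted(tasks, key=lambda t: t.payback_months)


# Hypothetical estimates gathered from a toil survey.
tasks = [
    ManualTask("provision database", 120, 4, 40),
    ManualTask("clear cache", 5, 12, 4),
    ManualTask("restart stuck worker", 15, 40, 20),
]

for task in prioritize(tasks):
    print(f"{task.name}: pays back in {task.payback_months:.1f} months")
```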

The evolution from manual intervention to a fully automated, self-healing system typically follows a clear trajectory.

Common Targets for Automation:

- Infrastructure Provisioning: Manual server setup is obsolete. SREs utilize Infrastructure as Code (IaC) with tools like Terraform, Pulumi, or Ansible. This allows the entire infrastructure stack to be defined in version-controlled configuration files, so entire environments can be created or destroyed with a single command, deterministically and repeatably.

- Deployment Pipelines: Manual deployments are unacceptably risky. Automated canary or blue-green deployment strategies drastically reduce the blast radius of a faulty release. An intelligent CI/CD pipeline can deploy a change to a small subset of traffic, monitor key SLIs in real-time, and trigger an automatic rollback at the first sign of degradation, often before a human is even alerted.

- Alert Remediation: Many alerts have predictable, scriptable remediation paths. A self-healing system is designed to execute these fixes automatically. For example, a "low disk space" alert can trigger an automated runbook that archives old log files or extends a logical volume, resolving the issue without human intervention.
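The canary step in a deployment pipeline boils down to comparing the canary's SLIs with the baseline and acting on the result. The function below is a simplified sketch of that decision using error rate only; the thresholds (`max_ratio`, `floor`, `min_requests`) are hypothetical defaults, and real canary analysis usually considers several SLIs and statistical significance.

```python
def canary_verdict(baseline_errors, baseline_total,
                   canary_errors, canary_total,
                   max_ratio=2.0, floor=0.001, min_requests=100):
    """Return "wait", "promote", or "rollback" for a canary release.

    The canary is rolled back when its error rate exceeds the baseline
    error rate times `max_ratio`. `floor` keeps a near-zero baseline from
    making a single canary error trigger a rollback.
    """
    if canary_total < min_requests:
        return "wait"  # not enough traffic to judge yet
    baseline_rate = baseline_errors / baseline_total if baseline_total else 0.0
    canary_rate = canary_errors / canary_total
    allowed_rate = max(baseline_rate * max_ratio, floor)
    return "rollback" if canary_rate > allowed_rate else "promote"


# Hypothetical counters scraped from the two deployments.
print(canary_verdict(10, 10_000, 0, 20))    # too little canary traffic
print(canary_verdict(10, 10_000, 0, 500))   # canary looks healthy
print(canary_verdict(10, 10_000, 25, 500))  # canary error rate is far higher
```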

By converting manual runbooks into robust, tested automation code, you are not just eliminating toil. You are codifying your team's operational expertise, making your systems more resilient, predictable, and scalable.
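As an example of a runbook turned into code, the "low disk space" remediation mentioned earlier might look like the sketch below. It uses only the Python standard library; the 10% free-space threshold, the 7-day age limit, and the `*.log` naming convention are assumptions, and a real version would add logging, locking, and a dry-run mode.

```python
import gzip
import shutil
import time
from pathlib import Path


def free_fraction(path):
    """Fraction of the filesystem holding `path` that is still free."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total


def compress_old_logs(log_dir, max_age_days=7, now=None):
    """Gzip *.log files older than `max_age_days` and remove the originals."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    archives = []
    for log_file in sorted(Path(log_dir).glob("*.log")):
        if log_file.stat().st_mtime >= cutoff:
            continue  # still recent, leave it alone
        archive = log_file.with_name(log_file.name + ".gz")
        with open(log_file, "rb") as src, gzip.open(archive, "wb") as dst:
            shutil.copyfileobj(src, dst)
        log_file.unlink()
        archives.append(archive)
    return archives


def remediate_low_disk(log_dir, min_free=0.10):
    """Run the cleanup only when free space has dropped below the threshold."""
    if free_fraction(log_dir) >= min_free:
        return []
    return compress_old_logs(log_dir)
```

An alerting system would call `remediate_low_disk` from the alert handler and page a human only if free space is still below the threshold afterwards.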

Engineering for Resilience and Scale

True reliability cannot be added as a final step in the development lifecycle. It must be designed into the architecture of a system from the initial design phase.

This is a core tenet of SRE. We don't just react to failures; we proactively engineer services designed to withstand turbulence. This requires early engagement with development teams to influence architectural decisions, ensuring that when components inevitably fail, the user impact is minimized or eliminated.

Building for Failure

A fundamental truth of complex distributed systems is that components will fail. The SRE mindset does not chase the impossible goal of 100% uptime. Instead, it focuses on building systems that maintain core functionality even when individual components are degraded or unavailable.

This is achieved through specific architectural patterns.

A key technique is graceful degradation. Instead of a service failing completely, it intelligently sheds non-essential functionality to preserve the core user experience. For an e-commerce site, if the personalized recommendation engine fails, a gracefully degrading system would still allow users to search, browse, and complete a purchase. The critical path remains operational.
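In code, graceful degradation often amounts to wrapping the non-essential call and substituting a safe default when it fails. The sketch below follows the e-commerce example; the page structure, the function names, and the static fallback list are hypothetical.

```python
def render_product_page(product_id, fetch_recommendations,
                        fallback=("bestsellers",)):
    """Build the page even when the recommendation engine is unavailable."""
    page = {"product_id": product_id, "can_purchase": True}
    try:
        page["recommendations"] = list(fetch_recommendations(product_id))
        page["degraded"] = False
    except Exception:
        # Non-essential feature failed: fall back to a static default and
        # flag the page as degraded so the event can be counted in metrics.
        page["recommendations"] = list(fallback)
        page["degraded"] = True
    return page


def healthy_engine(product_id):
    return [f"similar-to-{product_id}"]


def broken_engine(product_id):
    raise TimeoutError("recommendation service did not respond")


print(render_product_page(42, healthy_engine))
print(render_product_page(42, broken_engine))
```

In both cases `can_purchase` stays true: the critical path never depends on the optional feature.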

Another critical pattern is the circuit breaker. When a downstream microservice begins to fail, a circuit breaker in the calling service will "trip," temporarily halting requests to the failing service and returning a cached or default response. This prevents a localized failure from causing a cascading failure that brings down the entire application stack.
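A minimal circuit breaker can be sketched in a few dozen lines. The version below is illustrative rather than production-ready: it is not thread-safe, the threshold and timeout defaults are arbitrary, and the injectable `clock` parameter exists only to make the behavior easy to test. Mature libraries add sliding windows and per-error policies.

```python
import time


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # seconds to stay open
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, fallback):
        """Call `func`, or return `fallback` while the circuit is open."""
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback  # open: fail fast, spare the dependency
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            return fallback
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0)


def flaky_inventory_lookup():
    raise ConnectionError("inventory service unavailable")


for _ in range(3):
    print(breaker.call(flaky_inventory_lookup, fallback="cached stock level"))
```

After the second failure the breaker opens, so the third call returns the cached value without touching the failing service at all.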

Planning for Unpredictable Demand

Scalability is the counterpart to resilience. A system that cannot handle a sudden increase in traffic is just as unreliable as one that crashes due to a software bug. This requires rigorous load testing and capacity planning, especially in environments with variable traffic patterns.

Modern capacity planning is more than just adding servers; it involves deep analysis of usage data to forecast future demand and provision resources just-in-time. This is where effective cloud infrastructure management services demonstrate their value, providing the observability and automation tools necessary to scale resources intelligently.
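A very simple form of demand forecasting is a straight-line trend fitted to recent peak traffic, followed by a headroom calculation. The sketch below assumes evenly spaced observations, a linear trend, and a known per-instance capacity; all numbers are hypothetical, and real traffic usually needs seasonality-aware models.

```python
import math


def linear_forecast(history, periods_ahead):
    """Least-squares trend line through `history`, extended into the future."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(history) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, history))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + periods_ahead)


def instances_needed(peak_rps, rps_per_instance, headroom=0.3):
    """Instances required to serve the peak with spare capacity on top."""
    return math.ceil(peak_rps * (1 + headroom) / rps_per_instance)


# Hypothetical monthly peak traffic in requests per second.
monthly_peaks = [1000, 1100, 1200, 1300]

forecast = linear_forecast(monthly_peaks, periods_ahead=2)
print(forecast)                                          # trend grows 100 RPS/month
print(instances_needed(forecast, rps_per_instance=200))  # with 30% headroom
```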

But how do you validate that these resilient designs work as intended? You test them by intentionally breaking them.

The Art of Controlled Destruction: Chaos Engineering

This leads to one of the most powerful practices in the SRE toolkit: chaos engineering.

Chaos engineering is the discipline of experimenting on a system in order to build confidence in the system's ability to withstand turbulent conditions in production. You intentionally inject controlled failures to proactively identify and remediate weaknesses before they manifest as user-facing outages.

While it may sound disruptive, it is a highly disciplined practice. Using frameworks like Gremlin or AWS Fault Injection Service (formerly AWS Fault Injection Simulator), SREs run "gameday" experiments that simulate real-world failures in a controlled environment.

Classic chaos experiments include:

- Terminating a VM instance or container pod: Does your auto-scaling and failover logic function correctly and within the expected timeframe?

- Injecting network latency or packet loss: How do your services behave under degraded network conditions? Do timeouts and retries function as designed?

- Saturating CPU or memory: Where are the hidden performance bottlenecks and resource limits in your application stack?
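The idea behind these experiments can be demonstrated at small scale without any chaos tooling. The sketch below wraps a dependency call with a fault injector and measures how well a retry policy masks the injected failures. It is a toy, in-process simulation and not how Gremlin or AWS tooling works; all names are hypothetical, and the random generator is seeded so runs are repeatable.

```python
import random


class FaultInjector:
    """Wraps a callable and makes a fraction of its calls fail."""

    def __init__(self, failure_rate, seed=0):
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)  # seeded for repeatable experiments
        self.injected = 0

    def wrap(self, func):
        def chaotic(*args, **kwargs):
            if self.rng.random() < self.failure_rate:
                self.injected += 1
                raise ConnectionError("injected fault")
            return func(*args, **kwargs)
        return chaotic


def call_with_retries(func, attempts=3):
    """The resilience mechanism under test: retry on connection errors."""
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except ConnectionError as exc:
            last_error = exc
    raise last_error


def run_experiment(failure_rate, requests=1000, attempts=3, seed=0):
    """Return the success ratio seen by callers while faults are injected."""
    injector = FaultInjector(failure_rate, seed)
    dependency = injector.wrap(lambda: "ok")
    successes = 0
    for _ in range(requests):
        try:
            call_with_retries(dependency, attempts)
            successes += 1
        except ConnectionError:
            pass
    return successes / requests


# Hypothesis: with 30% of calls failing, three attempts keep success high.
print(run_experiment(failure_rate=0.3))
print(run_experiment(failure_rate=0.3, attempts=1))
```

Comparing the two runs shows how much of the injected failure the retry policy absorbs, which is the kind of evidence a gameday is meant to produce.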

By embracing failure as an inevitability, SREs transform reliability from a reactive fire drill into a proactive engineering discipline. We build systems that don't just survive change—they adapt to it.

Mastering Incident Response and Postmortems

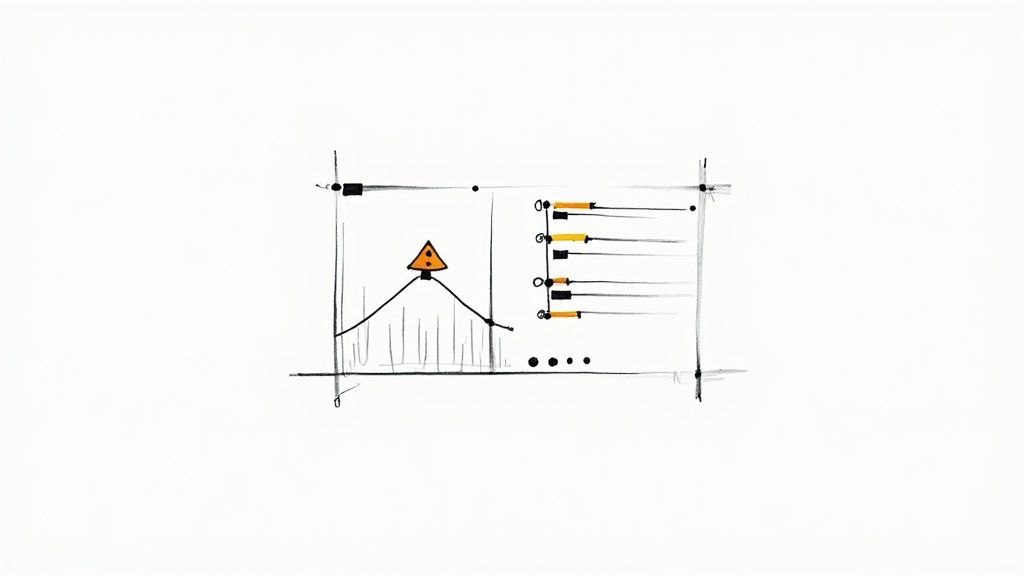

Despite robust engineering, incidents will occur. The true measure of a mature operations team is not in preventing every failure, but in how it responds. This is what differentiates a minor, contained issue from a major outage that exhausts your error budget and erodes user trust.

In Site Reliability Engineering, incident response is not a chaotic scramble. It is a structured, well-rehearsed practice. When a high-severity alert fires, the singular goal is service restoration. This requires a pre-defined playbook that eliminates ambiguity in high-stress situations. A robust plan includes clear on-call rotation schedules, severity level (SEV) definitions to classify impact, and a command structure to prevent the response from descending into chaos.

Establishing an Incident Command Structure

During a major outage, ambiguity is the enemy. A formal command structure, with clearly defined roles and responsibilities, is the best defense. It ensures communication is streamlined, decisions are decisive, and every team member understands their specific duties.

This structure allows subject matter experts to focus on technical remediation without being distracted by status updates or coordination overhead.

The table below outlines the key roles found in most mature incident response frameworks.

Incident Response Roles and Responsibilities

| Role | Primary Responsibility | Key Actions |
|---|---|---|
| Incident Commander (IC) | Leads the overall response, making strategic decisions and keeping the effort focused on resolution. | Declares the incident, assembles the team, and delegates tasks. Not necessarily the most senior engineer. |
| Communications Lead | Manages all internal and external communication about the incident. | Drafts status updates for executive stakeholders and posts to public status pages. |
| Operations Lead | Owns the technical investigation and coordinates remediation efforts. | Directs engineers in diagnosing the issue, analyzing telemetry (logs, metrics, traces), and applying fixes. |

This structure is optimized for efficiency under pressure. The IC acts as the coordinator, the Comms Lead manages information flow, and the Ops Lead directs the technical resolution. The clear separation of duties prevents miscommunication and keeps the focus on recovery.

The Power of Blameless Postmortems

The incident is resolved. The service is stable. The most critical work is about to begin. The blameless postmortem is a core site reliability engineering principle that transforms every incident into an invaluable learning opportunity.

The entire philosophy is predicated on one idea:

The goal is to understand the systemic causes of an incident, not to assign individual blame. Human error is a symptom of a flawed system, not the root cause. A process that allows a single human mistake to have a catastrophic impact is the real vulnerability to be fixed.

Removing the fear of retribution fosters psychological safety, empowering engineers to be completely transparent about the contributing factors. This unfiltered, factual feedback is essential for uncovering deep-seated weaknesses in your technology, processes, and automation.

A thorough postmortem reconstructs a precise timeline of events, identifies all contributing factors (technical and procedural), and generates a set of prioritized, actionable remediation items with owners and deadlines. This creates a powerful feedback loop where every outage directly hardens the system against that entire class of failure. This is not about fixing bugs; it is about making continuous, systemic improvement a reality.
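The outputs of a postmortem described above (timeline, contributing factors, and owned action items) map naturally onto a small data structure, which makes follow-up easy to track. The field names and the sample incident below are hypothetical; many teams keep the same information in a document template or ticketing system instead.

```python
from dataclasses import dataclass, field
from datetime import date, datetime


@dataclass
class ActionItem:
    description: str
    owner: str
    due: date
    priority: int  # 1 is the most urgent
    done: bool = False


@dataclass
class Postmortem:
    title: str
    timeline: list = field(default_factory=list)              # (datetime, event)
    contributing_factors: list = field(default_factory=list)  # systemic causes
    action_items: list = field(default_factory=list)

    def add_event(self, when, what):
        """Record an event and keep the timeline in chronological order."""
        self.timeline.append((when, what))
        self.timeline.sort(key=lambda event: event[0])

    def overdue(self, today):
        """Open action items past their deadline, most urgent first."""
        late = [a for a in self.action_items if not a.done and a.due < today]
        return sorted(late, key=lambda a: a.priority)


pm = Postmortem("Checkout latency spike")
pm.add_event(datetime(2024, 3, 1, 10, 20), "Rollback completed, latency recovered")
pm.add_event(datetime(2024, 3, 1, 10, 5), "p95 latency alert fired")
pm.contributing_factors.append("Deploy pipeline had no automated canary check")
pm.action_items.append(
    ActionItem("Add canary analysis to pipeline", "platform-team",
               date(2024, 3, 15), priority=1))
pm.action_items.append(
    ActionItem("Document rollback procedure", "checkout-team",
               date(2024, 4, 1), priority=2))

print([what for _, what in pm.timeline])
print([a.description for a in pm.overdue(date(2024, 3, 20))])
```

Note that the contributing factor names a gap in the process, not a person, in keeping with the blameless approach.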

Adopting SRE Principles in Your Organization

A persistent myth suggests Site Reliability Engineering is only for hyper-scale companies like Google or Netflix. This is a misconception. Organizations of all sizes are successfully adapting and implementing these principles to improve their operational maturity.

The SRE journey is not a monolithic roadmap. A large enterprise might establish a dedicated SRE organization. A startup or mid-sized company might embed SRE responsibilities within existing DevOps or platform engineering teams. The power of SRE lies in its flexibility as a mindset, not its rigidity as an organizational chart.

Charting Your SRE Adoption Path

How do you begin? A proven approach is the people, process, and technology framework. This model provides a structured way to introduce SRE concepts incrementally without causing organizational disruption. The goal is to progressively weave the SRE mindset into your existing engineering culture.

This is not a new trend. By 2016, SRE had already expanded beyond its origins. While Google famously employed over 1,000 site reliability engineers, other innovators like Netflix, Airbnb, and LinkedIn had already adapted the model. They either built specialized teams or, as is now more common, integrated SRE responsibilities directly into their DevOps and platform roles.

The key takeaway is that you do not need a large, dedicated team to start. Begin by selecting a single, business-critical service, defining its SLOs, and empowering a team to own its reliability.

This small, focused effort can create a powerful ripple effect. Once one team experiences firsthand how data-driven reliability targets and error budgets improve both their work-life balance and the customer experience, the culture begins to shift organically.

If you are considering how to introduce this level of stability and performance to your systems, exploring specialized SRE services can provide the roadmap and expertise to accelerate your adoption. It’s about building a resilient, scalable foundation for future innovation.

Alright, you've absorbed the core principles of SRE. Now let's address some of the most common implementation questions.

SRE vs. DevOps: What's the Real Difference?

This is the most frequent point of confusion. Both SRE and DevOps aim to solve the same problem: breaking down organizational silos between development and operations to deliver better software, faster.

The clearest distinction is this: DevOps is the cultural philosophy—the "what." SRE is a specific, prescriptive implementation of that philosophy—the "how."

A popular analogy states, "If DevOps is an interface, SRE is a class that implements it."

SRE provides the concrete engineering practices to make the DevOps philosophy tangible. It introduces hard data and strict rules—like SLOs, error budgets, and the 50% cap on toil—that translate broad cultural goals into specific, measurable engineering disciplines.

Can We Do SRE If We're Just a Small Startup?

Yes, absolutely. You may not need a formal "SRE Team," but you can and should adopt the SRE mindset. The key is to start small and focus on high-impact, low-effort practices that yield the greatest reliability return.

You don't need a massive organizational change. Start with these three actions:

- Define one simple SLO: Choose a single, critical user journey (e.g., login API, checkout flow) and establish a clear, measurable reliability target for its latency or availability.

- Automate one painful task: Identify the most hated, error-prone manual task your team performs. Write a script to automate it and reclaim that engineering time.

- Run blameless postmortems: The next time an incident occurs, gather the team to analyze the systemic causes. Focus on the process failures, not the people involved.

These initial steps activate the core feedback loops of SRE without requiring a large organizational investment.

What's the Very First Thing I Should Do to Get Started?

If you do only one thing, do this: Select your most business-critical service and define its first Service Level Objective (SLO).

This single action is a powerful catalyst. It forces a cascade of essential conversations that are foundational to building a reliable system.

To set an SLO, you must first define what reliability means to your users by instrumenting SLIs. Then, you must gain consensus from all stakeholders on a specific, measurable target. Once that SLO is defined, you automatically get an error budget. That budget becomes your data-driven framework for balancing feature velocity against stability. It all begins with that one number.
