Linkerd vs Istio: A Technical Comparison for Engineers

A technical Linkerd vs Istio comparison for engineers. We analyze architecture, performance, security, and complexity to help you choose the right service mesh.

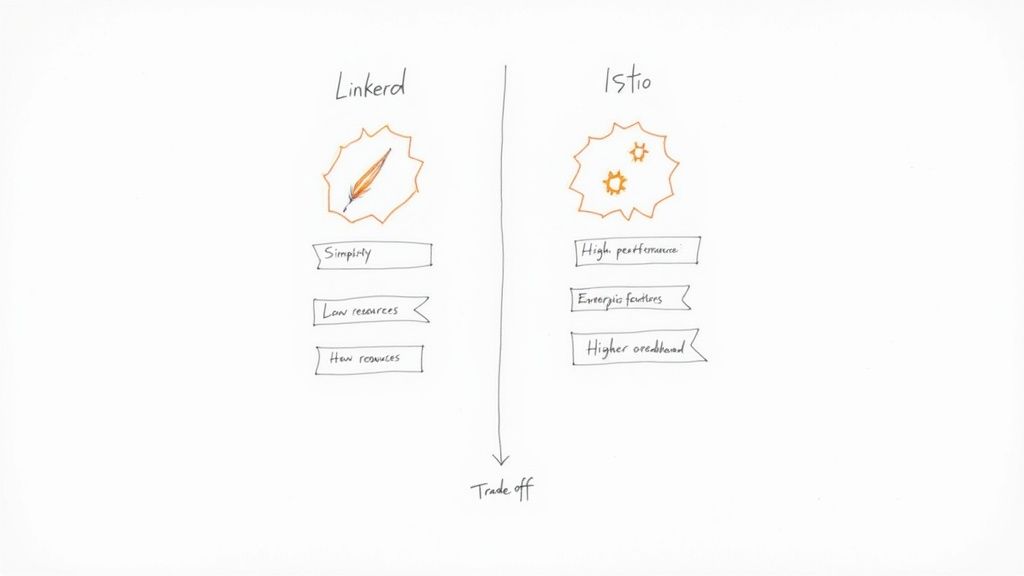

The fundamental choice between Linkerd and Istio reduces to a classic engineering trade-off: operational simplicity versus feature-rich extensibility.

For teams that prioritize minimal resource overhead, predictable performance, and rapid implementation, Linkerd is usually the stronger fit. Conversely, for organizations with complex, heterogeneous environments and a dedicated platform engineering team, Istio provides a deeply customizable, albeit operationally demanding, control plane.

Choosing Your Service Mesh: A Technical Guide

Selecting a service mesh is a significant architectural commitment that directly impacts the reliability, security, and observability of your Kubernetes workloads. The decision hinges on a critical trade-off: operational simplicity versus feature depth. Linkerd and Istio represent opposing philosophies on this spectrum.

Linkerd is engineered from the ground up for simplicity and efficiency. It delivers core service mesh functionalities—like mutual TLS (mTLS) and Layer 7 observability—with a "just works" operational model. Its lightweight, Rust-based "micro-proxies" are purpose-built to minimize performance overhead, a critical factor in latency-sensitive applications.

Istio, conversely, leverages the powerful and feature-complete Envoy proxy. It offers an extensive API for fine-grained traffic management, advanced security policy enforcement, and broad third-party extensibility. This flexibility is invaluable for organizations that require granular control over their service-to-service communication, but it necessitates significant investment in platform engineering expertise to manage its complexity.

The core dilemma in the Linkerd vs. Istio debate is not determining which mesh is "better" in the abstract. It is about aligning a specific tool with your organization's technical requirements, operational maturity, and engineering resources. The cost of advanced features must be weighed against the operational overhead required to maintain them.

Linkerd vs Istio At a Glance

This table provides a high-level technical comparison, highlighting the core philosophical and architectural differences that inform the choice between the two service meshes.

| Attribute | Linkerd | Istio |
|---|---|---|
| Core Philosophy | Simplicity, performance, and operational ease. | Extensibility, feature-richness, and deep control. |
| Ease of Use | Designed for a "just works" experience. Simple to operate. | Steep learning curve; requires significant expertise. |
| Data Plane Proxy | Ultra-lightweight, Rust-based "micro-proxy". | Feature-rich, C++-based Envoy proxy. |
| Resource Use | Very low CPU and memory footprint. | Significantly higher resource requirements. |
| mTLS | Enabled by default with zero configuration. | Highly configurable via detailed policy CRDs. |
| Primary Audience | Teams prioritizing velocity and low operational overhead. | Enterprises with complex networking and security needs. |

This overview sets the stage for a deeper analysis of architecture, features, and operational realities.

Comparing Service Mesh Architectures

The architectural design of a service mesh directly dictates its performance profile, resource consumption, and operational complexity. Linkerd and Istio present two fundamentally different approaches to managing service-to-service communication within a cluster. A clear understanding of these architectural distinctions is critical for selecting the right tool.

Understanding how these advanced networking tools function as critical components within your overall tech stack is the first step. Linkerd is architected around a principle of minimalism, featuring a lightweight control plane and a highly efficient data plane.

Istio adopts a more comprehensive, feature-rich architecture. This design prioritizes flexibility and granular control, which inherently results in a more complex system with a larger resource footprint.

Linkerd: The Minimalist Control Plane and Micro-Proxy

Linkerd's control plane is intentionally lean, comprising a small set of core components responsible for configuration, telemetry aggregation, and identity management. This minimalist design simplifies operations and significantly reduces the memory and CPU overhead required to run the mesh.

The key differentiator for Linkerd is its data plane, which utilizes an ultra-lightweight "micro-proxy" written in Rust. This proxy is not a general-purpose networking tool; it is purpose-built for core service mesh functions: mTLS, telemetry, and basic traffic shifting. By avoiding the feature bloat of a general-purpose proxy, the Linkerd proxy adds negligible latency overhead to service requests.

Proxy injection is straightforward: annotating a pod with linkerd.io/inject: enabled triggers a mutating admission webhook that automatically adds the linkerd-init init container and the linkerd-proxy sidecar.

    # Example Pod Spec after Linkerd Injection
    spec:
      containers:
      - name: my-app
        image: my-app:1.0
      # --- Injected by Linkerd ---
      - name: linkerd-proxy
        image: cr.l5d.io/linkerd/proxy:stable-2.14.0
        ports:
        - name: linkerd-proxy
          containerPort: 4143
        # ... other proxy configurations
      initContainers:
      # The init container sets up iptables rules to redirect traffic through the proxy
      - name: linkerd-init
        image: cr.l5d.io/linkerd/proxy-init:v2.0.0
        args:
        - --incoming-proxy-port
        - '4143'
        # ... other args for traffic redirection
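To make the trigger side concrete, here is a minimal sketch of a Deployment that opts in to injection. The container name and image are taken from the example above; the replica count and the app label are illustrative assumptions, and only the annotation is Linkerd-specific.

```yaml
# Hypothetical Deployment; everything except the annotation is plain Kubernetes.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
      annotations:
        # Read by Linkerd's mutating admission webhook when the pod is created
        linkerd.io/inject: enabled
    spec:
      containers:
      - name: my-app
        image: my-app:1.0
```

The same annotation can be set on a Namespace to opt in every pod created in it. Pods that already exist receive the proxy only after they are recreated.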

The choice of Rust for Linkerd's proxy is a significant architectural decision. It provides memory safety guarantees without the performance overhead of a garbage collector, resulting in a smaller, faster, and more secure data plane specifically optimized for the service mesh role.

Istio: The Monolithic Control Plane and Envoy Proxy

Istio's architecture centers on a monolithic control plane binary, istiod, which consolidates the functions of formerly separate components like Pilot, Citadel, and Galley. This binary is responsible for service discovery, configuration propagation (via xDS APIs), and certificate management. While this consolidation simplifies deployment compared to older Istio versions, istiod remains a substantial, resource-intensive component.

The data plane is powered by the Envoy proxy, a high-performance, C++-based proxy developed at Lyft. Envoy is exceptionally powerful and extensible, supporting a vast array of protocols and advanced traffic management features far beyond Linkerd's scope. This power comes at the cost of significant resource consumption and configuration complexity. Effective Istio administration often requires deep expertise in Envoy's configuration and operational nuances, contributing to Istio's steep learning curve.

This architectural difference has a direct, measurable impact on performance. Benchmarks consistently demonstrate Linkerd's efficiency advantage. In production-grade load tests running 2,000 requests per second (RPS), Linkerd exhibited 163 milliseconds lower P99 latency than Istio.

Furthermore, Linkerd's Rust-based proxy consumes substantially less CPU and memory, often 40-60% fewer resources than Envoy. The Linkerd control plane can operate with 200-300MB of memory, whereas Istio's istiod typically requires 1GB or more in a production environment. You can review detailed findings on service mesh performance for a comprehensive analysis. This level of efficiency is critical for organizations implementing microservices architecture design patterns, where minimizing per-pod overhead is paramount.

Analyzing Core Traffic Management Features

Traffic management is where the core philosophies of Linkerd and Istio become most apparent. Both meshes can implement essential patterns like canary releases and circuit breaking, but their respective APIs and operational models differ significantly.

This choice directly impacts your team's daily workflows and the overall complexity of your CI/CD pipeline. Linkerd leverages standard Kubernetes resources and supplements them with its own lightweight Custom Resource Definitions (CRDs), whereas Istio introduces a powerful but complex set of its own CRDs for traffic engineering.

Canary Releases: A Practical Comparison

Implementing a canary release is a primary use case for a service mesh. The objective is to direct a small percentage of production traffic to a new service version to validate its stability before a full rollout.

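With Linkerd, this is typically orchestrated by a progressive delivery tool like Flagger or Argo Rollouts. These tools manage the Kubernetes Service and Deployment objects for the stable and canary versions and adjust the traffic weights between them through a routing resource such as an SMI TrafficSplit or a Gateway API HTTPRoute, depending on the Linkerd release. The mesh observes these changes and enforces the traffic split, keeping the logic declarative and Kubernetes-native.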

Istio, in contrast, requires explicit traffic routing rules defined using its VirtualService and DestinationRule CRDs. This provides powerful, fine-grained control but adds a layer of mesh-specific configuration that must be managed.

Consider a simple 90/10 traffic split.

Istio VirtualService for Canary Deployment

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-service-vs
    spec:
      hosts:
      - my-service
      http:
      - route:
        - destination:
            host: my-service
            subset: v1
          weight: 90
        - destination:
            host: my-service
            subset: v2
          weight: 10
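The v1 and v2 subsets referenced by this VirtualService have to be declared somewhere. A minimal sketch of the companion DestinationRule follows; the resource name and the version pod labels are assumptions chosen for illustration, not something the manifest above prescribes.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: my-service-dr        # hypothetical name
spec:
  host: my-service
  subsets:
  # Each subset selects pods of my-service by label
  - name: v1
    labels:
      version: v1            # assumed pod label on the stable Deployment
  - name: v2
    labels:
      version: v2            # assumed pod label on the canary Deployment
```

Without a matching subset definition, a route that names the subset has no endpoints to send traffic to, so the two resources are normally applied together.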

This YAML explicitly instructs the Istio data plane to route 90% of traffic for the my-service host to pods in the v1 subset and 10% to the v2 canary subset. The subsets themselves are defined in a companion DestinationRule, typically by pod label. This level of granular control is a key strength of Istio, but it requires learning and maintaining mesh-specific APIs. Linkerd's approach, in which external controllers drive the traffic shift through Kubernetes-native objects, feels less intrusive to teams already proficient with kubectl.
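For comparison, the Linkerd-side split is normally written by the progressive delivery tool rather than by hand. As a rough sketch of what such a resource can look like, assume a Linkerd release with Gateway API HTTPRoute support and two hypothetical Services, my-service-v1 and my-service-v2, listening on port 8080. The apiVersion depends on which Gateway API CRDs are installed in the cluster.

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: my-service-split     # hypothetical name
  namespace: default
spec:
  parentRefs:
  # Attach the route to the Service that clients actually call
  - name: my-service
    kind: Service
    group: core              # form used in Linkerd's documentation for Service parents
    port: 8080               # assumed port
  rules:
  - backendRefs:
    - name: my-service-v1    # hypothetical stable Service
      port: 8080
      weight: 90
    - name: my-service-v2    # hypothetical canary Service
      port: 8080
      weight: 10
```

The weights are relative, so a tool like Flagger can move from 90/10 toward 0/100 by rewriting only the two weight fields.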

Retries and Timeouts

Configuring reliability patterns like retries and timeouts further highlights the philosophical divide. Both meshes excel at preventing cascading failures by intelligently retrying transient errors or enforcing request timeouts.

Linkerd manages this behavior via a ServiceProfile CRD. This resource is applied to a standard Kubernetes Service and provides directives to Linkerd's proxies regarding request handling for that service.

Linkerd ServiceProfile for Retries

    apiVersion: linkerd.io/v1alpha2
    kind: ServiceProfile
    metadata:
      name: my-service.default.svc.cluster.local
    spec:
      routes:
      - name: POST /api/endpoint
        condition:
          method: POST
          pathRegex: /api/endpoint
        isRetryable: true
        timeout: 200ms

In this configuration, only POST requests to the specified path are marked as retryable, with a strict 200ms timeout. The rule is scoped, declarative, and directly associated with the Kubernetes Service it configures.
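Retries amplify load on a struggling service, so a ServiceProfile can also carry a retry budget that caps how much extra traffic retries may add. The sketch below extends the same profile; the namespace and the budget values are illustrative assumptions, not recommendations.

```yaml
apiVersion: linkerd.io/v1alpha2
kind: ServiceProfile
metadata:
  name: my-service.default.svc.cluster.local
  namespace: default           # assumed to match the Service's namespace
spec:
  retryBudget:
    retryRatio: 0.2            # retries may add at most 20% on top of original requests
    minRetriesPerSecond: 10    # floor that still permits retries at low traffic
    ttl: 10s                   # window over which the ratio is calculated
  routes:
  - name: POST /api/endpoint
    condition:
      method: POST
      pathRegex: /api/endpoint
    isRetryable: true
    timeout: 200ms
```

A budget expressed as a ratio scales with traffic, which is the design choice that distinguishes it from a fixed per-request attempt count.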

Istio again utilizes its VirtualService CRD, which offers a more extensive set of matching conditions and retry policies.

Istio VirtualService for Retries

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-service-vs
    spec:
      hosts:
      - my-service
      http:
      - route:
        - destination:
            host: my-service
        retries:
          attempts: 3
          perTryTimeout: 2s
          retryOn: 5xx

Here, Istio defines a broader policy: retry any request to my-service up to three times if it fails with a 5xx status code. This is powerful but decouples the reliability configuration from the service manifest itself.
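The same VirtualService can be narrowed to mirror the scoped Linkerd rule. The following is a sketch under the assumption that only POST requests under /api/endpoint should be retried; the 6s overall timeout is an illustrative value sized to cover three 2s attempts.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: my-service-vs
spec:
  hosts:
  - my-service
  http:
  # Rule 1: explicit retry policy for POST requests under /api/endpoint
  - match:
    - method:
        exact: POST
      uri:
        prefix: /api/endpoint
    route:
    - destination:
        host: my-service
    timeout: 6s              # overall deadline across all attempts (assumed value)
    retries:
      attempts: 3
      perTryTimeout: 2s
      retryOn: 5xx
  # Rule 2: all other requests, with no explicit policy (Istio's defaults apply)
  - route:
    - destination:
        host: my-service
```

Rules are evaluated in order, so the catch-all route has to come last. Retrying POST requests is only safe when the endpoint is idempotent.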

The key takeaway is technical: Linkerd's traffic management is service-centric, designed as a lightweight extension of the Kubernetes resource model. Istio's is route-centric, providing a powerful, independent API for network traffic control that operates alongside Kubernetes APIs.

Observability: Golden Metrics vs. Deep Telemetry

The two meshes have distinct observability philosophies. Linkerd provides the "golden metrics"—success rate, requests per second, and latency—for all HTTP, gRPC, and TCP traffic out of the box, with zero configuration. For many teams, this provides immediate, actionable insight into service health and performance.

Linkerd's lower resource footprint and latency are consistent with this philosophy of providing essential metrics with minimal overhead.
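To illustrate how a golden metric is derived, here is a sketch of a Prometheus recording rule that computes per-deployment success rate from the Linkerd proxy's response_total counter. It assumes the metrics are scraped with the labels that Linkerd's own Prometheus configuration attaches (deployment, namespace, direction); the group and record names are hypothetical.

```yaml
groups:
- name: linkerd-golden-metrics          # hypothetical group name
  rules:
  # Success rate = successful inbound responses / all inbound responses
  - record: deployment:linkerd_success_rate:ratio_rate1m   # hypothetical record name
    expr: |
      sum by (namespace, deployment) (
        rate(response_total{classification="success", direction="inbound"}[1m])
      )
      /
      sum by (namespace, deployment) (
        rate(response_total{direction="inbound"}[1m])
      )
```

Request rate and latency follow the same pattern from the proxy's request and latency-histogram series.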

Istio, leveraging the extensive capabilities of the Envoy proxy, can generate a vast amount of detailed telemetry. While requiring more configuration, this allows for highly customized dashboards and deep, protocol-specific analysis. For teams requiring this level of insight, a robust Prometheus service monitoring setup is essential to effectively capture and analyze this rich data stream.

Implementing Security and mTLS

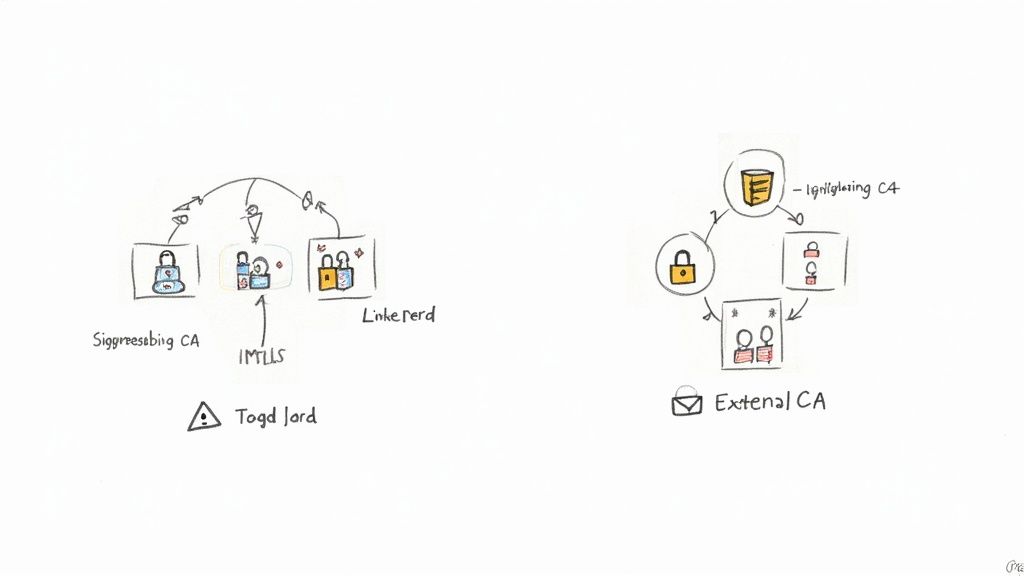

Securing inter-service communication is a primary driver for service mesh adoption. Mutual TLS (mTLS) encrypts all in-cluster traffic, mitigating the risk of eavesdropping and man-in-the-middle attacks. The Linkerd vs Istio comparison reveals two distinct approaches to implementing this critical security control.

Linkerd's philosophy is "secure by default," whereas Istio provides a flexible, policy-driven security model. A service mesh is a foundational component for a modern security posture, enabling mTLS and the fine-grained access controls required for a Zero Trust Architecture Design.

Linkerd and Zero-Trust by Default

Linkerd's approach to security prioritizes simplicity and immediate enforcement. Upon installation, the Linkerd control plane deploys its own lightweight certificate authority. When a service is added to the mesh, mTLS is automatically enabled for all traffic to and from its pods.

This "zero-trust by default" model requires no additional configuration to achieve baseline traffic encryption.

- Automatic Certificate Management: The Linkerd control plane issues and rotates short-lived workload certificates transparently, so day-to-day operation requires no manual certificate handling.

- SPIFFE Identity: Each workload is issued a cryptographically verifiable identity compliant with the SPIFFE standard, based on its Kubernetes Service Account.

- Operational Simplicity: The operational burden is minimal. Encryption is an inherent property of the mesh, not a feature that requires explicit policy configuration.
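One nuance is worth spelling out: automatic mTLS applies to traffic between meshed pods, while connections from unmeshed clients are still accepted under Linkerd's default inbound policy. A minimal sketch of tightening that for one namespace, assuming a namespace called my-app-ns, uses an annotation rather than a policy resource:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app-ns            # hypothetical namespace
  annotations:
    linkerd.io/inject: enabled
    # Only accept inbound connections from clients with a mesh (mTLS) identity
    config.linkerd.io/default-inbound-policy: all-authenticated
```

The setting is read when the proxy is injected, so existing pods need to be recreated before it takes effect.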

This model is ideal for teams that need to meet security and compliance requirements quickly without dedicating engineering resources to managing complex security policies.

Linkerd's security philosophy posits that encryption should be a non-negotiable default, not an optional feature. By making mTLS automatic and transparent, it eliminates the risk of human error leaving service communication unencrypted in production.

Istio and Flexible Security Policies

Istio provides a more granular and powerful security toolkit, but this capability requires explicit configuration. Rather than being universally "on," mTLS in Istio is managed through specific Custom Resource Definitions (CRDs).

The primary resource for this is PeerAuthentication. This CRD allows administrators to define mTLS policies at various scopes: mesh-wide, per-namespace, or per-workload.

The mTLS mode can be configured as follows:

- PERMISSIVE: The proxy accepts both mTLS-encrypted and plaintext traffic. This mode is essential for incremental migration to the service mesh.

- STRICT: Only mTLS-encrypted traffic is accepted; all plaintext connections are rejected.

- DISABLE: mTLS is disabled for the specified workload(s).

To enforce strict mTLS for an entire namespace, you would apply the following manifest:

    apiVersion: security.istio.io/v1beta1
    kind: PeerAuthentication
    metadata:
      name: "default"
      namespace: "my-app-ns"
    spec:
      mtls:
        mode: STRICT
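The per-workload scope works the same way, with a selector added. As a sketch, assume one workload in that namespace, labeled app: legacy-api, still receives plaintext traffic during a migration; both the label and the resource name are hypothetical.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: legacy-api-permissive   # hypothetical name
  namespace: my-app-ns
spec:
  # Applies only to pods carrying this label; the rest of the
  # namespace keeps the namespace-wide STRICT policy
  selector:
    matchLabels:
      app: legacy-api           # assumed workload label
  mtls:
    mode: PERMISSIVE
```

The most specific policy wins, so the workload-level exception overrides the namespace default without weakening it for other services.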

This level of control is a key differentiator in the Linkerd vs Istio debate. Istio also supports integration with external Certificate Authorities, such as HashiCorp Vault or an internal corporate PKI, a common requirement in large enterprises.

For organizations subject to strict compliance regimes, applying Kubernetes security best practices becomes a matter of defining explicit, auditable Istio policies. While this requires more initial setup, it provides platform teams with precise control over the security posture of every service, making it better suited for environments with complex and varied security requirements.
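An explicit, auditable policy of this kind might look like the following AuthorizationPolicy sketch. It assumes a workload labeled app: my-service that should only accept GET and POST requests from a hypothetical frontend service account in the same namespace.

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: allow-frontend          # hypothetical name
  namespace: my-app-ns
spec:
  selector:
    matchLabels:
      app: my-service           # assumed workload label
  action: ALLOW
  rules:
  - from:
    - source:
        # mTLS identity of the caller, derived from its service account
        principals: ["cluster.local/ns/my-app-ns/sa/frontend"]
    to:
    - operation:
        methods: ["GET", "POST"]
```

Because the principal comes from the peer certificate, this rule depends on mTLS being enforced for the workload. Once any ALLOW policy selects a workload, requests that match no rule are denied.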

When you're picking a service mesh, the initial install is just the beginning. The real story unfolds during "Day 2" operations—the endless cycle of upgrades, debugging, and routine maintenance. This is where the true cost of ownership for Linkerd vs. Istio becomes painfully obvious, and where their core philosophies directly hit your team's sanity and budget.

Linkerd is built around a "just works" philosophy, which almost always means less operational headache. Its architecture is deliberately simple, making upgrades feel less like a high-wire act and debugging far more straightforward. For any team that doesn't have a dedicated platform engineering squad, Linkerd’s simplicity is a game-changer. It lets developers get back to building features instead of fighting with the mesh.

Istio, on the other hand, comes with a much steeper learning curve and a heavier operational load. All its power is in its deep customizability, but that power demands specialized expertise to manage without causing chaos. Teams running Istio in production typically need dedicated engineers who live and breathe its complex CRDs, understand the quirks of the Envoy proxy, and can navigate its deep ties into the Kubernetes networking stack.

The Real Cost of Upgrades and Maintenance

Upgrading your service mesh is one of those critical, high-stress moments. A bad upgrade can take down traffic across your entire cluster. Here, the difference between the two is night and day.

Linkerd's upgrade process is usually a non-event. The linkerd upgrade command does most of the work, and because its components are simple and decoupled, the risk of some weird cascading failure is low. The project's focus on a minimal, solid feature set means fewer breaking changes between versions, which translates to a predictable and quick maintenance routine.

Istio upgrades are a much bigger deal. While the process has gotten better with tools like istioctl upgrade, the sheer number of moving parts—from the istiod control plane to every single Envoy sidecar and a whole zoo of CRDs—creates way more things that can go wrong. It’s common practice to recommend canary control plane deployments for Istio just to lower the risk, which is yet another complex operational task to manage.
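In a canary control plane upgrade, the new istiod is installed under a revision name alongside the old one, and namespaces are moved over one at a time by label. A minimal sketch, assuming a revision called canary:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app-ns
  labels:
    # Hypothetical revision name; it must match the revision
    # the new control plane was installed with
    istio.io/rev: canary
```

The older istio-injection label takes precedence over istio.io/rev, so it has to be removed from the namespace first, and workloads pick up the new sidecar only after their pods are restarted.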

The operational burden isn't just about engineering hours; it's about cognitive load. Linkerd is designed to stay out of your way, minimizing the mental overhead required for daily management. Istio demands constant attention and deep expertise to operate reliably at scale.

Ecosystem Integration and Support

Both Linkerd and Istio play nice with the cloud-native world, especially core tools like Prometheus and Grafana. Linkerd gives you out-of-the-box dashboards that light up its "golden metrics" with zero setup. Istio offers far more extensive telemetry that you can use to build incredibly detailed custom dashboards, but that's on you to set up and maintain.

When it comes to ingress controllers, both are flexible. Istio has its own powerful Gateway resource that can act as a sophisticated entry point for traffic. Linkerd, true to form, just works seamlessly with any standard ingress controller you already use, like NGINX, Traefik, or Emissary-ingress.
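As a sketch of the Istio side, a Gateway resource configures the listener on the ingress proxy. The hostname below is a placeholder, and the selector assumes the default istio: ingressgateway label on the ingress gateway pods.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: my-gateway              # hypothetical name
spec:
  selector:
    istio: ingressgateway       # assumed label of the ingress gateway deployment
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "my-service.example.com"  # placeholder hostname
```

A Gateway only opens the port. Routing happens in a VirtualService that lists the gateway under its gateways field. With Linkerd, the equivalent step is usually just meshing the existing ingress controller's pods.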

The community and support landscape is another big piece of the puzzle. Both projects are CNCF-graduated and have lively communities. But you can see their philosophies reflected in their adoption trends. Linkerd has seen explosive growth, particularly among teams that value simplicity and getting things done fast.

According to a CNCF survey analysis, Linkerd saw a 118% overall growth rate between 2020 and 2021, with its production usage surpassing Istio's in North America and Europe. More recent 2024 data reports that 73% of survey participants chose Linkerd for current or planned use, compared to 34% for Istio. This points to an industry shift toward less complex tools. The data suggests that for a large number of use cases, Linkerd's minimalist approach gets you to value faster with a lower long-term operational bill.

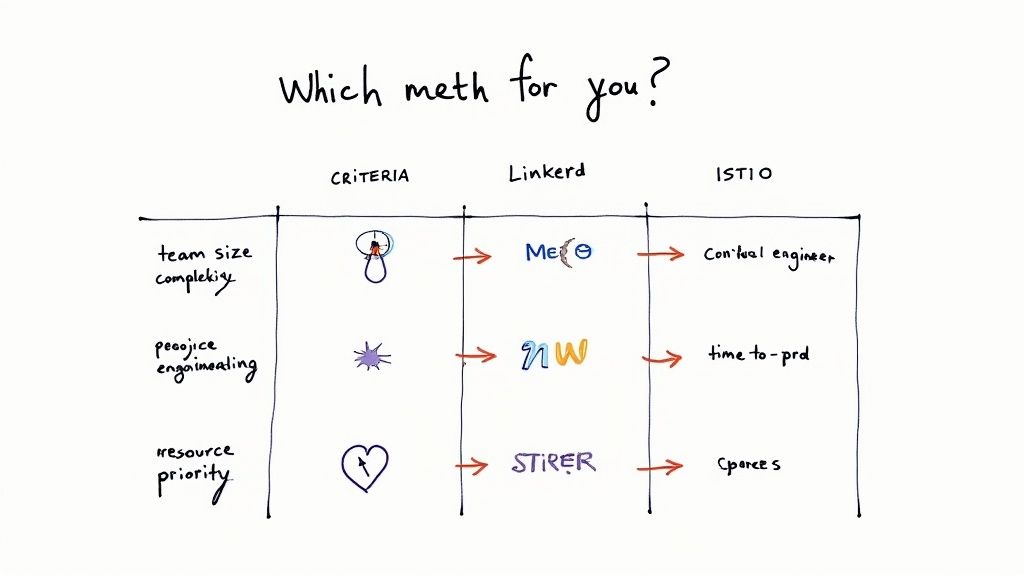

Making The Right Choice: A Decision Framework

Here's the bottom line: choosing between Linkerd and Istio isn't really a feature-to-feature battle. It’s a strategic decision that hinges on your team's expertise, your company's goals, and how much operational horsepower you're willing to invest.

This framework is about getting past the spec sheets. It’s about asking the right questions. Are you a lean team trying to ship fast and need something that just works? Or are you a large enterprise with a dedicated platform team ready to tame a complex beast for ultimate control? Your answer is your starting point.

When To Bet On Linkerd

Linkerd is the pragmatic pick. It's for teams who see a service mesh as a utility—something that should deliver immediate value without becoming a full-time job. Speed, simplicity, and low overhead are the name of the game here.

You should seriously consider Linkerd if your organization:

- Is just starting with service mesh: Its famous "just works" installation and automatic mTLS mean you get security and observability right out of the box. It’s the perfect on-ramp for a first-time adoption.

- Cares deeply about performance: If your application is sensitive to every millisecond of latency, Linkerd’s feather-light Rust proxy gives you a clear edge with a much smaller resource footprint.

- Needs to move fast: The goal is to get core service mesh benefits—like traffic visibility and encrypted communications—in days, not months. Linkerd’s simplicity gets you there quicker and with less risk.

Linkerd's core philosophy is simple: deliver 80% of the service mesh benefits for 20% of the operational pain. It's built for teams that need to focus on their applications, not on managing the mesh.

When To Go All-In On Istio

Istio is a powerhouse. Its strength is its incredible flexibility and deep feature set, making it the go-to for complex, large-scale environments with very specific, demanding needs. Think of it as a toolkit for surgical control over your network.

Istio is the logical choice when your organization:

- Has complex networking puzzles to solve: For multi-cluster, multi-cloud, or hybrid setups that demand sophisticated routing, Istio’s Gateways and VirtualServices offer control that is second to none.

- Manages more than just HTTP/gRPC: If you need protocol-aware handling for traffic such as MongoDB connections or other non-HTTP protocols, beyond plain TCP proxying, Istio's Envoy-based data plane is built for it.

- Has a dedicated platform engineering team: Let's be honest, Istio is complex. A successful adoption requires engineers who can invest the time to manage it. If you have that team, the payoff is immense.

Ultimately, it’s a classic trade-off. Linkerd gets you to value faster with a lower long-term operational cost. Istio provides a powerful, if complex, solution for the toughest networking challenges at scale. This framework should help you see which path truly aligns with your team and your goals.

Technical Decision Matrix Linkerd vs Istio

To make this even more concrete, here's a decision matrix mapping specific technical needs to the right tool. Use this to guide conversations with your engineering team and clarify which mesh aligns with your actual day-to-day requirements.

| Use Case / Requirement | Choose Linkerd If… | Choose Istio If… |
|---|---|---|
| Primary Goal | You need security and observability with minimal effort. | You need granular traffic control and maximum extensibility. |
| Team Structure | You have a small-to-medium team with limited DevOps capacity. | You have a dedicated platform or SRE team to manage the mesh. |
| Performance Priority | Latency is critical; you need the lightest possible proxy. | You can tolerate slightly higher latency for advanced features. |
| Protocol Support | Your services primarily use HTTP, gRPC, or TCP. | You need to manage a wide array of L4/L7 protocols (e.g., Kafka, Redis). |
| Multi-Cluster | You have basic multi-cluster needs and value simplicity. | You have complex multi-primary or multi-network topologies. |
| Security Needs | Zero-config, automatic mTLS is sufficient for your compliance. | You require fine-grained authorization policies (e.g., JWT validation). |
| Extensibility | You're happy with the core features and don't plan to customize. | You plan to use WebAssembly (Wasm) plugins to extend proxy functionality. |
| Time to Value | You need to be in production within days or a few weeks. | You have a longer implementation timeline and can absorb the learning curve. |

This matrix isn't about finding a "winner." It's about matching the tool to the job. Linkerd is designed for simplicity and speed, making it a fantastic choice for the majority of use cases. Istio is built for power and control, excelling where complexity is a given. Choose the one that solves your problems, not the one with the longest feature list.

Common Technical Questions

When you get past the high-level feature lists in any Linkerd vs Istio debate, a few hard-hitting technical questions always come up. These are the ones that really get to the core of implementation pain, long-term strategy, and where the service mesh world is heading.

Can I Actually Migrate Between Linkerd and Istio?

Yes, a migration is technically feasible, but it is a major engineering effort, not a simple swap. The two service meshes use fundamentally incompatible CRDs and configuration models, so an in-place migration of a running workload is impossible.

The only viable strategy is a gradual, namespace-by-namespace migration. This involves running both Linkerd and Istio control planes in the same cluster simultaneously, each managing a distinct set of namespaces. You would then methodically move services from a Linkerd-managed namespace to an Istio-managed one (or vice versa), which involves changing annotations, redeploying workloads, and re-configuring traffic policies using the target mesh's CRDs. This dual-mesh approach introduces significant operational complexity around observability and policy enforcement during the migration period.
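During such a migration, mesh membership is decided per namespace by different markers: Linkerd reads an annotation, while Istio's sidecar injector reads a label. A sketch with two hypothetical namespaces, one on each mesh:

```yaml
# Namespace still served by Linkerd
apiVersion: v1
kind: Namespace
metadata:
  name: team-a                  # hypothetical namespace
  annotations:
    linkerd.io/inject: enabled
---
# Namespace already moved to Istio (sidecar mode)
apiVersion: v1
kind: Namespace
metadata:
  name: team-b                  # hypothetical namespace
  labels:
    istio-injection: enabled
```

Moving a namespace means removing one marker, adding the other, and redeploying its workloads. A namespace should never carry both, since each webhook would inject its own proxy.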

Does Linkerd's Simplicity Mean It's Not "Enterprise-Ready"?

This is a common misconception that conflates complexity with capability. Linkerd's design philosophy is simplicity, but this does not render it unsuitable for large-scale, demanding production environments. In fact, its low resource footprint, predictable performance, and high stability are significant advantages at scale.

Linkerd is widely used in production by major enterprises. Its core feature set—automatic mTLS, comprehensive L7 observability, and simple traffic management—addresses the primary requirements of the vast majority of enterprise use cases.

The key takeaway here is that "enterprise-ready" should not be synonymous with "complex." For many organizations, Linkerd's reliability and low operational overhead make it the more strategic enterprise choice, as it allows engineering teams to focus on application development rather than mesh administration.

How Does Istio Ambient Mesh Change the Game?

Istio's Ambient Mesh represents a significant architectural evolution toward a sidecar-less model. Instead of injecting a proxy into each application pod, Ambient Mesh utilizes a shared, node-level proxy (ztunnel) for L4 functionality (like mTLS) and optional, per-service-account waypoint proxies for L7 processing (like traffic routing and retries).

This design directly addresses the resource overhead and operational friction associated with the traditional sidecar model.

- Performance: Ambient significantly reduces the per-pod resource cost, closing the gap with Linkerd, particularly in clusters with high pod density. However, recent benchmarks indicate that Linkerd's purpose-built micro-proxy can still maintain a latency advantage under heavy, production-like loads.

- Operational Complexity: For application developers, Ambient simplifies operations by decoupling the proxy lifecycle from the application lifecycle (i.e., no more pod restarts to update the proxy). However, the underlying complexity of Istio's configuration model and its extensive set of CRDs remains, preserving the steep learning curve for platform operators.
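Enrolling workloads in ambient mode reflects the decoupling described above: it is a namespace label rather than a pod-level injection. A minimal sketch, assuming Istio is installed with the ambient profile and a namespace named my-app-ns:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app-ns               # hypothetical namespace
  labels:
    # Traffic for pods in this namespace is captured by the node-level ztunnel
    istio.io/dataplane-mode: ambient
```

Existing pods are picked up without a restart, which is the main operational difference from the sidecar label. L7 features still require deploying a waypoint proxy separately.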

While Ambient Mesh makes Istio a more compelling option from a resource efficiency standpoint, it does not fundamentally alter the core trade-off. The decision between Linkerd vs Istio still hinges on balancing Linkerd's operational simplicity against Istio's extensive feature set and configuration depth.
