How to Automate Software Testing: A Technical Guide

Learn how to automate software testing with this technical guide. Discover tool selection, framework design, CI/CD integration, and expert best practices.

Automating software testing isn't just about writing scripts; it's about engineering a resilient quality gate. The objective is to strategically implement automation for the highest return on investment (ROI), starting with a concrete plan that pinpoints repetitive, high-risk test cases and sets clear, quantifiable goals. A robust strategy ensures your test suite becomes a core asset, not technical debt, enabling faster, more reliable software delivery.

Building Your Test Automation Strategy

Initiating automation without a technical blueprint leads to a brittle, high-maintenance test suite. A well-architected strategy is your north star, guiding every technical decision, from selecting the right framework to integrating tests into your CI/CD pipeline.

The goal isn't to eliminate manual testing. Instead, it's to augment your QA team, freeing them from repetitive regression checks to focus on high-impact activities that require human intuition, such as exploratory testing, usability analysis, and complex edge-case validation.

This shift is a market imperative. The pressure to accelerate delivery cycles without compromising quality is immense. The global test automation market is projected to reach $49.9 billion by 2025, a significant leap from $15.87 billion in 2019, underscoring automation's role as a competitive necessity.

Pinpoint High-ROI Automation Candidates

Your first task is a technical audit of your existing manual test suite to identify high-ROI candidates. Automating everything is a classic anti-pattern that leads to wasted engineering effort. A selective, data-driven approach is critical.

Analyze your manual testing process to identify bottlenecks and prime automation targets:

- Repetitive and Tedious Tests: Any deterministic, frequently executed test case is a prime candidate. Regression suites that run before every release are the most common and valuable target. Automating these provides an immediate and significant reduction in manual effort.

- Critical-Path User Journeys: Implement "smoke tests" that cover core application functionality. For an e-commerce platform, this would be user registration, login, product search, adding to cart, and checkout. A failure in these flows renders the application unusable. These tests should be stable, fast, and run on every commit.

- Data-Driven Tests: Scenarios requiring validation against multiple data sets are ideal for automation. A script can iterate through a CSV or JSON file containing hundreds of input combinations (e.g., different user profiles, product types, payment methods) in minutes, a task that is prohibitively time-consuming and error-prone for a manual tester.
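Here is a minimal, dependency-free Python sketch of the data-driven idea. The CSV rows and the `is_checkout_allowed` rule are hypothetical placeholders for your real data file and system under test. The loop runs every row and collects all mismatches rather than stopping at the first failure.

```python
import csv
import io

# Hypothetical data set; in a real suite this would be read from a .csv file.
CASES_CSV = """profile,payment_method,cart_total,expected
guest,card,49.99,allowed
guest,invoice,49.99,rejected
member,invoice,120.00,allowed
member,card,0.00,rejected
"""


def is_checkout_allowed(profile: str, payment_method: str, cart_total: float) -> bool:
    """Hypothetical business rule standing in for the system under test."""
    if cart_total <= 0:
        return False
    if payment_method == "invoice":
        return profile == "member"
    return True


def run_data_driven_cases(csv_text: str) -> list[str]:
    """Run every row through the function under test and collect failures."""
    failures = []
    # start=2 because line 1 of the CSV is the header row.
    for line_no, row in enumerate(csv.DictReader(io.StringIO(csv_text)), start=2):
        actual = is_checkout_allowed(
            row["profile"], row["payment_method"], float(row["cart_total"])
        )
        expected = row["expected"] == "allowed"
        if actual != expected:
            failures.append(f"line {line_no}: {row} -> got {actual}")
    return failures


if __name__ == "__main__":
    failures = run_data_driven_cases(CASES_CSV)
    print(f"{len(failures)} failing case(s)")
    for failure in failures:
        print(failure)
```

Adding a new scenario means adding a row to the data file. The test code does not change.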

The most successful automation initiatives begin by targeting the mundane but critical tests. Automating your regression suite builds a reliable safety net, giving your development team the confidence to refactor code and ship features rapidly.

Define Tangible Goals and Metrics

With your initial scope defined, establish what success looks like in technical terms. Vague goals like "improve quality" are not actionable. Implement SMART (Specific, Measurable, Achievable, Relevant, Time-Bound) goals. Truly effective software quality assurance processes are built on concrete, measurable metrics.

Implement the following key performance indicators (KPIs) to track your automation's effectiveness:

- Defect Detection Efficiency (DDE): Calculate this as `(Bugs found by Automation) / (Total Bugs Found) * 100`. An increasing DDE demonstrates that your automated suite is effectively catching regressions before they reach production.

- Test Execution Time: Measure the wall-clock time for your full regression suite run. A primary objective should be to reduce this from days to hours, and ultimately, minutes, by leveraging parallel execution in your CI pipeline.

- Reduced Manual Testing Effort: Quantify the hours saved. If a manual regression cycle took 40 hours and the automated suite runs in 1 hour, you've reclaimed 39 engineering hours per cycle. This is a powerful metric for communicating ROI to stakeholders.
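The two formulas above can be sketched in Python as follows. The `CycleStats` fields and the sample bug counts (18 of 24 bugs caught by automation) are hypothetical. The 40-hour and 1-hour figures come from the example in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CycleStats:
    bugs_found_by_automation: int
    total_bugs_found: int
    manual_regression_hours: float
    automated_suite_hours: float


def defect_detection_efficiency(stats: CycleStats) -> Optional[float]:
    """DDE = (bugs found by automation / total bugs found) * 100."""
    if stats.total_bugs_found == 0:
        return None  # Undefined when no bugs were found in the cycle.
    return stats.bugs_found_by_automation / stats.total_bugs_found * 100


def hours_saved(stats: CycleStats) -> float:
    """Manual effort reclaimed per regression cycle."""
    return stats.manual_regression_hours - stats.automated_suite_hours


if __name__ == "__main__":
    cycle = CycleStats(bugs_found_by_automation=18, total_bugs_found=24,
                       manual_regression_hours=40.0, automated_suite_hours=1.0)
    print(f"DDE: {defect_detection_efficiency(cycle):.1f}%")  # DDE: 75.0%
    print(f"Hours saved: {hours_saved(cycle)}")  # Hours saved: 39.0
```

Tracking these values per cycle, instead of as a single snapshot, is what shows whether the suite is improving.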

Deciding what to automate first requires a structured approach. Use a prioritization matrix to objectively assess candidates based on business criticality and technical feasibility.

Prioritizing Test Cases for Automation

| Test Case Type | Automation Priority | Justification |
|---|---|---|
| Regression Tests | High | Executed frequently; automating them saves significant time and prevents new features from breaking existing functionality. The ROI is immediate and recurring. |
| Critical Path / Smoke Tests | High | Verifies core application functionality. A failure here is a showstopper. These tests form the essential quality gate in a CI/CD pipeline. |
| Data-Driven Tests | High | Involves testing with multiple data sets. Automation removes the tedious, error-prone manual effort and provides far greater test coverage. |
| Performance / Load Tests | Medium | Crucial for scalability but requires specialized tools (e.g., JMeter, k6) and expertise. Best tackled after core functional tests are stable. |
| Complex User Scenarios | Medium | Tests involving multiple steps and integrations. High value, but can be brittle and complex to maintain. Requires robust error handling and stable locators. |
| UI / Visual Tests | Low | Prone to frequent changes, making scripts fragile. Often better suited for manual or exploratory testing, though tools like Applitools can automate visual validation. |
| Exploratory Tests | Not a Candidate | Relies on human intuition, domain knowledge, and creativity. This is where manual testing provides its highest value. |

This matrix serves as a technical guideline. By focusing on the high-priority categories first, you build a stable foundation and demonstrate value early, securing buy-in for scaling your automation efforts.
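One way to make the matrix repeatable is to score each candidate. The sketch below assumes hypothetical 1-to-5 ratings for business criticality and technical feasibility and ranks candidates by the product of the two. The ratings are illustrative and are not derived from the table. Exploratory tests are excluded outright.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    criticality: int  # 1 = cosmetic ... 5 = revenue-blocking (hypothetical scale)
    feasibility: int  # 1 = brittle/complex ... 5 = deterministic/stable
    automatable: bool = True  # False for exploratory testing


def score(candidate: Candidate) -> int:
    """Combine the two matrix axes into a single comparable number."""
    return candidate.criticality * candidate.feasibility


def rank(candidates: list[Candidate]) -> list[str]:
    """Return automatable candidates, highest priority first."""
    eligible = [c for c in candidates if c.automatable]
    return [c.name for c in sorted(eligible, key=score, reverse=True)]


backlog = [
    Candidate("UI / visual tests", criticality=2, feasibility=2),
    Candidate("Checkout smoke test", criticality=5, feasibility=4),
    Candidate("Regression suite", criticality=5, feasibility=5),
    Candidate("Exploratory session", criticality=4, feasibility=1, automatable=False),
]

if __name__ == "__main__":
    print(rank(backlog))  # ['Regression suite', 'Checkout smoke test', 'UI / visual tests']
```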

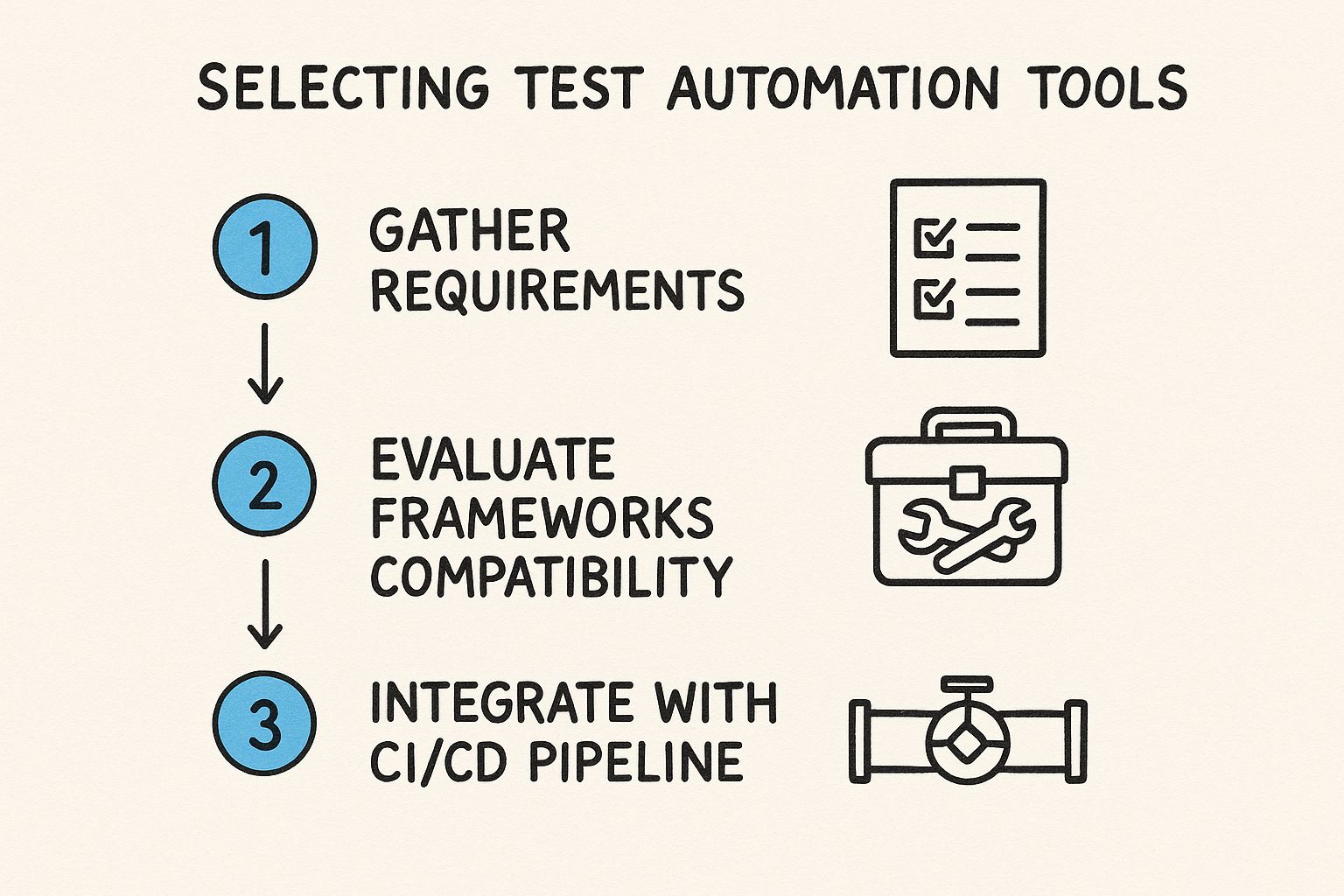

Choosing the Right Automation Tools and Frameworks

Selecting your automation toolchain is the most critical technical decision in this process. The right choice empowers your team; the wrong one creates a maintenance nightmare that consumes more resources than it saves.

This decision must be driven by your technical requirements—your application's architecture, your team's programming language proficiency, and your long-term scalability goals.

Matching Tools to Your Application Architecture

Your application's tech stack dictates your toolset. A tool designed for web UI testing is useless for validating a backend Kafka stream.

- For Web Applications: Industry standards are open-source drivers like Selenium and modern alternatives like Playwright. Selenium has a vast ecosystem and official bindings for Java, Python, C#, Ruby, and JavaScript. Playwright offers modern features like auto-waiting and network request interception out of the box, which can significantly reduce test flakiness.

- For Mobile Apps: Use a cross-platform framework like Appium (which leverages native drivers) for testing across iOS and Android with a single codebase. For deeper, platform-specific testing, use native frameworks: Espresso for Android (offering fast, reliable in-process tests) and XCUITest for iOS.

- For APIs: This layer offers the highest ROI for automation. API tests are fast, stable, and decoupled from UI changes. Use tools like Postman for exploratory testing and collection runs, or code-based libraries like Rest-Assured (Java) or Playwright's API testing module for full integration into your test framework.

A common architectural mistake is attempting to force one tool to test all layers. A "best-of-breed" approach is superior. Use Playwright for the UI, Rest-Assured for the REST APIs, and a specialized library for message queues. This ensures you are using the most efficient tool for each specific task.

Open Source vs. Integrated Platforms

The next decision is between the flexibility of open-source libraries and the convenience of commercial, all-in-one platforms.

Open-source tools like Selenium provide maximum control but require you to build your framework from scratch, integrating test runners, assertion libraries, and reporting tools yourself.

Integrated platforms like Katalon bundle an IDE, execution engine, and reporting dashboard. They often include low-code and record-and-playback features, which can accelerate script creation for teams with mixed technical skill sets.

This unified environment can lower the barrier to entry, but may come at the cost of flexibility and control compared to a custom-built open-source framework.

The Non-Negotiable Role of a Testing Framework

This is a critical architectural point. A tool like Selenium is a browser automation library; it knows how to drive a browser. A testing framework like Pytest (Python), TestNG (Java), or Cypress (JavaScript) provides the structure to organize, execute, and report on tests.

Attempting to build an automation suite using only a driver library is an anti-pattern that leads to unmaintainable code.

Frameworks provide the essential scaffolding for:

- Test Organization: Structuring tests into classes and modules.

- Assertions: Providing clear pass/fail validation, often extended with fluent assertion libraries such as AssertJ (e.g., `assertThat(user.getName()).isEqualTo("John Doe")`).

- Data-Driven Testing: Using constructs like `@DataProvider` (TestNG) or `pytest.mark.parametrize` (Pytest) to inject test data from external sources like YAML or CSV files.

- Fixtures and Hooks: Managing test setup and teardown logic (e.g., TestNG's `@BeforeMethod` and `@AfterMethod`) to ensure tests are atomic and independent.

For example, using TestNG with Selenium allows you to use annotations like @BeforeMethod to initialize a WebDriver instance and @DataProvider to feed login credentials into a test method. This separation of concerns between test infrastructure and test logic is fundamental to building a scalable and maintainable automation suite.
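The same separation of infrastructure and test logic can be sketched with Python's built-in `unittest`. It is used here only so the example runs without dependencies. `setUp` and `tearDown` play the role of `@BeforeMethod` and `@AfterMethod`, and `subTest` plays the role of a data provider. `FakeBrowser` and `LOGIN_CASES` are hypothetical stand-ins for a WebDriver session and an external data file.

```python
import unittest

# Hypothetical credentials; in a real suite these would come from a YAML/CSV file.
LOGIN_CASES = [
    ("alice", "correct-horse", True),
    ("alice", "wrong-password", False),
    ("", "any-password", False),
]


class FakeBrowser:
    """Hypothetical stand-in for a WebDriver session."""
    VALID_USERS = {"alice": "correct-horse"}

    def __init__(self) -> None:
        self.closed = False

    def login(self, username: str, password: str) -> bool:
        return self.VALID_USERS.get(username) == password

    def quit(self) -> None:
        self.closed = True


class LoginTest(unittest.TestCase):
    def setUp(self) -> None:
        # Hook: runs before each test method (like TestNG's @BeforeMethod).
        self.browser = FakeBrowser()

    def tearDown(self) -> None:
        # Hook: runs after each test method (like @AfterMethod), even if it failed.
        self.browser.quit()

    def test_login_matrix(self) -> None:
        # Data-driven: each row is reported as its own sub-test if it fails.
        for username, password, should_succeed in LOGIN_CASES:
            with self.subTest(username=username, password=password):
                self.assertEqual(self.browser.login(username, password), should_succeed)

# Run with: python -m unittest <this_module>
```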

Writing Your First Maintainable Automated Tests

With your toolchain selected, the next phase is implementation. The primary goal is not just to write tests that pass, but to write code that is clean, modular, and maintainable. This initial engineering discipline is what separates a long-lasting automation asset from a short-lived liability.

Your first technical hurdle is reliably interacting with the application's UI. The stability of your entire suite depends on robust element location strategies.

Mastering Element Location Strategies

CSS Selectors and XPath are the two primary mechanisms for locating web elements. Understanding their technical trade-offs is crucial.

- CSS Selectors: The preferred choice for readability and, often, performance. Prioritize unique and stable attributes like `id`. A selector for a login button should be as simple and direct as `#login-button`.

- XPath: More powerful and flexible than CSS, XPath can traverse the Document Object Model (DOM) in any direction, including up to parent elements. It is essential for complex scenarios, such as locating an element based on its text content (`//button[text()='Submit']`) or its relationship to another element (`//div[@id='user-list']/div/h3[text()='John Doe']/../button[@class='delete-btn']`). However, it is often slower and more brittle than a well-crafted CSS selector.

Technical Best Practice: Collaborate with developers to add stable, test-specific attributes to the DOM, such as `data-testid="submit-button"`. This decouples your tests from fragile implementation details like CSS class names or DOM structure, dramatically improving test resilience. Locating an element by `[data-testid='submit-button']` is the most robust strategy.
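To see why test IDs hold up best, the sketch below runs four locator styles against hypothetical markup before and after a redesign. It uses Python's standard `xml.etree.ElementTree`, which supports only a limited XPath subset and is not a browser, so treat it as an illustration of the principle rather than of Selenium or Playwright behavior. Only the `data-testid` lookup survives both versions.

```python
import xml.etree.ElementTree as ET

# Hypothetical, XML-valid markup before and after a UI redesign.
BEFORE = """
<main>
  <form id="login">
    <div><button class="btn-primary" data-testid="submit-button">Submit</button></div>
  </form>
</main>
"""

AFTER = """
<main>
  <form id="login">
    <section>
      <div><button class="btn btn--cta" data-testid="submit-button">Sign in</button></div>
    </section>
  </form>
</main>
"""

# ElementTree XPath-subset equivalents of common locator styles.
LOCATORS = {
    "structural path": "./form/div/button",
    "class name": ".//button[@class='btn-primary']",
    "visible text": ".//button[.='Submit']",
    "data-testid": ".//button[@data-testid='submit-button']",
}


def surviving_locators(markup: str) -> list[str]:
    """Return the names of locators that still find the button in this markup."""
    root = ET.fromstring(markup.strip())
    return [name for name, path in LOCATORS.items() if root.find(path) is not None]


if __name__ == "__main__":
    print("before:", surviving_locators(BEFORE))
    print("after: ", surviving_locators(AFTER))
```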

Implementing the Page Object Model

Placing element locators and interaction logic directly within test methods is a critical anti-pattern that leads to unmaintainable code. A change to a single UI element could require updates across dozens of test files. The Page Object Model (POM) is the standard design pattern to solve this.

POM is an object-oriented pattern where each page or significant component of your application is represented by a corresponding class.

- This class encapsulates all element locators for that page.

- It exposes public methods that represent user interactions, such as `login(username, password)` or `searchForItem(itemName)`.

This creates a crucial separation of concerns: test scripts contain high-level test logic and assertions, while page objects handle the low-level implementation details of interacting with the UI. When a UI element changes, the fix is made in one place: the corresponding page object class. This makes maintenance far more efficient.
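Here is a minimal Page Object sketch in Python. The `Driver` protocol, its `fill`/`click`/`text_of` methods, and the `login-*` test IDs are assumptions for illustration and are not a real library API. With Selenium or Playwright, the page object would call those libraries instead. `RecordingDriver` is a hypothetical fake that lets the example run without a browser.

```python
from typing import Protocol


class Driver(Protocol):
    """Assumed minimal driver interface for this sketch (not a real library API)."""
    def fill(self, selector: str, value: str) -> None: ...
    def click(self, selector: str) -> None: ...
    def text_of(self, selector: str) -> str: ...


class LoginPage:
    """Page object: owns every locator and interaction for the login page."""
    USERNAME = "[data-testid='login-username']"
    PASSWORD = "[data-testid='login-password']"
    SUBMIT = "[data-testid='login-submit']"
    ERROR = "[data-testid='login-error']"

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def login(self, username: str, password: str) -> None:
        """User-level action; tests never touch the selectors directly."""
        self.driver.fill(self.USERNAME, username)
        self.driver.fill(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)

    def error_message(self) -> str:
        return self.driver.text_of(self.ERROR)


class RecordingDriver:
    """Hypothetical fake: records actions and returns a canned error text."""
    def __init__(self) -> None:
        self.actions: list[tuple[str, ...]] = []

    def fill(self, selector: str, value: str) -> None:
        self.actions.append(("fill", selector, value))

    def click(self, selector: str) -> None:
        self.actions.append(("click", selector))

    def text_of(self, selector: str) -> str:
        return "Invalid credentials"


def test_login_with_bad_password() -> None:
    # The test states intent and checks outcomes; no selectors appear here.
    driver = RecordingDriver()
    page = LoginPage(driver)
    page.login("alice", "wrong-password")
    assert page.error_message() == "Invalid credentials"
```

If the submit button's test ID changes, only `LoginPage.SUBMIT` needs editing. Every test that logs in is fixed at once.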

Managing Test Data and Version Control

Hardcoding test data (e.g., usernames, passwords, search terms) directly into test scripts is a poor practice. Externalize this data into formats like JSON, YAML, or CSV files. This allows you to easily run tests against different environments (dev, staging, prod) and add new test cases without modifying test code.
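Here is a sketch of externalized, per-environment test data using only Python's standard library. The JSON layout (one top-level key per environment) and the `TEST_ENV` variable name are assumptions. Adapt them to your own conventions.

```python
import json
import os
from pathlib import Path
from typing import Optional


def select_environment(raw_json: str, env: Optional[str] = None) -> dict:
    """Pick one environment's data set from JSON text.

    Assumed (hypothetical) layout:
        {"dev": {"base_url": "...", "username": "..."},
         "staging": {"base_url": "...", "username": "..."}}
    The environment defaults to the TEST_ENV variable, then "dev".
    """
    env = env or os.environ.get("TEST_ENV", "dev")
    data = json.loads(raw_json)
    if env not in data:
        raise KeyError(f"No test data for environment {env!r}")
    return data[env]


def load_test_data(path: Path, env: Optional[str] = None) -> dict:
    """Read the data file from disk and select the requested environment."""
    return select_environment(path.read_text(encoding="utf-8"), env)
```

A test would then call, for example, `load_test_data(Path("testdata/login.json"))["base_url"]` (a hypothetical path) instead of hardcoding the URL. Switching environments becomes a matter of setting `TEST_ENV` in the CI job.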

All automation code, including test scripts, page objects, and data files, must be stored in a version control system like Git. This is non-negotiable. Using Git enables collaboration through pull requests, provides a full history of changes, and allows you to integrate your test suite with CI/CD systems.

The field is evolving rapidly, with AI-powered tools emerging to address these challenges. In fact, 72% of QA professionals now use AI to assist in test script generation and maintenance. These tools promise to further reduce the effort of creating and maintaining robust tests. You can explore more test automation statistics to understand these industry shifts.

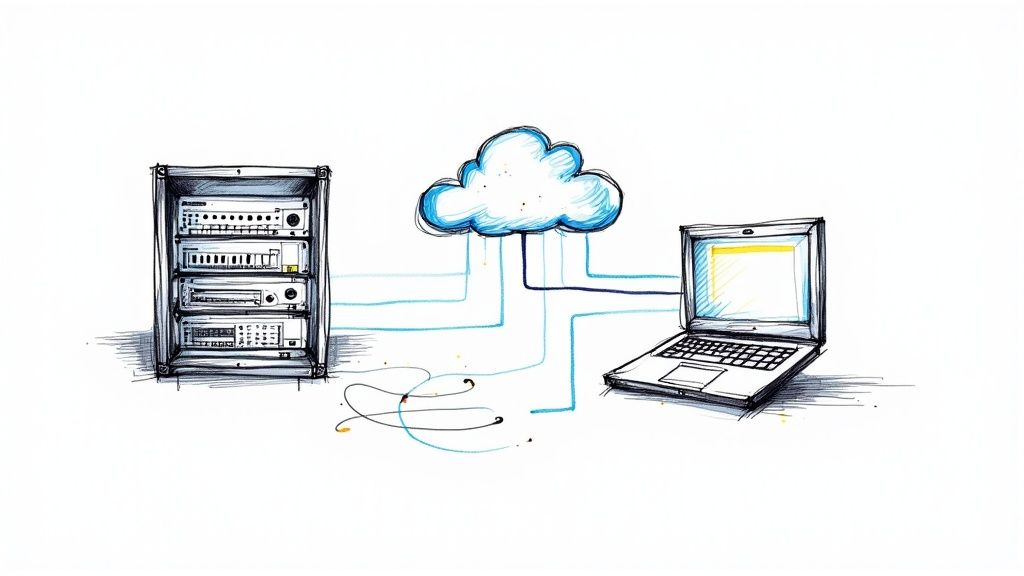

Integrating Automation into Your CI/CD Pipeline

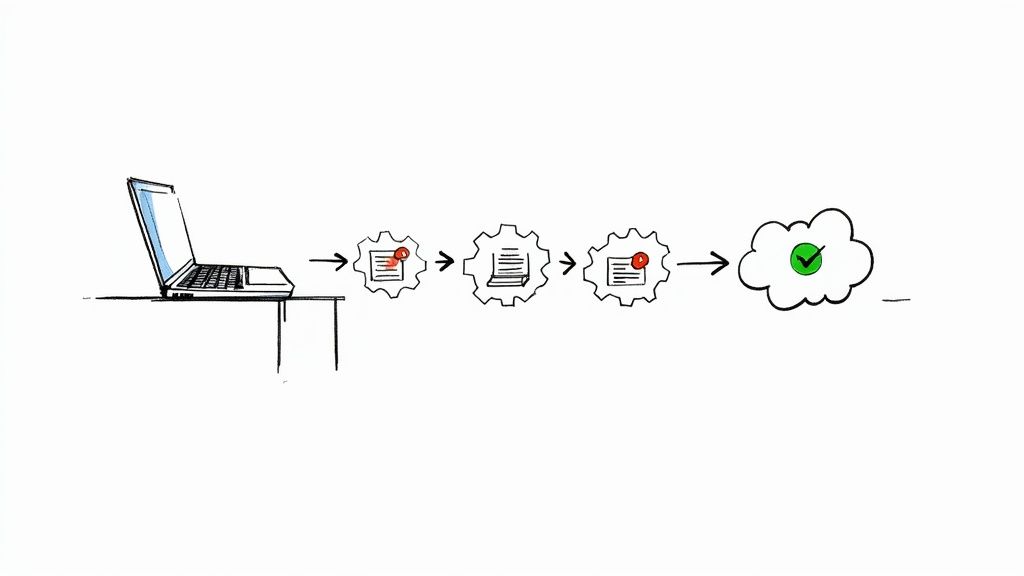

A suite of automated tests provides maximum value only when it is fully integrated into the development workflow. Continuous Integration/Continuous Delivery (CI/CD) transforms your test suite from a periodic check into an active, automated quality gate that provides immediate feedback.

Integrating tests into a CI/CD pipeline means they execute automatically on every code change. This creates a tight feedback loop, enabling developers to detect and fix regressions within minutes, not weeks. This velocity is a key driver of DevOps adoption; 54% of developers use DevOps to release code faster, with nearly 30% citing test automation as a primary enabler. You can learn more about how DevOps is shaping testing trends at globalapptesting.com.

As this diagram illustrates, pipeline integration is the capstone of the automation process. It's the mechanism that operationalizes your test scripts into a continuous quality engine.

Configuring Your Pipeline Triggers

Your first step is to configure the pipeline's execution triggers in your CI/CD tool, whether it's Jenkins, GitLab CI, or GitHub Actions. The most effective trigger for quality assurance is a pull/merge request to your main development branches (e.g., main or develop).

For example, in a GitHub Actions workflow YAML file, you would define this trigger:

```yaml
on:
  pull_request:
    branches: [ main, develop ]
```

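On its own, this trigger only runs the tests. When you combine it with a branch protection rule that requires the workflow's status check to pass, no code can be merged until the associated test suite succeeds, effectively preventing regressions from entering the primary codebase.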

For more granular control, use test tagging (e.g., @smoke, @regression) to run different test suites at different stages. A fast smoke suite can run on every push to a feature branch, while the full, time-consuming regression suite runs only on pull requests to main. For a deeper technical guide, review our article on CI/CD pipeline best practices.
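Real frameworks provide tagging out of the box. Examples include pytest markers selected with `pytest -m smoke` and TestNG groups. The hypothetical `tagged` decorator below only illustrates the mechanism: tests carry labels, and each pipeline stage selects the subset it needs.

```python
from typing import Callable

TestFn = Callable[[], None]
REGISTRY: list[tuple[TestFn, frozenset[str]]] = []


def tagged(*tags: str) -> Callable[[TestFn], TestFn]:
    """Hypothetical decorator: register a test function under one or more tags."""
    def decorator(fn: TestFn) -> TestFn:
        REGISTRY.append((fn, frozenset(tags)))
        return fn
    return decorator


def select(tag: str) -> list[TestFn]:
    """Return the registered tests carrying `tag`, in definition order."""
    return [fn for fn, tags in REGISTRY if tag in tags]


def run(tag: str) -> int:
    """Run every test with `tag`; a failing test raises and fails the stage."""
    selected = select(tag)
    for fn in selected:
        fn()
    return len(selected)


@tagged("smoke", "regression")
def test_login() -> None: ...


@tagged("regression")
def test_order_history_pagination() -> None: ...


@tagged("smoke", "regression")
def test_checkout() -> None: ...
```

A feature-branch job would call `run("smoke")` (two tests here), while the pull-request job calls `run("regression")` (all three).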

Crucial Advice: Configure your pipeline to fail loudly and block merges on test failure. A broken build must be an immediate, high-priority event. This creates a culture of quality where developers own the stability of the test suite because it is a direct gatekeeper to their code delivery.

Deciding when to run tests is a strategic choice with clear technical trade-offs.

CI/CD Trigger Strategy Comparison

| Trigger Event | Pros | Cons | Best For |
|---|---|---|---|
| On Every Commit | Immediate feedback, catches bugs instantly. | Can be resource-intensive, may slow down developers if tests are long. | Teams practicing trunk-based development with fast, targeted test suites (unit & integration tests). |
| On Pull/Merge Request | Validates changes before merging, keeps the main branch clean. | Feedback is slightly delayed compared to on-commit. | The most common and balanced approach for teams using feature branches. Ideal for running end-to-end tests. |
| Nightly/Scheduled | Runs comprehensive, long-running tests without blocking developers. | Feedback is significantly delayed (up to 24 hours). | Running full regression or performance tests that are too slow for the main pipeline. |
| Manual Trigger | Gives full control over when resource-intensive tests are run. | Relies on human intervention, negates the "continuous" aspect. | Kicking off pre-release validation or running specialized test suites on demand against a staging environment. |

A hybrid approach is often optimal: run unit and integration tests on every commit, run end-to-end tests on pull requests, and run full performance and regression suites nightly.

Slashing Feedback Times with Parallel Execution

As your test suite grows, execution time becomes a critical bottleneck. A two-hour regression run is unacceptable for rapid feedback. The solution is parallel execution.

Modern test runners and CI/CD tools support parallelization: TestNG and Jest natively, and Pytest through the widely used pytest-xdist plugin. Parallelism can happen inside a single runner (threads or worker processes) or by sharding the suite across multiple concurrent CI jobs or containers. For example, you can configure your pipeline to spin up four Docker containers and distribute your tests among them. If the shards are evenly balanced, this reduces a 60-minute test run to roughly 15 minutes, providing much faster feedback to developers.
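Even distribution is what makes a 60-to-15-minute reduction possible. Here is a minimal sketch of duration-based sharding, assuming per-test timings from previous runs are available (the test names and durations are hypothetical). Each test, longest first, goes to the currently least-loaded shard. This greedy heuristic usually keeps shards close to balanced.

```python
import heapq


def shard_by_duration(durations: dict[str, float], shards: int) -> list[list[str]]:
    """Assign tests to shards, longest first, each to the least-loaded shard."""
    heap = [(0.0, index) for index in range(shards)]  # (assigned minutes, shard index)
    heapq.heapify(heap)
    assignment: list[list[str]] = [[] for _ in range(shards)]
    for name, minutes in sorted(durations.items(), key=lambda item: item[1], reverse=True):
        load, index = heapq.heappop(heap)
        assignment[index].append(name)
        heapq.heappush(heap, (load + minutes, index))
    return assignment


def wall_clock_minutes(durations: dict[str, float], assignment: list[list[str]]) -> float:
    """Parallel run time is bounded by the slowest shard."""
    return max(sum(durations[name] for name in shard) for shard in assignment)


if __name__ == "__main__":
    # Hypothetical suite: twelve 5-minute tests, 60 minutes if run serially.
    timings = {f"test_{i:02d}": 5.0 for i in range(12)}
    plan = shard_by_duration(timings, shards=4)
    print(wall_clock_minutes(timings, plan))  # 15.0
```

Each CI job would then run only the tests in its own shard, for example by reading its shard index from the job matrix.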

Setting Up Automated Alerting

Fast feedback is useless if it's not visible. The final step is to integrate automated notifications into your team's communication tools.

CI/CD platforms provide native integrations for services like Slack or Microsoft Teams. Configure your pipeline to send a notification to a dedicated engineering channel only on failure. The alert must include the committer's name, a link to the failed build log, and a summary of the failed tests. This enables developers to immediately diagnose and resolve the issue, making continuous testing a practical reality.
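Here is a sketch of the alert logic. It builds a message only when the run failed, and includes the committer, the build log link, and a capped list of failed tests. The `{"text": ...}` payload matches the format accepted by Slack incoming webhooks. `BuildResult` and the webhook URL are hypothetical, and in practice your CI platform's native integration may replace `send_alert` entirely.

```python
import json
import urllib.request
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BuildResult:
    passed: bool
    committer: str
    build_url: str
    failed_tests: list[str] = field(default_factory=list)


def build_alert(result: BuildResult, max_listed: int = 5) -> Optional[dict]:
    """Return a Slack-style {"text": ...} payload, or None when the build passed."""
    if result.passed:
        return None  # Alert only on failure to keep the channel high-signal.
    listed = result.failed_tests[:max_listed]
    remaining = len(result.failed_tests) - len(listed)
    lines = [
        f"Build failed (committer: {result.committer})",
        f"Log: {result.build_url}",
        f"Failed tests ({len(result.failed_tests)}):",
        *[f"- {name}" for name in listed],
    ]
    if remaining > 0:
        lines.append(f"...and {remaining} more")
    return {"text": "\n".join(lines)}


def send_alert(webhook_url: str, payload: dict) -> None:
    """POST the payload as JSON to an incoming-webhook URL."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()
```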

Analyzing Results and Scaling Your Automation

A running test suite is the beginning, not the end. The goal is to transform test execution data into actionable quality intelligence. This is the feedback loop that drives continuous improvement in your product and processes.

Standard test framework reports are a starting point. To truly scale, integrate dedicated reporting tools like Allure or ReportPortal. These platforms aggregate historical test data, providing dashboards that visualize test stability, failure trends, and execution times. This allows you to identify problematic areas of your application and flaky tests that need refactoring, moving beyond single-run analysis to longitudinal quality monitoring.

Integrating robust reporting and analysis is a hallmark of a mature DevOps practice. For more on this, see our guide to the top 10 CI/CD pipeline best practices for 2025.

Tackling Test Flakiness Head-On

A "flaky" test—one that passes and fails intermittently without any code changes—is the most insidious threat to an automation suite. Flakiness erodes trust; if developers cannot rely on the test results, they will begin to ignore them.

Implement a rigorous process for managing flakiness:

- Quarantine Immediately: The moment a test is identified as flaky, move it to a separate, non-blocking test run. A flaky test must never be allowed to block a build.

- Root Cause Analysis: Analyze historical data. Does the test fail only in a specific browser or environment? Does it fail when run in parallel with another specific test? This often points to race conditions or test data contamination.

- Refactor Wait Strategies: The most common cause of flakiness is improper handling of asynchronicity. Replace all fixed waits (`sleep(5)`) with explicit, conditional waits (e.g., `WebDriverWait` in Selenium) that pause execution until a specific condition is met, such as an element becoming visible or clickable.
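Selenium's `WebDriverWait(driver, timeout).until(condition)` implements this polling pattern. Below is a dependency-free sketch of the same idea, which is also useful for conditions outside the browser. It polls until the condition returns a truthy value and fails with a clear timeout instead of sleeping for a fixed amount.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0,
               poll_interval: float = 0.25) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds elapse.

    Returns the truthy value (e.g. the element that became visible), so the
    caller proceeds as soon as the app is ready instead of after a fixed delay.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

Unlike `sleep(5)`, this returns as soon as the condition holds and waits up to the full timeout only when the app is genuinely slow.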

A flaky test suite is functionally equivalent to a broken test suite. The purpose of automation is to provide a deterministic signal of application quality. If that signal is unreliable, the entire investment is compromised.

Scaling Your Test Suite Sustainably

Scaling automation is a software engineering challenge. It requires managing complexity and technical debt within your test codebase.

Treat your test code with the same rigor as your production code. Conduct regular code reviews and refactoring sessions. Identify and extract duplicate code into reusable helper methods and utility classes.

A more advanced technique is to implement self-healing locators. This involves creating a wrapper around your element-finding logic. If the primary selector (e.g., data-testid) fails, the wrapper can intelligently attempt to find the element using a series of fallback selectors (e.g., ID, name, CSS class). This can make your suite more resilient to minor, non-breaking UI changes.
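Here is a minimal sketch of the fallback wrapper described above. `find` is any function that returns an element, or `None`, for a given selector; it stands in for a hypothetical adapter over your driver. This sketch deliberately logs every fallback match. That keeps the healing visible, so the primary locator gets fixed instead of silently drifting.

```python
import logging
from typing import Callable, Optional, Sequence

logger = logging.getLogger("locators")

FindFn = Callable[[str], Optional[object]]  # returns the element, or None if absent


def find_with_fallbacks(find: FindFn, selectors: Sequence[str]) -> object:
    """Try each selector in priority order; log whenever a fallback is used."""
    for position, selector in enumerate(selectors):
        element = find(selector)
        if element is not None:
            if position > 0:
                # Surface the drift so the primary locator gets repaired.
                logger.warning("Primary locator %r failed; matched fallback %r",
                               selectors[0], selector)
            return element
    raise LookupError(f"No selector matched: {list(selectors)}")
```

A typical priority list would be `["[data-testid='submit-button']", "#submit", "[name='submit']"]`, with the most stable selector first.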

Finally, be disciplined about test suite growth. Every new test adds to the maintenance burden. Prioritize new tests based on code coverage gaps in high-risk areas and critical new features.

Common Software Test Automation Questions

Even with a robust strategy and modern tools, you will encounter technical challenges. Understanding common failure modes can help you proactively architect your suite for resilience.

Answering these key questions at the outset will differentiate a trusted automation suite from a neglected one.

What Is the Biggest Mistake to Avoid When Starting Out?

The most common mistake is attempting to automate 100% of test cases from the start. This "boil the ocean" approach inevitably leads to a complex, unmaintainable suite that fails to deliver value before the team loses momentum and stakeholder buy-in.

This strategy results in high initial costs, engineer burnout, and a loss of faith in automation's potential.

Instead, adopt an iterative, value-driven approach. Start with a small, well-defined scope: 5-10 critical-path "smoke" tests. Engineer a robust framework around this small set of tests, ensuring they run reliably in your CI/CD pipeline. This delivers early, demonstrable wins and builds the solid technical foundation required for future expansion.

How Should You Handle Intermittent Test Failures?

Flaky tests are the poison of any automation suite because they destroy trust. A deterministic, reliable signal is paramount.

When a flaky test is identified, immediately quarantine it by moving it to a separate, non-blocking pipeline job. This prevents it from impeding developer workflow.
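Historical results can identify flaky tests automatically. The sketch below uses one common heuristic, which is an assumption rather than the only definition: a test is flaky if it both passed and failed on the same commit, because the code did not change between those runs. A test that fails consistently is treated as a genuine failure and is not quarantined.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Run(NamedTuple):
    test: str
    commit: str
    passed: bool


def find_flaky(history: Iterable[Run]) -> set[str]:
    """Flag tests that both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in history:
        outcomes[(run.test, run.commit)].add(run.passed)
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}


def split_for_quarantine(tests: list[str], flaky: set[str]) -> tuple[list[str], list[str]]:
    """Stable tests stay in the blocking job; flaky ones move to a non-blocking job."""
    blocking = [t for t in tests if t not in flaky]
    quarantined = [t for t in tests if t in flaky]
    return blocking, quarantined
```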

Then, perform a technical root cause analysis. The cause is almost always one of three issues:

- Asynchronicity/Timing Issues: The test script is executing faster than the application UI can update.

- Test Data Dependency: Tests are not atomic. One test modifies data in a way that causes a subsequent test to fail.

- Environment Instability: The test environment itself is unreliable (e.g., slow network, overloaded database, flaky third-party APIs).

Refactor tests to use explicit, conditional waits instead of fixed sleeps. Ensure every test is completely self-contained: it should create its own required data before execution and clean up after itself on completion, so it produces the same result regardless of execution order or how many times it runs.
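Here is a sketch of a self-contained test fixture using Python's `contextlib.contextmanager`. `InMemoryUserStore` is a hypothetical stand-in for your application's API or database. The point is the pattern: each test creates uniquely named data and removes it in a `finally` block, so cleanup happens even when the test fails.

```python
import uuid
from contextlib import contextmanager
from typing import Iterator


class InMemoryUserStore:
    """Hypothetical stand-in for the application's user API or database."""
    def __init__(self) -> None:
        self.users: dict[str, dict] = {}

    def create(self, username: str) -> None:
        self.users[username] = {"cart": []}

    def delete(self, username: str) -> None:
        self.users.pop(username, None)


@contextmanager
def temporary_user(store: InMemoryUserStore) -> Iterator[str]:
    """Create a uniquely named user for one test and always remove it afterwards."""
    username = f"test-user-{uuid.uuid4().hex[:8]}"  # unique, so parallel tests never collide
    store.create(username)
    try:
        yield username
    finally:
        store.delete(username)  # cleanup runs even if the test body raises
```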

A small suite of 100% reliable tests is far more valuable than a large suite that is only 90% reliable. Consistency is the cornerstone of developer trust.

Can You Completely Automate UI Testing?

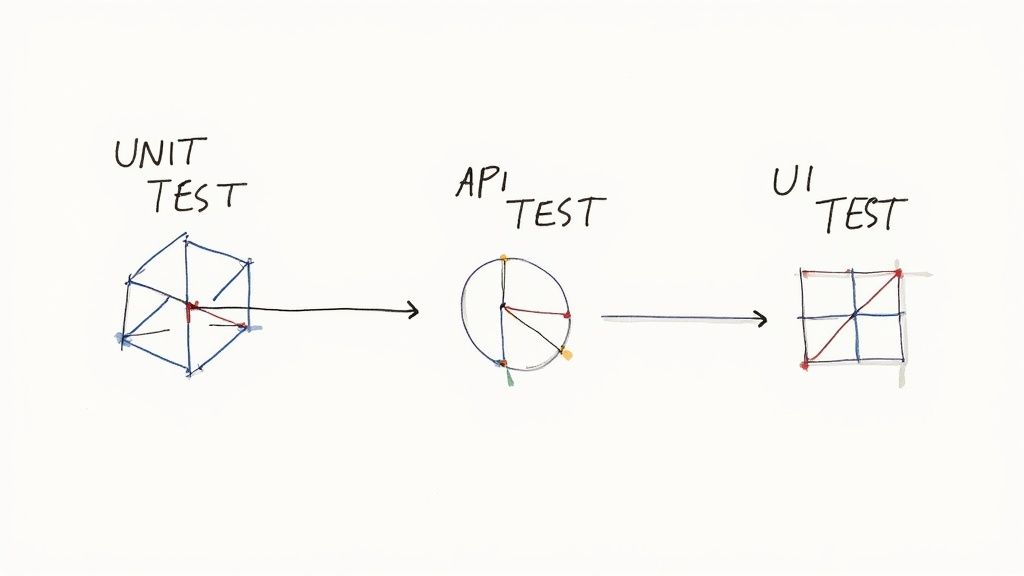

While approaching 100% UI automation coverage is technically possible, it is a strategic anti-pattern. UI tests are inherently brittle, slow to execute, and expensive to maintain.

The optimal strategy is the "testing pyramid." The base should be a large volume of fast, stable unit tests. The middle layer should consist of integration and API tests that validate business logic without the overhead of the UI. The top of the pyramid should be a small, carefully selected set of end-to-end UI tests that cover only the most critical user journeys.

Human-centric validation, such as exploratory testing, usability analysis, and aesthetic evaluation, cannot be automated. Reserve your manual testing efforts for these high-value activities where human expertise is irreplaceable.

At OpsMoon, we build the robust CI/CD pipelines and DevOps frameworks that bring your automation strategy to life. Our top-tier remote engineers can help you integrate, scale, and maintain your test suites for maximum impact. Plan your work with us for free and find the right expert for your team.