A Technical Guide to Engineering Productivity Measurement

A practical guide to engineering productivity measurement. Learn how to implement DORA and Flow metrics to optimize your software development lifecycle.

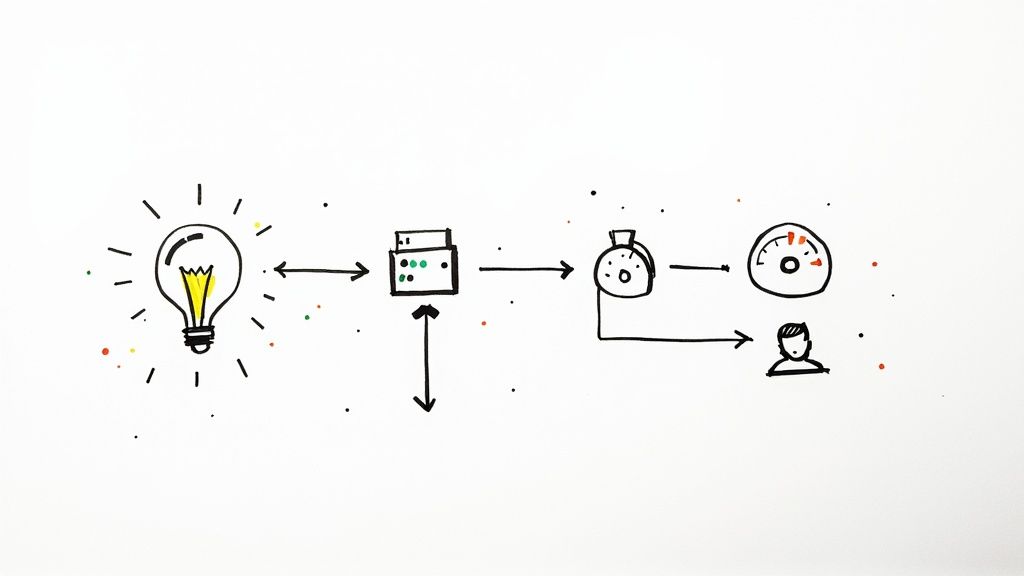

At a technical level, engineering productivity measurement is the quantitative analysis of the software development lifecycle (SDLC) to identify and eliminate systemic constraints. The goal is to optimize the flow of value from ideation to production. The practice has moved well beyond obsolete metrics like lines of code (LOC) or commit frequency.

Today, the focus is on a holistic system view, leveraging robust frameworks like DORA and Flow Metrics. These frameworks provide a multi-dimensional understanding of speed, quality, and business outcomes, enabling data-driven decisions for process optimization.

Why Legacy Metrics are Technically Flawed

For decades, attempts to quantify software development mirrored industrial-era manufacturing models, focusing on individual output. This paradigm is fundamentally misaligned with the non-linear, creative problem-solving nature of software engineering.

Metrics like commit volume or LOC fail because they measure activity, not value delivery. For example, judging a developer by commit count is analogous to judging a database administrator by the number of SQL queries executed; it ignores the impact and efficiency of those actions. This flawed approach incentivizes behaviors detrimental to building high-quality, maintainable systems.

The Technical Debt Caused by Vanity Metrics

These outdated, activity-based metrics don't just provide a noisy signal; they actively introduce system degradation. When the objective function is maximizing ticket closures or commits, engineers are implicitly encouraged to bypass best practices, leading to predictable negative outcomes:

- Increased Technical Debt: Rushing to meet a ticket quota often means skimping on unit test coverage, neglecting SOLID principles, or deploying poorly architected code. This technical debt accrues interest, manifesting as increased bug rates and slower future development velocity. Learn more about how to manage technical debt systematically.

- Gaming the System: Engineers can easily manipulate these metrics. A single, cohesive feature branch can be rebased into multiple small, atomic commits (`git rebase -i` followed by splitting commits) to inflate commit counts without adding any value. This pollutes the git history and provides no real signal of progress.

- Discouraging High-Leverage Activities: Critical engineering tasks like refactoring, mentoring junior engineers, conducting in-depth peer reviews, or improving CI/CD pipeline YAML files are disincentivized. These activities are essential for long-term system health but don't translate to high commit volumes or new LOC.

The history of software engineering is littered with attempts to find a simple productivity proxy. Early metrics like Source Lines of Code (SLOC) were debunked because they penalize concise, efficient code (e.g., replacing a 50-line procedural block with a 5-line functional equivalent would appear as negative productivity). For a deeper academic look, this detailed paper covers these historical challenges.

Shifting Focus From Activity to System Throughput

The fundamental flaw of vanity metrics is tracking activity instead of impact. Consider an engineer who spends a week deleting 2,000 lines of legacy code, replacing it with a single call to a well-maintained library. This act reduces complexity, shrinks the binary size, and eliminates a potential source of bugs.

Under legacy metrics, this is negative productivity (negative LOC). In reality, it is an extremely high-leverage engineering action that improves system stability and future velocity.

True engineering productivity measurement is about instrumenting the entire software delivery value stream to analyze its health and throughput, from `git commit` to customer value realization.

This is why frameworks like DORA and Flow Metrics are critical. They shift the unit of analysis from the individual engineer to the performance of the system as a whole.

Instead of asking, "What is the commit frequency per developer?" these frameworks help us answer the questions that drive business value: "What is our deployment pipeline's cycle time?" and "What is the change failure rate of our production environment?"

Mastering DORA Metrics for Elite Performance

To move beyond activity tracking and measure system-level outcomes, a balanced metrics framework is essential. The industry gold standard is DORA (DevOps Research and Assessment). Its four key metrics provide a data-driven view of software delivery performance that is difficult to game.

These metrics create a necessary tension between velocity and stability. DORA is not a tool for individual performance evaluation but a diagnostic suite for the entire engineering system, from local development environments to production.

The Two Pillars: Speed and Throughput

The first two DORA metrics quantify the velocity of your value stream. They answer the critical question: "What is the throughput of our delivery pipeline?"

- Deployment Frequency: This metric measures the rate of successful deployments to production. Elite teams deploy on-demand, often multiple times per day. High frequency indicates a mature CI/CD pipeline (`.gitlab-ci.yml`, `Jenkinsfile`), extensive automated testing, and a culture of small, incremental changes (trunk-based development). It is a proxy for team confidence and process automation.

- Lead Time for Changes: This measures the median time from a code commit (`git commit`) to its successful deployment in production. It reflects the efficiency of the entire SDLC, including code review, CI build/test cycles, and deployment stages. A short lead time (less than a day for elite teams) means there is minimal "wait time" in the system. Optimizing software release cycles directly reduces this metric.
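As a rough illustration, both velocity metrics can be derived from a list of deployment records. The sketch below is only one possible approach: the `Deployment` record shape, the function names, and the sample timestamps are assumptions made for this example, and in practice the timestamps would come from your Git host and CI/CD system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """Hypothetical record: one successful production deployment of one commit."""
    commit_time: datetime
    deploy_time: datetime


def deployment_frequency(deployments: list[Deployment], window_days: int) -> float:
    """Average number of successful production deployments per day in the window."""
    return len(deployments) / window_days


def median_lead_time(deployments: list[Deployment]) -> timedelta:
    """Median time from commit to production deployment."""
    seconds = [(d.deploy_time - d.commit_time).total_seconds() for d in deployments]
    return timedelta(seconds=median(seconds))


# Made-up sample data covering a 7-day window.
sample = [
    Deployment(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 11, 0)),   # 2 h lead time
    Deployment(datetime(2024, 1, 2, 10, 0), datetime(2024, 1, 2, 15, 0)),  # 5 h lead time
    Deployment(datetime(2024, 1, 3, 8, 0), datetime(2024, 1, 4, 4, 0)),    # 20 h lead time
]

print("Deployments per day:", deployment_frequency(sample, window_days=7))
print("Median lead time:", median_lead_time(sample))
```

Using the median rather than the mean keeps a single slow change (the 20-hour one here) from dominating the figure.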

The Counterbalance: Stability and Quality

Velocity without quality results in a system that rapidly accumulates technical debt and user-facing failures. The other two DORA metrics provide the stability counterbalance, answering: "How reliable is the value we deliver?"

The power of DORA lies in its inherent balance. Optimizing for Deployment Frequency without monitoring Change Failure Rate is like increasing a web server's request throughput without monitoring its error rate. You are simply accelerating failure delivery.

Here are the two stability metrics:

- Change Failure Rate (CFR): This is the percentage of deployments that result in a production failure requiring remediation (e.g., a hotfix, rollback, or patch). A low CFR (under 15% for elite teams) is a strong indicator of quality engineering practices, such as comprehensive test automation (unit, integration, E2E), robust peer reviews, and effective feature flagging.

- Mean Time to Restore (MTTR): When a failure occurs, this metric tracks the average time to restore service. MTTR is a pure measure of your incident response and system resilience. Elite teams restore service in under an hour, which demonstrates strong observability (logging, metrics, tracing), well-defined incident response protocols (runbooks), and automated recovery mechanisms (e.g., canary deployments with automatic rollback).
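The two stability metrics reduce to simple arithmetic once deployments and incidents are counted. The following is a minimal sketch under stated assumptions: the function names and the sample figures are hypothetical, and what counts as a "failed" deployment is a definition your team has to agree on. It reports both the mean and the median restore time, since one long outage can skew the mean.

```python
from datetime import datetime, timedelta
from statistics import mean, median


def change_failure_rate(total_deployments: int, failed_deployments: int) -> float:
    """Percentage of deployments that required remediation (hotfix, rollback, patch)."""
    if total_deployments == 0:
        return 0.0
    return 100.0 * failed_deployments / total_deployments


def restore_durations(incidents: list[tuple[datetime, datetime]]) -> list[timedelta]:
    """Time from incident start to service restoration, one entry per incident."""
    return [restored - started for started, restored in incidents]


# Made-up sample data: (incident started, service restored).
incidents = [
    (datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 30)),  # 30 min
    (datetime(2024, 1, 9, 14, 0), datetime(2024, 1, 9, 14, 45)),  # 45 min
    (datetime(2024, 1, 20, 2, 0), datetime(2024, 1, 20, 5, 0)),   # 3 h
]

seconds = [d.total_seconds() for d in restore_durations(incidents)]
mean_restore = timedelta(seconds=mean(seconds))
median_restore = timedelta(seconds=median(seconds))

print("CFR (%):", change_failure_rate(total_deployments=40, failed_deployments=4))
print("Mean time to restore:", mean_restore)
print("Median time to restore:", median_restore)
```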

The Four DORA Metrics Explained

| Metric | Measures | What It Tells You | Performance Level (Elite) |
|---|---|---|---|
| Deployment Frequency | How often code is successfully deployed to production. | Your team's delivery cadence and pipeline efficiency. | On-demand (multiple times per day) |
| Lead Time for Changes | The time from code commit to production deployment. | The overall efficiency of your development and release process. | Less than one day |
| Change Failure Rate | The percentage of deployments causing production failures. | The quality and stability of your releases. | 0-15% |
| Mean Time to Restore | How long it takes to recover from a production failure. | The effectiveness of your incident response and recovery process. | Less than one hour |

Analyzing these as a system prevents local optimization at the expense of global system health.

Gathering DORA Data From Your Toolchain

The data required for DORA metrics already exists within your development toolchain. The task is to aggregate and correlate data from these disparate sources.

Here's how to instrument your system to collect the data:

- Git Repository: Use git hooks or API calls to platforms like GitHub or GitLab to capture commit timestamps and pull request merge events. This is the starting point for Lead Time for Changes. Running `git log` can provide the raw data.

- CI/CD Pipeline: Your CI/CD server (e.g., Jenkins, GitLab CI, GitHub Actions) logs every deployment event. Successful production deployments provide the data for Deployment Frequency. Failed deployments are a potential input for CFR.

- Incident Management Platform: Systems like PagerDuty or Opsgenie log incident creation (`alert_triggered`) and resolution (`incident_resolved`) timestamps. The delta between these is your raw data for MTTR.

- Project Management Tools: By tagging commits with ticket IDs (e.g., `git commit -m "feat(auth): Implement OAuth2 flow [PROJ-123]"`), you can link deployments back to work items in Jira. This allows you to correlate production incidents with the specific changes that caused them, feeding into your Change Failure Rate.
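To make the commit-to-ticket link concrete, the sketch below pulls bracketed ticket IDs out of commit messages. It assumes the `[PROJ-123]` convention shown in the last bullet; the regular expression, the function names, and the second and third sample messages are illustrative choices, not part of any tool's API.

```python
import re

# Matches a Jira-style key in square brackets, e.g. "[PROJ-123]".
# Assumption: project keys are uppercase letters/digits followed by "-<number>".
TICKET_PATTERN = re.compile(r"\[([A-Z][A-Z0-9]*-\d+)\]")


def extract_ticket_ids(commit_message: str) -> list[str]:
    """Return every bracketed ticket ID found in a commit message."""
    return TICKET_PATTERN.findall(commit_message)


def tickets_in_deployment(commit_messages: list[str]) -> set[str]:
    """Collect the distinct tickets shipped in one deployment."""
    tickets: set[str] = set()
    for message in commit_messages:
        tickets.update(extract_ticket_ids(message))
    return tickets


# Commit subjects for one hypothetical deployment.
messages = [
    "feat(auth): Implement OAuth2 flow [PROJ-123]",
    "fix(auth): Handle expired refresh tokens [PROJ-130]",
    "chore: bump dependencies",  # no ticket reference
]

print(sorted(tickets_in_deployment(messages)))
```

Commits without a ticket reference simply contribute nothing, which also makes it easy to report how much deployed work is untracked.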

Automating this data aggregation builds a real-time dashboard of your engineering system's health. This enables a tight feedback loop: measure the system, identify a constraint (e.g., long PR review times), implement a process experiment (e.g., setting a team-wide SLO for PR reviews), and measure again to validate the outcome.

Using Flow Metrics to See the Whole System

While DORA metrics provide a high-resolution view of your deployment pipeline, Flow Metrics zoom out to analyze the entire value stream, from ideation to delivery.

Analogy: DORA measures the efficiency of a factory's final assembly line and shipping dock. Flow Metrics track the entire supply chain, from raw material procurement to final customer delivery, identifying bottlenecks at every stage.

This holistic perspective is critical because it exposes "wait states"—the periods where work is idle in a queue. Optimizing just the deployment phase is a local optimization if the primary constraint is a week-long wait for product approval before development even begins.

This visualization highlights the balance required in a healthy engineering system: rapid delivery must be paired with rapid recovery to ensure that increased velocity does not degrade system stability.

The Four Core Flow Metrics

Flow Metrics quantify the movement of work items (features, defects, tech debt, risks) through your system, making invisible constraints visible.

- Flow Velocity: The number of work items completed per unit of time (e.g., items per sprint or per week). It is a measure of throughput, answering, "What is our completion rate?"

- Flow Time: The total elapsed time a work item takes to move from 'work started' to 'work completed' (e.g., from `In Progress` to `Done` on a Kanban board). It measures the end-to-end cycle time, answering, "How long does a request take to be fulfilled?"

- Flow Efficiency: The ratio of active work time to total Flow Time. If a feature had a Flow Time of 10 days but only required two days of active coding, reviewing, and testing, its Flow Efficiency is 20%. The other 80% was idle wait time, indicating a major systemic bottleneck.

- Flow Load: The number of work items currently in an active state (Work In Progress or WIP). According to Little's Law, `Average Flow Time = Average WIP / Average Throughput`. A consistently high Flow Load indicates multitasking and context switching, which increases the Flow Time for all items.
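The Little's Law relationship is easy to check numerically. The snippet below is a small sketch with made-up numbers (the function name and the WIP and throughput values are assumptions for illustration); it shows that halving WIP at constant throughput halves the expected Flow Time.

```python
def average_flow_time(average_wip: float, average_throughput: float) -> float:
    """Little's Law: Average Flow Time = Average WIP / Average Throughput.

    The result is in the time unit of the throughput (e.g. weeks if
    throughput is measured in items per week).
    """
    if average_throughput <= 0:
        raise ValueError("average_throughput must be positive")
    return average_wip / average_throughput


# Hypothetical team completing 4 items per week.
high_wip_weeks = average_flow_time(average_wip=12, average_throughput=4)
low_wip_weeks = average_flow_time(average_wip=6, average_throughput=4)

print("Flow Time with 12 items in progress (weeks):", high_wip_weeks)
print("Flow Time with 6 items in progress (weeks):", low_wip_weeks)
```

Little's Law describes long-run averages of a stable system, so treat the output as a planning estimate rather than a prediction for any single work item.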

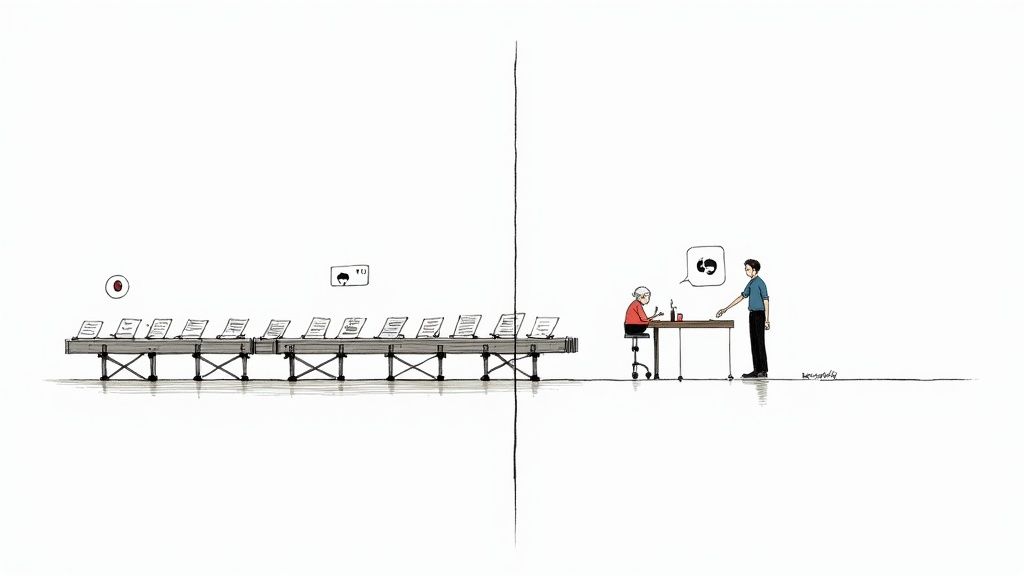

Flow Metrics are not about pressuring individuals to work faster. They are about optimizing the system to reduce idle time and improve predictability, showing exactly where work gets stuck.

Mapping Your Value Stream to Get Started

You can begin tracking Flow Metrics with your existing project management tool. The first step is to accurately model your value stream.

- Define Your Workflow States: Map the explicit stages in your process onto columns on a Kanban or Scrum board. A typical workflow is: `Backlog` -> `In Progress` -> `Code Review` -> `QA/Testing` -> `Ready for Deploy` -> `Done`. Be as granular as necessary to reflect reality.

- Classify Work Item Types: Use labels or issue types to categorize work (e.g., Feature, Defect, Risk, Debt). This helps you analyze how effort is distributed. Are you spending 80% of your time on unplanned bug fixes? That's a critical insight.

- Start Tracking Time in State: Most modern tools (like Jira or Linear) automatically log timestamps for transitions between states. This is the raw data you need. If not, you must manually record the entry/exit time for each work item in each state.

- Calculate the Metrics: With this time-series data, the calculations become straightforward. Flow Time is `timestamp(Done) - timestamp(In Progress)`. Flow Velocity is `COUNT(items moved to Done)` over a time period. Flow Load is `COUNT(items in any active state)` at a given time. Flow Efficiency is `SUM(time in active states) / Flow Time`.
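The four calculations above can be sketched against a simple transition log. Everything below is an assumption made for illustration: the `(state, timestamp)` log shape, the choice of which columns count as hands-on work (`WORKING_STATES`) versus in-flight (`IN_FLIGHT_STATES`), and the sample ticket. Real tools export this history in their own formats.

```python
from datetime import datetime, timedelta

# Assumption: columns where someone is actively working on the item.
WORKING_STATES = {"In Progress", "Code Review", "QA/Testing"}
# Assumption: columns that count toward Flow Load (started but not finished).
IN_FLIGHT_STATES = WORKING_STATES | {"Ready for Deploy"}

Transition = tuple[str, datetime]  # (state entered, time of entry)


def time_in_states(transitions: list[Transition]) -> dict[str, timedelta]:
    """Total time spent in each state; the final state has no exit time."""
    totals: dict[str, timedelta] = {}
    for (state, entered), (_, left) in zip(transitions, transitions[1:]):
        totals[state] = totals.get(state, timedelta()) + (left - entered)
    return totals


def flow_time(transitions: list[Transition]) -> timedelta:
    """timestamp(Done) - timestamp(In Progress)."""
    started = next(ts for state, ts in transitions if state == "In Progress")
    done = next(ts for state, ts in transitions if state == "Done")
    return done - started


def flow_efficiency(transitions: list[Transition]) -> float:
    """SUM(time in working states) / Flow Time, as a fraction from 0 to 1."""
    totals = time_in_states(transitions)
    working = sum((d for s, d in totals.items() if s in WORKING_STATES), timedelta())
    return working / flow_time(transitions)


def flow_velocity(done_times: list[datetime], start: datetime, end: datetime) -> int:
    """COUNT(items moved to Done) with start <= timestamp < end."""
    return sum(start <= t < end for t in done_times)


def flow_load(current_states: list[str]) -> int:
    """COUNT(items currently in an in-flight state)."""
    return sum(state in IN_FLIGHT_STATES for state in current_states)


# Made-up history for a single ticket.
ticket = [
    ("In Progress", datetime(2024, 1, 1, 9, 0)),
    ("Code Review", datetime(2024, 1, 2, 9, 0)),
    ("QA/Testing", datetime(2024, 1, 2, 21, 0)),
    ("Ready for Deploy", datetime(2024, 1, 3, 9, 0)),
    ("Done", datetime(2024, 1, 6, 9, 0)),
]

print("Flow Time:", flow_time(ticket))
print("Flow Efficiency:", round(flow_efficiency(ticket), 2))
print("Flow Load:", flow_load(["Backlog", "In Progress", "Code Review", "Done"]))
```

Note that this sketch counts all time in a working column as active. If tickets queue inside `Code Review` before anyone picks them up, splitting that column into a waiting state and a working state gives a more honest efficiency figure.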

A Practical Example

A team implements a new user authentication feature. The ticket enters In Progress on Monday at 9 AM. The developer completes the code and moves it to Code Review on Tuesday at 5 PM.

The ticket sits in the Code Review queue for 48 hours until Thursday at 5 PM, when a review is completed in two hours. It then waits in the QA/Testing queue for another 24 hours before being picked up.

The final Flow Time was over five days, but the total active time (coding + review + testing) was less than two days. The Flow Efficiency is ~35%, immediately highlighting that the primary constraints are wait times in the review and QA queues, not development speed.

Without Flow Metrics, this systemic delay would be invisible. With them, the team can have a data-driven retrospective about concrete solutions, such as implementing a team-wide SLO for code review turnaround or dedicating specific time blocks for QA.

Choosing Your Engineering Intelligence Tools

Once you understand DORA and Flow Metrics, the next step is automating their collection and analysis. The market for engineering productivity measurement tools is extensive, ranging from comprehensive platforms to specialized CI/CD plugins and open-source solutions. The key is to select a tool that aligns with your specific goals and existing tech stack.

How to Evaluate Your Options

Choosing a tool is a strategic decision that depends on your team's scale, budget, and technical maturity. A startup aiming to shorten its lead time has different needs than a large enterprise trying to visualize dependencies across 50 microservices teams.

To make an informed choice, ask these questions:

- What is our primary objective? Are we solving for slow deployment cycles (DORA)? Are we trying to identify system bottlenecks (Flow)? Or are we focused on improving the developer experience (e.g., reducing build times)? Define your primary problem statement first.

- What is the integration overhead? The tool must seamlessly integrate with your source code repositories (GitHub, GitLab), CI/CD pipelines (Jenkins, CircleCI), and project management systems (Jira, Linear). Evaluate the ease of setup and the quality of the integrations. A tool that requires significant manual configuration or data mapping will quickly become a burden.

- Does it provide actionable insights or just raw data? A dashboard full of charts is not useful. The best tools surface correlations and highlight anomalies, turning data into actionable recommendations. The goal is to facilitate team-level discussions, not create analysis paralysis for managers.

Before committing, consult resources like a comprehensive comparison of top AI-powered analytics tools to understand the current market landscape.

Comparison of Productivity Tooling Approaches

The tooling landscape can be broken down into three main categories. Each offers a different set of trade-offs in terms of cost, flexibility, and ease of use.

| Tool Category | Pros | Cons | Best For |
|---|---|---|---|
| Comprehensive Platforms | All-in-one dashboards, automated insights, connects data sources for you. | Higher cost, can be complex to configure initially. | Teams wanting a complete, out-of-the-box solution for DORA, Flow, and developer experience metrics. |
| CI/CD Analytics Plugins | Easy to set up, provides focused data on deployment pipeline health. | Limited scope, doesn't show the full value stream. | Teams focused specifically on optimizing their build, test, and deployment processes. |
| DIY & Open-Source Scripts | Highly customizable, low to no cost for the software itself. | Requires significant engineering time to build and maintain, no support. | Teams with spare engineering capacity and very specific, unique measurement needs. |

Your choice should be guided by your available resources and the specific problems you aim to solve.

Many comprehensive platforms excel at data visualization, which is critical for making complex data understandable.

This dashboard from LinearB, for example, correlates data from Git, project management, and CI/CD tools to present unified metrics like cycle time. This allows engineering leaders to move from isolated data points to a holistic view of system health, identifying trends and outliers that would otherwise be invisible.

Ultimately, the best tool is one that integrates smoothly into your workflow and presents data in a way that sparks blameless, constructive team conversations. For a related perspective, our application performance monitoring tools comparison covers tools for monitoring production systems. The objective is always empowerment, not surveillance.

Building a Culture of Continuous Improvement

Instrumenting your SDLC and collecting data is a technical exercise. The real challenge of engineering productivity measurement is fostering a culture where this data is used for system improvement, not individual judgment.

Without the right cultural foundation, even the most sophisticated metrics will be gamed or ignored. The objective is to transition from a top-down, command-and-control approach to a decentralized model where teams own their processes and use data to drive their own improvements.

This begins with an inviolable principle: metrics describe the performance of the system, not the people within it. They must never be used in performance reviews, for stack ranking, or for comparing individual engineers. This is the fastest way to destroy psychological safety and incentivize metric manipulation over genuine problem-solving.

Data is a flashlight for illuminating systemic problems—like pipeline bottlenecks, tooling friction, or excessive wait states. It is not a hammer for judging individuals.

This mindset shifts the entire conversation from blame ("Why was your lead time so high?") to blameless problem-solving ("Our lead time increased by 15% last sprint; let's look at the data to see which part of the process is slowing down.").

Fostering Psychological Safety

Productive, data-informed conversations require an environment of high psychological safety, where engineers feel secure enough to ask questions, admit mistakes, and challenge the status quo without fear of reprisal.

Without it, your metrics become a measure of how well your team can hide problems.

Leaders must actively cultivate this environment:

- Celebrate Learning from Failures: When a deployment fails (increasing CFR), treat it as a valuable opportunity to improve the system (e.g., "This incident revealed a gap in our integration tests. How can we improve our test suite to catch this class of error in the future?").

- Encourage Questions and Dissent: During retrospectives, actively solicit counter-arguments and different perspectives. Make it clear that challenging assumptions is a critical part of the engineering process.

- Model Vulnerability: Leaders who openly discuss their own mistakes and misjudgments create an environment where it's safe for everyone to do the same.

Driving Change with Data-Informed Retrospectives

The team retrospective is the ideal forum for applying this data. Metrics provide an objective, factual starting point that elevates the conversation beyond subjective feelings.

For example, a vague statement like, "I feel like code reviews are slow," transforms into a data-backed observation: "Our Flow Efficiency was 25% this sprint, and the data shows that the average ticket spent 48 hours in the 'Code Review' queue. What experiments can we run to reduce this wait time?"

This approach enables the team to:

- Identify a specific, measurable problem.

- Hypothesize a solution (e.g., "We will set a team SLO of reviewing every PR within 24 hours of it being opened, and we will clear pending reviews before starting new work.").

- Measure the impact of the experiment in the next sprint using the same metric.
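The measurement step of this loop can be as simple as comparing the same metric across two sprints. The sketch below assumes you have per-ticket review wait times in hours for a baseline sprint and an experiment sprint; the function names, the 24-hour default threshold, and the sample values are all hypothetical.

```python
from statistics import mean


def percent_change(baseline_hours: list[float], experiment_hours: list[float]) -> float:
    """Percentage change in mean review wait; a negative value is an improvement."""
    before = mean(baseline_hours)
    after = mean(experiment_hours)
    return 100.0 * (after - before) / before


def slo_breach_rate(wait_hours: list[float], slo_hours: float = 24.0) -> float:
    """Fraction of tickets whose review wait exceeded the SLO."""
    return sum(h > slo_hours for h in wait_hours) / len(wait_hours)


# Made-up review wait times (hours) per ticket.
baseline = [48.0, 40.0, 56.0]    # sprint before the experiment
experiment = [20.0, 24.0, 28.0]  # sprint with the review SLO in place

print("Change in mean review wait (%):", percent_change(baseline, experiment))
print("Share of tickets over SLO:", slo_breach_rate(experiment))
```

With only a handful of tickets per sprint, a change like this is suggestive rather than conclusive, so it is worth repeating the comparison over several sprints before declaring the experiment a success.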

This creates a scientific, iterative process of continuous improvement. To further this, teams can explore platforms that reduce DevOps overhead, freeing up engineering cycles for core product development.

Productivity improvement is a marathon. On a global scale, economies have only closed their productivity gaps by an average of 0.5% per year since 2010, highlighting that meaningful gains require sustained effort and systemic innovation. You can explore the full findings on global productivity trends for a macroeconomic perspective. By focusing on blameless, team-driven improvement, you build a resilient culture that can achieve sustainable gains.

Common Questions About Measuring Productivity

Introducing engineering productivity measurement will inevitably raise valid concerns from your team. Addressing these questions transparently is essential for building the trust required for success.

Can You Measure Without a Surveillance Culture?

This is the most critical concern. The fear of "Big Brother" monitoring every action is legitimate. The only effective counter is an absolute, publicly stated commitment to a core principle: we measure systems, not people.

DORA and Flow metrics are instruments for diagnosing the health of the delivery pipeline, not for evaluating individual engineers. They are used to identify systemic constraints, such as a slow CI/CD pipeline or a cumbersome code review process that impacts everyone.

These metrics should never be used to create leaderboards or be factored into performance reviews. Doing so creates a toxic culture and incentivizes gaming the system.

The goal is to reframe the conversation from "Who is being slow?" to "What parts of our system are creating drag?" This transforms data from a tool of judgment into a shared instrument for blameless, team-owned improvement.

Making this rule non-negotiable is the foundation of the psychological safety needed for this initiative to succeed.

How Can Metrics Handle Complex Work?

Engineers correctly argue that software development is not an assembly line. It involves complex, research-intensive, and unpredictable work. How can metrics capture this nuance?

This is precisely why modern frameworks like DORA and Flow were designed. They abstract away from the content of the work and instead measure the performance of the system that delivers that work.

- DORA is agnostic to task complexity. It measures the velocity and stability of your delivery pipeline, whether the change being deployed is a one-line bug fix or a 10,000-line new microservice.

- Flow Metrics track how smoothly any work item, whether a feature, defect, or technical debt task, moves through your defined workflow. They highlight the "wait time" where work is idle, which is a source of inefficiency regardless of the task's complexity.

These frameworks do not attempt to measure the cognitive load or creativity of a single task. They measure the predictability, efficiency, and reliability of your overall delivery process.

When Can We Expect to See Results?

Leaders will want a timeline for ROI. It is crucial to set expectations correctly. Your initial data is a baseline measurement, not a grade. It provides a quantitative snapshot of your current system performance.

Meaningful, sustained improvement typically becomes visible within one to two quarters. Lasting change is not instantaneous; it is the result of an iterative cycle:

- Analyze the baseline data to identify the primary bottleneck.

- Formulate a hypothesis and run a small, targeted process experiment.

- Measure again to see if the experiment moved the metric in the desired direction.

This continuous loop of hypothesis, experiment, and validation is what drives sustainable momentum and creates a high-performing engineering culture.

Ready to move from theory to action? OpsMoon provides the expert DevOps talent and strategic guidance to help you implement a healthy, effective engineering productivity measurement framework. Start with a free work planning session to build your roadmap. Find your expert today at opsmoon.com.