Top Database Migration Best Practices for DevOps Success

Learn key database migration best practices to ensure a smooth, secure, and efficient process. Essential tips for DevOps teams.

Database migration is one of the most high-stakes operations a DevOps team can undertake. A single misstep can lead to irreversible data loss, extended downtime, and severe performance degradation, impacting the entire business. As systems evolve and scale, migrating from legacy on-premise databases to modern cloud-native solutions, or shifting between different database technologies (e.g., from SQL to NoSQL), has become a routine challenge. However, routine does not mean simple.

Success requires more than just moving data; it demands a strategic, technical, and methodical approach that is fully integrated into the DevOps lifecycle. Executing a flawless migration is a non-negotiable skill that separates high-performing engineering teams from the rest. This article moves beyond generic advice and dives deep into eight technical database migration best practices essential for any engineering team.

We will cover the granular details of planning, executing, and validating a migration, providing actionable steps, technical considerations, and practical examples. From comprehensive data assessment and incremental migration strategies to robust testing, security, and rollback planning, you will gain the insights needed to navigate this complex process with precision and confidence.

1. Comprehensive Data Assessment and Pre-Migration Planning

The most critical phase of any database migration happens before a single byte of data is moved. A comprehensive data assessment is not a cursory check; it's a deep, technical audit of your source database ecosystem. This foundational step is arguably the most important of all database migration best practices, as it prevents scope creep, uncovers hidden dependencies, and mitigates risks that could otherwise derail the entire project.

The objective is to create a complete data dictionary and a dependency graph of every database object. This goes far beyond just tables and columns. It involves a meticulous cataloging of views, stored procedures, triggers, user-defined functions (UDFs), sequences, and scheduled jobs. This granular understanding forms the bedrock of a successful migration strategy.

How It Works: A Technical Approach

The process involves two primary activities: schema discovery and data profiling.

- Schema Discovery: Use automated tools to inventory all database objects. For JDBC-compliant databases like Oracle or PostgreSQL,

SchemaSpyis an excellent open-source tool that generates a visual and interactive map of your schema, including entity-relationship (ER) diagrams and dependency chains. For others, you can run native catalog queries. For example, in SQL Server, you would querysys.objectsandsys.sql_modulesto extract definitions for procedures and functions. - Data Profiling: Once you have the schema, you must understand the data within it. This means analyzing data types, nullability, character sets, and data distribution. For instance, you might discover a

VARCHAR(255)column in your source MySQL database that mostly contains integers, making it a candidate for a more efficientINTtype in the target. This analysis directly informs schema conversion, such as mapping a PostgreSQLTIMESTAMP WITH TIME ZONEto a SQL ServerDATETIMEOFFSET.

Key Insight: A successful assessment transforms abstract migration goals into a concrete, technical roadmap. It helps you accurately estimate data transfer times, identify complex inter-object dependencies that could break applications, and pinpoint data quality issues like orphaned records or inconsistent formatting before they become production problems.

Actionable Tips for Implementation

- Document Everything Centrally: Use a tool like Confluence or a Git-based wiki to create a single source of truth for all findings, including schema maps, data type mappings, and identified risks.

- Create Data Lineage Diagrams: Visually map how data flows from its source through various transformations and into its final tables. This is invaluable for understanding the impact of changing a single view or stored procedure.

- Establish Clear Success Metrics: Before starting, define what success looks like. This includes technical metrics (e.g., less than 1% data validation errors, p99 query latency under 200ms) and business metrics (e.g., zero downtime for critical services).

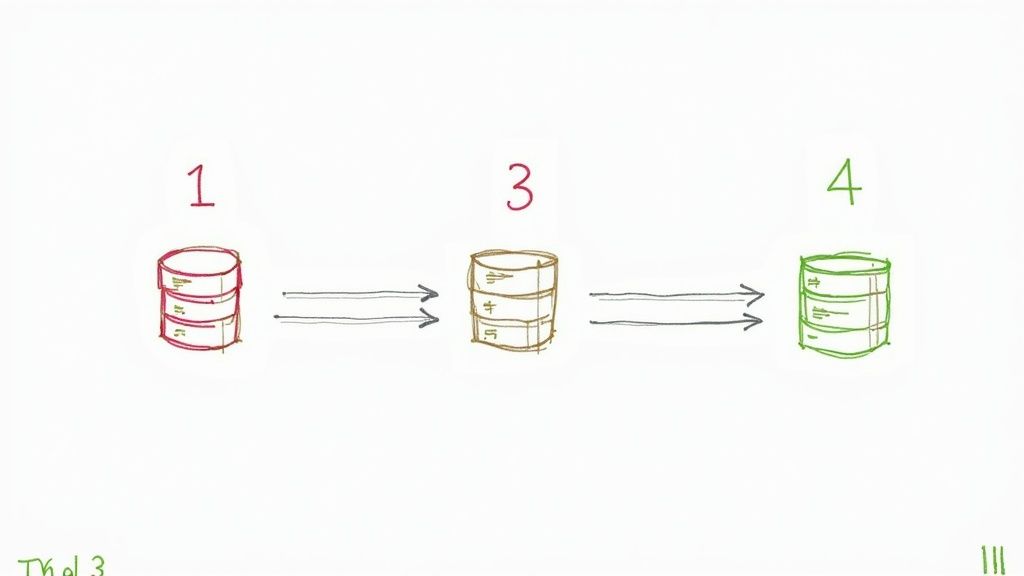

2. Incremental Migration Strategy

Attempting to migrate an entire database in a single, high-stakes event, often called a "big bang" migration, is fraught with risk. An incremental migration strategy, one of the most critical database migration best practices, mitigates this danger by breaking the process into smaller, manageable, and verifiable phases. This approach treats the migration not as one monolithic task but as a series of controlled mini-migrations, often organized by module, business function, or even by individual tables.

This method, often associated with Martin Fowler's "Strangler Fig Pattern," allows teams to validate each phase independently, reducing the blast radius of potential failures. Tech giants like Stripe and GitHub have famously used this technique to move massive, mission-critical datasets with minimal disruption, demonstrating its effectiveness at scale.

How It Works: A Technical Approach

An incremental migration typically involves running the source and target databases in parallel while gradually shifting traffic and data. This requires a robust mechanism for data synchronization and a routing layer to direct application requests.

- Phased Data Movement: Instead of moving all data at once, you transfer logical chunks. For example, you might start with a low-risk, read-only dataset like user profiles. Once that is successfully moved and validated, you proceed to a more complex module like product catalogs, and finally to high-transactional data like orders or payments.

- Application-Level Routing: A proxy layer or feature flags within the application code are used to control which database serves which requests. Initially, all reads and writes go to the source. Then, you might enable dual-writing, where new data is written to both databases simultaneously. Gradually, reads for specific data segments are redirected to the new database until it becomes the primary system of record. For instance, using a feature flag, you could route

GET /api/usersto the new database whilePOST /api/ordersstill points to the old one.

Key Insight: Incremental migration transforms a high-risk, all-or-nothing operation into a low-risk, iterative process. It provides continuous feedback loops, allowing your team to learn and adapt with each phase. This drastically improves the chances of success and significantly reduces the stress and potential business impact of a large-scale cutover.

Actionable Tips for Implementation

- Start with Read-Only or Low-Impact Data: Begin your migration with the least critical data segments. This allows your team to test the migration pipeline, tools, and validation processes in a low-risk environment before tackling business-critical data.

- Implement a Data Reconciliation Layer: Create automated scripts or use tools to continuously compare data between the source and target databases. This "data-diff" mechanism is essential for ensuring consistency and catching discrepancies early during the parallel-run phase.

- Maintain Detailed Migration Checkpoints: For each phase, document the exact steps taken, the data moved, the validation results, and any issues encountered. This creates an auditable trail and provides a clear rollback point if a phase fails, preventing a complete restart.

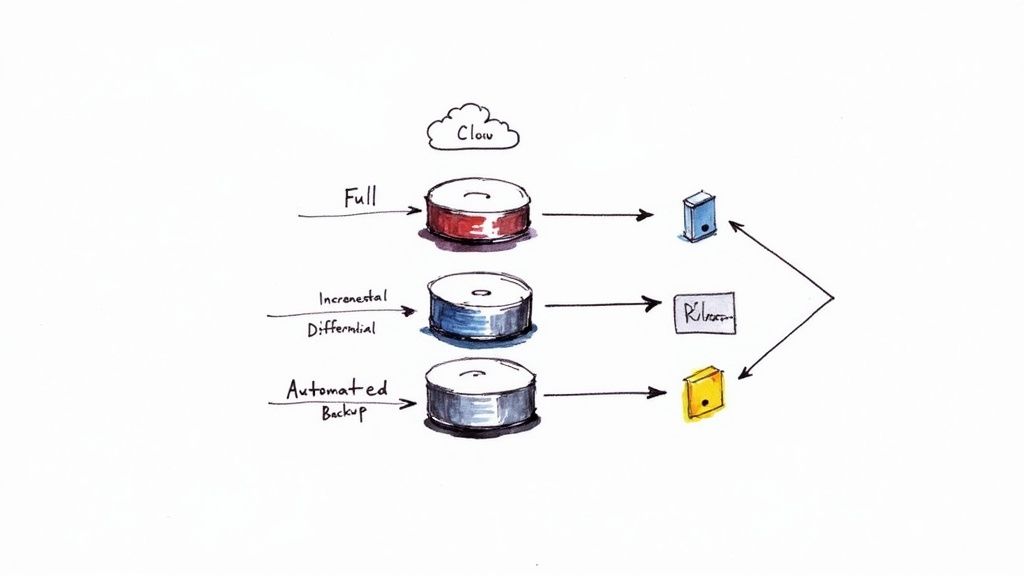

3. Robust Backup and Recovery Strategy

A migration without a bulletproof backup and recovery plan is a high-stakes gamble. This strategy is not merely about creating a pre-migration backup; it’s a continuous, multi-layered process that provides a safety net at every stage of the project. It ensures that no matter what fails, whether due to data corruption, network issues, or unexpected schema incompatibilities, you have a verified, immediate path back to a known-good state. This practice transforms a potential catastrophe into a manageable incident.

The core principle is to treat backups as the primary artifact of your recovery plan. A backup is useless if it cannot be restored successfully. Therefore, this best practice emphasizes not just the creation of backups before, during, and after the migration, but the rigorous testing of restore procedures to guarantee their viability under pressure.

How It Works: A Technical Approach

The implementation involves creating a hierarchy of backups and a documented, rehearsed recovery playbook.

- Multi-Point Backups: Before the migration cutover begins, take a full, cold backup of the source database. During a phased or continuous migration, implement point-in-time recovery (PITR) capabilities. For example, PostgreSQL's continuous archiving (using

archive_command) or SQL Server's full recovery model allows you to restore the database to any specific moment before a failure occurred. This is critical for minimizing data loss during the migration window. - Restore Drills: Regularly and systematically test your restore procedures. This means spinning up a separate, isolated environment, restoring your latest backup to it, and running a suite of validation scripts to check for data integrity and application connectivity. For instance, you could automate a weekly restore test using a CI/CD pipeline that provisions temporary infrastructure, runs the restore command (e.g.,

pg_restoreorRMAN), and executes data validation queries.

Key Insight: The true value of your backup strategy is measured by your confidence in your ability to restore, not by the number of backups you have. A tested recovery plan is one of the most vital database migration best practices because it provides the operational confidence needed to execute a complex migration, knowing you can roll back cleanly and quickly if needed.

Actionable Tips for Implementation

- Test Restores, Not Just Backups: Schedule regular, automated drills to restore backups to a staging environment. Validate data integrity and application functionality against the restored database.

- Geographically Distribute Backups: Store backup copies in different geographic regions, especially when migrating to the cloud. Services like AWS S3 cross-region replication or Azure Geo-redundant storage (GRS) automate this process.

- Automate Backup Verification: Use database-native tools or scripts to perform integrity checks on your backup files immediately after creation. For SQL Server, use

RESTORE VERIFYONLY; for Oracle, use theVALIDATEcommand in RMAN. - Document and Practice Recovery Playbooks: Maintain clear, step-by-step documentation for your restore process. Run timed fire drills with the engineering team to ensure everyone knows their role in a recovery scenario.

4. Data Validation and Quality Assurance

A migration without rigorous data validation is a leap of faith that often ends in failure. Data validation is not a single, post-migration checkbox; it is a continuous quality assurance process woven into every stage of the project. This practice ensures data integrity, completeness, and accuracy by systematically verifying that the data in the target database is an exact, functional replica of the source data.

The goal is to prevent data loss or corruption, which can have catastrophic consequences for business operations, analytics, and customer trust. Implementing a multi-layered validation strategy is one of the most critical database migration best practices, transforming a high-risk procedure into a controlled, predictable event. For example, financial institutions like Thomson Reuters implement real-time validation for time-sensitive data streams, ensuring zero corruption during migration.

How It Works: A Technical Approach

The process involves a three-phase validation cycle: pre-migration, in-flight, and post-migration.

- Pre-Migration Baseline: Before any data is moved, establish a clear baseline of the source data. This involves running count queries on every table (

SELECT COUNT(*) FROM table_name), calculating checksums on key columns, and profiling data distributions. For example, you might record the sum of a transactionalamountcolumn or the maximum value of anidfield. These metrics serve as your immutable source of truth. - In-Flight and Post-Migration Reconciliation: After the migration, run the exact same set of queries and checksum calculations on the target database. The results must match the pre-migration baseline perfectly. For large datasets, this can be automated with scripts that compare row counts, checksums, and other aggregates between the source and target. Tools like

datacompyin Python are excellent for performing detailed, column-by-column comparisons between two DataFrames loaded from the respective databases.

Key Insight: Effective validation is about more than just matching row counts. It requires a deep, semantic understanding of the data. You must validate business logic by running predefined queries that test critical application functions, ensuring relationships, constraints, and business rules remain intact in the new environment.

Actionable Tips for Implementation

- Establish Data Quality Baselines: Before migrating, create a definitive report of key data metrics from the source. This includes row counts, null counts, min/max values for numeric columns, and checksums.

- Automate Validation with Scripts: Write and reuse scripts (Python, shell, or SQL) to automate the comparison of pre- and post-migration baselines. This ensures consistency and significantly reduces manual effort and human error.

- Use Statistical Sampling for Large Datasets: For multi-terabyte databases where a full data comparison is impractical, use statistical sampling. Validate a representative subset of data (e.g., 1-5%) in-depth to infer the quality of the entire dataset.

- Involve Business Users in Defining Criteria: Work with stakeholders to define what constitutes "valid" data from a business perspective. They can provide test cases and queries that reflect real-world usage patterns.

5. Performance Testing and Optimization

A successful migration isn't just about moving data without loss; it's about ensuring the new system performs better or at least as well as the old one under real-world stress. Performance testing and optimization are non-negotiable database migration best practices. This phase validates that the target database can handle production workloads efficiently and meets all service-level objectives (SLOs) for latency and throughput.

Failing to conduct rigorous performance testing is a common pitfall that leads to post-launch slowdowns, application timeouts, and a poor user experience. This step involves more than simple query benchmarks; it requires simulating realistic user traffic and system load to uncover bottlenecks in the new environment, from inefficient queries and missing indexes to inadequate hardware provisioning.

How It Works: A Technical Approach

The process centers on creating a controlled, production-like environment to measure and tune the target database's performance before the final cutover.

- Load Generation: Use sophisticated load testing tools like

JMeter,Gatling, ork6to simulate concurrent user sessions and transactional volume. The goal is to replicate peak traffic patterns observed in your production analytics. For instance, if your application experiences a surge in writes during business hours, your test scripts must mimic that exact behavior against the migrated database. - Query Analysis and Tuning: With the load test running, use the target database's native tools to analyze performance. In PostgreSQL, this means using

EXPLAIN ANALYZEto inspect query execution plans and identify slow operations like full table scans. In SQL Server, you would use Query Store to find regressed queries. This analysis directly informs what needs optimization, such as rewriting a query to use a more efficient join or creating a covering index to satisfy a query directly from the index.

Key Insight: Performance testing is not a one-off check but a continuous feedback loop. Each identified bottleneck, whether a slow query or a configuration issue, should be addressed, and the test should be re-run. This iterative cycle, as demonstrated by engineering teams at Twitter and LinkedIn, is crucial for building confidence that the new system is ready for production prime time.

Actionable Tips for Implementation

- Test with Production-Scale Data: Use a sanitized but full-size clone of your production data. Testing with a small data subset will not reveal how indexes and queries perform at scale, providing a false sense of security.

- Establish Performance Baselines: Before the migration, benchmark key queries and transactions on your source system. This baseline provides objective, measurable criteria to compare against the target system's performance.

- Monitor System-Level Metrics: Track CPU utilization, memory usage, I/O operations per second (IOPS), and network throughput on the database server during tests. A bottleneck may not be in the database itself but in the underlying infrastructure. This holistic view is a core part of effective application performance optimization.

6. Comprehensive Testing Strategy

A successful database migration is not measured by the data moved but by the uninterrupted functionality of the applications that depend on it. This is why a comprehensive, multi-layered testing strategy is a non-negotiable part of any database migration best practices. Testing cannot be an afterthought; it must be an integrated, continuous process that validates data integrity, application performance, and business logic from development through to post-launch.

The objective is to de-risk the migration by systematically verifying every component that interacts with the database. This approach goes beyond simple data validation. It involves simulating real-world workloads, testing edge cases, and ensuring that every application function, from user login to complex report generation, performs as expected on the new database system.

How It Works: A Technical Approach

A robust testing strategy is built on several layers, each serving a distinct purpose:

- Data Integrity and Validation Testing: This is the foundational layer. The goal is to verify that the data in the target database is a complete and accurate representation of the source. Use automated scripts to perform row counts, checksums on critical columns, and queries that compare aggregates (SUM, AVG, MIN, MAX) between the source and target. Tools like

dbt (data build tool)can be used to write and run data validation tests as part of the migration workflow. - Application and Integration Testing: Once data integrity is confirmed, you must test the application stack. This involves running existing unit and integration test suites against the new database. The key is to catch functional regressions, such as a stored procedure that behaves differently or a query that is no longer performant. For example, Amazon's database migration teams use extensive A/B testing, directing a small percentage of live traffic to the new database to compare performance and error rates in real time.

Key Insight: Comprehensive testing transforms the migration from a high-stakes "big bang" event into a controlled, verifiable process. It provides empirical evidence that the new system is ready for production, preventing costly post-migration firefighting and ensuring business continuity.

Actionable Tips for Implementation

- Develop Test Cases from Business Scenarios: Don't just test technical functions; test business processes. Map out critical user journeys (e.g., creating an order, updating a user profile) and build test cases that validate them end-to-end.

- Automate Everything Possible: Manually testing thousands of queries and data points is impractical and error-prone. Integrate your migration testing into an automated workflow, which is a core tenet of modern CI/CD. Learn more about building robust CI/CD pipelines to see how automation can be applied here.

- Involve End-Users for UAT: User Acceptance Testing (UAT) is the final gate before go-live. Involve power users and key business stakeholders to test the new system with real-world scenarios that automated tests might miss. Microsoft's own internal database migrations rely heavily on comprehensive UAT to sign off on readiness.

7. Security and Compliance Considerations

A database migration isn't just a technical data-moving exercise; it's a security-sensitive operation that must uphold stringent data protection and regulatory standards. Neglecting this aspect can lead to severe data breaches, hefty fines, and reputational damage. This practice involves embedding security and compliance controls into every stage of the migration, from initial planning to post-migration validation, ensuring data integrity, confidentiality, and availability are never compromised.

This means treating security not as an afterthought but as a core requirement of the migration project. For organizations in regulated industries like finance (SOX, PCI DSS) or healthcare (HIPAA), maintaining compliance is non-negotiable. The goal is to ensure the entire process, including the tools used and the data's state during transit and at rest, adheres to these predefined legal and security frameworks.

How It Works: A Technical Approach

The process integrates security controls directly into the migration workflow. This involves a multi-layered strategy that addresses potential vulnerabilities at each step.

- Data Encryption: All data must be encrypted both in transit and at rest. For data in transit, this means using protocols like TLS 1.2+ for all connections between the source, migration tools, and the target database. For data at rest, implement transparent data encryption (TDE) on the target database (e.g., SQL Server TDE, Oracle TDE) or leverage native cloud encryption services like AWS KMS or Azure Key Vault.

- Identity and Access Management (IAM): A zero-trust model is essential. Create specific, temporary, and least-privilege IAM roles or database users exclusively for the migration process. These accounts should have just enough permissions to read from the source and write to the target, and nothing more. For example, in AWS, a dedicated IAM role for a DMS task should have precise

dms:*,s3:*, andec2:*permissions, but no administrative access. These credentials must be revoked immediately upon project completion.

Key Insight: Viewing a database migration through a security lens transforms it from a risky necessity into an opportunity. It allows you to shed legacy security vulnerabilities, implement modern, robust controls like granular IAM policies and comprehensive encryption, and establish a stronger compliance posture in the new environment than you had in the old one.

Actionable Tips for Implementation

- Conduct Pre- and Post-Migration Security Audits: Before starting, perform a security assessment of the source to identify existing vulnerabilities. After the migration, run a comprehensive audit and penetration test on the new target environment to validate that security controls are effective and no new weaknesses were introduced.

- Use Certified Tools and Platforms: When migrating to the cloud, leverage providers and tools that are certified for your specific compliance needs (e.g., AWS for HIPAA, Azure for FedRAMP). This simplifies the audit process significantly.

- Maintain Immutable Audit Logs: Configure detailed logging for all migration activities. Ensure these logs capture who accessed what data, when, and from where. Store these logs in an immutable storage location, like an S3 bucket with Object Lock, to provide a clear and tamper-proof audit trail for compliance verification. You can learn more about how security is integrated into modern workflows by exploring DevOps security best practices on opsmoon.com.

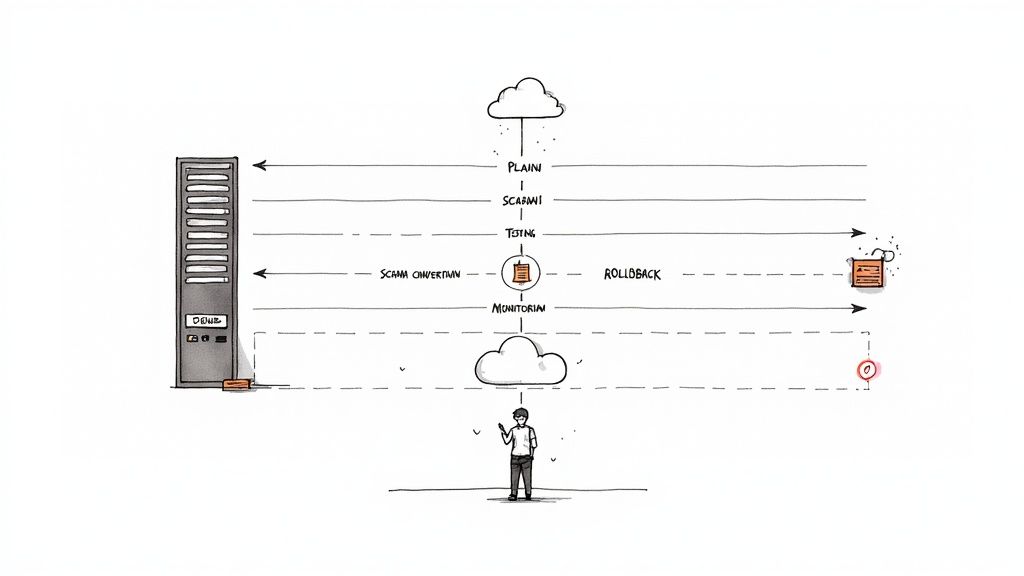

8. Monitoring and Rollback Planning

Even the most meticulously planned migration can encounter unforeseen issues. This is where a robust monitoring and rollback strategy transitions from a safety net to a mission-critical component. Effective planning isn't just about watching for errors; it's about defining failure, instrumenting your systems to detect it instantly, and having a practiced, automated plan to revert to a stable state with minimal impact. This practice is a cornerstone of modern Site Reliability Engineering (SRE) and one of the most vital database migration best practices for ensuring business continuity.

The goal is to move beyond reactive firefighting. By establishing comprehensive monitoring and detailed rollback procedures, you can transform a potential catastrophe into a controlled, low-stress event. This involves setting up real-time alerting, performance dashboards, and automated rollback triggers to minimize both downtime and data loss.

How It Works: A Technical Approach

This practice combines proactive monitoring with a pre-defined incident response plan tailored for the migration.

- Comprehensive Monitoring Setup: Before the cutover, deploy monitoring agents and configure dashboards that track both system-level and application-level metrics. Use tools like Prometheus for time-series metrics, Grafana for visualization, and the ELK Stack (Elasticsearch, Logstash, Kibana) for log aggregation. Key metrics to monitor include query latency (p95, p99), error rates (HTTP 5xx, database connection errors), CPU/memory utilization on the new database, and replication lag if using a phased approach.

- Defining Rollback Triggers: A rollback plan is useless without clear, automated triggers. These are not subjective judgments made during a crisis but pre-agreed-upon thresholds. For example, a trigger could be defined as: "If the p99 query latency for the

ordersservice exceeds 500ms for more than three consecutive minutes, or if the API error rate surpasses 2%, initiate automated rollback." This removes human delay and emotion from the decision-making process.

Key Insight: A rollback plan is not a sign of failure but a mark of professional engineering. The ability to quickly and safely revert a failed deployment protects revenue, user trust, and team morale. Companies like Netflix and Uber have pioneered these techniques, using canary analysis and feature flags to expose the new database to a small percentage of traffic first, closely monitoring its performance before proceeding.

Actionable Tips for Implementation

- Practice the Rollback: A rollback plan that has never been tested is a recipe for disaster. Conduct drills in a staging environment to validate your scripts, automation, and communication protocols. The team should be able to execute it flawlessly under pressure.

- Monitor Business Metrics: Technical metrics are crucial, but they don't tell the whole story. Monitor key business indicators like "user sign-ups per hour" or "completed checkouts." A drop in these metrics can be the earliest sign that something is wrong, even if system metrics appear normal.

- Establish Clear Communication Protocols: When an issue is detected, who gets alerted? Who has the authority to approve a rollback? Document this in a runbook and integrate it with your alerting tools like PagerDuty or Opsgenie to ensure the right people are notified immediately.

Best Practices Comparison Matrix for Database Migration

| Strategy | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |

|---|---|---|---|---|---|

| Comprehensive Data Assessment and Planning | High – requires detailed analysis and expertise | High – specialized tools and team involvement | Clear project scope, reduced surprises, accurate estimates | Large, complex migrations needing risk reduction | Early issue identification, detailed planning |

| Incremental Migration Strategy | Medium to High – managing phased migration | Medium to High – maintaining dual systems | Minimized downtime, continuous validation, lower risks | Critical systems needing minimal disruption | Early issue detection, flexible rollback |

| Robust Backup and Recovery Strategy | Medium – backup setup and testing complexity | Medium to High – storage & infrastructure | Safety net for failures, compliance, quick recovery | Migrations with high data loss risk | Data safety, compliance adherence |

| Data Validation and Quality Assurance | Medium – requires automation and validation rules | Medium – additional processing power | Ensured data integrity, compliance, confidence in results | Migrations where data accuracy is mission-critical | Early detection of quality problems |

| Performance Testing and Optimization | High – requires realistic test environments | High – testing infrastructure and tools | Bottleneck identification, scalability validation | Systems with strict performance SLAs | Proactive optimization, reduced downtime |

| Comprehensive Testing Strategy | High – multi-layered, cross-team coordination | High – testing tools and resource allocation | Reduced defects, validated functionality | Complex systems with critical business processes | Early detection, reliability assurance |

| Security and Compliance Considerations | Medium to High – integrating security controls | Medium to High – security tools and audits | Regulatory compliance, data protection | Regulated industries (healthcare, finance, etc.) | Compliance assurance, risk mitigation |

| Monitoring and Rollback Planning | Medium – monitoring tools and rollback setup | Medium – monitoring infrastructure | Rapid issue detection, minimized downtime | Migrations requiring high availability | Fast response, minimized business impact |

From Planning to Production: Mastering Your Next Migration

Successfully navigating a database migration is a hallmark of a mature, high-performing DevOps team. It’s far more than a simple data transfer; it is a meticulously orchestrated engineering initiative that tests your team's planning, execution, and risk management capabilities. The journey from your legacy system to a new, optimized environment is paved with the technical database migration best practices we've explored. Adhering to these principles transforms what could be a high-stakes gamble into a predictable, controlled, and successful project.

The core theme connecting these practices is proactive control. Instead of reacting to problems, you anticipate them. A deep data assessment prevents scope creep, while an incremental strategy breaks down an overwhelming task into manageable, verifiable stages. This approach, combined with robust backup and recovery plans, creates a safety net that allows your team to operate with confidence rather than fear. You are not just moving data; you are engineering resilience directly into the migration process itself.

Key Takeaways for Your Team

To truly master your next migration, internalize these critical takeaways:

- Planning is Paramount: The most successful migrations are won long before the first byte is transferred. Your initial data assessment, schema mapping, and strategic choice between big bang and phased approaches will dictate the project's trajectory.

- Trust, But Verify (Automate Verification): Never assume data integrity. Implement automated data validation scripts that compare checksums, row counts, and sample data sets between the source and target databases. This continuous verification is your most reliable quality gate.

- Performance is a Feature: A migration that degrades performance is a failure, even if all the data arrives intact. Integrate performance testing early, simulating realistic production loads to identify and resolve bottlenecks in the new environment before your users do.

- Design for Failure: A comprehensive rollback plan is not an admission of doubt; it is a sign of professional diligence. Your team should be able to trigger a rollback with the same precision and confidence as the cutover itself.

Your Actionable Next Steps

Translate this knowledge into action. Begin by auditing your team's current migration playbook against the practices outlined in this article. Identify the gaps, whether in automated testing, security scanning, or post-migration monitoring. Start small by introducing one or two improved practices into your next minor database update, building muscle memory for larger, more critical projects.

Ultimately, embracing these database migration best practices is about more than just avoiding downtime. It's about delivering tangible business value. A well-executed migration unlocks improved scalability, enhanced security, lower operational costs, and the ability to leverage modern data technologies. It empowers your developers, delights your users, and positions your organization to innovate faster and more effectively. This strategic approach ensures the project concludes not with a sigh of relief, but with a clear, measurable improvement to your technological foundation.

Executing a flawless migration requires deep, specialized expertise that may not exist in-house. OpsMoon connects you with a global network of elite, vetted DevOps and SRE freelancers who have mastered complex database migrations. Find the precise skills you need to de-risk your project and ensure a seamless transition by exploring the talent at OpsMoon.