8 Container Security Best Practices for 2025

Discover 8 technical and actionable container security best practices to harden your environment. Learn to implement image scanning, runtime security, and more.

Containers have fundamentally reshaped how we build, ship, and run applications. This shift to ephemeral, distributed environments brings incredible velocity and scalability, but it also introduces a new, complex attack surface that traditional security models struggle to address. A single vulnerable library in a base image, an overly permissive runtime configuration, or a compromised CI/CD pipeline can create cascading failures across your entire infrastructure. Protecting these workloads requires a proactive, multi-layered strategy that integrates security into every stage of the container lifecycle.

This guide moves beyond high-level theory to provide a technical, actionable walkthrough of critical container security best practices. We will dissect eight essential strategies, from image hardening and vulnerability scanning to runtime defense and secure orchestration. For each practice, you will find specific implementation details, code snippets, recommended tooling, and practical examples that you can apply directly to your own environments.

The goal is to provide a comprehensive playbook for engineers, architects, and security professionals tasked with safeguarding containerized applications. You will learn how to:

- Harden your container images to minimize the attack surface from the start.

- Integrate automated scanning into your CI/CD pipeline to catch vulnerabilities before they reach production.

- Enforce the principle of least privilege for containers and orchestrators like Kubernetes.

- Implement robust runtime security monitoring to detect and respond to threats in real-time.

By mastering these techniques, you can build a resilient defense-in-depth posture that protects your digital supply chain without sacrificing development speed. Let's dive into the technical specifics of securing your containerized ecosystem.

1. Scan Container Images for Vulnerabilities

Container image scanning is a foundational, non-negotiable practice for securing modern software delivery pipelines. This process automatically analyzes every layer of a container image, from the base operating system packages to application libraries and their dependencies, for known security flaws. This proactive approach identifies Common Vulnerabilities and Exposures (CVEs), malware, and critical misconfigurations before an image is ever deployed, effectively shifting security left in the development lifecycle.

Why It's a Top Priority

Failing to scan images is like leaving your front door unlocked. A single vulnerable library can provide an entry point for an attacker to compromise your application, access sensitive data, or move laterally across your network. By integrating scanning directly into your CI/CD pipeline, you create an automated security gate that prevents vulnerable code from ever reaching production. For example, a command like `trivy image --exit-code 1 --severity CRITICAL,HIGH your-image:tag` returns a non-zero exit code when critical or high CVEs are found, which fails the build pipeline and automatically blocks a risky deployment.

Actionable Implementation Strategy

To effectively implement this container security best practice, integrate scanning at multiple strategic points in your workflow.

- During the Build: Scan the image as a step in your CI/CD pipeline. Here is a sample GitLab CI job using Trivy:

  ```yaml
  scan_image:
    stage: test
    image:
      name: aquasec/trivy:latest
      # The Trivy image sets `trivy` as its entrypoint; clear it so GitLab can run the script.
      entrypoint: [""]
    script:
      # A non-zero exit code fails the job when HIGH or CRITICAL findings exist.
      - trivy image --exit-code 1 --severity HIGH,CRITICAL $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
  ```

- Before Registry Push: Use a pre-push hook or a dedicated CI stage to scan the final image before it's stored in a registry like Docker Hub or Amazon ECR. This prevents vulnerable artifacts from polluting your trusted registry.

- Before Deployment: Use a Kubernetes admission controller like Kyverno or OPA Gatekeeper to scan images via an API call to your scanner just before they are scheduled to run. This acts as a final line of defense against images that may have been pushed to the registry before a new CVE was discovered.

Set clear, automated policies for vulnerability management. For instance, your CI pipeline should be configured to fail a build if any "Critical" or "High" severity CVEs are detected, while logging "Medium" and "Low" severity issues in a system like Jira for scheduled remediation. This automated enforcement removes manual bottlenecks and ensures consistent security standards.
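The two-tier policy described above could be sketched as a pair of GitLab CI jobs: one that blocks the pipeline on serious findings and one that only records the rest. This is a minimal sketch, not a definitive setup. The job names and the `trivy-report.json` file name are illustrative assumptions, and forwarding the report to a tracker such as Jira is left out because it depends on your tooling.

```yaml
# Hypothetical job names; adjust stages to match your pipeline.
scan_blocking:
  stage: test
  image:
    name: aquasec/trivy:latest
    entrypoint: [""]
  script:
    # Fails the job (and the pipeline) on HIGH or CRITICAL findings.
    - trivy image --exit-code 1 --severity HIGH,CRITICAL "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"

scan_report:
  stage: test
  image:
    name: aquasec/trivy:latest
    entrypoint: [""]
  script:
    # Exit code 0 keeps the job green; MEDIUM and LOW findings go to a JSON report.
    - trivy image --exit-code 0 --severity MEDIUM,LOW --format json --output trivy-report.json "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"
  artifacts:
    paths:
      # Assumed file name; a later job could turn this report into tickets.
      - trivy-report.json
```

Splitting the scan into two jobs keeps the blocking rule simple and makes the lower-severity report available as an artifact for scheduled remediation.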

2. Use Minimal Base Images

Using minimal base images is a core tenet of effective container security best practices. This strategy involves building containers from the smallest possible foundation, stripped of all non-essential components. Instead of a full-featured OS like ubuntu:latest, you start with an image containing only the bare minimum libraries and binaries required to run your application. This drastically shrinks the attack surface by eliminating unnecessary packages, shells (bash), and utilities (curl, wget) that could harbor vulnerabilities or be co-opted by an attacker post-compromise.

Why It's a Top Priority

Every package, library, and tool included in a container image is a potential security liability. A larger base image not only increases scan times and storage costs but also broadens the potential for exploitation. By adopting minimal images like distroless, scratch, or alpine, you inherently reduce risk. The gcr.io/distroless/static-debian11 image, for example, is only a few megabytes and contains no package manager or shell, making it extremely difficult for an attacker to explore or install tools after a compromise. For a more in-depth look at this practice, you can explore additional Docker security best practices.

Actionable Implementation Strategy

Adopting minimal base images requires a deliberate, structured approach integrated directly into your development workflow.

- Select the Right Base: Start with `gcr.io/distroless/static` for statically compiled languages like Go. For applications needing a C library (glibc), use `gcr.io/distroless/base-debian11`. For Python or Node.js, `alpine` is a popular choice, but be mindful of potential compatibility issues with `musl libc` versus the more common `glibc`.

- Leverage Multi-Stage Builds: Use multi-stage builds in your `Dockerfile` to separate the build environment from the final runtime environment. This ensures that compilers (`gcc`), build tools (`maven`), and development dependencies (`-dev` packages) are never included in the production image.

  ```dockerfile
  # Build Stage
  FROM golang:1.19-alpine AS builder
  WORKDIR /app
  COPY . .
  # Disable cgo so the binary is statically linked; distroless/static ships no libc.
  RUN CGO_ENABLED=0 go build -o main .

  # Final Stage
  FROM gcr.io/distroless/static-debian11
  COPY --from=builder /app/main /
  CMD ["/main"]
  ```

- Strictly Manage Dependencies: Explicitly define every dependency and use a `.dockerignore` file to prevent extraneous files, like version-control metadata (`.git/`), local configurations (`.env`), or documentation (`README.md`), from being copied into the image. This enforces a clean, predictable, and minimal final product.

3. Implement Runtime Security Monitoring

While static image scanning secures your assets before they run, runtime security monitoring is the essential practice of observing container behavior during execution. This dynamic approach acts as a vigilant watchdog for your live environments, detecting and responding to anomalous activities in real-time. It moves beyond static analysis to monitor actual container operations, including system calls (syscalls), network connections, file access, and process execution, providing a critical layer of defense against zero-day exploits and threats that only manifest post-deployment.

Why It's a Top Priority

Neglecting runtime security is like installing a vault door but leaving the windows open. Attackers can exploit zero-day vulnerabilities or leverage misconfigurations that static scans might miss. A runtime security system can detect these breaches as they happen. For example, a tool like Falco can detect if a shell process (bash, sh) is spawned inside a container that shouldn't have one, or if a container makes an outbound network connection to a known malicious IP address. This real-time visibility is a cornerstone of a comprehensive container security strategy, providing the means to stop an active attack before it escalates.

Actionable Implementation Strategy

To implement effective runtime security monitoring, focus on establishing behavioral baselines and integrating alerts into your response workflows. Tools like Falco, Aqua Security, and StackRox are leaders in this space. Runtime monitoring is one component of a broader continuous monitoring strategy.

- Establish Behavioral Baselines: Deploy your monitoring tool in a "learning" or "detection" mode first. This allows the system to build a profile of normal application behavior (e.g., "this process only ever reads from `/data` and connects to the database on port 5432"). This reduces false positives when you switch to an enforcement mode.

- Deploy a Tool Like Falco: Falco is a CNCF-graduated project that uses eBPF or kernel modules to tap into syscalls. You can define rules in YAML to detect specific behaviors. For example, a simple Falco rule to detect writing to a sensitive directory:

  ```yaml
  - rule: Write below binary dir
    desc: an attempt to write to any file below a set of binary directories
    condition: >
      (open_write) and (fd.directory in (/bin, /sbin, /usr/bin, /usr/sbin))
    output: "File opened for writing below binary dir (user=%user.name command=%proc.cmdline file=%fd.name)"
    priority: ERROR
  ```

- Integrate and Automate Responses: Connect runtime alerts to your incident response systems like a SIEM (e.g., Splunk) or a SOAR platform. Create automated runbooks that can take immediate action, such as using Kubernetes to quarantine a pod by applying a network policy that denies all traffic, notifying the on-call team via PagerDuty, or triggering a forensic snapshot of the container's filesystem.
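The quarantine step mentioned above could be sketched as a NetworkPolicy that isolates any pod carrying a specific label. This is one possible approach under stated assumptions: the `critical-app` namespace and the `quarantine: "true"` label are hypothetical names chosen for illustration, and the cluster's CNI plugin must enforce NetworkPolicies.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: quarantine-deny-all   # hypothetical name
  namespace: critical-app     # assumed namespace
spec:
  # Selects only pods that a responder (or automation) has labeled for quarantine.
  podSelector:
    matchLabels:
      quarantine: "true"
  # Listing both types with no ingress or egress rules allows nothing in or out.
  policyTypes:
    - Ingress
    - Egress
```

An automated runbook would then label the suspect pod, for example with `kubectl label pod <pod> quarantine=true`. Because NetworkPolicies are additive, the pod must also stop matching any policy that allows traffic, typically by removing the application label those allow rules select on.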

Prioritize solutions that leverage technologies like eBPF for deep kernel-level visibility with minimal performance overhead.

4. Apply Principle of Least Privilege

Applying the principle of least privilege is a fundamental pillar of container security, dictating that a container should run with only the minimum permissions, capabilities, and access rights required to perform its function. This involves practices like running processes as a non-root user, dropping unnecessary Linux capabilities, and mounting the root filesystem as read-only. This proactive security posture drastically limits the "blast radius" of a potential compromise, ensuring that even if an attacker exploits a vulnerability, their ability to inflict further damage is severely restricted.

Why It's a Top Priority

Running a container with default, excessive privileges is akin to giving an intern the keys to the entire company. By default, docker run grants a container significant capabilities. An exploited application running as root could potentially escape the container, access the host's filesystem, or attack other containers on the same node. Enforcing least privilege mitigates this by design. For example, if a container's filesystem is read-only, an attacker who gains execution cannot write malware or modify configuration files.

Actionable Implementation Strategy

Integrating the principle of least privilege requires a multi-faceted approach, embedding security controls directly into your image definitions and orchestration configurations.

- Enforce Non-Root Execution: Always specify a non-root user in your `Dockerfile` using the `USER` instruction (e.g., `USER 1001`). In Kubernetes, enforce this for every container in a pod by setting `runAsNonRoot: true` in the pod's `securityContext`:

  ```yaml
  apiVersion: v1
  kind: Pod
  metadata:
    name: my-secure-pod
  spec:
    securityContext:
      runAsUser: 1001
      runAsGroup: 3000
      runAsNonRoot: true
    containers:
      - name: my-app
        image: my-app:latest
  ```

- Drop Linux Capabilities: By default, containers are granted a range of Linux capabilities. Explicitly drop all capabilities (`--cap-drop=ALL` in Docker, or `drop: ["ALL"]` in Kubernetes `securityContext`) and then add back only those that are absolutely necessary for the application to function (e.g., `add: ["NET_BIND_SERVICE"]` to bind to privileged ports below 1024).

- Implement Read-Only Filesystems: Configure your container's root filesystem to be read-only. In Kubernetes, this is achieved by setting `readOnlyRootFilesystem: true` in the container's `securityContext`. Any directories that require write access, such as for logs or temporary files, can be mounted as separate writable volumes (`emptyDir` or persistent volumes).
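The capability and read-only filesystem settings above can be combined in a single container `securityContext`. The manifest below is a minimal sketch: the pod name, the `tmp` volume, and the `/tmp` mount path are illustrative assumptions, and `NET_BIND_SERVICE` should be added only if the application really binds to a port below 1024.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-hardened-pod   # hypothetical name
spec:
  containers:
    - name: my-app
      image: my-app:latest
      securityContext:
        # The root filesystem becomes read-only for every process in the container.
        readOnlyRootFilesystem: true
        capabilities:
          # Drop everything, then add back only what the application needs.
          drop: ["ALL"]
          add: ["NET_BIND_SERVICE"]
      volumeMounts:
        # Writable scratch space, since the root filesystem is read-only.
        - name: tmp
          mountPath: /tmp
  volumes:
    - name: tmp
      emptyDir: {}
```

Applications often fail at startup when they cannot write to a path they expect, so it helps to test this configuration in a staging environment and add one writable volume per required path.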

Adopting these container security best practices creates a hardened runtime environment. This systematic reduction of the attack surface is a critical strategy championed by NIST guidance and the CIS Benchmarks.
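To apply these restrictions across a whole namespace rather than pod by pod, Kubernetes offers built-in Pod Security Admission, which is driven by namespace labels. The following is a minimal sketch, assuming a namespace named `critical-app` (a hypothetical name) and a cluster version where Pod Security Admission is available.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: critical-app   # assumed namespace
  labels:
    # Reject pods that violate the "restricted" Pod Security Standard.
    pod-security.kubernetes.io/enforce: restricted
    # Also surface warnings to users who submit non-compliant pods.
    pod-security.kubernetes.io/warn: restricted
```

The restricted profile rejects pods that, among other things, are allowed to run as root or that do not drop all capabilities. Starting with only the `warn` label is a common way to find non-compliant workloads before switching to enforcement.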

5. Sign and Verify Container Images

Image signing and verification is a critical cryptographic practice that establishes a verifiable chain of trust for your containerized applications. This process involves using digital signatures to guarantee that an image is authentic, comes from an authorized source, and has not been tampered with since it was built. By implementing content trust, you create a security control that prevents the deployment of malicious or unauthorized images, safeguarding your environment from sophisticated supply chain attacks.

Why It's a Top Priority

Failing to verify image integrity is akin to accepting a package without checking the sender or looking for signs of tampering. An attacker with access to your registry could substitute a legitimate image with a compromised version containing malware or backdoors. Image signing mitigates this risk by ensuring only images signed by trusted keys can be deployed. This is a core component of the SLSA (Supply-chain Levels for Software Artifacts) framework and is essential for building a secure software supply chain.

Actionable Implementation Strategy

Integrating image signing and verification requires automating the process within your CI/CD pipeline and enforcing it at deployment time.

- Automate Signing in CI/CD: Integrate a tool like Cosign (part of the Sigstore project) into your continuous integration pipeline. A signing step in your pipeline might look like this:

  ```shell
  # Set up Cosign with your private key (stored as a CI secret)
  export COSIGN_PASSWORD="your-key-password"
  cosign sign --key "cosign.key" your-registry/your-image:tag
  ```

  This command generates a signature and pushes it as an OCI artifact to the same repository as your image.

- Enforce Verification at Deployment: Use a Kubernetes admission controller, such as Kyverno or OPA Gatekeeper, to intercept all deployment requests. Configure a policy that requires a valid signature from a trusted public key for any image before it can be scheduled on a node. A simple Kyverno cluster policy could look like:

  ```yaml
  apiVersion: kyverno.io/v1
  kind: ClusterPolicy
  metadata:
    name: check-image-signatures
  spec:
    validationFailureAction: Enforce
    rules:
      - name: verify-image
        match:
          resources:
            kinds:
              - Pod
        verifyImages:
          - image: "your-registry/your-image:*"
            key: |-
              -----BEGIN PUBLIC KEY-----
              ... your public key data ...
              -----END PUBLIC KEY-----
  ```

- Manage Signing Keys Securely: Treat your signing keys like any other high-value secret. Store private keys in a secure vault like HashiCorp Vault or a cloud provider's key management service (KMS). Cosign integrates with these services, allowing you to sign images without ever exposing the private key to the CI environment.

6. Secure Container Registry Management

A container registry is the central nervous system of a containerized workflow, acting as the single source of truth for all container images. Securing this registry is a critical container security best practice, as a compromised registry can become a super-spreader of malicious or vulnerable images across your entire organization. This practice involves a multi-layered defense strategy, including robust access control, continuous scanning, lifecycle management, and infrastructure hardening to protect the images stored within it.

Why It's a Top Priority

Leaving a container registry unsecured is equivalent to giving attackers the keys to your software supply chain. They could inject malicious code into your base images, replace production application images with compromised versions, or exfiltrate proprietary code. A secure registry ensures image integrity and authenticity from build to deployment. For example, enabling tag immutability on an Amazon ECR repository prevents tags from being overwritten, ensuring that `my-app:prod` always points to the same verified image digest.

Actionable Implementation Strategy

To properly secure your container registry, you must implement controls across access, scanning, and lifecycle management.

- Implement Granular Access Control: Use Role-Based Access Control (RBAC) to enforce the principle of least privilege. Create distinct roles for read, write, and administrative actions. Use namespaces or projects (features in registries like Harbor or Artifactory) to segregate images by team or environment (e.g., `dev-team-repo`, `prod-repo`). For cloud registries like ECR, use IAM policies to grant specific CI/CD roles `ecr:GetAuthorizationToken` and `ecr:BatchCheckLayerAvailability` permissions, but restrict `ecr:PutImage` to only the CI pipeline's service account.

- Automate Security and Lifecycle Policies: Configure your registry to automatically scan any newly pushed image for vulnerabilities. For ECR, enable "Scan on push." Furthermore, implement retention policies to automatically prune old, untagged, or unused images. This can be done with lifecycle policies. For example, an ECR lifecycle policy could automatically expire any image tagged with `dev-*` after 14 days, reducing clutter and minimizing the attack surface from stale images.

- Harden the Registry Infrastructure: Always enforce TLS for all registry communications to encrypt data in transit. Enable detailed audit logging (e.g., AWS CloudTrail for ECR) and integrate these logs with your SIEM system for threat detection and forensic analysis. When managing credentials for registry access, it's crucial to follow robust guidelines; you can learn more about secrets management best practices to strengthen your approach.

7. Implement Network Segmentation and Policies

Network segmentation is a foundational container security best practice that enforces strict control over traffic flow between containers, pods, and external networks. This strategy creates isolated security boundaries, effectively applying zero-trust principles within your cluster. By defining and enforcing precise rules for network communication using Kubernetes NetworkPolicies, you can drastically limit an attacker's ability to move laterally across your environment if a single container is compromised.

Why It's a Top Priority

An unsegmented network is a flat, open field for attackers. By default, all pods in a Kubernetes cluster can communicate with each other. If an attacker compromises a public-facing web server pod, they can freely probe and attack internal services like databases or authentication APIs. Implementing network policies transforms this open field into a series of locked, isolated rooms, where communication is only permitted through explicitly approved doorways. This is a critical control for compliance frameworks like PCI-DSS.

Actionable Implementation Strategy

Effective network segmentation requires a deliberate, policy-as-code approach integrated into your GitOps workflow. This ensures your network rules are versioned, audited, and deployed consistently alongside your applications.

- Start with Default-Deny: Implement a baseline "default-deny" policy for critical namespaces. This blocks all ingress traffic by default, forcing developers to explicitly define and justify every required communication path.

  ```yaml
  apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    name: default-deny-ingress
    namespace: critical-app
  spec:
    podSelector: {}
    policyTypes:
      - Ingress
  ```

- Use Labels for Policy Selection: Define clear and consistent labels for your pods (e.g., `app: frontend`, `tier: database`). Use these labels in your NetworkPolicy selectors to create scalable, readable rules. Here is an example allowing a `frontend` pod to connect to a `backend` pod on a specific port:

  ```yaml
  apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    name: backend-policy
    namespace: critical-app
  spec:
    podSelector:
      matchLabels:
        app: backend
    policyTypes:
      - Ingress
    ingress:
      - from:
          - podSelector:
              matchLabels:
                app: frontend
        ports:
          - protocol: TCP
            port: 8080
  ```

- Implement Egress Controls: In addition to controlling inbound (ingress) traffic, restrict outbound (egress) traffic to prevent compromised containers from exfiltrating data or connecting to command-and-control servers.
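An egress rule for the same workload could be sketched as follows. This example is illustrative rather than prescriptive: it assumes the `backend` pods only need to reach pods labeled `tier: database` on port 5432 and that cluster DNS runs in the `kube-system` namespace, both of which should be checked against your own cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-egress   # hypothetical name
  namespace: critical-app
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Egress
  egress:
    # Allow connections to database pods in the same namespace only.
    - to:
        - podSelector:
            matchLabels:
              tier: database
      ports:
        - protocol: TCP
          port: 5432   # assumed database port
    # Allow DNS lookups; without this rule, service names stop resolving.
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system   # assumed DNS location
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Any destination not listed, including arbitrary internet addresses, is blocked for the selected pods once this policy is in place, which is what limits data exfiltration and command-and-control traffic.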

When designing your container network, it's crucial to understand how to control inbound and outbound traffic. For a primer on how to manage network access and secure your perimeter, you might explore guides on configuring network devices like routers and firewalls for secure access. Ensure your CNI plugin (e.g., Calico, Cilium, Weave Net) supports and enforces NetworkPolicies.

8. Regularly Update and Patch Container Components

Regularly updating and patching is the relentless process of maintaining current versions of every component within your container ecosystem. This includes base images (FROM ubuntu:22.04), application dependencies (package.json), language runtimes (python:3.10), and orchestration platforms like Kubernetes itself (the control plane and kubelets). This practice ensures that newly discovered vulnerabilities are promptly remediated. Since the threat landscape is constantly evolving, a systematic, automated approach to updates is a non-negotiable container security best practice.

Why It's a Top Priority

Neglecting updates is like knowingly leaving a backdoor open for attackers. A single unpatched vulnerability, such as Log4Shell (CVE-2021-44228), can lead to a complete system compromise via remote code execution. The goal is to minimize the window of opportunity for exploitation by treating infrastructure and dependencies as living components that require constant care. Organizations that automate this process drastically reduce their risk exposure.

Actionable Implementation Strategy

To build a robust updating and patching strategy, you must integrate automation and process into your development and operations workflows.

- Automate Dependency Updates: Integrate tools like GitHub's Dependabot or Renovate Bot directly into your source code repositories. Configure them to scan for outdated dependencies in `pom.xml`, `requirements.txt`, or `package.json` files. These tools automatically open pull requests with the necessary version bumps and can be configured to run your test suite to validate the changes before merging.

- Establish a Rebuild Cadence: Implement a CI/CD pipeline that automatically rebuilds all your golden base images on a regular schedule (e.g., weekly or nightly). This pipeline should run `apt-get update && apt-get upgrade -y` (for Debian-based images) or its equivalent. This, in turn, should trigger automated rebuilds of all dependent application images, ensuring OS-level patches are propagated quickly and consistently.

- Embrace Immutability: Adhere to the principle of immutable infrastructure. Never open a shell in a running container to apply a patch (`kubectl exec -it <pod> -- apt-get upgrade`). Instead, always build, test, and deploy a new, patched image to replace the old one using a rolling update deployment strategy in Kubernetes.

- Implement Phased Rollouts: Use deployment strategies like canary releases or blue-green deployments to test updates safely in a production environment. For Kubernetes, tools like Argo Rollouts or Flagger can automate this process, gradually shifting traffic to the new patched version while monitoring key performance indicators (KPIs) like error rates and latency. If metrics degrade, the rollout is automatically reversed.
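The automated dependency updates described above could start with a small Dependabot configuration. This is a minimal sketch under stated assumptions: the repository is hosted on GitHub, it contains a `package.json` and a `Dockerfile` in its root directory, and a weekly cadence is acceptable.

```yaml
# .github/dependabot.yml
version: 2
updates:
  # Application dependencies declared in package.json (assumed to be in the repo root).
  - package-ecosystem: "npm"
    directory: "/"
    schedule:
      interval: "weekly"
  # Base image tags referenced in FROM lines of the Dockerfile.
  - package-ecosystem: "docker"
    directory: "/"
    schedule:
      interval: "weekly"
```

Each entry results in pull requests for outdated versions, so the existing CI pipeline, including image scanning, runs against every proposed update before it is merged.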

8 Key Container Security Practices Comparison

| Security Practice | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Scan Container Images for Vulnerabilities | Moderate – requires integration with CI/CD and regular updates | Scanning tools, vulnerability databases, compute resources | Early detection of known vulnerabilities, compliance | Dev teams integrating security in CI/CD pipelines | Automates vulnerability detection, improves visibility, reduces attack surface |
| Use Minimal Base Images | Moderate to High – requires refactoring and build process changes | Minimal runtime resources, advanced build expertise | Smaller images, fewer vulnerabilities, faster startup | Applications needing lean, secure containers | Reduces attack surface, faster deployments, cost savings on storage/network |
| Implement Runtime Security Monitoring | High – needs real-time monitoring infrastructure and tuning | Dedicated monitoring agents, compute overhead, incident response | Detects unknown threats and zero-day exploits in real time | High-security environments requiring continuous threat detection | Real-time threat detection, automated response, forensic support |
| Apply Principle of Least Privilege | Moderate – requires detailed capability analysis and application changes | Configuration effort, testing time | Limits privilege escalation, reduces breach impact | Security-sensitive applications and multi-tenant environments | Limits attack blast radius, enforces security by design, compliance |
| Sign and Verify Container Images | Moderate to High – involves cryptographic setup and CI/CD integration | Key management infrastructure, process modification | Ensures image authenticity and integrity | Organizations with strict supply chain security | Prevents tampering, enforces provenance, supports compliance |
| Secure Container Registry Management | Moderate to High – requires secure infrastructure and policy enforcement | Dedicated registry infrastructure, access controls | Protects image storage and distribution | Enterprises managing private and public image registries | Centralized security control, access management, auditability |
| Implement Network Segmentation and Policies | High – complex planning and ongoing maintenance needed | Network policy tools, CNI plugins, monitoring resources | Limits lateral movement, enforces zero-trust networking | Kubernetes clusters, microservices architectures | Reduces breach impact, granular traffic control, compliance support |
| Regularly Update and Patch Container Components | Moderate – requires organized patch management and testing | Automated update tools, testing environments | Reduced exposure to known vulnerabilities | All containerized deployments | Maintains current security posture, reduces technical debt, improves stability |

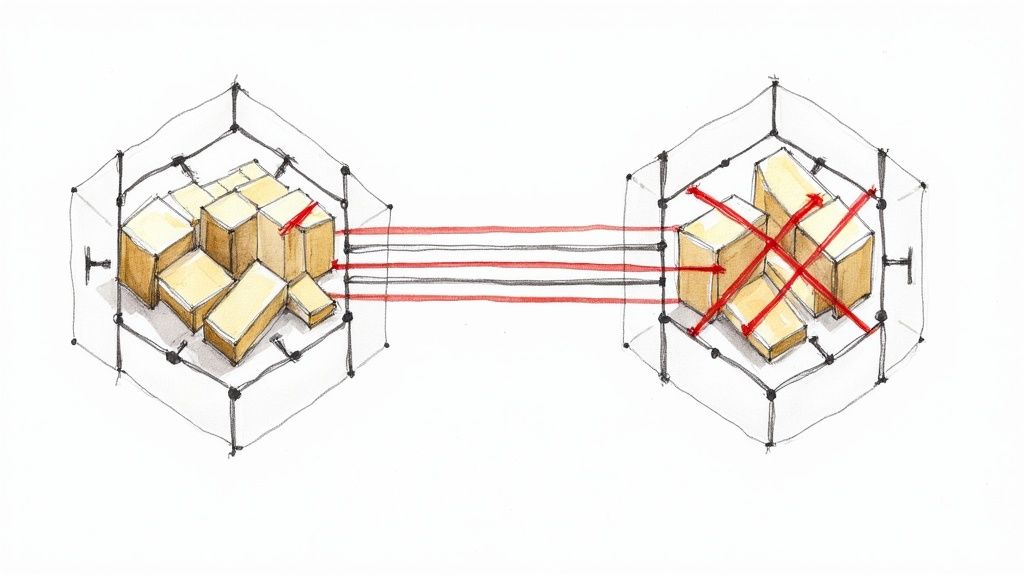

From Theory to Practice: Operationalizing Your Container Security Strategy

Navigating the landscape of container security can feel like assembling a complex puzzle. We've explored a comprehensive set of critical practices, from hardening images at the source to implementing robust runtime defenses. Each piece, from vulnerability scanning and minimal base images to image signing and network segmentation, plays an indispensable role in forming a resilient security posture. The journey, however, doesn't end with understanding these individual concepts. The true measure of a successful security program lies in its operationalization, transforming this collection of best practices into an integrated, automated, and continuously improving system.

The core takeaway is that container security is not a one-time setup but a continuous lifecycle. It must be woven into the fabric of your DevOps culture, becoming an intrinsic part of every commit, build, and deployment. Adopting a "shift-left" mentality is paramount; security cannot be an afterthought bolted on before a release. By integrating tools like Trivy or Clair into your CI/CD pipeline, you automate the detection of vulnerabilities before they ever reach a production environment. Similarly, enforcing the principle of least privilege through Kubernetes Pod Security Standards or OPA Gatekeeper isn't just a configuration task; it's a fundamental design principle that should guide how you architect your applications and their interactions from day one.

Synthesizing a Holistic Defense-in-Depth Strategy

The power of these container security best practices is magnified when they are layered together to create a defense-in-depth strategy. No single control is foolproof, but their combined strength creates a formidable barrier against attackers. Consider this synergy:

- Minimal Base Images (`distroless`, Alpine) reduce the attack surface, giving vulnerability scanners fewer libraries and binaries to flag.

- Vulnerability Scanning in CI/CD catches known CVEs in the packages that remain, ensuring your builds start from a clean slate.

- Image Signing with tools like Notary or Cosign provides a cryptographic guarantee that the clean, scanned image is the exact one being deployed, preventing tampering.

- Principle of Least Privilege (e.g., non-root users, read-only filesystems) limits the potential damage an attacker can do if they manage to exploit a zero-day vulnerability not caught by scanners.

- Runtime Security Monitoring with Falco or Sysdig acts as the final line of defense, detecting and alerting on anomalous behavior within a running container that could indicate a breach.

When viewed through this lens, security evolves from a disjointed checklist into a cohesive, mutually reinforcing system. Each practice covers the potential gaps of another, building a security model that is resilient by design.

Actionable Next Steps: Building Your Security Roadmap

Moving forward requires a structured approach. Your immediate goal should be to establish a baseline and identify the most critical gaps in your current workflows. Begin by integrating an image scanner into your primary development pipeline; this often yields the most immediate and impactful security improvements. Concurrently, conduct an audit of your runtime environments. Are your containers running as root? Are your Kubernetes network policies too permissive? Answering these questions will illuminate your highest-priority targets for remediation.

From there, build a phased roadmap. You might dedicate the next quarter to implementing image signing and securing your container registry. The following quarter could focus on deploying a runtime security tool and refining your network segmentation policies. The key is to make incremental, measurable progress rather than attempting to boil the ocean. This iterative process not only makes the task more manageable but also allows your team to build expertise and adapt these practices to your specific technological and business context. Ultimately, mastering these container security best practices is a strategic investment that pays dividends in reduced risk, increased customer trust, and more resilient, scalable applications.
