A Technical Blueprint for Agile and Continuous Delivery

Master Agile and Continuous Delivery with this technical guide. Learn CI/CD pipeline setup, automation, and advanced strategies to boost your DevOps workflow.

Pairing Agile development methodologies with a Continuous Delivery pipeline creates a highly efficient system for building and deploying software. These are not just buzzwords; Agile provides the iterative development framework, while Continuous Delivery supplies the technical automation to make rapid, reliable releases a reality.

Think of Agile as the strategic planning framework. It dictates the what and why of your development process, breaking down large projects into small, manageable increments. Conversely, Continuous Delivery (CD) is the technical execution engine. It automates the build, test, and release process, ensuring that the increments produced by Agile sprints can be deployed quickly and safely.

The Technical Synergy of Agile and Continuous Delivery

To make this concrete, consider a high-performance software team. Agile is their development strategy. They work in short, time-boxed sprints, continuously integrate feedback, and adapt their plan based on evolving requirements. This iterative approach ensures the product aligns with user needs.

Continuous Delivery is the automated CI/CD pipeline that underpins this strategy. It's the technical machinery that takes committed code, compiles it, runs a gauntlet of automated tests, and prepares a deployment-ready artifact. This automation ensures that every code change resulting from the Agile process can be released to production almost instantly and with high confidence.

The Core Partnership

The relationship between agile and continuous delivery is symbiotic. Agile's iterative nature, focusing on small, frequent changes, provides the ideal input for a CD pipeline. Instead of deploying a monolithic update every six months, your team pushes small, verifiable changes, often multiple times a day. This dramatically reduces deployment risk and shortens the feedback loop from days to minutes.

This operational model is the core of a mature DevOps culture. For a deeper dive into the organizational structure, review our guide on what the DevOps methodology is. It emphasizes breaking down silos between development and operations teams through shared tools and processes.

In essence: Agile provides the backlog of well-defined, small work items. Continuous Delivery provides the automated pipeline to build, test, and release the code resulting from that work. Achieving high-frequency, low-risk deployments requires both.

How They Drive Technical and Business Value

Implementing this combined approach yields significant, measurable benefits across both engineering and business domains. It's not just about velocity; it's about building resilient, high-quality products efficiently.

- Accelerated Time-to-Market: Features and bug fixes can be deployed to production in hours or even minutes after a code commit, providing a significant competitive advantage.

- Reduced Deployment Risk: Deploying small, incremental changes through an automated pipeline drastically lowers the change failure rate. High-performing DevOps teams report change failure rates between 0% and 15%.

- Improved Developer Productivity: Automation of builds, testing, and deployment frees engineers from manual, error-prone tasks, allowing them to focus on feature development and problem-solving.

- Enhanced Feedback Loops: Deploying functional code to users faster enables rapid collection of real-world feedback, ensuring development efforts are aligned with user needs and market demands.

This provides the strategic rationale. Now, let's transition from the "why" to the "how" by examining the specific technical practices for implementing Agile frameworks and building the automated CD pipelines that power them.

Implementing Agile: Technical Practices for Engineering Teams

Let's move beyond abstract theory. For engineering teams, Agile isn't just a project management philosophy; it's a set of concrete practices that define the daily development workflow. We will focus on how frameworks like Scrum and Kanban translate into tangible engineering actions for teams aiming to master agile and continuous delivery.

This is not a niche methodology. Over 70% of organizations globally have adopted Agile practices. Scrum remains the dominant framework, used by 87% of Agile organizations, while Kanban is used by 56%. This data underscores that Agile is a fundamental shift in software development. You can explore more statistics on the widespread adoption of Agile project management.

This wide adoption makes understanding the technical implementation of these frameworks essential for any modern engineer.

Scrum for Engineering Excellence

Scrum provides a time-boxed, iterative structure that imposes a predictable cadence for shipping high-quality code. Its ceremonies are not mere meetings; they serve distinct engineering purposes.

This diagram illustrates the core feedback loops driving the process.

User stories are selected from the product backlog to form a sprint backlog. The development team then implements these stories, producing a potentially shippable software increment at the end of the sprint.

Let's break down the technical value of Scrum's key components:

- Sprint Planning: This is where the engineering team commits to a set of deliverables for the upcoming sprint (typically 1-4 weeks). User stories are broken down into technical tasks, sub-tasks, and implementation details. Complexity is estimated using story points, and dependencies are identified.

- Daily Stand-ups: This is a 15-minute tactical sync-up focused on unblocking technical impediments. A developer might report, "The authentication service API is returning unexpected 503 errors, blocking my work on the login feature." This allows another team member to immediately offer assistance or escalate the issue.

- Sprint Retrospectives: This is a dedicated session for process improvement from a technical perspective. Discussions are concrete: "Our CI build times increased by 20% last sprint; we need to investigate parallelizing the test suite," or "The code review process is slow; let's agree on a 24-hour turnaround SLA." This is where ground-level technical optimizations are implemented.

Kanban for Visualizing Your Workflow

While Scrum is time-boxed, Kanban is a flow-based system designed to optimize the continuous movement of work from conception to deployment. For technical teams, its primary benefit is making process bottlenecks visually explicit, which aligns perfectly with a continuous delivery model.

Kanban's most significant technical advantage is its ability to reduce context switching. By visualizing the entire workflow and enforcing Work-in-Progress (WIP) limits, it compels the team to focus on completing tasks, thereby improving code quality and reducing cycle time.

Kanban's core practices provide direct technical benefits:

- Visualize the Workflow: A Kanban board is a real-time model of your software delivery process, with columns representing stages like Backlog, In Development, Code Review, QA Testing, and Deployed. This visualization immediately highlights where work is accumulating.

- Limit Work-in-Progress (WIP): This is Kanban's core mechanism for managing flow. By setting explicit limits on the number of items per column (e.g., max 2 tasks in Code Review), you prevent developers from juggling multiple tasks, which leads to higher-quality code and fewer bugs caused by cognitive overload.

- Manage Flow: The objective is to maintain a smooth, predictable flow of tasks across the board. If the QA Testing column is consistently empty, it's a clear signal of an upstream bottleneck, prompting the team to investigate and resolve the root cause.
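To make the WIP-limit mechanism concrete, here is a minimal sketch of a board that refuses to accept work once a column is full. The class and method names (KanbanBoard, WipLimitExceeded, add, move) are hypothetical and do not correspond to any particular Kanban tool.

```python
from dataclasses import dataclass, field
from typing import Optional


class WipLimitExceeded(Exception):
    """Raised when adding an item would push a column past its WIP limit."""


@dataclass
class KanbanBoard:
    # Column name -> maximum number of items allowed (None means no limit).
    wip_limits: dict[str, Optional[int]]
    columns: dict[str, list[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Every configured column starts out empty.
        for name in self.wip_limits:
            self.columns.setdefault(name, [])

    def add(self, column: str, item: str) -> None:
        limit = self.wip_limits[column]
        if limit is not None and len(self.columns[column]) >= limit:
            raise WipLimitExceeded(f"{column} is at its WIP limit of {limit}")
        self.columns[column].append(item)

    def move(self, item: str, source: str, target: str) -> None:
        if item not in self.columns[source]:
            raise ValueError(f"{item!r} is not in {source}")
        # Add to the target first: if its limit rejects the item,
        # the exception leaves the source column untouched.
        self.add(target, item)
        self.columns[source].remove(item)


# Illustrative limits only; the "max 2 in Code Review" figure mirrors the text.
board = KanbanBoard({"Backlog": None, "In Development": 3, "Code Review": 2})
board.add("Backlog", "story-1")
board.move("story-1", "Backlog", "In Development")
```

A rejected move is the useful signal here: the team has to finish something in the full column before pulling more work into it.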

Building Your First Continuous Delivery Pipeline

With Agile practices structuring the work, the next step is to build the technical backbone that delivers it: the Continuous Delivery (CD) pipeline.

This pipeline is an automated workflow that takes source code from version control and systematically builds, tests, and prepares it for release. Its purpose is to ensure every code change is validated and deployable. A well-designed pipeline is the foundation for turning the principles of agile and continuous delivery into a practical reality.

The process starts with robust source code management. Git is the de facto standard for version control. A disciplined branching strategy is non-negotiable for managing concurrent development of features, bug fixes, and releases without introducing instability.

Defining Your Branching Strategy

A branching model like GitFlow provides a structured approach to managing your codebase. It uses specific branches for distinct purposes, preventing unstable or incomplete code from reaching the production environment.

A typical GitFlow implementation includes:

- main branch: Represents the production-ready state of the code. Only tested, stable code is merged here.

- develop branch: An integration branch for new features. All feature branches are merged into develop before being prepared for a release.

- feature branches: Created from develop for each new user story or task (e.g., feature/user-authentication). This isolates development work.

- release branches: Branched from develop when preparing for a new production release. Final testing, bug fixing, and versioning occur here before merging into main.

- hotfix branches: Created directly from main to address critical production bugs. The fix is merged back into both main and develop.

This strategy creates a predictable, automatable path for code to travel from development to production.
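Because the branch rules are this regular, a pipeline can enforce them mechanically. The sketch below is one possible check, not part of Git or of any GitFlow tooling: the function name is_merge_allowed and the naming pattern are assumptions, and the rule that release branches also merge back into develop comes from the standard GitFlow model rather than from the list above.

```python
import re

# Branch-type prefix -> branches it may be merged into.
# "release" -> "develop" follows standard GitFlow (an assumption here;
# the list above only mentions merging releases into main).
ALLOWED_TARGETS = {
    "feature": {"develop"},
    "release": {"main", "develop"},
    "hotfix": {"main", "develop"},
}

# Hypothetical naming convention: <type>/<lowercase-slug>.
BRANCH_PATTERN = re.compile(r"^(feature|release|hotfix)/[a-z0-9][a-z0-9._-]*$")


def is_merge_allowed(source: str, target: str) -> bool:
    """Return True if merging source into target respects the rules above."""
    match = BRANCH_PATTERN.match(source)
    if match is None:
        # Unknown or badly named branches are rejected outright.
        return False
    return target in ALLOWED_TARGETS[match.group(1)]
```

A check like this could run as the first step of a pull request pipeline, so that a feature branch aimed directly at main is rejected before any build time is spent on it.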

The Automated Build Stage

The CD pipeline is triggered the moment a developer pushes code to a branch. The first stage is the automated build. Here, the pipeline compiles the source code, resolves dependencies, and packages the application into a deployable artifact (e.g., a JAR file, Docker image, or static web assets).

Tools like Maven, Gradle (for JVM-based languages), or Webpack (for JavaScript) automate this process. They read a configuration file (e.g., pom.xml or build.gradle), download the necessary libraries, compile the code, and package the result. A successful build is the first validation that the code is syntactically correct and its dependencies are met.

The build stage is the first quality gate. A build failure stops the pipeline immediately and notifies the developer. This creates an extremely tight feedback loop, preventing broken code from progressing further.
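The fail-fast behavior of a quality gate can be sketched in a few lines. This is an illustrative model only: real CI systems express stages in their own configuration formats, and the names run_pipeline and StageResult are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StageResult:
    name: str
    passed: bool


def run_pipeline(stages: list[tuple[str, Callable[[], bool]]]) -> list[StageResult]:
    """Run stages in order and stop at the first failure.

    Each stage is a (name, step) pair where step() returns True on success.
    """
    results: list[StageResult] = []
    for name, step in stages:
        passed = step()
        results.append(StageResult(name, passed))
        if not passed:
            # Quality gate: later stages never run on a broken change.
            break
    return results
```

The returned list doubles as the feedback sent to the developer: its last entry is either the final successful stage or the one that stopped the pipeline.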

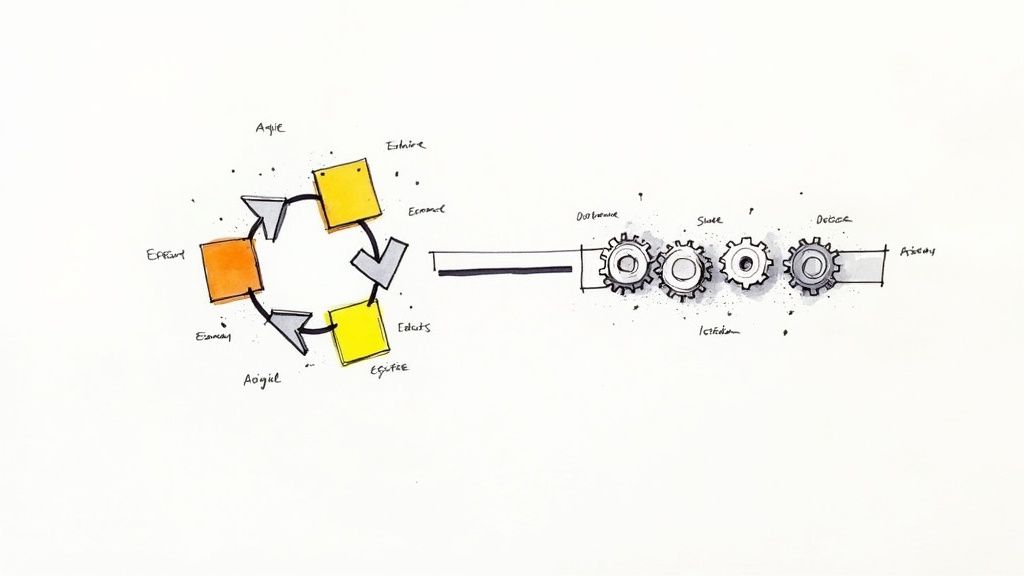

This infographic illustrates how different Agile frameworks structure the work that flows into your pipeline.

Regardless of the framework used, the pipeline serves as the engine that validates and delivers the resulting work.

Integrating Automated Testing Stages

After a successful build, the pipeline proceeds to the most critical phase for quality assurance: automated testing. This is a multi-stage process, with each stage providing a different level of validation.

- Unit Tests: These are fast, granular tests that validate individual components (e.g., a single function or class) in isolation. They are executed using frameworks like JUnit or Jest and should have high code coverage.

- Integration Tests: These tests verify that different components or services of the application interact correctly. This might involve testing the communication between your application and a database or an external API.

- End-to-End (E2E) Tests: E2E tests simulate a full user journey through the application. Tools like Cypress or Selenium automate a web browser to perform actions like logging in, adding items to a cart, and completing a purchase to ensure the entire system functions cohesively.

The table below summarizes these core pipeline stages, their purpose, common tools, and key metrics.

Key Stages of a Continuous Delivery Pipeline

| Pipeline Stage | Purpose | Common Tools | Key Metric |
|---|---|---|---|
| Source Control | Track code changes and manage collaboration. | Git, GitHub, GitLab | Commit Frequency |
| Build | Compile source code into a runnable artifact. | Maven, Gradle, Webpack | Build Success Rate |
| Unit Testing | Verify individual code components in isolation. | JUnit, Jest, PyTest | Code Coverage (%) |
| Integration Testing | Ensure different parts of the application work together. | Postman, REST Assured | Pass/Fail Rate |
| Deployment | Release the application to an environment. | Jenkins, ArgoCD, AWS CodeDeploy | Deployment Frequency |
| Monitoring | Observe application performance and health. | Prometheus, Datadog | Error Rate, Latency |

The effective implementation and automation of these stages are what make a CD pipeline a powerful tool for quality assurance.

Advanced Deployment Patterns

The final stage is deployment. Modern CD pipelines use sophisticated patterns to release changes safely with zero downtime, replacing the risky "big bang" approach.

- Rolling Deployment: The new version is deployed to servers incrementally, one by one or in small batches, replacing the old version. This limits the impact of a potential failure.

- Blue-Green Deployment: Two identical production environments ("Blue" and "Green") are maintained. If Blue is live, the new version is deployed to the idle Green environment. After thorough testing, traffic is switched from Blue to Green via a load balancer, enabling instant release and rollback.

- Canary Deployment: The new version is released to a small subset of users (e.g., 5%). Key performance metrics (error rates, latency) are monitored. If the new version is stable, it is gradually rolled out to the entire user base.

These patterns transform deployments from high-stress events into routine, low-risk operations, which is the ultimate goal of agile and continuous delivery.
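As one way to picture the canary pattern, the sketch below assigns users to the canary group deterministically and applies a simple promotion rule. In practice this routing usually lives in a load balancer or service mesh; the function names and the 1-percentage-point error budget are assumptions made for illustration.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place roughly `percent`% of users in the canary group.

    Hashing the user ID keeps each user on the same version across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value in 0..99
    return bucket < percent


def should_promote(canary_error_rate: float,
                   baseline_error_rate: float,
                   max_increase: float = 0.01) -> bool:
    """Promote only if the canary's error rate stays within the allowed increase.

    max_increase is a hypothetical budget (1 percentage point by default).
    """
    return canary_error_rate <= baseline_error_rate + max_increase
```

Raising percent step by step (for example 5, then 25, then 100) while should_promote keeps returning True mirrors the gradual rollout described above.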

Automated Testing as Your Pipeline Quality Gate

A CD pipeline is only as valuable as the confidence it provides. High-frequency releases are only possible with a robust, automated testing strategy that functions as a quality gate at each stage of the pipeline.

This is where agile and continuous delivery are inextricably linked. Agile promotes rapid iteration, and CD provides the automation engine. Automated testing is the safety mechanism that allows this engine to operate at high speed, preventing regressions and bugs from reaching production.

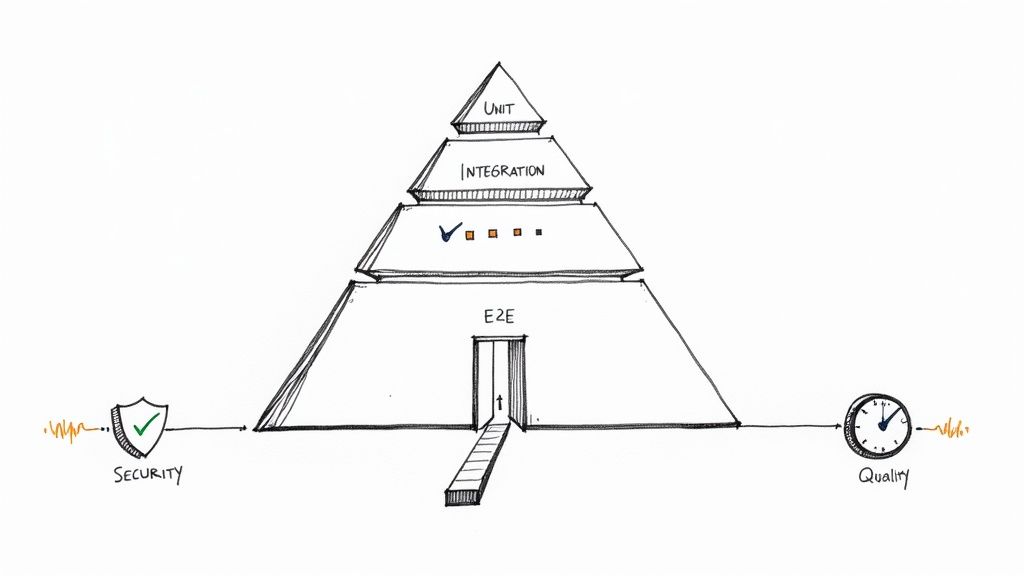

Building on the Testing Pyramid

The "testing pyramid" is a model for structuring a balanced and efficient test suite. It advocates for a large base of fast, low-level tests and progressively fewer tests as you move up to slower, more complex ones. The primary goal is to optimize for fast feedback.

The core principle of the pyramid is to maximize the number of fast, reliable unit tests, have a moderate number of integration tests, and a minimal number of slow, brittle end-to-end tests. This strategy balances test coverage with feedback velocity.

The Foundation: Unit Tests

Unit tests form the base of the pyramid. They are small, isolated tests that verify a single piece of code (a function, method, or class) in complete isolation from external dependencies like databases or APIs. As a result, they execute extremely quickly—thousands can run in seconds.

For example, a unit test for an e-commerce application might validate a calculate_tax() function by passing it a price and location and asserting that the returned tax amount is correct. This provides the first and most immediate line of defense against bugs.
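A sketch of what that test might look like, written in the plain-function style that PyTest collects. The calculate_tax implementation and the rates in TAX_RATES are invented for illustration; they are not real tax rates or a real library API.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical rates for illustration only.
TAX_RATES = {"REGION_A": Decimal("0.10"), "REGION_B": Decimal("0")}


def calculate_tax(price: Decimal, location: str) -> Decimal:
    """Return the tax owed on `price`, rounded to whole cents."""
    if price < 0:
        raise ValueError("price must not be negative")
    rate = TAX_RATES[location]  # raises KeyError for unknown locations
    return (price * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def test_applies_location_rate():
    assert calculate_tax(Decimal("100.00"), "REGION_A") == Decimal("10.00")


def test_rounds_to_whole_cents():
    # 19.99 * 0.10 = 1.999, which rounds half-up to 2.00
    assert calculate_tax(Decimal("19.99"), "REGION_A") == Decimal("2.00")


def test_zero_rate_region_owes_nothing():
    assert calculate_tax(Decimal("50.00"), "REGION_B") == Decimal("0.00")
```

Each test touches no database, network, or file system, which is what allows thousands of them to run in seconds.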

The Middle Layer: Service and Integration Tests

Integration tests form the middle layer, verifying the interactions between different components of your system. This includes testing database connectivity, API communication between microservices, or interactions with third-party services.

Key strategies for effective integration tests include:

- Isolating Services: Use test doubles like mocks or stubs to simulate the behavior of external dependencies. This allows you to test the integration point itself without relying on a fully operational external service.

- Managing Test Data: Use tools like Testcontainers to programmatically spin up and seed ephemeral databases for each test run. This ensures tests are reliable, repeatable, and run in a clean environment.
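The service-isolation strategy can be sketched with Python's standard unittest.mock module. CheckoutService and the gateway's charge method are hypothetical names; only Mock and assert_called_once_with are real library features.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical service that depends on an external payment gateway."""

    def __init__(self, payment_gateway):
        self.payment_gateway = payment_gateway

    def checkout(self, order_id: str, amount_cents: int) -> str:
        response = self.payment_gateway.charge(
            order_id=order_id, amount_cents=amount_cents
        )
        return "confirmed" if response["status"] == "approved" else "payment_failed"


def test_checkout_confirms_when_gateway_approves():
    gateway = Mock()  # stands in for the real external service
    gateway.charge.return_value = {"status": "approved"}

    service = CheckoutService(gateway)

    assert service.checkout("order-1", 2500) == "confirmed"
    # Verify the integration point itself: the call made across the boundary.
    gateway.charge.assert_called_once_with(order_id="order-1", amount_cents=2500)


def test_checkout_reports_failure_when_gateway_declines():
    gateway = Mock()
    gateway.charge.return_value = {"status": "declined"}

    assert CheckoutService(gateway).checkout("order-2", 999) == "payment_failed"
```

The trade-off is that a mock only encodes what you believe the real gateway returns, so a small number of tests against the real dependency (or a container running it) is still worthwhile.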

The Peak: End-to-End Tests

At the top of the pyramid are end-to-end (E2E) tests. These are the most comprehensive but also the most complex and slowest tests. They simulate a complete user journey through the application, typically by using a tool like Selenium or Cypress to automate a real web browser.

Due to their slowness and fragility (propensity to fail due to non-code-related issues), E2E tests should be used sparingly. Reserve them for validating only the most critical, user-facing workflows, such as user registration or the checkout process.

To effectively leverage these tools, a deep understanding is essential. Reviewing common Selenium Interview Questions can provide valuable insights into its practical application.

Integrating Non-Functional Testing

A comprehensive quality gate must extend beyond functional testing to include non-functional requirements like security and performance. This embodies the "Shift Left" philosophy: identifying and fixing issues early in the development lifecycle when they are least expensive to remediate. We cover this topic in more detail in our guide on how to automate software testing.

Integrating these checks directly into the CD pipeline is a powerful practice.

- Automated Security Scans:

  - Static Application Security Testing (SAST): Tools scan source code for known security vulnerabilities before compilation.

  - Dynamic Application Security Testing (DAST): Tools probe the running application to identify vulnerabilities by simulating attacks.

- Performance Baseline Checks: Automated performance tests run with each build to measure key metrics like response time and resource consumption. The build fails if a change introduces a significant performance regression.
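A performance baseline check boils down to a measurement plus a comparison. The sketch below shows one simple form of it; the helper names and the 10% tolerance are assumptions, and a real setup would typically use a dedicated load-testing tool and a stored baseline rather than in-process timing.

```python
import statistics
import time
from typing import Callable


def measure_median_seconds(func: Callable[[], object], runs: int = 5) -> float:
    """Time `func` several times and return the median duration in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    # The median is less sensitive to one-off spikes than the mean.
    return statistics.median(samples)


def is_regression(measured: float, baseline: float, tolerance: float = 0.10) -> bool:
    """Return True if `measured` exceeds `baseline` by more than `tolerance`.

    A tolerance of 0.10 allows results up to 10% slower than the baseline.
    """
    return measured > baseline * (1 + tolerance)
```

A pipeline step would call is_regression with the stored baseline and exit with a non-zero status when it returns True, which is what fails the build.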

By integrating this comprehensive suite of automated checks, the CD pipeline evolves from a simple deployment script into an intelligent quality gate, providing the confidence needed to ship code continuously.

How to Bridge Agile Planning with Your CD Pipeline

Connecting your Agile project management tool (e.g., Jira, Azure DevOps) to your CD pipeline creates a powerful, transparent, and traceable workflow. This technical integration links the planned work (user stories) to the delivered code, providing a closed feedback loop.

The process begins when a developer selects a user story, such as "Implement OAuth Login," and creates a new feature branch in Git named feature/oauth-login, for example with git checkout -b feature/oauth-login. Creating this branch establishes the first link between the Agile plan and the technical implementation.

From this point, every git push to that branch automatically triggers the CD pipeline, initiating a continuous validation process. The pipeline becomes an active participant, running builds, unit tests, and static code analysis against every commit.

From Pull Request to Automated Feedback

When the feature is complete, the developer opens a pull request (PR) to merge the feature branch into the develop integration branch. This action triggers the full CI/CD workflow, which acts as an automated quality gate, providing immediate feedback directly within the PR interface.

This tight integration is a cornerstone of a modern agile and continuous delivery practice, making the pipeline's status a central part of the code review process.

This feedback loop typically includes:

- Build Status: A clear visual indicator (e.g., a green checkmark) confirms that the code compiles successfully. A failure blocks the merge.

- Test Results: The pipeline reports detailed test results, such as a 100% unit test pass rate and 98% code coverage.

- Code Quality Metrics: Static analysis tools like SonarQube post comments directly in the PR, highlighting code smells, cyclomatic complexity issues, or duplicated code blocks.

- Security Vulnerabilities: Integrated security scanners can automatically flag vulnerabilities introduced by new dependencies, blocking the merge until the insecure package is updated.

This immediate, automated feedback enforces quality standards without manual intervention.
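The merge decision behind such a gate can be modeled as a function that turns check results into a list of blocking reasons. This is a sketch under assumptions: the field names and the 80% coverage threshold are hypothetical, and hosted Git platforms implement this through their own branch-protection settings rather than custom code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PullRequestChecks:
    build_passed: bool
    tests_passed: int
    tests_total: int
    coverage_percent: float
    open_vulnerabilities: int


def blocking_reasons(checks: PullRequestChecks, min_coverage: float = 80.0) -> list[str]:
    """Return every reason the PR may not merge; an empty list means it may."""
    reasons = []
    if not checks.build_passed:
        reasons.append("build failed")
    if checks.tests_passed < checks.tests_total:
        failed = checks.tests_total - checks.tests_passed
        reasons.append(f"{failed} test(s) failed")
    if checks.coverage_percent < min_coverage:
        reasons.append(
            f"coverage {checks.coverage_percent:.1f}% is below {min_coverage:.1f}%"
        )
    if checks.open_vulnerabilities > 0:
        reasons.append(f"{checks.open_vulnerabilities} known vulnerability(ies)")
    return reasons
```

Collecting all reasons instead of stopping at the first gives the developer one complete report per push.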

Shifting Left for Built-In Quality

This automated feedback mechanism is the practical application of the "Shift Left" philosophy. Instead of discovering quality and security issues late in the lifecycle (on the right side of a project timeline), they are identified and fixed early (on the left), during development.

By integrating security scans, dependency analysis, and performance tests directly into the pull request workflow, the pipeline is transformed from a mere deployment tool into an integral part of the Agile development process itself. This aligns with the Agile principle of building quality in from the very beginning.

This methodology is becoming a global standard. Enterprise adoption of Agile is projected to grow at a CAGR of 19.5% through 2026, with 83% of companies citing faster delivery as the primary driver. This trend highlights the necessity of supporting Agile principles with robust automation. You can explore how Agile is influencing business strategy in this detailed statistical report.

Working Through the Common Roadblocks

Transitioning to an agile and continuous delivery model is a significant cultural and technical undertaking that often uncovers deep-seated challenges. Overcoming these requires practical solutions to common implementation hurdles.

Cultural resistance from teams accustomed to traditional waterfall methodologies is common. The shift to short, iterative cycles can feel chaotic without proper guidance. Additionally, breaking down organizational silos between development and operations requires a deliberate effort to foster shared ownership and communication.

Dealing with Technical Debt in Old Systems

A major technical challenge is integrating automated testing into legacy codebases that were not designed for testability. Writing fast, reliable unit tests for such systems can seem impossible.

Instead of attempting a large-scale refactoring, add tests incrementally, borrowing the gradual mindset of the "strangler" pattern for replacing legacy systems piece by piece. When modifying existing code for a new feature or bug fix, write tests specifically for the code being changed. This incremental approach gradually increases test coverage and builds a safety net over time without halting new development.

Treat the lack of tests as technical debt. Each new commit should pay down a small portion of this debt. Over time, this makes the codebase more stable, maintainable, and amenable to further automation.

Taming Complex Database Migrations

Automating database schema changes is a high-risk area where errors can cause production outages. The solution is to manage database changes with the same rigor as application code.

Key practices for de-risking database deployments include:

- Version Control Your Schemas: Store all database migration scripts in Git alongside the application code. This provides a clear audit trail of all changes.

- Make Small, Reversible Changes: Design migrations to be small, incremental, and backward-compatible. This allows the application to be rolled back without requiring a complex database rollback.

- Test Migrations in the Pipeline: The CI/CD pipeline should automate the process of spinning up a temporary database, applying the new migration scripts, and running tests to validate both schema and data integrity before deployment.
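The pipeline step described in the last item can be sketched with an in-memory SQLite database from Python's standard library. The migration names, the schema_migrations bookkeeping table, and the helper functions are illustrative assumptions; production teams generally rely on a dedicated migration tool and test against the same database engine they run in production.

```python
import sqlite3

# Ordered, versioned migrations as they might be stored in Git (hypothetical).
MIGRATIONS = [
    ("001_create_users",
     "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    # Adding a nullable column is backward-compatible: old code keeps working.
    ("002_add_display_name",
     "ALTER TABLE users ADD COLUMN display_name TEXT"),
]


def apply_migrations(conn: sqlite3.Connection, migrations) -> list[str]:
    """Apply pending migrations in order and return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_migrations (version TEXT PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_migrations")}
    newly_applied = []
    for version, sql in migrations:
        if version in applied:
            continue  # already applied; safe to re-run the whole list
        conn.execute(sql)
        # Record the version only after the statement succeeded.
        conn.execute("INSERT INTO schema_migrations (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied


def column_names(conn: sqlite3.Connection, table: str) -> list[str]:
    """Return the column names of `table` (second field of PRAGMA table_info)."""
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]
```

A pipeline test would open sqlite3.connect(":memory:"), call apply_migrations, and then assert on column_names and on a few representative inserts before the change is allowed to proceed.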

Navigating the People Problem

Ultimately, the success of this transition depends on people. As Agile practices expand beyond software teams into other business functions, this becomes even more critical.

The 16th State of Agile report highlights that Agile principles are increasingly shaping leadership, marketing, and operations. This enterprise-wide adoption demonstrates that Agile is becoming a cultural backbone for business agility. You can learn more about how Agile is reshaping entire companies in these recent report insights. Overcoming resistance is not just an IT challenge but a strategic business objective.

Questions We Hear All The Time

As teams implement these practices, several key questions frequently arise. Clarifying these concepts is essential for alignment and success.

Is Continuous Delivery the Same as Continuous Deployment?

No, but they are closely related concepts representing different levels of automation.

Continuous Delivery (CD) ensures that every code change is automatically built and tested, and that each change passing those checks is deployed to a staging environment, resulting in a production-ready artifact. However, the final deployment to production requires a manual trigger.

Continuous Deployment, in contrast, automates the final step. If a change passes all automated quality gates, it is automatically deployed to production without any human intervention. Teams typically mature to Continuous Delivery first, building the necessary confidence and automated safeguards before progressing to Continuous Deployment.
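The difference between the two comes down to a single decision at the end of the pipeline, which this small sketch tries to capture. The enum and function names are hypothetical and exist only to illustrate the distinction.

```python
from enum import Enum


class ReleaseMode(Enum):
    CONTINUOUS_DELIVERY = "continuous_delivery"
    CONTINUOUS_DEPLOYMENT = "continuous_deployment"


def should_deploy_to_production(mode: ReleaseMode,
                                gates_passed: bool,
                                manually_approved: bool = False) -> bool:
    """Decide whether a change goes to production.

    Both modes require every automated quality gate to pass. Continuous
    Delivery additionally waits for a human to trigger the release.
    """
    if not gates_passed:
        return False
    if mode is ReleaseMode.CONTINUOUS_DEPLOYMENT:
        return True
    return manually_approved
```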

How Does Feature Flagging Help with Continuous Delivery?

Feature flags (or feature toggles) are a powerful technique for decoupling code deployment from feature release. They allow you to deploy new, incomplete code to production but keep it hidden behind a runtime configuration flag, invisible to users.

This technique is a key enabler for agile and continuous delivery:

- Test in Production: You can enable a new feature for a specific subset of users (e.g., internal staff or a beta group) to gather feedback from the live production environment without a full-scale launch.

- Enable Trunk-Based Development: Developers can merge their work into the main branch frequently, even if the feature is not complete. The unfinished code remains disabled by a feature flag, preventing instability.

- Instant Rollback ("Kill Switch"): If a newly released feature causes issues, you can instantly disable it by turning off its feature flag, mitigating the impact without requiring a full deployment rollback.
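The three uses above can be illustrated with a minimal in-memory flag. This is a sketch, not the API of any feature-flag product: the FeatureFlag class, its fields, and the flag name are hypothetical, and real systems load flag state from a runtime configuration service so it can change without a deployment.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    name: str
    enabled: bool = False  # master switch; setting it to False is the kill switch
    allowed_users: set[str] = field(default_factory=set)  # e.g. staff or beta group
    rollout_percent: int = 0  # share of remaining users who see the feature

    def is_enabled_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False
        if user_id in self.allowed_users:
            return True
        # Hash flag name + user ID so each flag gets an independent, stable split.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % 100 < self.rollout_percent


# Deployed but dark: the code path ships to production and nobody sees it.
oauth_login = FeatureFlag("oauth-login")

# Test in production: enable it for an internal group only.
oauth_login.enabled = True
oauth_login.allowed_users = {"staff-1", "staff-2"}
```

Application code then wraps the new path in a check such as oauth_login.is_enabled_for(user_id), and setting enabled back to False disables the feature for everyone at once.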
