10 Terraform Modules Best Practices for Production-Grade IaC

Master our top 10 Terraform modules best practices. Learn technical strategies for versioning, testing, and security to build reliable IaC in 2025.

Terraform has fundamentally transformed infrastructure management, but creating robust, reusable modules is an art form that requires discipline and strategic thinking. Simply writing HCL isn't enough; true success lies in building modules that are secure, scalable, and easy for your entire team to consume without ambiguity. This guide moves beyond the basics, offering a deep dive into 10 technical Terraform modules best practices that separate fragile, one-off scripts from production-grade infrastructure blueprints.

We will provide a structured approach to module development, covering everything from disciplined versioning and automated testing to sophisticated structural patterns that ensure your Infrastructure as Code is as reliable as the systems it provisions. The goal is to establish a set of standards that make your modules predictable, maintainable, and highly composable. Following these practices helps prevent common pitfalls like configuration drift, unexpected breaking changes, and overly complex, unmanageable code.

Each point in this listicle offers specific implementation details, code examples, and actionable insights designed for immediate application. Whether you're a seasoned platform engineer standardizing your organization's infrastructure or a DevOps consultant building solutions for clients, these strategies will help you build Terraform modules that accelerate delivery and significantly reduce operational risk. Let's explore the essential practices for mastering Terraform module development and building infrastructure that scales.

1. Use Semantic Versioning for Module Releases

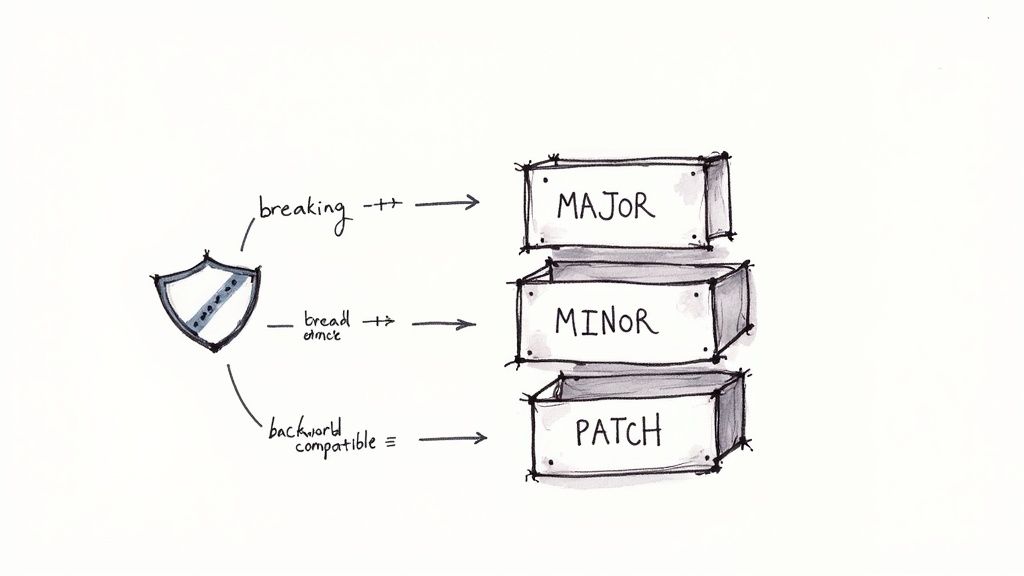

One of the most crucial Terraform modules best practices is to treat your modules like software artifacts by implementing strict version control. Semantic Versioning (SemVer) provides a clear and predictable framework for communicating the nature of changes between module releases. This system uses a three-part MAJOR.MINOR.PATCH number to signal the impact of an update, preventing unexpected disruptions in production environments.

Adopting SemVer allows module consumers to confidently manage dependencies. When you see a version change, you immediately understand its potential impact: a PATCH update is a safe bug fix, a MINOR update adds features without breaking existing configurations, and a MAJOR update signals significant, backward-incompatible changes that require careful review and likely refactoring.

How Semantic Versioning Works

The versioning scheme is defined by a simple set of rules that govern how version numbers get incremented:

- MAJOR version (X.y.z): Incremented for incompatible API changes. This signifies a breaking change, such as removing a variable, renaming an output, or fundamentally altering a resource's behavior.

- MINOR version (x.Y.z): Incremented when you add functionality in a backward-compatible manner. Examples include adding a new optional variable or a new output.

- PATCH version (x.y.Z): Incremented for backward-compatible bug fixes. This could be correcting a resource property or fixing a typo in an output.

For instance, the widely used community AWS VPC module (terraform-aws-modules/vpc/aws), a staple of the ecosystem, follows SemVer. A jump from v3.14.0 to v3.15.0 indicates new features were added, while a change to v4.0.0 signals breaking changes. This predictability is why the Terraform Registry requires semantic version tags for all published modules.

Actionable Implementation Tips

To effectively implement SemVer in your module development workflow:

- Tag Git Releases: Always tag your releases in Git with a `v` prefix, like `v1.2.3`. This is a standard convention that integrates well with CI/CD systems and the Terraform Registry. The command is `git tag v1.2.3` followed by `git push origin v1.2.3`.

- Maintain a `CHANGELOG.md`: Clearly document all breaking changes, new features, and bug fixes in a changelog file. This provides essential context beyond the version number.

- Use Version Constraints: In your root module, specify version constraints for module sources to prevent accidental upgrades to breaking versions. Use the pessimistic version operator for a safe balance: `version = "~> 1.0"` allows patch and minor releases but not major ones.

- Automate Versioning: Integrate tools like semantic-release into your CI/CD pipeline. This can analyze commit messages (e.g., `feat:`, `fix:`, `BREAKING CHANGE:`) to automatically determine the next version number, generate changelog entries, and create the Git tag.
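As a minimal sketch of how a consumer might pin a versioned module, the fragment below shows both a registry source and a Git source. The registry address, repository URL, and module names are hypothetical placeholders, not real modules.

```hcl
# Registry source: the `version` argument accepts constraint expressions.
module "network" {
  source  = "example-org/network/aws" # hypothetical registry address
  version = "~> 1.2"                  # any 1.x release from 1.2 up, never 2.0

  # ... module inputs
}

# Git source: `version` is not supported here, so pin an exact tag with `ref`.
module "network_from_git" {
  source = "git::https://example.com/example-org/terraform-aws-network.git?ref=v1.2.3" # hypothetical URL

  # ... module inputs
}
```

The `version` argument only applies to modules installed from a registry, which is one reason consistent Git tags matter for modules consumed directly from a repository.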

2. Implement a Standard Module Structure

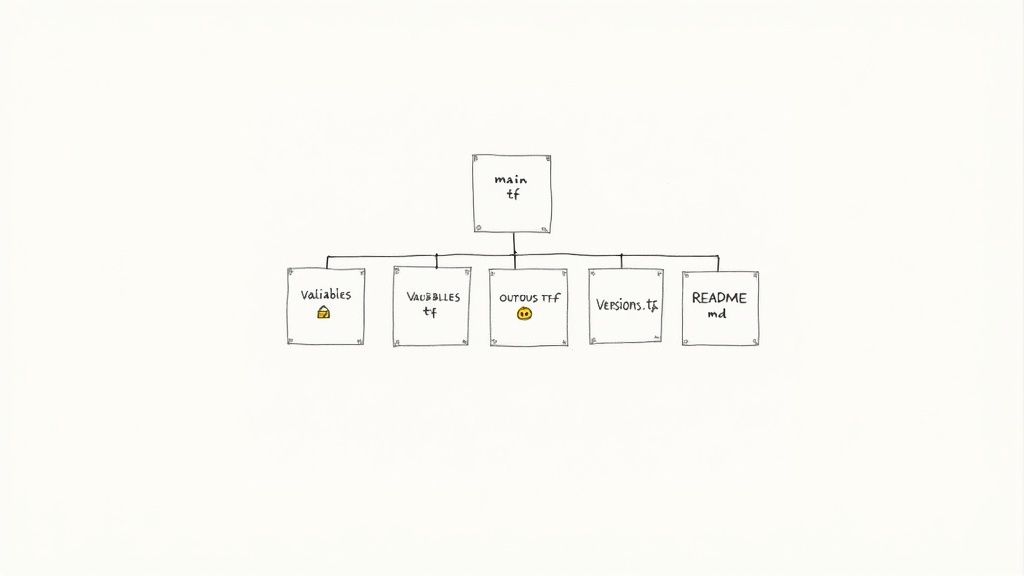

Adopting a standardized file structure is a foundational best practice for creating predictable, maintainable, and discoverable Terraform modules. HashiCorp recommends a standard module structure that logically organizes files, making it instantly familiar to any developer who has worked with Terraform. This convention separates resource definitions, variable declarations, and output values into distinct files, which dramatically improves readability and simplifies collaboration.

This structural consistency is not just a stylistic choice; it's a functional one. It allows developers to quickly locate specific code blocks, understand the module's interface (inputs and outputs) at a glance, and integrate automated tooling for documentation and testing. When modules are organized predictably, the cognitive overhead for consumers is significantly reduced, accelerating development and minimizing errors.

How the Standard Structure Works

The recommended structure organizes your module's code into a set of well-defined files, each with a specific purpose. This separation of concerns is a core principle behind effective Terraform modules best practices.

- `main.tf`: Contains the primary set of resources that the module manages. This is the core logic of your module.

- `variables.tf`: Declares all input variables for the module, including their types, descriptions, and default values. It defines the module's API.

- `outputs.tf`: Declares the output values that the module will return to the calling configuration. This is what consumers can use from your module.

- `versions.tf`: Specifies the required versions for Terraform and any providers the module depends on, ensuring consistent behavior across environments.

- `README.md`: Provides comprehensive documentation, including the module's purpose, usage examples, and details on all inputs and outputs.

Prominent open-source projects like the Google Cloud Foundation Toolkit and the Azure Verified Modules initiative mandate this structure across their vast collections of modules. This ensures every module, regardless of its function, feels consistent and professional.

Actionable Implementation Tips

To effectively implement this standard structure in your own modules:

- Generate Documentation Automatically: Use tools like `terraform-docs` to auto-generate your `README.md` from variable and output descriptions. Integrate it into a pre-commit hook to keep documentation in sync with your code.

- Isolate Complex Logic: Keep `main.tf` focused on primary resource creation. Move complex data transformations or computed values into a separate `locals.tf` file to improve clarity.

- Provide Usage Examples: Include a complete, working example in an `examples/` subdirectory. This serves as both a test case and a quick-start guide for consumers. For those just starting, you can learn the basics of Terraform module structure to get a solid foundation.

- Include Licensing and Changelogs: For shareable modules, always add a `LICENSE` file (e.g., Apache 2.0, MIT) to clarify usage rights and a `CHANGELOG.md` to document changes between versions.
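To illustrate the "Isolate Complex Logic" tip, here is a small sketch of a `locals.tf` that keeps naming and tagging logic out of the resource blocks. The variable names, tag keys, and bucket suffix are assumptions chosen for the example.

```hcl
# variables.tf
variable "name_prefix" { type = string }
variable "environment" { type = string }

variable "extra_tags" {
  type    = map(string)
  default = {}
}

# locals.tf: computed values live here rather than inline in resources
locals {
  name = "${var.name_prefix}-${var.environment}"

  # Later arguments to merge() win, so callers can override the defaults.
  common_tags = merge(
    {
      Name        = local.name
      Environment = var.environment
      ManagedBy   = "terraform"
    },
    var.extra_tags,
  )
}

# main.tf stays focused on the resource itself
resource "aws_s3_bucket" "this" {
  bucket = "${local.name}-artifacts"
  tags   = local.common_tags
}
```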

3. Design for Composition Over Inheritance

One of the most impactful Terraform modules best practices is to favor composition over inheritance. This means building small, focused modules that do one thing well, which can then be combined like building blocks. This approach contrasts sharply with creating large, monolithic modules filled with complex logic and boolean flags to handle every possible use case. By designing for composition, you create a more flexible, reusable, and maintainable infrastructure codebase.

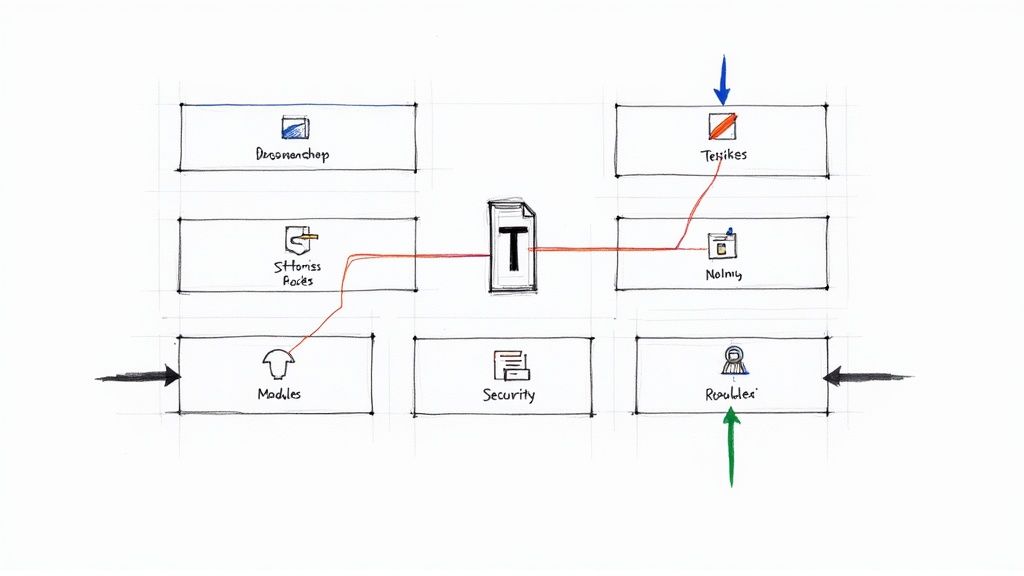

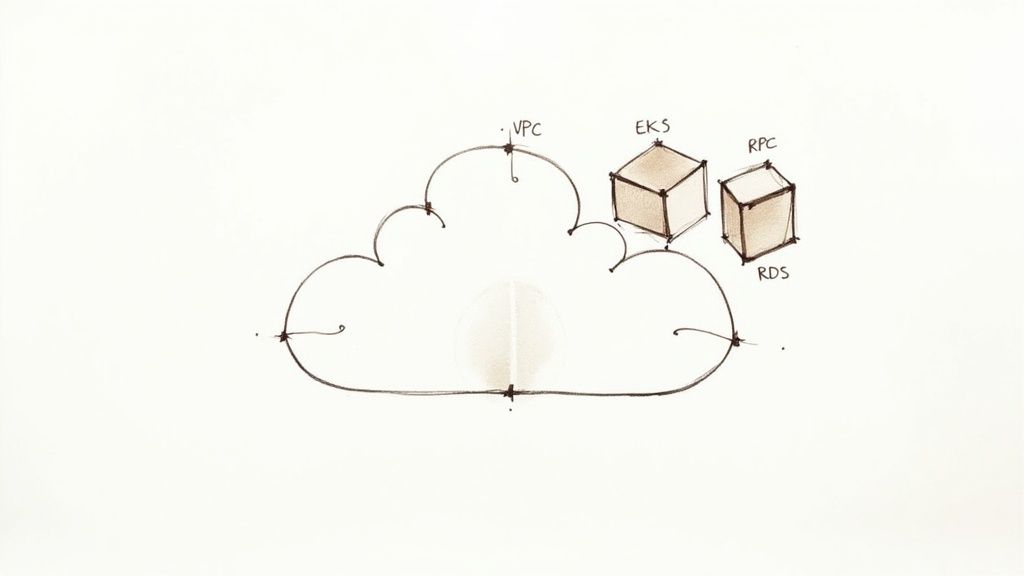

Inspired by the Unix philosophy, this practice encourages creating modules with a clear, singular purpose. Instead of a single aws-infrastructure module that provisions a VPC, EKS cluster, and RDS database, you would create separate aws-vpc, aws-eks, and aws-rds modules. The outputs of one module (like VPC subnet IDs) become the inputs for another, allowing you to "compose" them into a complete environment. This pattern significantly reduces complexity and improves testability.

How Composition Works

Composition in Terraform is achieved by using the outputs of one module as the inputs for another. This creates a clear and explicit dependency graph where each component is independent and responsible for a specific piece of infrastructure.

- Small, Focused Modules: Each module manages a single, well-defined resource or logical group of resources (e.g., an `aws_security_group`, an `aws_s3_bucket`, or an entire VPC network).

- Clear Interfaces: Modules expose necessary information through outputs, which serve as a public API for other modules to consume.

- Wrapper Modules: For common patterns, you can create "wrapper" or "composite" modules that assemble several smaller modules into a standard architecture, promoting DRY (Don't Repeat Yourself) principles without sacrificing flexibility.

A prime example is Gruntwork's infrastructure catalog, which offers separate modules like vpc-app, vpc-mgmt, and vpc-peering instead of a single, all-encompassing VPC module. This allows consumers to pick and combine only the components they need.

Actionable Implementation Tips

To effectively implement a compositional approach in your module design:

- Ask "Does this do one thing well?": When creating a module, constantly evaluate its scope. If you find yourself adding numerous conditional variables (`create_x = true`), it might be a sign the module should be split.

- Chain Outputs to Inputs: Design your modules to connect seamlessly. For example, the `vpc_id` and `private_subnet_ids` outputs from a VPC module should be directly usable as inputs for a compute module.

  ```hcl
  # modules/vpc/outputs.tf
  output "vpc_id" {
    value = aws_vpc.main.id
  }

  output "private_subnet_ids" {
    value = aws_subnet.private[*].id
  }

  # main.tf (root module): feed the VPC outputs into the EKS module
  module "vpc" {
    source = "./modules/vpc"
    # ...
  }

  module "eks" {
    source  = "./modules/eks"
    vpc_id  = module.vpc.vpc_id
    subnets = module.vpc.private_subnet_ids
    # ...
  }
  ```

- Avoid Deep Nesting: Keep module dependency depth reasonable, ideally no more than two or three levels. Overly nested modules can become difficult to understand and debug.

- Document Composition Patterns: Use the `examples/` directory within your module to demonstrate how it can be composed with other modules to build common architectures. This serves as powerful, executable documentation.

4. Use Input Variable Validation and Type Constraints

A core tenet of creating robust and user-friendly Terraform modules is to implement strict input validation. By leveraging Terraform's type constraints and custom validation rules, you can prevent configuration errors before a terraform apply is even attempted. This practice shifts error detection to the left, providing immediate, clear feedback to module consumers and ensuring the integrity of the infrastructure being deployed.

Enforcing data integrity at the module boundary is a critical aspect of Terraform modules best practices. It makes modules more predictable, self-documenting, and resilient to user error. Instead of allowing a misconfigured value to cause a cryptic provider error during an apply, validation rules catch the mistake during the planning phase, saving time and preventing failed deployments.

How Input Validation Works

Custom validation rules, generally available since Terraform 0.13, let module authors define precise requirements for input variables. They work together with type constraints and defaults:

- Type Constraints: Explicitly defining a variable's type (`string`, `number`, `bool`, `list(string)`, `map(string)`, `object`) is the first line of defense. For complex, structured data, `object` types provide a schema for nested attributes.

- Validation Block: Within a `variable` block, one or more `validation` blocks can be added. Each contains a `condition` (an expression that must return `true` for the input to be valid) and a custom `error_message`.

- Default Values: Providing sensible defaults for optional variables simplifies the module's usage and guides users.

For example, a module for an AWS RDS instance can validate that the backup_retention_period is within the AWS-allowed range of 0 to 35 days. This simple check prevents deployment failures and clarifies platform limitations directly within the code.
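The retention-period check described above could be sketched roughly as follows. The default of 7 days and the description text are assumptions for illustration.

```hcl
variable "backup_retention_period" {
  type        = number
  description = "Number of days to retain automated backups (0 disables them)."
  default     = 7 # assumed default, adjust to your own policy

  validation {
    # Must be a whole number within the 0 to 35 day range.
    condition = (
      var.backup_retention_period >= 0 &&
      var.backup_retention_period <= 35 &&
      floor(var.backup_retention_period) == var.backup_retention_period
    )
    error_message = "The backup retention period must be an integer between 0 and 35."
  }
}
```

With this in place, passing a value such as 90 fails during `terraform plan` with the custom message, before any provider call is made.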

Actionable Implementation Tips

To effectively integrate validation into your modules:

- Always Be Explicit: Specify a `type` for every variable. Avoid leaving it as `any` unless absolutely necessary, as this bypasses crucial type-checking.

- Use Complex Types for Grouped Data: When multiple variables are related, group them into a single `object` type. Attributes are required by default; wrap an attribute's type in the `optional()` modifier (Terraform 1.3 and later) to make it optional, with an optional default value.

  ```hcl
  variable "database_config" {
    type = object({
      instance_class    = string
      allocated_storage = number
      engine_version    = optional(string, "13.7")
    })
    description = "Configuration for the RDS database instance."
  }
  ```

- Enforce Naming Conventions: Use `validation` blocks with regular expressions to enforce resource naming conventions, such as `condition = can(regex("^[a-z0-9-]{3,63}$", var.bucket_name))`.

- Write Clear Error Messages: Your `error_message` should explain why the value is invalid and what a valid value looks like. For instance: "The backup retention period must be an integer between 0 and 35."

- Mark Sensitive Data: Always set `sensitive = true` for variables that handle secrets like passwords, API keys, or tokens. This prevents Terraform from displaying their values in plan and apply output. The values are still written to the state file, so the state itself must also be protected.

5. Maintain Comprehensive and Auto-Generated Documentation

A well-architected Terraform module is only as good as its documentation. Without clear instructions, even the most powerful module becomes difficult to adopt and maintain. One of the most critical Terraform modules best practices is to automate documentation generation, ensuring it stays synchronized with the code, remains comprehensive, and is easy for consumers to navigate. Tools like terraform-docs are essential for this process.

Automating documentation directly from your HCL code and comments creates a single source of truth. This practice eliminates the common problem of outdated README files that mislead users and cause implementation errors. By programmatically generating details on inputs, outputs, and providers, you guarantee that what users read is precisely what the code does, fostering trust and accelerating adoption across teams.

How Automated Documentation Works

The core principle is to treat documentation as code. Tools like terraform-docs parse your module's .tf files, including variable and output descriptions, and generate a structured Markdown file. This process can be integrated directly into your development workflow, often using pre-commit hooks or CI/CD pipelines to ensure the README.md is always up-to-date with every code change.

Leading open-source communities like Cloud Posse and Gruntwork have standardized this approach. Their modules feature automatically generated READMEs that provide consistent, reliable information on variables, outputs, and usage examples. The Terraform Registry also renders each module's README and builds its inputs and outputs listings from the descriptions in the code, so thorough descriptions matter for any publicly shared module.

Actionable Implementation Tips

To effectively implement automated documentation in your module development workflow:

- Integrate `terraform-docs`: Install the tool and add it to a pre-commit hook. Configure `.pre-commit-config.yaml` to run `terraform-docs` on your module directory, which automatically updates the `README.md` before any code is committed.

- Write Detailed Descriptions: Be explicit in the `description` attribute for every variable and output. Explain its purpose, accepted values, and any default behavior. This is the source for your generated documentation.

- Include Complete Usage Examples: Create a `main.tf` file within an `examples/` directory that demonstrates a common, working implementation of your module. `terraform-docs` can embed these examples directly into your `README.md`.

- Document Non-Obvious Behavior: Use comments or the README header to explain any complex logic, resource dependencies, or potential "gotchas" that users should be aware of.

- Add a Requirements Section: Clearly list required provider versions, external tools, or specific environment configurations necessary for the module to function correctly.
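As a rough illustration of the "Write Detailed Descriptions" tip, the sketch below shows descriptions that state purpose, accepted values, and default behavior. The variable, resource, and output names are hypothetical.

```hcl
variable "allowed_cidr_blocks" {
  type        = list(string)
  description = "CIDR blocks allowed to reach the service on port 443. Defaults to an empty list, which creates no ingress rule."
  default     = []
}

resource "aws_security_group" "this" {
  name_prefix = "service-"

  # Only create the ingress rule when at least one CIDR block is supplied.
  dynamic "ingress" {
    for_each = length(var.allowed_cidr_blocks) > 0 ? [1] : []
    content {
      from_port   = 443
      to_port     = 443
      protocol    = "tcp"
      cidr_blocks = var.allowed_cidr_blocks
    }
  }
}

output "security_group_id" {
  description = "ID of the service security group. Pass it to other modules that need to reference this group in their own rules."
  value       = aws_security_group.this.id
}
```

Because documentation generators read these `description` strings directly, the wording here is effectively the text consumers will see in the README.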

6. Implement Comprehensive Automated Testing

Treating your Terraform modules as production-grade software requires a commitment to rigorous, automated testing. This practice involves using frameworks to validate that modules function correctly, maintain backward compatibility, and adhere to security and compliance policies. By integrating automated testing into your development lifecycle, you build a critical safety net that ensures module reliability and enables developers to make changes with confidence.

Automated testing moves beyond simple terraform validate and terraform fmt checks. It involves deploying real infrastructure in isolated environments to verify functionality, test edge cases, and confirm that updates do not introduce regressions. This proactive approach catches bugs early, reduces manual review efforts, and is a cornerstone of modern Infrastructure as Code (IaC) maturity.

How Automated Testing Works

Automated testing for Terraform modules typically involves a "plan, apply, inspect, destroy" cycle executed by a testing framework. A test suite will provision infrastructure using your module, run assertions to check if the deployed resources meet expectations, and then tear everything down to avoid unnecessary costs. This process is usually triggered automatically in a CI/CD pipeline upon every commit or pull request.

Leading organizations rely heavily on this practice. For instance, Gruntwork, the creators of the popular Go framework Terratest, uses it to test their modules against live cloud provider accounts. Similarly, Cloud Posse integrates Terratest with GitHub Actions to create robust CI/CD workflows, ensuring every change is automatically vetted. These frameworks allow you to write tests in familiar programming languages, making infrastructure validation as systematic as application testing. For a deeper dive into selecting the right solutions for your testing framework, an automated testing tools comparison can be highly beneficial.

Actionable Implementation Tips

To effectively integrate automated testing into your module development:

- Start with Your Examples: Leverage your module's `examples/` directory as the basis for your test cases. These examples should represent common use cases that can be deployed and validated.

- Use Dedicated Test Accounts: Never run tests in production environments. Isolate testing to dedicated cloud accounts or projects with strict budget and permission boundaries to prevent accidental impact.

- Implement Static Analysis: Integrate tools like `tfsec` and `Checkov` into your CI pipeline to automatically scan for security misconfigurations and policy violations before any infrastructure is deployed. These tools analyze the Terraform plan or code directly, providing rapid feedback.

- Test Failure Scenarios: Good tests verify not only successful deployments but also that the module fails gracefully. Explicitly test variable validation rules to ensure they reject invalid inputs as expected. For more insights, you can explore various automated testing strategies.
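Besides the Go-based frameworks mentioned above, Terraform 1.6 and later include a native `terraform test` command that runs `.tftest.hcl` files. The sketch below is one possible way to cover the "Test Failure Scenarios" tip; it assumes a module that declares a `backup_retention_period` variable with a 0 to 35 validation rule, and the file name and the `aws_db_instance.this` resource address are hypothetical.

```hcl
# tests/validation.tftest.hcl (hypothetical file name)

# A valid input should plan cleanly and reach the resource unchanged.
run "accepts_valid_retention" {
  command = plan

  variables {
    backup_retention_period = 7
  }

  assert {
    condition     = aws_db_instance.this.backup_retention_period == 7 # assumed resource address
    error_message = "The retention period was not passed through to the DB instance."
  }
}

# An out-of-range input should be rejected by the variable's validation rule.
run "rejects_out_of_range_retention" {
  command = plan

  variables {
    backup_retention_period = 90
  }

  expect_failures = [
    var.backup_retention_period,
  ]
}
```

Running `terraform test` from the module directory executes both runs. Planning against a real provider still needs valid provider configuration, so this kind of test belongs in the dedicated test account described above.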

7. Minimize Use of Conditional Logic and Feature Flags

A key principle in creating maintainable Terraform modules is to favor composition over configuration. This means resisting the urge to build monolithic modules controlled by numerous boolean feature flags. Overusing conditional logic leads to complex, hard-to-test modules where the impact of a single variable change is difficult to predict. This approach is a cornerstone of effective Terraform modules best practices, ensuring clarity and reliability.

By minimizing feature flags, you create modules that are focused and explicit. Instead of a single, complex module with a create_database boolean, you create separate, purpose-built modules like rds-instance and rds-cluster. This design choice drastically reduces the cognitive load required to understand and use the module, preventing the combinatorial explosion of configurations that plagues overly complex code.

How to Prioritize Composition Over Conditionals

The goal is to design smaller, single-purpose modules that can be combined to achieve a desired outcome. This pattern makes your infrastructure code more modular, reusable, and easier to debug, as each component has a clearly defined responsibility.

- Separate Modules for Distinct Resources: If a boolean variable would add or remove more than two or three significant resources, it's a strong indicator that you need separate modules. For example, instead of an `enable_public_access` flag, create distinct `public-subnet` and `private-subnet` modules.

- Reserve `count` and `for_each` for Multiplicity: Use Terraform's built-in looping constructs mainly to manage multiple instances of a resource, not as a general feature-flag mechanism. When toggling a single resource is unavoidable, set `count` to 0 (or pass an empty collection to `for_each`) to disable it:

  ```hcl
  resource "aws_instance" "example" {
    count = var.create_instance ? 1 : 0
    # ...
  }
  ```

- Create Wrapper Modules: For common configurations, create a "wrapper" or "composition" module that combines several smaller modules. This provides a simplified interface for common patterns without polluting the base modules with conditional logic.

For instance, Cloud Posse maintains separate eks-cluster and eks-fargate-profile modules. This separation ensures each module does one thing well, and users can compose them as needed. This is far cleaner than a single EKS module with an enable_fargate flag that conditionally creates an entirely different set of resources.
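A wrapper module along these lines might look like the following sketch. The directory layout, input names, and the `cluster_name` output of the child module are assumptions for illustration and do not reflect the interface of any published module.

```hcl
# modules/eks-with-fargate/variables.tf (hypothetical wrapper module)
variable "name" { type = string }
variable "subnet_ids" { type = list(string) }

# modules/eks-with-fargate/main.tf
module "cluster" {
  source     = "../eks-cluster" # hypothetical local module
  name       = var.name
  subnet_ids = var.subnet_ids
}

module "fargate_profile" {
  source       = "../eks-fargate-profile"    # hypothetical local module
  cluster_name = module.cluster.cluster_name # assumed output name
  subnet_ids   = var.subnet_ids
}

# modules/eks-with-fargate/outputs.tf
output "cluster_name" {
  value = module.cluster.cluster_name
}
```

Consumers who want Fargate call the wrapper, while everyone else calls the cluster module directly, so neither base module needs an `enable_fargate` flag.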

Actionable Implementation Tips

To effectively reduce conditional logic in your module development:

- Follow the Rule of Three: If a boolean flag alters the creation or fundamental behavior of three or more resources, split the logic into a separate module.

- Document Necessary Conditionals: When a conditional is unavoidable (e.g., using `count` to toggle a single resource), clearly document its purpose, impact, and why it was deemed necessary in the module's `README.md`.

- Leverage Variable Validation: Use custom validation rules in your `variables.tf` file to prevent users from selecting invalid combinations of features, adding a layer of safety. Note that a validation condition can reference other variables only in Terraform 1.9 and later; on older versions, a resource `precondition` can express the same check.

- Prefer Graduated Modules: Instead of feature flags, consider offering different variants of a module, such as `my-service-basic` and `my-service-advanced`, to cater to different use cases.

8. Pin Provider Versions with Version Constraints

While versioning your modules is critical, an equally important Terraform modules best practice is to explicitly lock the versions of Terraform and its providers. Failing to pin provider versions can introduce unexpected breaking changes, as a simple terraform init might pull a new major version of a provider with a different API. This can lead to deployment failures and inconsistent behavior across environments.

By defining version constraints, you ensure that your infrastructure code behaves predictably and reproducibly every time it runs. This practice is fundamental to maintaining production stability, as it prevents your configurations from breaking due to unvetted upstream updates from provider maintainers. It transforms your infrastructure deployments from a risky process into a deterministic one.

How Version Constraints Work

Terraform provides specific blocks within your configuration to manage version dependencies. These blocks allow you to set rules for which versions of the Terraform CLI and providers are compatible with your code:

- Terraform Core Version (`required_version`): This setting in the `terraform` block ensures that the code is only run by compatible versions of the Terraform executable.

- Provider Versions (`required_providers`): This block specifies the source and version for each provider used in your module. It's the primary mechanism for preventing provider-related drift.

For example, the AWS provider frequently introduces significant changes between major versions. A constraint like source = "hashicorp/aws", version = ">= 4.0, < 5.0" ensures your module works with any v4.x release but prevents an automatic, and likely breaking, upgrade to v5.0. This gives you control over when and how you adopt new provider features.
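Put together, a `versions.tf` using the AWS constraint above might look like this sketch. The `required_version` range is an assumption and should match the Terraform features your module actually uses.

```hcl
# versions.tf
terraform {
  # Assumed range: any 1.x CLI from 1.3 onward.
  required_version = ">= 1.3.0, < 2.0.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0, < 5.0" # any v4.x release, never v5.0
    }
  }
}
```

Reusable modules generally benefit from a fairly wide provider range like this one, leaving exact pinning to the root configuration that consumes them.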

Actionable Implementation Tips

To effectively manage version constraints and ensure stability:

- Commit `.terraform.lock.hcl`: This file records the exact provider versions selected during `terraform init`. Terraform creates it for the root configuration (not for reusable child modules), so commit it alongside each root configuration. That way every team member and CI/CD pipeline uses the same provider dependencies, guaranteeing reproducibility.

- Use the Pessimistic Version Operator (`~>`): For most cases, the `~>` operator provides the best balance between stability and receiving non-breaking updates. It allows only the rightmost version component to increase. A constraint like `version = "~> 4.60"` accepts `4.61` and later 4.x releases but will not upgrade to `5.0`, while `version = "~> 4.60.0"` accepts only patch releases (e.g., `4.60.1`) and will not upgrade to `4.61`.

- Automate Dependency Updates: Integrate tools like Dependabot or Renovate into your repository. These services automatically create pull requests to update your provider versions, allowing you to review changelogs and test the updates in a controlled manner before merging.

- Test Provider Upgrades Thoroughly: Before applying a minor or major provider version update in production, always test it in a separate, non-production environment. This allows you to identify and fix any required code changes proactively.

9. Design for Multiple Environments and Workspaces

A hallmark of effective infrastructure as code is reusability, and one of the most important Terraform modules best practices is designing them to be environment-agnostic. Modules should function seamlessly across development, staging, and production without containing hard-coded, environment-specific logic. This is achieved by externalizing all configurable parameters, allowing the same module to provision vastly different infrastructure configurations based on the inputs it receives.

This approach dramatically reduces code duplication and management overhead. Instead of maintaining separate, nearly identical modules for each environment (e.g., s3-bucket-dev, s3-bucket-prod), you create a single, flexible s3-bucket module. The calling root module then supplies the appropriate variables for the target environment, whether through .tfvars files, CI/CD variables, or Terraform Cloud/Enterprise workspaces.
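As a minimal sketch of this pattern, the root module below calls one `s3-bucket` module and takes its environment-specific values from a `.tfvars` file. The module path, its input names, and the `acme` prefix are hypothetical.

```hcl
# main.tf (root module)
variable "environment" { type = string }
variable "versioning_enabled" { type = bool }

module "logs_bucket" {
  source = "./modules/s3-bucket" # hypothetical local module

  name_prefix        = "acme" # hypothetical prefix
  environment        = var.environment
  versioning_enabled = var.versioning_enabled
}

# dev.tfvars
environment        = "dev"
versioning_enabled = false

# prod.tfvars
environment        = "prod"
versioning_enabled = true
```

Selecting an environment is then a matter of choosing the variable file, for example `terraform plan -var-file=prod.tfvars`, with each environment keeping its own state.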

How Environment-Agnostic Design Works

The core principle is to treat environment-specific settings as inputs. This means every value that could change between environments, such as instance sizes, replica counts, feature flags, or naming conventions, must be defined as a variable. The module's internal logic then uses these variables to construct the desired infrastructure.

For example, a common pattern is to use variable maps to define environment-specific configurations. A module for an EC2 instance might accept a map like instance_sizes = { dev = "t3.small", stg = "t3.large", prod = "m5.xlarge" } and select the appropriate value based on a separate environment variable. This keeps the conditional logic clean and centralizes configuration in the root module, where it belongs.

Actionable Implementation Tips

To create robust, multi-environment modules:

- Use an `environment` Variable: Accept a dedicated `environment` (or `stage`) variable to drive naming, tagging, and conditional logic within the module.

- Leverage Variable Maps: Define environment-specific values like instance types, counts, or feature toggles in maps. Use the `lookup` function with a fallback to select the correct value: `lookup(var.instance_type_map, var.environment, "t3.micro")`.

  ```hcl
  # variables.tf
  variable "environment" {
    type = string
  }

  variable "instance_type_map" {
    type = map(string)
    default = {
      dev  = "t3.micro"
      prod = "m5.large"
    }
  }

  # main.tf
  resource "aws_instance" "app" {
    # Falls back to t3.micro when the environment has no entry in the map.
    instance_type = lookup(var.instance_type_map, var.environment, "t3.micro")
    # ...
  }
  ```

- Avoid Hard-Coded Names: Never hard-code resource names. Instead, construct them dynamically using a `name_prefix` variable combined with the environment and other unique identifiers: `name = "${var.name_prefix}-${var.environment}-app"`.

- Provide Sensible Defaults: Set default variable values that are appropriate for a non-production or development environment. This makes the module easier to test and use for initial experimentation.

- Document Environment-Specific Inputs: Clearly document which variables are expected to change per environment and provide recommended values for production deployments in your `README.md`. You can learn more about how this fits into a broader strategy by reviewing these infrastructure as code best practices.

10. Expose Meaningful and Stable Outputs

A key element of effective Terraform modules best practices is designing a stable and useful public interface, and outputs are the primary mechanism for this. Well-defined outputs expose crucial resource attributes and computed values, allowing consumers to easily chain modules together or integrate infrastructure with other systems. Think of outputs as the public API of your module; they should be comprehensive, well-documented, and stable across versions.

Treating outputs with this level of care transforms your module from a simple resource collection into a reusable, composable building block. When a module for an AWS RDS instance outputs the database endpoint, security group ID, and ARN, it empowers other teams to consume that infrastructure without needing to understand its internal implementation details. This abstraction is fundamental to building scalable and maintainable infrastructure as code.

How to Design Effective Outputs

A well-designed output contract focuses on providing value for composition. The goal is to expose the necessary information for downstream dependencies while hiding the complexity of the resources created within the module.

- Essential Identifiers: Always output primary identifiers like IDs and ARNs (Amazon Resource Names). For example, a VPC module must output `vpc_id`, `private_subnet_ids`, and `public_subnet_ids`.

- Integration Points: Expose values needed for connecting systems. An EKS module should output the `cluster_endpoint` and `cluster_certificate_authority_data` for configuring `kubectl`.

- Sensitive Data: Properly handle sensitive values like database passwords or API keys by marking them as `sensitive = true`. This prevents them from being displayed in CLI output.

- Complex Data: Use object types to group related attributes. Instead of separate `db_instance_endpoint`, `db_instance_port`, and `db_instance_username` outputs, you could have a single `database_connection_details` object.

A well-architected module's outputs tell a clear story about its purpose and how it connects to the broader infrastructure ecosystem. They make your module predictable and easy to integrate, which is the hallmark of a high-quality, reusable component.
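The output patterns above could be sketched as follows for an RDS module. The resource address `aws_db_instance.this` and the output names are assumptions for illustration.

```hcl
# outputs.tf (assumes the module defines resource "aws_db_instance" "this")

# Essential identifier
output "db_instance_arn" {
  description = "ARN of the RDS instance, for use in IAM policies and monitoring."
  value       = aws_db_instance.this.arn
}

# Related attributes grouped into a single object
output "database_connection_details" {
  description = "Endpoint, port, and username that applications need to connect."
  value = {
    endpoint = aws_db_instance.this.endpoint
    port     = aws_db_instance.this.port
    username = aws_db_instance.this.username
  }
}

# Sensitive value: hidden in CLI output, but still stored in state
output "db_master_password" {
  description = "Master password of the RDS instance."
  value       = aws_db_instance.this.password
  sensitive   = true
}
```

A consumer can then read `module.database.database_connection_details.endpoint` without knowing how the instance is built internally, where `database` is whatever name the caller gives the module block.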

Actionable Implementation Tips

To ensure your module outputs are robust and consumer-friendly:

- Add Descriptions: Every output block should have a `description` argument explaining what the value represents and its intended use. This serves as inline documentation for anyone using the module.
- Maintain Stability: Avoid removing or renaming outputs in minor or patch releases, as this is a breaking change. Treat your output structure as a contract with your consumers.
- Use Consistent Naming: Adopt a clear naming convention, such as `resource_type_attribute` (e.g., `iam_role_arn`), to make outputs predictable and self-explanatory.
- Output Entire Objects: For maximum flexibility, you can output an entire resource object (`value = aws_instance.this`). This gives consumers access to all resource attributes, but be cautious, as any change to the resource schema could become a breaking change for your module's API.
- Document Output Schema: Clearly list all available outputs and their data types (e.g., list, map, object) in your module's `README.md`. This is essential for usability.
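The naming, description, and whole-object tips can be sketched as follows. The resource addresses `aws_iam_role.this` and `aws_instance.this` are assumptions for illustration and would be defined in the module's own resource files.

```hcl
# Follows the resource_type_attribute convention and documents intended use.
# aws_iam_role.this is assumed to be defined elsewhere in the module.
output "iam_role_arn" {
  description = "ARN of the IAM role attached to the instance; reference it in policies that grant access."
  value       = aws_iam_role.this.arn
}

# Exposes every attribute of the resource in one output.
# The shape of this value follows the provider's resource schema,
# so a provider upgrade can change it without any edit to the module.
output "instance" {
  description = "All attributes of the EC2 instance."
  value       = aws_instance.this
}
```

A consumer would read a single field with `module.<name>.instance.private_ip`. If the exported resource contains attributes the provider marks as sensitive, Terraform will require the whole output to be declared with `sensitive = true`.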

Top 10 Terraform Module Best Practices Comparison

| Item | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Use Semantic Versioning for Module Releases | Medium: release process and discipline | CI/CD release automation, git tagging, maintainers | Clear change semantics; safer dependency upgrades | Published modules, multi-team libraries, registries | Predictable upgrades; reduced breakage; standardizes expectations |
| Implement a Standard Module Structure | Low–Medium: adopt/refactor layout conventions | Documentation tools, repo restructuring, linters | Consistent modules, easier onboarding | Team repositories, public registries, large codebases | Predictable layout; tooling compatibility; simpler reviews |
| Design for Composition Over Inheritance | Medium–High: modular design and interfaces | More modules to manage, interface docs, dependency tracking | Reusable building blocks; smaller blast radius | Large projects, reuse-focused orgs, multi-team architectures | Flexibility; testability; separation of concerns |
| Use Input Variable Validation and Type Constraints | Low–Medium: add types and validation rules | Authoring validation, tests, IDE support | Fewer runtime errors; clearer inputs at plan time | Modules with complex inputs or security constraints | Early error detection; self-documenting; better UX |
| Maintain Comprehensive and Auto-Generated Documentation | Medium: tooling and CI integration | `terraform-docs`, pre-commit, CI jobs, inline comments | Up-to-date READMEs; improved adoption and discoverability | Public modules, onboarding-heavy teams, catalogs | Synchronized docs; reduced manual work; consistent format |
| Implement Comprehensive Automated Testing | High: test frameworks and infra setup | Test accounts, CI pipelines, tooling (Terratest, kitchen) | Higher reliability; fewer regressions; validated compliance | Production-critical modules, enterprise, compliance needs | Confidence in changes; regression prevention; compliance checks |
| Minimize Use of Conditional Logic and Feature Flags | Low–Medium: design choices and possible duplication | More focused modules, documentation, maintenance | Predictable behavior; simpler tests; lower config complexity | Modules requiring clarity and testability | Simpler codepaths; easier debugging; fewer config errors |
| Pin Provider Versions with Version Constraints | Low: add `required_version` and provider pins | Lock file management, update process, coordination | Reproducible deployments; fewer unexpected breaks | Production infra, enterprise environments, audited systems | Predictability; reproducibility; controlled upgrades |
| Design for Multiple Environments and Workspaces | Medium: variable patterns and workspace awareness | Variable maps, testing across envs, documentation | Single codebase across envs; easier promotion | Multi-environment deployments, Terraform Cloud/Enterprise | Reuse across environments; consistent patterns; reduced duplication |
| Expose Meaningful and Stable Outputs | Low–Medium: define stable API and sensitive flags | Documentation upkeep, design for stability, testing | Clear module API; easy composition and integration | Composable modules, integrations, downstream consumers | Clean interfaces; enables composition; predictable integration |

Elevating Your Infrastructure as Code Maturity

Mastering the art of building robust, reusable, and maintainable Terraform modules is not just an academic exercise; it's a strategic imperative for any organization serious about scaling its infrastructure effectively. Throughout this guide, we've explored ten foundational best practices, moving from high-level concepts to granular, actionable implementation details. These principles are not isolated suggestions but interconnected components of a mature Infrastructure as Code (IaC) strategy. Adhering to these Terraform modules best practices transforms your codebase from a collection of configurations into a reliable, predictable, and scalable system.

The journey begins with establishing a strong foundation. Disciplined approaches like Semantic Versioning (Best Practice #1) and a Standard Module Structure (Best Practice #2) create the predictability and consistency necessary for teams to collaborate effectively. When developers can instantly understand a module's layout and trust its version contract, the friction of adoption and maintenance decreases dramatically. This structural integrity is the bedrock upon which all other practices are built.

From Good Code to Great Infrastructure

Moving beyond structure, the real power of Terraform emerges when you design for composability and resilience. The principle of Composition Over Inheritance (Best Practice #3) encourages building small, focused modules that can be combined like building blocks to construct complex systems. This approach, paired with rigorous Input Variable Validation (Best Practice #4) and pinned provider versions (Best Practice #8), ensures that each block is both reliable and secure. Your modules become less about monolithic deployments and more about creating a flexible, interoperable ecosystem.

This ecosystem thrives on trust, which is earned through two critical activities: documentation and testing.

- Comprehensive Automated Testing (Best Practice #6): Implementing a robust testing pipeline with tools like `terraform validate`, `tflint`, and Terratest is non-negotiable for production-grade modules. It provides a safety net that catches errors before they reach production, giving engineers the confidence to refactor and innovate.
- Auto-Generated Documentation (Best Practice #5): Tools like `terraform-docs` turn documentation from a chore into an automated, reliable byproduct of development. Clear, up-to-date documentation democratizes module usage, reduces the support burden on creators, and accelerates onboarding for new team members.

The Strategic Value of IaC Excellence

Ultimately, embracing these Terraform modules best practices is about elevating your operational maturity. When you minimize conditional logic (Best Practice #7), design for multiple environments (Best Practice #9), and expose stable, meaningful outputs (Best Practice #10), you are doing more than just writing clean code. You are building a system that is easier to debug, faster to deploy, and safer to change.

The true value is measured in business outcomes: accelerated delivery cycles, reduced downtime, and enhanced security posture. Your infrastructure code becomes a strategic asset that enables innovation rather than a technical liability that hinders it. The initial investment in establishing these standards pays compounding dividends in stability, team velocity, and developer satisfaction. By treating your modules as first-class software products with clear contracts, rigorous testing, and excellent documentation, you unlock the full potential of Infrastructure as Code. This disciplined approach is the definitive line between managing infrastructure and truly engineering it.
