10 Actionable Software Security Best Practices for 2026

Discover 10 technical software security best practices for 2026. This guide covers SSDLC, IaC, secrets, and more with actionable implementation steps.

In today's fast-paced development landscape, software security can no longer be a final-stage checkbox; it's the bedrock of reliable and trustworthy applications. Reactive security measures are costly, inefficient, and leave organizations exposed to ever-evolving threats. The most effective strategy is to build security into every layer of the software delivery lifecycle. This 'shift-left' approach transforms security from a barrier into a competitive advantage.

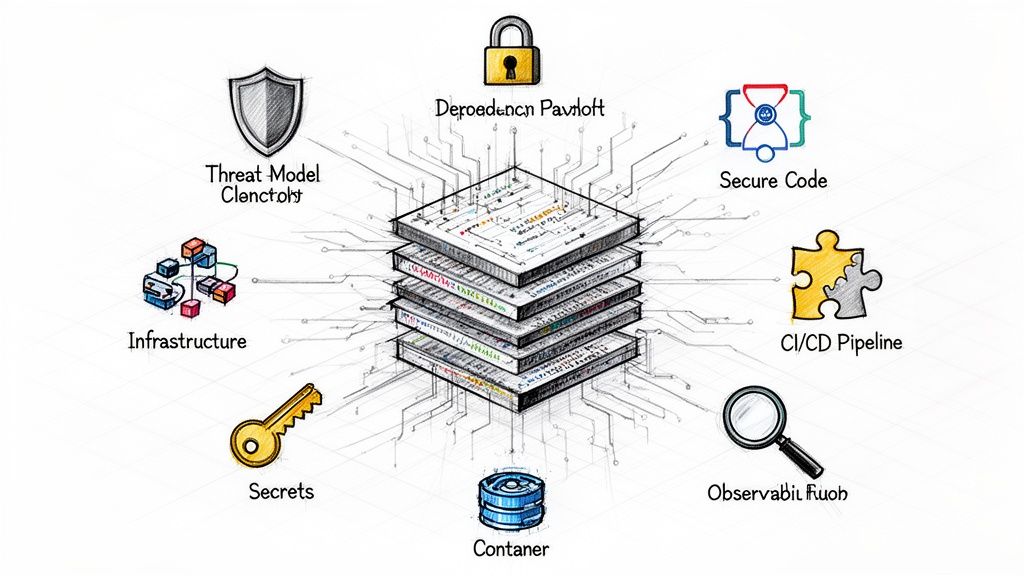

This guide moves beyond generic advice to provide a technical, actionable roundup of 10 software security best practices you can implement today. We will explore specific tools, frameworks, and architectural patterns designed to fortify your code, infrastructure, and processes. Our focus is on practical implementation, covering everything from secure CI/CD pipelines and Infrastructure as Code (IaC) hardening to Zero Trust networking and automated vulnerability scanning.

Each practice is presented as a building block toward a comprehensive DevSecOps culture, empowering your teams to innovate quickly without compromising on security. We will detail how to integrate these measures directly into your existing workflows, ensuring security becomes a seamless and automated part of development, not an afterthought. Let's dive into the technical details that separate secure software from the vulnerable.

1. Implement a Secure Software Development Lifecycle (SSDLC)

A Secure Software Development Lifecycle (SSDLC) is a foundational practice that embeds security activities directly into every phase of your existing development process. Instead of treating security as a final gate before deployment, an SSDLC framework makes it a continuous, shared responsibility from initial design to post-release maintenance. This "shift-left" approach is one of the most effective software security best practices for proactively identifying and mitigating vulnerabilities early, when they are cheapest and easiest to fix.

The core principle is to integrate specific security checks and balances at each stage: requirements, design, development, testing, and deployment. This model transforms security from a siloed function into an integral part of software creation, dramatically reducing the risk of releasing insecure code.

How an SSDLC Works in Practice

Implementing an SSDLC involves augmenting your standard development workflow with targeted security actions. For a comprehensive understanding of how to implement security practices throughout your development process, refer to this Guide to the Secure Software Development Life Cycle (SDLC). A typical implementation includes:

- Requirements Phase: Define clear security requirements alongside functional ones. Specify data encryption standards (e.g., require TLS 1.3 for all data in transit, AES-256-GCM for data at rest), authentication protocols (e.g., mandate OIDC with PKCE flow for all user-facing applications), and compliance constraints (e.g., GDPR data residency).

- Design Phase: Conduct threat modeling sessions using a framework like STRIDE (Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, Elevation of Privilege), and optionally rank the resulting threats with a risk-rating scheme such as DREAD. Document data flows and trust boundaries to identify potential architectural weaknesses, such as an unauthenticated internal API.

- Development Phase: Developers follow secure coding guidelines (e.g., OWASP Top 10, CERT C) and use Static Application Security Testing (SAST) tools (e.g., SonarQube, Snyk Code) integrated into their IDEs via plugins and pre-commit hooks to catch vulnerabilities in real-time.

- Testing Phase: Augment standard QA with Dynamic Application Security Testing (DAST) tools like OWASP ZAP, Interactive Application Security Testing (IAST), and manual penetration testing against a staging environment to find runtime vulnerabilities not visible in source code.

- Deployment & Maintenance: Implement security gates in your CI/CD pipeline (e.g., Jenkins, GitLab CI) that automatically fail a build if SAST or SCA scans detect critical vulnerabilities. Continuously monitor production environments with runtime application self-protection (RASP) tools and have a defined incident response plan.
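Part of the design-phase threat modeling step can be mechanized. The Python sketch below enumerates STRIDE threats per element of a data-flow diagram, following the commonly published STRIDE-per-element chart, and marks data flows that cross a trust boundary for closer review. The `Element` class, the element names, and the mapping table are illustrative assumptions. They are not the output of any particular threat-modeling tool, and they do not replace the discussion a modeling session produces.

```python
from dataclasses import dataclass

# STRIDE-per-element chart (as commonly published); adapt it to your own model.
STRIDE_BY_KIND = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_store": "TRID",  # Repudiation mainly matters when the store holds audit logs
    "data_flow": "TID",
}

CATEGORY_NAMES = {
    "S": "Spoofing",
    "T": "Tampering",
    "R": "Repudiation",
    "I": "Information Disclosure",
    "D": "Denial of Service",
    "E": "Elevation of Privilege",
}


@dataclass
class Element:
    name: str
    kind: str  # a key of STRIDE_BY_KIND
    crosses_trust_boundary: bool = False  # meaningful for data flows


def enumerate_threats(elements):
    """Return (element_name, category, at_trust_boundary) for every applicable threat."""
    threats = []
    for el in elements:
        # Flows that cross a trust boundary deserve the closest review.
        at_boundary = el.kind == "data_flow" and el.crosses_trust_boundary
        for letter in STRIDE_BY_KIND[el.kind]:
            threats.append((el.name, CATEGORY_NAMES[letter], at_boundary))
    return threats


if __name__ == "__main__":
    # Hypothetical model: a browser talking to an orders API backed by a database.
    model = [
        Element("browser", "external_entity"),
        Element("orders-api", "process"),
        Element("orders-db", "data_store"),
        Element("browser -> orders-api", "data_flow", crosses_trust_boundary=True),
    ]
    for name, category, at_boundary in enumerate_threats(model):
        marker = "  <- trust boundary" if at_boundary else ""
        print(f"{name}: {category}{marker}")
```

The enumerated list works as a checklist for the session. Each entry should end up with a mitigation, an accepted risk, or a note that it does not apply.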

OpsMoon Expertise: Our DevOps specialists can help you design and implement a tailored SSDLC framework. We can integrate automated security tools like SAST and DAST into your CI/CD pipelines, establish security gates, and conduct a maturity assessment to identify and close gaps in your current processes.

2. Enforce Secret Management and Credential Rotation

Enforcing robust secret management and credential rotation is a critical software security best practice for protecting your most sensitive data. This involves a systematic approach to securely storing, accessing, and rotating credentials like API keys, database passwords, and TLS certificates. Hardcoding secrets in source code or configuration files is a common but dangerous mistake that creates a massive security vulnerability, making centralized management essential.

The core principle is to treat secrets as dynamic, short-lived assets that are centrally managed and programmatically accessed. By removing credentials from developer workflows and application codebases, you dramatically reduce the risk of accidental leaks and provide a single, auditable point of control for all sensitive information. This practice is fundamental to achieving a zero-trust security posture.
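To make "dynamic, short-lived, programmatically accessed" concrete, here is a minimal sketch of a client-side cache for a leased credential. It fetches a fresh credential once most of the lease has elapsed. `fetch_credential` is a hypothetical callable that stands in for a request to your secrets manager (for example, asking a Vault database secrets engine for credentials). It is assumed to return the secret and its TTL in seconds.

```python
import time
from typing import Callable, Optional, Tuple

# Hypothetical fetcher: asks the secrets manager for a credential and
# returns (secret_value, ttl_seconds).
Fetcher = Callable[[], Tuple[str, float]]


class LeasedCredential:
    """Caches a short-lived credential and fetches a fresh one before its lease expires."""

    def __init__(self, fetch_credential: Fetcher, renew_fraction: float = 0.8,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_credential
        self._renew_fraction = renew_fraction  # refresh after 80% of the TTL has elapsed
        self._clock = clock                    # injectable for testing
        self._value: Optional[str] = None
        self._renew_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._value is None or now >= self._renew_at:
            value, ttl = self._fetch()
            self._value = value
            self._renew_at = now + ttl * self._renew_fraction
        return self._value
```

The application calls `get()` whenever it opens a connection and never stores the secret anywhere else. Some secrets managers, Vault included, can also renew an existing lease. This sketch simply requests a new credential instead, and it makes no attempt at thread safety.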

How Secret Management Works in Practice

Implementing a strong secret management strategy involves adopting dedicated tools and automated workflows. These systems provide a secure vault and an API for applications to request credentials just-in-time, ensuring they are never exposed in plaintext. For a deeper dive into this topic, explore these secrets management best practices. A typical implementation includes:

- Centralized Vaulting: Use a dedicated secrets manager like HashiCorp Vault or cloud-native services (AWS Secrets Manager, Azure Key Vault) as the single source of truth for all credentials. Applications authenticate to the vault using a trusted identity (e.g., a Kubernetes Service Account, an AWS IAM Role).

- Dynamic Secret Generation: Configure the vault to generate secrets on-demand with a short Time-To-Live (TTL). For instance, an application can request temporary database credentials from Vault's database secrets engine that expire in one hour, eliminating the need for long-lived static passwords.

- Automated Rotation: Enable automatic rotation policies for long-lived secrets, such as rotating a root database password every 30-90 days without manual intervention. The secrets manager handles the entire lifecycle, updating the credential in both the target system and the vault. The same discipline applies to API keys; for more on protecting API access, see this ultimate guide to API key authentication.

- CI/CD Integration: Run secret scanning tools like GitGuardian or TruffleHog in pre-commit hooks on developer machines and in pre-receive hooks on the Git server. These hooks block commits that contain hardcoded credentials before they ever enter the codebase. A scan in the CI pipeline acts as a backstop.

- Auditing and Access Control: Implement strict, role-based access control (RBAC) policies within the secrets manager and maintain comprehensive audit logs that track every secret access event, including which identity accessed which secret and when.
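To show the kind of check that tools such as GitGuardian or TruffleHog automate, here is a deliberately tiny pre-commit-style scanner. It is a sketch, not a substitute for those tools. It checks only two things. The first is the AWS access key ID format: `AKIA` for long-term keys or `ASIA` for temporary ones, followed by 16 uppercase letters or digits. The second is a crude Shannon-entropy heuristic for quoted values assigned to names like `password` or `token`. The 12-character minimum and the 3.5 bits-per-character threshold are arbitrary assumptions.

```python
import math
import re
import sys
from collections import Counter

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) + 16 uppercase letters/digits.
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")
# Quoted values of 12+ characters assigned to secret-sounding names, e.g. api_token = "..."
SUSPICIOUS_ASSIGNMENT = re.compile(
    r"(?i)(password|passwd|secret|token|api_key)\w*\s*[:=]\s*['\"]([^'\"]{12,})['\"]"
)
ENTROPY_THRESHOLD = 3.5  # bits per character; an arbitrary starting point


def shannon_entropy(s: str) -> float:
    """Average bits of information per character of s."""
    counts = Counter(s)
    return -sum((n / len(s)) * math.log2(n / len(s)) for n in counts.values())


def scan_text(text: str):
    """Yield (line_number, reason) for lines that look like they contain secrets."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        if AWS_KEY_ID.search(line):
            yield lineno, "possible AWS access key ID"
        match = SUSPICIOUS_ASSIGNMENT.search(line)
        if match and shannon_entropy(match.group(2)) >= ENTROPY_THRESHOLD:
            yield lineno, f"high-entropy value assigned to '{match.group(1)}'"


def main(paths) -> int:
    """Scan files; return a non-zero exit code if anything looks like a secret."""
    findings = 0
    for path in paths:
        with open(path, encoding="utf-8", errors="ignore") as fh:
            for lineno, reason in scan_text(fh.read()):
                print(f"{path}:{lineno}: {reason}")
                findings += 1
    return 1 if findings else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example hook: python scan_secrets.py $(git diff --cached --name-only --diff-filter=ACM)
    sys.exit(main(sys.argv[1:]))
```

A non-zero exit status from a pre-commit hook aborts the commit. Low-entropy placeholders such as `password = "changeme-changeme"` pass the entropy check by design, which reduces noise at the cost of missing weak real passwords.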

OpsMoon Expertise: Our security and DevOps engineers are experts in deploying and managing enterprise-grade secret management solutions. We can help you implement HashiCorp Vault with Kubernetes integration, configure cloud-native services like AWS Secrets Manager, establish automated rotation policies, and integrate secret scanning directly into your CI/CD pipelines to prevent credential leaks.

3. Implement Container and Image Security Scanning

Containerization has revolutionized software deployment, but it also introduces new attack surfaces. Implementing container and image security scanning is a critical software security best practice that prevents vulnerable or misconfigured containers from ever reaching production. This practice involves systematically analyzing container images for known vulnerabilities (CVEs) in OS packages and application dependencies, malware, embedded secrets, and policy violations.

By integrating scanning directly into the build and deployment pipeline, teams can shift security left and automate the detection of issues within container layers and application dependencies. This proactive approach hardens your runtime environment by ensuring that only trusted, vetted images are used, significantly reducing the risk of exploitation in production.

How Container and Image Scanning Works in Practice

The process involves using specialized tools to dissect container images, inspect their contents, and compare them against vulnerability databases and predefined security policies. For a deep dive into container security tools and their integration, you can explore the CNCF's Cloud Native Security Whitepaper, which covers various aspects of securing cloud-native applications. A typical implementation workflow includes:

- Build-Time Scanning: Integrate an image scanner like Trivy, Grype, or Clair directly into your CI/CD pipeline. For example, in a GitLab CI pipeline, a dedicated job can run `trivy image my-app:$CI_COMMIT_SHA --exit-code 1 --severity CRITICAL,HIGH` to fail the build if severe vulnerabilities are found.

- Registry Scanning: Configure your container registry (e.g., AWS ECR, Google Artifact Registry, Azure Container Registry) to automatically scan images upon push. This serves as a second gate and provides continuous scanning for newly discovered vulnerabilities in existing images.

- Policy Enforcement: Define and enforce security policies as code. For example, use a Kubernetes admission controller like Kyverno or OPA Gatekeeper to block pods from running if their image contains critical vulnerabilities or originates from an untrusted registry.

- Runtime Monitoring: Use tools like Falco or Sysdig Secure to continuously monitor running containers for anomalous behavior (e.g., unexpected network connections, shell execution in a container) based on predefined rules, providing real-time threat detection.

- Image Signing: Implement image signing with technologies like Cosign (part of Sigstore) to cryptographically verify the integrity and origin of your container images. This ensures the image deployed to production is the exact same one that was built and scanned in CI.
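In a cluster, policy enforcement belongs in an admission controller such as Kyverno or OPA Gatekeeper. The Python sketch below only illustrates the kind of rule such a policy encodes. An image must come from an allowlisted registry and must be pinned by an immutable `sha256` digest, so the deployed artifact is exactly the one that was scanned and signed in CI. The registry names are placeholders. Registry detection follows the usual Docker reference convention: a first path component containing `.` or `:`, or equal to `localhost`, is a registry host; otherwise Docker Hub is implied.

```python
import re

# Placeholder allowlist; replace with your own registries.
TRUSTED_REGISTRIES = {"123456789012.dkr.ecr.us-east-1.amazonaws.com", "ghcr.io"}
DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def registry_of(image: str) -> str:
    """Return the registry host implied by an image reference."""
    first, sep, _rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"  # no registry host given, so Docker Hub is implied


def check_image(image: str) -> list:
    """Return policy violations for an image reference (empty list means allowed)."""
    problems = []
    if registry_of(image) not in TRUSTED_REGISTRIES:
        problems.append(f"untrusted registry: {registry_of(image)}")
    if not DIGEST.search(image):
        problems.append("image is not pinned by sha256 digest")
    return problems
```

Digest pinning guarantees that the image cannot change underneath you, but it says nothing about who built it. Pair it with signature verification, for example with Cosign, before admitting the pod.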

OpsMoon Expertise: Our team specializes in embedding robust container security into your Kubernetes and CI/CD workflows. We can integrate and configure advanced scanners like Trivy, Snyk, or Prisma Cloud into your pipelines, establish automated security gates, and implement comprehensive image signing and verification strategies to ensure your containerized applications are secure from build to runtime.

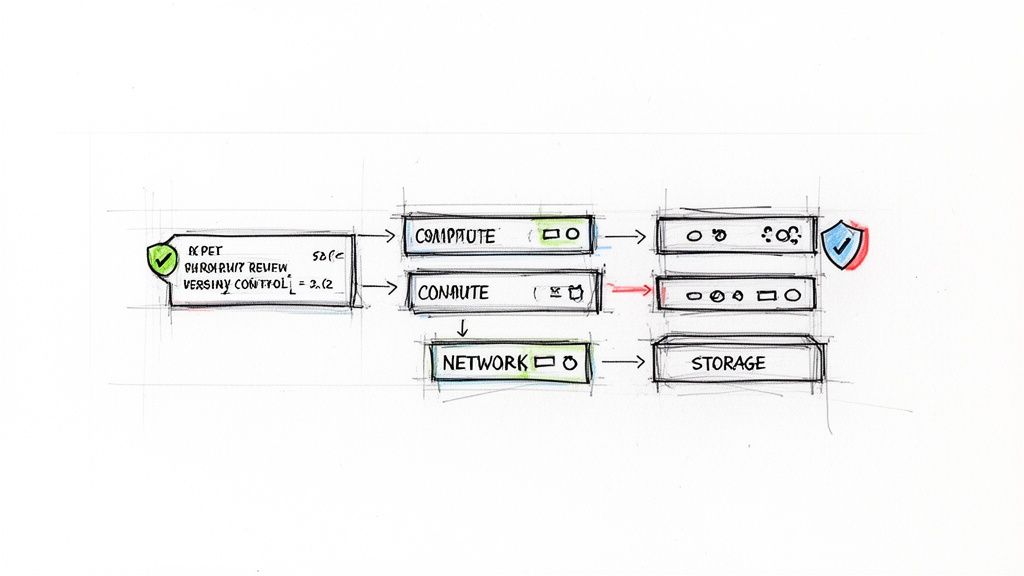

4. Deploy Infrastructure as Code (IaC) with Security Reviews

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, such as Terraform configurations, CloudFormation templates, or Ansible playbooks, rather than manual configuration. This approach brings version control, automation, and repeatability to infrastructure management. When combined with rigorous security reviews, it becomes a powerful software security best practice for preventing misconfigurations that often lead to data breaches.

Treating your infrastructure like application code allows you to embed security directly into your provisioning process. By codifying security policies and running automated static analysis checks against your IaC templates, you can ensure that every deployed resource—from VPCs to IAM roles—adheres to your organization's security and compliance standards before it ever goes live.

How IaC Security Works in Practice

Implementing secure IaC involves integrating security validation into your development and CI/CD pipelines. This ensures that infrastructure definitions are scanned for vulnerabilities and misconfigurations automatically. For an in-depth look at this process, explore our guide on how to check IaC for security issues. Key actions include:

- Policy as Code (PaC): Use tools like Open Policy Agent (OPA) or HashiCorp Sentinel to define and enforce security guardrails. For example, write an OPA policy in Rego to deny any AWS Security Group resource that defines an ingress rule with `cidr_blocks = ["0.0.0.0/0"]` on port 22 (SSH).

- Static IaC Scanning: Integrate scanners like Checkov, tfsec, or Terrascan directly into your CI pipeline. These tools analyze your Terraform or CloudFormation files for thousands of known misconfigurations, such as unencrypted S3 buckets or overly permissive IAM roles, and can fail the build if issues are found.

- Peer Review Process: Enforce mandatory pull request reviews for all infrastructure changes via branch protection rules on your Git hosting platform (e.g., GitHub or GitLab). This human-in-the-loop step ensures that a second pair of eyes validates the logic and security implications before `terraform apply` is executed.

- Drift Detection: Continuously monitor your production environment for drift (manual changes made outside of your IaC workflow). Tools like `driftctl` or built-in features in Terraform Cloud can detect these changes and alert your team, allowing you to remediate them and maintain the integrity of your code-defined state.
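The SSH guardrail described above for OPA can be prototyped in Python against the JSON form of a Terraform plan (`terraform plan -out=plan.out`, then `terraform show -json plan.out`). This is a sketch under stated assumptions. It reads the `resource_changes[].change.after` structure of the plan JSON and inspects only inline `ingress` blocks on `aws_security_group` resources. It ignores standalone rule resources and IPv6 ranges. In practice you would more likely write the same rule in Rego or rely on the equivalent checks that Checkov and tfsec already ship.

```python
import json
import sys

SSH_PORT = 22


def _rule_allows_port(rule: dict, port: int) -> bool:
    protocol = str(rule.get("protocol", "")).lower()
    if protocol == "-1":  # "-1" means all protocols and therefore all ports
        return True
    if protocol not in ("tcp", "6"):
        return False
    return rule.get("from_port", 0) <= port <= rule.get("to_port", 0)


def find_open_ssh(plan: dict) -> list:
    """Return addresses of aws_security_group resources that open SSH to 0.0.0.0/0."""
    offenders = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_security_group":
            continue
        after = (rc.get("change") or {}).get("after") or {}  # "after" is null on delete
        for rule in after.get("ingress") or []:
            if "0.0.0.0/0" in (rule.get("cidr_blocks") or []) and _rule_allows_port(rule, SSH_PORT):
                offenders.append(rc["address"])
                break
    return offenders


def main(plan_path: str) -> int:
    with open(plan_path, encoding="utf-8") as fh:
        offenders = find_open_ssh(json.load(fh))
    for address in offenders:
        print(f"DENY {address}: SSH (22/tcp) open to 0.0.0.0/0")
    return 1 if offenders else 0  # non-zero exit fails the CI job


if __name__ == "__main__" and len(sys.argv) == 2:
    # terraform show -json plan.out > plan.json && python check_sg.py plan.json
    sys.exit(main(sys.argv[1]))
```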

OpsMoon Expertise: Our cloud and DevOps engineers specialize in building secure, compliant, and scalable infrastructure using IaC. We can help you integrate tools like Checkov and OPA into your CI/CD pipelines, establish robust peer review workflows, and implement automated drift detection to ensure your infrastructure remains secure and consistent.

5. Establish Comprehensive Logging, Monitoring, and Observability

Comprehensive logging, monitoring, and observability form the bedrock of a proactive security posture, enabling you to see and understand what is happening across your applications and infrastructure in real-time. Instead of reacting to incidents after significant damage is done, this practice allows for the rapid detection of suspicious activities, forensic investigation of breaches, and continuous verification of security controls. It goes beyond simple log collection by correlating logs, metrics, and traces to provide deep context into system behavior.

This approach transforms your operational data into a powerful security tool. By establishing a centralized system for collecting, analyzing, and alerting on security-relevant events, you can identify threats like unauthorized access attempts or data exfiltration as they occur. This visibility is not just a best practice; it's often a mandatory requirement for meeting compliance standards like SOC 2, ISO 27001, and GDPR.

How Comprehensive Observability Works in Practice

Implementing a robust observability strategy involves instrumenting your entire stack to emit detailed telemetry data and funneling it into a centralized platform for analysis and alerting. For a deeper dive into modern observability platforms, consider reviewing how a service like Datadog provides security monitoring across complex environments. A typical implementation includes:

- Log Aggregation: Centralize logs from all sources, including applications, servers, load balancers, firewalls, and cloud services (e.g., AWS CloudTrail, VPC Flow Logs). Use agents like Fluentd or Vector to ship structured logs (e.g., JSON format) to a centralized platform like the ELK Stack (Elasticsearch, Logstash, Kibana) or managed solutions like Splunk or Datadog.

- Real-Time Monitoring & Alerting: Define and configure alerts for specific security events and behavioral anomalies. Examples include multiple failed login attempts from a single IP within a five-minute window, unexpected privilege escalations in audit logs, or API calls to sensitive endpoints from unusual geographic locations or ASNs.

- Distributed Tracing: Implement distributed tracing with OpenTelemetry to track the full lifecycle of a request as it moves through various microservices. This is critical for pinpointing the exact location of a security flaw or understanding the attack path during an incident by visualizing the service call graph.

- Security Metrics & Dashboards: Create dashboards to visualize key security metrics, such as authentication success/failure rates, firewall block rates, and the number of critical vulnerabilities detected by scanners over time. This provides an at-a-glance view of your security health and trends.
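The first alert example, multiple failed logins from one IP within five minutes, reduces to a sliding-window count. In production you would express it as a detection rule or query in your SIEM or log platform. The Python sketch below shows only the underlying logic. It assumes events arrive in timestamp order as `(timestamp, source_ip, outcome)` tuples, and the threshold of five failures is an illustrative choice.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)
THRESHOLD = 5  # failed attempts per IP per window (illustrative)


def detect_bruteforce(events):
    """Yield (timestamp, ip) each time an IP reaches THRESHOLD failures within WINDOW.

    `events` is an iterable of (datetime, ip, outcome) sorted by time, where
    outcome is "success" or "failure".
    """
    recent = defaultdict(deque)  # ip -> timestamps of that IP's recent failures
    for ts, ip, outcome in events:
        if outcome != "failure":
            continue
        window = recent[ip]
        window.append(ts)
        # Drop failures that have fallen out of the five-minute window.
        while window and ts - window[0] > WINDOW:
            window.popleft()
        if len(window) == THRESHOLD:  # alert once when the threshold is first reached
            yield ts, ip
```

Alerting only when the count equals the threshold avoids a flood of duplicate alerts during a sustained attack. The detector alerts again only after the window has drained below the threshold and filled back up.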

OpsMoon Expertise: Our observability specialists can design and deploy a scalable logging and monitoring stack tailored to your specific security and compliance needs. We can configure tools like the ELK Stack or Datadog, establish critical security alerts, and create custom dashboards to give you actionable insights into your environment's security posture.

6. Implement Network Segmentation and Zero Trust Architecture

A Zero Trust Architecture (ZTA) is a modern security model built on the principle of "never trust, always verify." It assumes that threats can exist both outside and inside the network, so it eliminates the concept of a trusted internal network and requires strict verification for every user, device, and application attempting to access resources. This approach, often combined with network segmentation, is a critical software security best practice for minimizing the potential impact, or "blast radius," of a security breach.

The core principle is to enforce granular access policies based on identity and context, not network location. By segmenting the network into smaller, isolated zones and applying strict access controls between them, you can prevent lateral movement from a compromised component to the rest of the system. This model is essential for modern, distributed architectures like microservices and cloud environments where traditional perimeter security is no longer sufficient.

How Zero Trust Works in Practice

Implementing a Zero Trust model involves layering multiple security controls to validate every access request dynamically. For a detailed overview of the core principles, you can explore the NIST Special Publication on Zero Trust Architecture. A practical implementation often includes:

- Micro-segmentation: In a Kubernetes environment, use Network Policies to define explicit ingress and egress rules for pods based on labels. For instance, a policy can specify that pods with the label `app=frontend` can only initiate traffic to pods with the label `app=api-gateway` on TCP port 8080.

- Identity-Based Access: Enforce strong authentication and authorization for all service-to-service communication. Implementing a service mesh like Istio or Linkerd allows you to enforce mutual TLS (mTLS), where each microservice presents a cryptographic identity (e.g., SPIFFE/SPIRE) to authenticate itself before any communication is allowed.

- Least Privilege Access: Grant users and services the minimum level of access required to perform their functions. Use Role-Based Access Control (RBAC) in Kubernetes or Identity and Access Management (IAM) in cloud providers to enforce this principle rigorously. An IAM role for an EC2 instance should only have permissions for the specific AWS services it needs to call.

- Continuous Monitoring: Actively monitor network traffic and access logs for anomalous behavior or policy violations. For example, use VPC Flow Logs and a SIEM to set up alerts for any attempts to access a production database from an unauthorized service or IP range, even if the firewall would have blocked it.
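The micro-segmentation example maps onto a single Kubernetes NetworkPolicy. To keep every example in one language, this sketch builds the manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The policy name and namespace are placeholders. The policy selects `app=frontend` pods and allows their egress only to `app=api-gateway` pods in the same namespace on TCP 8080. It has an effect only if your CNI plugin enforces NetworkPolicy.

```python
import json


def frontend_egress_policy(namespace: str = "shop") -> dict:
    """NetworkPolicy: app=frontend pods may only open connections to app=api-gateway on TCP 8080."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "frontend-egress", "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": "frontend"}},
            # With Egress declared, outbound traffic from these pods is denied
            # unless this (or another) policy explicitly allows it.
            "policyTypes": ["Egress"],
            "egress": [
                {
                    # A podSelector without a namespaceSelector matches pods in this namespace.
                    "to": [{"podSelector": {"matchLabels": {"app": "api-gateway"}}}],
                    "ports": [{"protocol": "TCP", "port": 8080}],
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(frontend_egress_policy(), indent=2))
```

As written, the policy also blocks DNS lookups from the frontend pods. Real deployments typically add a second egress rule that allows UDP and TCP port 53 to the cluster DNS pods.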

OpsMoon Expertise: Our platform engineers specialize in designing and implementing robust Zero Trust architectures. We can help you deploy and configure service meshes like Istio, write and manage Kubernetes Network Policies, and establish centralized identity and access management systems to secure your cloud-native applications from the ground up.

7. Enable Automated Security Testing (SAST, DAST, SCA)

Integrating automated security testing is a non-negotiable software security best practice for modern development teams. This approach embeds different types of security analysis directly into the CI/CD pipeline, allowing for continuous and rapid feedback on the security posture of your code. By automating these checks, you can systematically catch vulnerabilities before they reach production, without slowing down development velocity.

The three core pillars of this practice are SAST, DAST, and SCA. SAST (Static Application Security Testing) analyzes your source code for flaws without executing it. DAST (Dynamic Application Security Testing) tests your running application for vulnerabilities, and SCA (Software Composition Analysis) scans your dependencies for known security issues. Together, they provide comprehensive, automated security coverage.
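To make "analyzes source code without executing it" concrete, here is a toy static check built on Python's standard `ast` module. It parses source code and flags calls to any method named `execute` whose first argument is built with an f-string, `%` formatting, `+` concatenation, or `str.format`, which is a common SQL injection pattern. Real SAST tools such as Semgrep or CodeQL use far richer rules and data-flow (taint) analysis. This sketch only pattern-matches syntax and will produce both misses and false positives.

```python
import ast


def _is_dynamic_string(node: ast.AST) -> bool:
    """True if the expression looks like a string assembled at runtime."""
    if isinstance(node, ast.JoinedStr):  # f"... {x} ..."
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Mod, ast.Add)):
        return True  # "..." % x  or  "..." + x
    if (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format"):
        return True  # "...".format(x)
    return False


def find_sql_injection_risks(source: str, filename: str = "<string>") -> list:
    """Return sorted (line, message) pairs for execute() calls with dynamically built SQL."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute" and node.args
                and _is_dynamic_string(node.args[0])):
            findings.append((node.lineno, "SQL built from dynamic string passed to execute()"))
    return sorted(findings)
```

A parameterized call such as `cur.execute("SELECT * FROM users WHERE id = %s", (uid,))` passes a constant query string and is not flagged. That is the fix a SAST finding of this kind usually points to.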

How Automated Security Testing Works in Practice

Implementing automated security testing involves selecting the right tools for your technology stack and integrating them at key stages of your CI/CD pipeline. The goal is to create a safety net that automatically flags potential security risks, often blocking a build or deployment if critical issues are found. For a deeper look at security scanning tools, consider this OWASP Source Code Analysis Tools list. A robust setup includes:

- Static Application Security Testing (SAST): Integrate a tool like SonarQube, Semgrep, or GitHub’s native CodeQL to scan code on every commit or pull request. This provides developers with immediate feedback on security hotspots, such as potential SQL injection vulnerabilities identified by taint analysis or hardcoded secrets.

- Software Composition Analysis (SCA): Use tools like Snyk, Dependabot, or JFrog Xray to scan third-party libraries and frameworks. SCA tools check your project's manifests and lockfiles (e.g., `package-lock.json`, `pom.xml`) against a database of known vulnerabilities (CVEs) and can automatically create pull requests to update insecure packages.

- Dynamic Application Security Testing (DAST): Configure a DAST tool, such as OWASP ZAP or Burp Suite, to run against a staging or test environment after a successful deployment. The pipeline can trigger an authenticated scan that crawls the application, simulating external attacks to find runtime vulnerabilities like cross-site scripting (XSS) or insecure cookie configurations.

- Security Gates: Establish automated quality gates in your CI/CD pipeline. For example, configure your Jenkins or GitLab CI pipeline to fail if an SCA scan detects a high-severity vulnerability (CVSS score of 7.0 or higher) with a known exploit, preventing insecure code from being promoted.
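A security gate can be a short script that runs after the scanners and sets the job's exit status. The sketch assumes findings have already been normalized into a JSON list of objects with `id`, `cvss`, and `known_exploit` keys. That schema is hypothetical, because each SCA tool has its own report format. The gate fails on a CVSS score of 7.0 or higher, where the CVSS v3 "High" band begins, combined with a known exploit.

```python
import json
import sys

CVSS_THRESHOLD = 7.0  # CVSS v3.x "High" starts at 7.0


def blocking_findings(findings: list) -> list:
    """Findings that should fail the pipeline: high/critical AND a known exploit."""
    return [
        f for f in findings
        if f.get("cvss", 0.0) >= CVSS_THRESHOLD and f.get("known_exploit", False)
    ]


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as fh:
        findings = json.load(fh)  # hypothetical normalized schema (see above)
    blockers = blocking_findings(findings)
    for f in blockers:
        print(f"BLOCKING {f['id']}: CVSS {f['cvss']} with known exploit")
    return 1 if blockers else 0  # non-zero exit code fails the CI job


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

Keeping the gate logic in one small script, rather than in each scanner's configuration, makes the promotion policy easy to review and version alongside the pipeline.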

OpsMoon Expertise: Our team specializes in integrating comprehensive security testing into your CI/CD pipelines. We can help you select, configure, and tune SAST, DAST, and SCA tools to minimize false positives, establish meaningful security gates, and create dashboards that provide clear visibility into your application's security posture.

8. Enforce Principle of Least Privilege (PoLP) and RBAC

The Principle of Least Privilege (PoLP) is a foundational security concept stating that any user, program, or process should have only the bare minimum permissions necessary to perform its function. When combined with Role-Based Access Control (RBAC), which groups users into roles with defined permissions, PoLP becomes a powerful tool for controlling access and minimizing the potential damage from a compromised account or service. This is one of the most critical software security best practices for preventing lateral movement and privilege escalation.

Instead of granting broad, default permissions, this approach forces a deliberate and granular assignment of access rights. By restricting what an entity can do, you dramatically shrink the attack surface. If a component is compromised, the attacker's capabilities are confined to that component’s minimal set of permissions, preventing them from accessing sensitive data or other parts of the system.

How PoLP and RBAC Work in Practice

Implementing PoLP and RBAC involves defining roles based on job functions and assigning the most restrictive permissions possible to each. The goal is to move away from a model of implicit trust to one of explicit, verified access. A comprehensive access control strategy is detailed in resources like the NIST Access Control Guide. A practical implementation includes:

- Cloud Environments: Use AWS IAM policies or Azure RBAC role assignments to grant specific permissions. For instance, an application service running on EC2 that only needs to read objects from a specific S3 bucket should have an IAM role with a policy allowing only `s3:GetObject` on the resource `arn:aws:s3:::my-specific-bucket/*`, not `s3:*` on `*`.

- Kubernetes: Leverage Kubernetes RBAC to create fine-grained `Roles` and `ClusterRoles`. A CI/CD service account deploying to the `production` namespace should be bound to a `Role` with permissions limited to `get`, `create`, `update`, and `patch` on `Deployments` and `Services` resources only within that namespace (`get` is needed because `kubectl apply` reads the live object before patching it).

- Application Level: Define user roles within the application itself (e.g., 'admin', 'editor', 'viewer') and enforce access checks at the API gateway or within the application logic to ensure users can only perform actions and access data aligned with their role.

- Databases: Create dedicated database roles with specific `SELECT`, `INSERT`, or `UPDATE` permissions on certain tables or schemas, rather than granting a service account full `db_owner` or `root` privileges.
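The S3 example above translates into the IAM policy document generated below. A naive linter that flags wildcard actions and resources sits alongside it. The bucket name comes from the example. The linter is a hypothetical illustration of a review check. It ignores conditions, `NotAction`, `Deny` statements, and other features that a real analyzer (for example, IAM Access Analyzer policy validation) would evaluate.

```python
import json


def s3_read_only_policy(bucket: str) -> dict:
    """Least-privilege policy: read objects from one bucket, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


def _as_list(value):
    # IAM allows single values or lists for Statement, Action, and Resource.
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy: dict) -> list:
    """Flag Allow statements with '*' or 'service:*' actions, or a '*' resource."""
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: broad action {action!r}")
        for resource in _as_list(stmt.get("Resource", [])):
            if resource == "*":
                findings.append(f"statement {i}: wildcard resource")
    return findings


if __name__ == "__main__":
    print(json.dumps(s3_read_only_policy("my-specific-bucket"), indent=2))
```

Running a check like this in the pull request that changes a policy catches the `s3:*` on `*` pattern before it reaches an account.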

OpsMoon Expertise: Our cloud and security experts can help you design and implement a robust RBAC strategy across your entire technology stack. We audit existing permissions, create least-privilege IAM policies for AWS, Azure, and GCP, and configure Kubernetes RBAC to secure your containerized workloads, ensuring access is strictly aligned with operational needs.

9. Implement Incident Response and Disaster Recovery Planning

Even with the most robust preventative measures, security incidents can still occur. A comprehensive Incident Response (IR) and Disaster Recovery (DR) plan is a critical software security best practice that prepares your organization to detect, respond to, and recover from security breaches and service disruptions efficiently. This proactive planning minimizes financial damage, protects brand reputation, and ensures operational resilience by providing a clear, actionable roadmap for chaotic situations.

The goal is to move from a reactive, ad-hoc scramble to a structured, rehearsed process. A well-defined plan enables your team to contain threats quickly, eradicate malicious actors, restore services with minimal data loss, and conduct post-mortems to prevent future occurrences. It addresses not just the technical aspects of recovery but also the crucial communication and coordination required during a crisis.

How IR and DR Planning Works in Practice

Implementing a formal IR and DR strategy involves creating documented procedures, assigning clear responsibilities, and regularly testing your organization's readiness. For a deeper dive into establishing these procedures, explore our Best Practices for Incident Management. A mature plan includes several key components:

- Preparation Phase: Develop detailed incident response playbooks for common scenarios like ransomware attacks, data breaches, or DDoS attacks. These playbooks should contain technical checklists, communication templates, and contact information. Establish a dedicated Computer Security Incident Response Team (CSIRT) with defined roles and escalation paths.

- Detection & Analysis: Implement robust monitoring and alerting systems using SIEM (Security Information and Event Management) and observability tools. Define clear criteria for what constitutes a security incident to trigger the response plan, such as alerts from a Web Application Firewall (WAF) indicating a successful SQL injection attack.

- Containment, Eradication & Recovery: Outline specific technical procedures to isolate affected systems (e.g., using security groups to quarantine a compromised EC2 instance), preserve forensic evidence (e.g., taking a disk snapshot), remove the threat, and restore operations from secure, immutable backups. This includes DR strategies like automated failover to a secondary region or rebuilding services from scratch using Infrastructure as Code (IaC) templates.

- Post-Incident Activity: Conduct a blameless post-mortem to analyze the incident's root cause, evaluate the effectiveness of the response, and identify areas for improvement. Use these findings to update playbooks, harden security controls, and improve monitoring. Regularly test the plan through tabletop exercises and full-scale DR simulations (e.g., chaos engineering).
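The containment example for a compromised EC2 instance can be scripted in advance so responders do not improvise under pressure. The sketch below takes an EC2 client, for example one created with `boto3.client("ec2")`. It swaps the instance's security groups for a quarantine group, then snapshots each attached EBS volume for forensics. Several assumptions apply:

- The quarantine group is pre-created, with no inbound rules and its default outbound rule removed.
- The instance has a single network interface.
- The ID values are placeholders.

Error handling and waiting for snapshots to complete are omitted.

```python
def quarantine_instance(ec2, instance_id: str, quarantine_sg_id: str, case_id: str) -> list:
    """Isolate an EC2 instance and snapshot its EBS volumes; return the snapshot IDs.

    `ec2` is expected to be a boto3 EC2 client (or any object with the same methods).
    """
    # 1. Containment: replace all of the instance's security groups with the quarantine group.
    ec2.modify_instance_attribute(InstanceId=instance_id, Groups=[quarantine_sg_id])

    # 2. Evidence preservation: snapshot every attached EBS volume.
    reservations = ec2.describe_instances(InstanceIds=[instance_id])["Reservations"]
    volume_ids = [
        mapping["Ebs"]["VolumeId"]
        for reservation in reservations
        for instance in reservation["Instances"]
        for mapping in instance.get("BlockDeviceMappings", [])
        if "Ebs" in mapping
    ]
    snapshot_ids = []
    for volume_id in volume_ids:
        snap = ec2.create_snapshot(
            VolumeId=volume_id,
            Description=f"Forensic snapshot of {instance_id} for incident {case_id}",
            TagSpecifications=[{
                "ResourceType": "snapshot",
                "Tags": [{"Key": "incident", "Value": case_id}],
            }],
        )
        snapshot_ids.append(snap["SnapshotId"])
    return snapshot_ids
```

Consult AWS's documentation on security group connection tracking before relying on this step. Existing tracked connections may not be cut off immediately when the groups change, so a playbook may also need network ACL changes.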

OpsMoon Expertise: Our cloud and security experts specialize in designing and implementing resilient systems based on frameworks like the AWS Well-Architected Framework. We can help you create automated DR strategies, build immutable infrastructure, configure robust backup and recovery solutions, and conduct realistic failure-scenario testing to ensure your business can withstand and recover from any incident.

10. Maintain Security Patches and Dependency Updates

Proactive patch management is a critical software security best practice focused on keeping all components of your software stack, including operating systems, third-party libraries, and frameworks, current with the latest security updates. Neglected dependencies are a primary vector for attacks, as adversaries actively scan for systems running software with known, unpatched vulnerabilities (e.g., Log4Shell in Apache Log4j or CVE-2017-5638 in Apache Struts). This practice establishes a systematic process for identifying, testing, and deploying patches to close these security gaps swiftly.

The core principle is to treat dependency and patch management not as an occasional cleanup task but as a continuous, automated part of your operations. By integrating tools that automatically detect outdated components and vulnerabilities, you can address threats before they are exploited, maintaining the integrity and security of your applications and infrastructure.

How Patch and Dependency Management Works in Practice

Effective implementation involves automating the detection and, where possible, the application of updates. This reduces manual effort and minimizes the window of exposure. A robust strategy balances the urgency of security fixes with the need for stability, ensuring updates do not introduce breaking changes.

- Automated Dependency Scanning: Integrate tools like GitHub’s Dependabot, Renovate, or Snyk directly into your source code repositories. Configure them to automatically scan your `package.json`, `pom.xml`, or `requirements.txt` files daily, identify vulnerable dependencies, and create pull requests with the necessary version bumps.

- Prioritization and Triage: Not all patches are equal. Use the Common Vulnerability Scoring System (CVSS) and other threat intelligence, such as EPSS (Exploit Prediction Scoring System), to prioritize updates. Vulnerabilities known to be exploited in the wild (e.g., those in CISA's Known Exploited Vulnerabilities catalog, KEV) must be addressed within a strict SLA (e.g., 24-72 hours).

- Base Image and OS Patching: Automate the process of updating container base images (e.g., `node:22-alpine`) and underlying host operating systems. Set up CI/CD pipelines that periodically pull the latest secure base image, rebuild your application container, and run it through a full regression test suite before promoting it to production.

- Systematic Rollout: Implement phased rollouts (canary or blue-green deployments) for significant updates, especially for core infrastructure like the Kubernetes control plane or service mesh components. This allows you to validate functionality and performance on a subset of traffic before a full production rollout.

- End-of-Life (EOL) Monitoring: Actively track the lifecycle of your software components. When a library or framework (e.g., Python 2.7, AngularJS) reaches its end-of-life, it will no longer receive security patches, making it a permanent liability. Plan migrations away from EOL software well in advance.
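The prioritization step can be encoded so that every finding gets a deadline automatically. In the sketch below, the `Finding` fields mirror the signals named above (CVSS base score, EPSS probability, KEV membership), but the thresholds and SLA windows other than the 24-72 hour KEV window are illustrative assumptions, and the `VULN-*` identifiers are placeholders rather than real CVEs.

```python
"""Assign a remediation deadline to each vulnerability finding.

Thresholds (CVSS >= 9.0, EPSS >= 0.5, and so on) and the non-KEV SLA windows
are illustrative assumptions; tune them to your own risk policy.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    vuln_id: str
    cvss: float   # CVSS base score, 0.0-10.0
    epss: float   # EPSS exploitation probability, 0.0-1.0
    in_kev: bool  # listed in the CISA Known Exploited Vulnerabilities catalog


def sla_hours(f: Finding) -> int:
    if f.in_kev:
        # Known exploitation in the wild: the 24-72 hour window.
        return 24 if f.cvss >= 9.0 else 72
    if f.cvss >= 9.0 or f.epss >= 0.5:
        return 7 * 24
    if f.cvss >= 7.0 or f.epss >= 0.1:
        return 30 * 24
    return 90 * 24


def triage(findings: list[Finding]) -> list[Finding]:
    # Shortest deadline first; within a tier, likelier exploitation first.
    return sorted(findings, key=lambda f: (sla_hours(f), -f.epss, -f.cvss))
```

Sorting on the SLA first and EPSS second means a medium-severity bug that is actively exploited still jumps ahead of a theoretical critical one, which is the point of combining the signals.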
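EOL tracking can start as a simple inventory check that warns before support ends, leaving time to plan the migration. In this sketch the `EOL_DATES` table is hand-maintained: the Python 2.7 and AngularJS entries use their announced end-of-support dates, while `example-framework-3` and the 180-day warning window are hypothetical.

```python
"""Report whether tracked components are supported, nearing EOL, or past it.

EOL_DATES is a hand-maintained example; "example-framework-3" is hypothetical.
"""
from datetime import date, timedelta

EOL_DATES = {
    "python-2.7": date(2020, 1, 1),
    "angularjs-1.x": date(2021, 12, 31),
    "example-framework-3": date(2026, 6, 30),  # hypothetical component
}


def eol_status(
    component: str,
    today: date,
    warn_window: timedelta = timedelta(days=180),
) -> str:
    eol = EOL_DATES.get(component)
    if eol is None:
        return "unknown"  # untracked: add it to the inventory
    if today >= eol:
        return "end-of-life"  # no further security patches
    if today >= eol - warn_window:
        return "approaching-eol"  # start the migration now
    return "supported"
```

Passing `today` explicitly keeps the check deterministic in tests; a scheduled CI job would call it with `date.today()` and fail or alert on anything not `supported`.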

OpsMoon Expertise: Our infrastructure specialists excel at creating and managing large-scale, automated patch management systems. We can configure tools like Renovate for complex monorepos, build CI/CD pipelines that automate container base image updates and rebuilds, and establish clear Service Level Agreements (SLAs) for deploying critical security patches across your entire infrastructure.

Top 10 Software Security Best Practices Comparison

| Practice | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Implement Secure Software Development Lifecycle (SSDLC) | High: process changes, tool integration, training | Moderate–High: security tools, CI/CD integration, skilled staff | Fewer vulnerabilities, improved compliance, safer releases | Organizations building custom apps, regulated industries | Shifts security left, reduces remediation costs, builds security culture |
| Enforce Secret Management and Credential Rotation | Medium: vault integration and automation | Low–Medium: secret vault, rotation tooling, policies | Reduced credential leaks, audit trails, limited blast radius | Multi-cloud, Kubernetes, services with many secrets | Eliminates hardcoded creds, automated rotation, compliance-ready |
| Implement Container and Image Security Scanning | Medium: CI and registry integration | Medium: scanners, compute, vulnerability DB updates, triage | Fewer vulnerable images, SBOMs, improved supply-chain visibility | Containerized deployments, Kubernetes clusters, CI/CD pipelines | Prevents vulnerable containers in prod, enforces image policies |
| Deploy Infrastructure as Code (IaC) with Security Reviews | Medium–High: IaC adoption and policy-as-code | Medium: IaC tools, policy engines, code review processes | Consistent secure infra, drift detection, auditable changes | Cloud infrastructure teams, multi-environment deployments | Repeatable secure deployments, policy enforcement at scale |
| Establish Comprehensive Logging, Monitoring, and Observability | Medium: telemetry pipelines and alert tuning | High: storage, SIEM/monitoring platforms, analyst capacity | Faster detection/investigation, forensic evidence, compliance | Production systems needing incident detection and audits | Provides visibility for threat hunting, detection, and audits |
| Implement Network Segmentation and Zero Trust Architecture | High: architectural redesign, service mesh, identity | High: network, identity, policy management, ongoing ops | Reduced lateral movement, granular access control | Distributed systems, hybrid cloud, high-security environments | Limits blast radius, enforces least privilege across network |
| Enable Automated Security Testing (SAST, DAST, SCA) | Medium: tool selection and CI integration | Medium: testing tools, maintenance, triage resources | Early vulnerability detection, faster developer feedback | Active dev teams with CI/CD and rapid release cadence | Automates security checks, scales with development workflows |
| Enforce Principle of Least Privilege (PoLP) and RBAC | Medium: role modeling and governance | Medium: IAM tooling, access reviews, automation | Reduced unauthorized access, simpler audits, less overprivilege | Teams with many users/services and cloud resources | Minimizes overprivilege, reduces insider and lateral risks |
| Implement Incident Response and Disaster Recovery Planning | Medium: process design and regular testing | High: runbooks, backup systems, forensic tools, drills | Lower MTTD/MTTR, clear recovery procedures, auditability | Organizations requiring resilience, regulated industries | Improves recovery readiness and organizational resilience |
| Maintain Security Patches and Dependency Updates | Low–Medium: automation and testing workflows | Medium: update pipelines, test environments, monitoring | Reduced exposure to known vulnerabilities, lower technical debt | All software environments, especially dependency-heavy projects | Prevents exploitation of known flaws, keeps stack maintainable |

From Theory to Practice: Operationalizing Your Security Strategy

Navigating the landscape of modern software development requires more than just building functional features; it demands a deep, ingrained commitment to security. We have explored ten critical software security best practices that form the bedrock of a resilient and trustworthy application. From embedding security into the earliest stages with a Secure Software Development Lifecycle (SSDLC) to establishing robust incident response plans, each practice serves as a vital layer in a comprehensive defense-in-depth strategy.

The journey from understanding these principles to implementing them effectively is where the real challenge lies. It is not enough to simply acknowledge the importance of secret management or dependency updates. The key to a mature security posture is operationalization: transforming these concepts from checklist items into automated, integrated, and repeatable processes within your daily workflows.

Key Takeaways for a Mature Security Posture

The transition from a reactive to a proactive security model hinges on several core philosophical and technical shifts. Mastering these is not just about preventing breaches; it is about building a competitive advantage through reliability and user trust.

- Security is a Shared Responsibility: The "shift-left" principle is not just a buzzword. It represents a cultural transformation where developers, operations engineers, and security teams collaborate from day one. Integrating automated security testing (SAST, DAST, SCA) directly into the CI/CD pipeline empowers developers with immediate feedback, making security a natural part of the development process rather than an afterthought.

- Automation is Your Greatest Ally: Manual security reviews and processes cannot keep pace with modern release cycles. Automating container image scanning, Infrastructure as Code (IaC) security reviews (using tools like `tfsec` or `checkov`), and credential rotation policies is essential. Automation reduces human error, ensures consistent policy application, and frees up your engineering talent to focus on more complex strategic challenges.

- Assume a Breach, Build for Resilience: Zero Trust Architecture and the Principle of Least Privilege (PoLP) are critical because they force you to design systems that are secure by default. By assuming that any internal or external actor could be a threat, you are driven to implement stronger controls like network segmentation, strict Role-Based Access Control (RBAC), and comprehensive observability to detect and respond to anomalous activity quickly.
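Automated testing only shifts security left if the pipeline actually blocks on findings. Many scanners, including `checkov` and `tfsec`, can write results in SARIF, so a small gate script can fail the CI job on serious results. This is a sketch under simplifying assumptions: only level `"error"` blocks, and a result without a `level` is treated as `"warning"` (the SARIF default, ignoring any rule-level configuration that could override it). The `gate` function and `BLOCKING_LEVELS` set are hypothetical names.

```python
"""Fail a CI job when a SARIF report contains blocking findings.

Simplification: results with level "error" block; a missing level counts as
"warning", ignoring per-rule default configuration in the SARIF file.
"""
import json
import sys

BLOCKING_LEVELS = {"error"}


def blocking_results(sarif: dict) -> list[str]:
    """Return "tool: ruleId" strings for every blocking result in every run."""
    found = []
    for run in sarif.get("runs", []):
        tool = run.get("tool", {}).get("driver", {}).get("name", "unknown-tool")
        for result in run.get("results", []):
            if result.get("level", "warning") in BLOCKING_LEVELS:
                found.append(f"{tool}: {result.get('ruleId', 'unknown-rule')}")
    return found


def gate(path: str) -> int:
    """Return a process exit code: 1 if any blocking result exists, else 0."""
    with open(path, encoding="utf-8") as fh:
        blockers = blocking_results(json.load(fh))
    for item in blockers:
        print(f"BLOCKING {item}")
    return 1 if blockers else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage in CI: python sarif_gate.py results.sarif
    sys.exit(gate(sys.argv[1]))
```

Starting with only `"error"` as blocking and widening `BLOCKING_LEVELS` later is one way to introduce a gate without flooding developers with failures on day one.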
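Least privilege can also be checked automatically. The heuristic below scans an AWS IAM-style policy document for `Allow` statements that grant wildcard actions (`"*"` or `"service:*"`), wildcard resources, or use `NotAction` (which grants everything except the listed actions). It is a sketch, not a complete policy analyzer: some actions do not support resource-level permissions, so a `"*"` resource can be legitimate, and hits should be treated as review items. The function names are hypothetical.

```python
"""Flag Allow statements in an AWS IAM-style policy that grant broad access.

Heuristic sketch: hits are candidates for review, not definitive violations.
"""


def _as_list(value) -> list:
    # IAM fields may hold a single string/object or a list of them.
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def broad_statements(policy: dict) -> list[int]:
    """Return indices of Allow statements with wildcard actions or resources."""
    flagged = []
    for index, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements only narrow access
        actions = _as_list(stmt.get("Action"))
        resources = _as_list(stmt.get("Resource"))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        # NotAction in an Allow statement grants every action not listed.
        if wildcard_action or "*" in resources or "NotAction" in stmt:
            flagged.append(index)
    return flagged
```

Running a check like this in the same pipeline as the IaC scanners keeps overprivileged roles from reaching production unnoticed.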

Your Actionable Next Steps

Translating this knowledge into action can feel overwhelming, but a structured approach makes it manageable. Start by assessing your current state and identifying the most significant gaps.

- Conduct a Maturity Assessment: Where are you today? Do you have an informal SSDLC? Is secret management handled inconsistently? Use the practices outlined in this article as a scorecard to pinpoint your top 1-3 areas for immediate improvement.

- Prioritize and Implement Incrementally: Do not try to boil the ocean. Perhaps your most pressing need is to get control over vulnerable dependencies. Start there by integrating an SCA tool into your pipeline. Next, focus on implementing IaC security reviews for your Terraform configurations or CloudFormation templates. Each small, incremental win builds momentum and demonstrably reduces risk.

- Invest in Expertise: Implementing these technical solutions requires a specialized skill set that blends security acumen with deep DevOps engineering expertise. Building secure, automated CI/CD pipelines, configuring comprehensive logging and monitoring stacks, and hardening container orchestration platforms are complex tasks. Engaging with experts who have done it before can accelerate your progress and help you avoid common pitfalls.
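If you use the ten practices as a scorecard, even a tiny script keeps the assessment consistent between review cycles. The sketch below assumes a hypothetical 0-3 self-assessment scale (0 = absent, 3 = fully automated) and returns the lowest-scoring practices as the first candidates for improvement; unscored practices count as 0, and ties keep the article's order.

```python
"""Rank the ten practices by maturity gap to pick the top 1-3 to tackle first.

The 0-3 scale is a hypothetical self-assessment convention.
"""

PRACTICES = [
    "SSDLC",
    "Secret management and rotation",
    "Container and image scanning",
    "IaC with security reviews",
    "Logging, monitoring, and observability",
    "Network segmentation and Zero Trust",
    "Automated security testing",
    "Least privilege and RBAC",
    "Incident response and DR",
    "Patch and dependency management",
]


def top_gaps(scores: dict[str, int], n: int = 3) -> list[str]:
    """Return the n lowest-scoring practices."""
    unknown = set(scores) - set(PRACTICES)
    if unknown:
        raise ValueError(f"unknown practices: {sorted(unknown)}")
    if any(not 0 <= s <= 3 for s in scores.values()):
        raise ValueError("scores must be between 0 and 3")
    # sorted() is stable, so equal scores keep the order of PRACTICES.
    return sorted(PRACTICES, key=lambda p: scores.get(p, 0))[:n]
```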

Ultimately, adopting these software security best practices is an ongoing commitment to excellence and a fundamental component of modern software engineering. It is a continuous cycle of assessment, implementation, and refinement that protects your data, your customers, and your reputation in an increasingly hostile digital world.

Ready to move from theory to a fully operationalized security strategy? The expert DevOps and SRE talent at OpsMoon specializes in implementing the robust, automated security controls your business needs. Schedule a free work planning session today to build a roadmap for a more secure and resilient software delivery pipeline.