Master the 5 Phases of the Software Development Life Cycle

Explore the five phases of the software development life cycle, from requirements to deployment. Learn proven strategies for building successful, high-quality software.

The software development life cycle is a structured process that partitions the work of creating software into five distinct phases: Requirements, Design, Implementation, Testing, and Deployment & Maintenance. This isn't a rigid corporate process but a technical framework, like an architect's blueprint for a skyscraper. It provides a strategic, engineering-focused roadmap for transforming a conceptual idea into high-quality, production-ready software.

Your Blueprint for Building Great Software

Following a structured methodology is your primary defense against common project failures like budget overruns, missed deadlines, and scope creep. This is where the Software Development Life Cycle (SDLC) provides critical discipline, breaking the complex journey into five fundamental phases. Each phase has specific technical inputs and outputs that are essential for delivering quality software efficiently.

Poor planning is a leading cause of project failure. Inadequate requirements gathering alone is widely reported to derail a significant share of software projects. A robust SDLC framework provides the structure needed to mitigate these risks.

The core principle is to build correctly from the start to avoid costly rework. Each phase systematically builds upon the outputs of the previous one, creating a stable and predictable path from initial concept to a successful production deployment.

Before a deep dive into each phase, this table provides a high-level snapshot of the entire process, outlining the primary technical objective and key deliverable for each stage.

The 5 SDLC Phases at a Glance

| Phase | Primary Technical Objective | Key Deliverable |
|---|---|---|
| Requirements | Elicit, analyze, and document all functional and non-functional requirements. | Software Requirements Specification (SRS) |
| Design | Define the software's architecture, data models, and component interfaces. | High-Level Design (HLD) & Low-Level Design (LLD) Documents |
| Implementation | Translate design specifications into clean, efficient, and maintainable source code. | Version-controlled Source Code & Executable Builds |
| Testing | Execute verification and validation procedures to identify and eliminate defects. | Test Cases, Execution Logs & Bug Reports |
| Deployment & Maintenance | Release the software to production and manage its ongoing operation and evolution. | Deployed Application & Release Notes/Patches |

Consider this table your technical reference. Now, let's deconstruct the specific activities and deliverables within each phase, beginning with the foundational stage: Requirements.

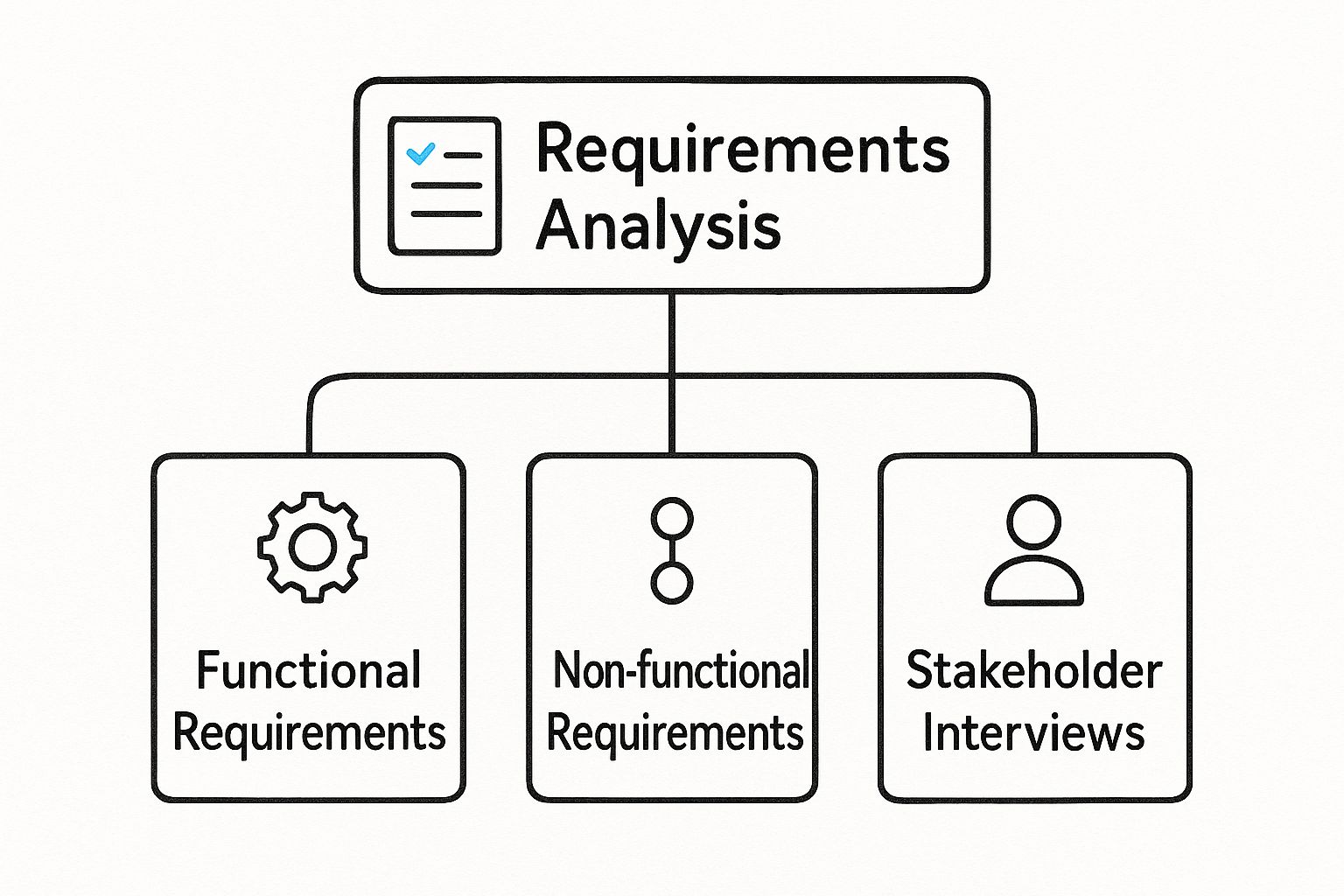

Understanding the Core Components

The first phase, Requirements, is a technical discovery process focused on defining the "what" and "why" of the project. This involves structured sessions with stakeholders to precisely document what the system must do (functional requirements) and the constraints it must operate under, such as performance or security (non-functional requirements).

This phase establishes the technical foundation for the entire project. Errors or ambiguities here will propagate through every subsequent phase, leading to significant technical debt.

As shown, a robust project foundation is built by translating stakeholder needs into precise, actionable technical specifications.

To fully grasp the SDLC, it helps to understand how the life cycle is managed as a whole. That broader context connects the individual phases into a cohesive, high-velocity delivery engine. Each phase explored below is a critical link in this engineering value chain.

1. Laying the Foundation with Requirements Engineering

Software projects begin with an idea, but ideas are inherently ambiguous and incomplete. The requirements engineering phase is where this ambiguity is systematically transformed into a concrete, technical blueprint.

This is the most critical stage in the software development life cycle. Data from the Project Management Institute shows that a significant percentage of project failures are directly attributable to poor requirements management. Getting this phase right is a mission-critical dependency for project success.

Think of this phase as a technical interrogation of the system's future state. The objective is to build an unambiguous, shared understanding among stakeholders, architects, and developers before any code is written. This mitigates the high cost of fixing flawed assumptions discovered later in the lifecycle.

From Vague Ideas to Concrete Specifications

The core activity here is requirements elicitation—the systematic gathering of information. This is an active investigation utilizing structured techniques to extract precise details from end-users, business executives, and subject matter experts.

An effective elicitation process combines several methods:

- Structured Interviews: Formal sessions with key stakeholders to define high-level business objectives, constraints, and success metrics.

- Workshops (JAD sessions): Facilitated Joint Application Design sessions that bring diverse user groups together to resolve conflicts and build consensus on functionality in real-time.

- User Story Mapping: A visual technique to map the user's journey, breaking it down into epics, features, and granular user stories. This is highly effective for defining functional requirements from an end-user perspective.

- Prototyping: Creation of low-fidelity wireframes or interactive mockups. This provides a tangible artifact for users to interact with, generating specific and actionable feedback that abstract descriptions cannot.

Each technique serves to translate subjective business wants into objective, testable technical requirements that will form the project's foundation.

Creating the Project Blueprint Documents

The collected information must be formalized into engineering documents that serve as the contract for development. Two critical outputs are the Business Requirements Document (BRD) and the Software Requirements Specification (SRS).

Business Requirements Document (BRD):

This document outlines the "why." It defines the high-level business needs, project scope, and key performance indicators (KPIs) for success, written for a business audience.

Software Requirements Specification (SRS):

The SRS is the technical counterpart to the BRD. It translates business goals into detailed functional and non-functional requirements. This document is the primary input for architects and developers.

A well-architected SRS is unambiguous, complete, consistent, and verifiable. It becomes the single source of truth for the engineering team. Without it, development is based on assumption, introducing unacceptable levels of risk.

Preventing Scope Creep and Ambiguity

Two primary risks threaten this phase: scope creep (the uncontrolled expansion of requirements) and ambiguity (e.g., "the system must be fast").

To see how modern frameworks mitigate these risks, read our guide to the DevOps methodology, whose principles are designed to maintain alignment and control scope.

Here are actionable strategies to maintain control:

- Establish a Formal Change Control Process: No requirement is added or modified without a formal Change Request (CR). Each CR is evaluated for its impact on schedule, budget, and technical architecture, and must be approved by a Change Control Board (CCB).

- Quantify Non-Functional Requirements (NFRs): Vague requirements must be made measurable. "Fast" becomes "API response times for endpoint X must be < 200ms under a load of 500 concurrent users." Now it is a testable requirement.

- Prioritize with a Framework: Use a system like MoSCoW (Must-have, Should-have, Could-have, Won't-have) to formally categorize every feature. This provides clarity on the Minimum Viable Product (MVP) and manages stakeholder expectations.
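A quantified NFR like the one above can be turned directly into an automated check. The following is a minimal sketch, assuming latency samples (in milliseconds) have already been collected by a load-testing tool at the specified load. The function names and the choice of the 95th percentile are illustrative assumptions; the example requirement does not say which statistic applies, so a real SRS should state it.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Return the nearest-rank percentile (0 < pct <= 100) of the samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def meets_latency_nfr(samples_ms: Sequence[float],
                      threshold_ms: float = 200.0,
                      pct: float = 95.0) -> bool:
    """True when the chosen percentile is strictly below the threshold."""
    return percentile(samples_ms, pct) < threshold_ms
```

Because the check returns a plain boolean, it can gate a build or a release in the same way as any other test.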

By implementing these engineering controls, you establish a stable foundation, ready for a seamless transition into the design phase.

2. Architecting the Solution: The Design Phase

With the requirements locked down, the focus shifts from what the software must do to how it will be engineered. The design phase translates the SRS into a concrete technical blueprint.

This is analogous to an architect creating detailed schematics for a building. Structural loads, electrical systems, and data flows must be precisely mapped out before construction begins. Bypassing this stage tends to produce a brittle, unscalable, and unmaintainable system.

Rushing design leads to architectural flaws that are far more expensive to fix later in the lifecycle. A rigorous design phase ensures the final product is performant, scalable, secure, and maintainable.

High-Level vs. Low-Level Design

System design is bifurcated into two distinct but connected stages: High-Level Design (HLD) and Low-Level Design (LLD).

High-Level Design (HLD): The Architectural Blueprint

The HLD defines the macro-level architecture. It decomposes the system into major components, services, and modules and defines their interactions and interfaces.

Key technical decisions made here include:

- Architectural Pattern: Will this be a monolithic application or a distributed microservices architecture? This decision impacts scalability, deployment complexity, and team structure.

- Technology Stack: Selection of programming languages (e.g., Go, Python), databases (e.g., PostgreSQL vs. Cassandra), messaging queues (e.g., RabbitMQ, Kafka), and frameworks (e.g., Spring Boot, Django).

- Third-Party Integrations: Defining API contracts and data exchange protocols for interacting with external services (e.g., Stripe for payments, Twilio for messaging).

The HLD provides the foundational architectural strategy for the project.

Low-Level Design (LLD): The Component-Level Schematics

With the HLD approved, the LLD zooms into the micro-level, detailing the internal implementation of each component identified in the HLD.

This is where developers get the implementation specifics:

- Class Diagrams & Method Signatures: Defining the specific classes, their attributes, methods, and relationships within each module.

- Database Schema: Specifying the exact tables, columns, data types, indexes, and foreign key constraints for the database.

- API Contracts: Using a specification like OpenAPI/Swagger to define the precise request/response payloads, headers, and status codes for every endpoint.

The LLD provides an unambiguous implementation guide for developers, ensuring that all components will integrate correctly.
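To make the level of detail concrete, here is a rough sketch of how an LLD's method signatures and payload definitions might carry over into code. Every name here (`CreateOrderRequest`, `CreateOrderResponse`, `OrderService`, and their fields) is hypothetical and chosen only for illustration; a real LLD would define its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreateOrderRequest:
    """Request payload as an LLD might specify it (hypothetical fields)."""
    customer_id: int
    sku: str
    quantity: int

    def __post_init__(self) -> None:
        # The contract states the constraint once, at the boundary.
        if self.quantity <= 0:
            raise ValueError("quantity must be a positive integer")


@dataclass(frozen=True)
class CreateOrderResponse:
    """Response payload returned to the caller."""
    order_id: int
    status: str


class OrderService:
    """In-memory stand-in for a component whose signature the LLD fixes."""

    def __init__(self) -> None:
        self._next_order_id = 1

    def create_order(self, request: CreateOrderRequest) -> CreateOrderResponse:
        order_id = self._next_order_id
        self._next_order_id += 1
        return CreateOrderResponse(order_id=order_id, status="created")
```

Fixing the types and constraints up front is what lets two developers build the caller and the service independently and still integrate cleanly.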

A strong HLD ensures you're building the right system architecture. A detailed LLD ensures you're building the system components right. Both are indispensable.

Key Outputs and Tough Decisions

The design phase involves critical engineering trade-offs. The monolithic vs. microservices decision is a primary example. A monolith offers initial simplicity but can become a scaling and deployment bottleneck. Microservices provide scalability and independent deployment but introduce significant operational complexity in areas like service discovery, distributed tracing, and data consistency.

Another critical activity is data modeling. A poorly designed data model can lead to severe performance degradation and data integrity issues that are extremely difficult to refactor once in production.
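As an illustration of how a data model can protect integrity by design, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `customers` and `orders` tables, their columns, and the helper names are assumptions made for the example, not a recommended schema.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a two-table model with constraints declared in the schema."""
    conn.executescript(
        """
        PRAGMA foreign_keys = ON;  -- SQLite enforces foreign keys only when enabled
        CREATE TABLE customers (
            id    INTEGER PRIMARY KEY,
            email TEXT NOT NULL UNIQUE
        );
        CREATE TABLE orders (
            id          INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
        );
        CREATE INDEX idx_orders_customer ON orders(customer_id);
        """
    )


def try_insert_order(conn: sqlite3.Connection,
                     customer_id: int, total_cents: int) -> bool:
    """Insert an order; return False if a schema constraint rejects it."""
    try:
        conn.execute(
            "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
            (customer_id, total_cents),
        )
        return True
    except sqlite3.IntegrityError:
        return False
```

With the constraints in the schema, an order that points at a non-existent customer or carries a negative total is rejected by the database itself rather than relying on every caller to check.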

To validate these architectural decisions, teams often build prototypes before committing to production code. These can range from simple UI mockups in tools like Figma or Sketch to functional Proof-of-Concept (PoC) applications that test a specific technical approach (e.g., evaluating the performance of a particular database).

The primary deliverable is the Design Document Specification (DDS), a formal document containing the HLD, LLD, data models, and API contracts. This document is the definitive engineering guide for the implementation phase. A well-executed design phase is the most effective form of risk mitigation in software development.

3. Building the Product: The Implementation Phase

With the architectural blueprint signed off, the project moves from abstract plans to tangible code. This is the implementation phase, where developers roll up their sleeves and start building the actual software. They take all the design documents, user stories, and specifications and translate them into clean, efficient source code.

This isn't just about hammering out code as fast as possible. The quality of the work here sets the stage for everything that follows—performance, scalability, and how easy (or painful) it will be to maintain down the road. Rushing this step often leads to technical debt, which is just a fancy way of saying you've created future problems for yourself by taking shortcuts today.

Laying the Ground Rules: Engineering Best Practices

To keep the codebase from turning into a chaotic mess, high-performing teams lean on a set of proven engineering practices. These aren't just arbitrary rules; they're the guardrails that keep development on track, especially when multiple people are involved.

First up are coding standards. Think of these as a style guide for your code. They dictate formatting, naming conventions, and other rules so that every line of code looks and feels consistent, no matter who wrote it. This simple step makes the code immensely easier for anyone to read, debug, and update later.

The other non-negotiable tool is a version control system (VCS), and the undisputed king of VCS is Git. Git allows a whole team of developers to work on the same project at the same time without stepping on each other's toes. It logs every single change, creating a complete history of the project. If a new feature introduces a nasty bug, you can easily rewind to a previous stable state.

Building Smart: Modular Development and Agile Sprints

Modern software isn't built like a giant, solid sculpture carved from a single block of marble. It’s more like building with LEGO bricks. This approach is called modular development, where the system is broken down into smaller, self-contained, and interchangeable modules.

This method has some serious advantages:

- Work in Parallel: Different teams can tackle different modules simultaneously, which drastically cuts down development time.

- Easier Fixes: If a bug pops up in one module, you can fix and redeploy just that piece without disrupting the entire application.

- Reuse and Recycle: A well-built module can often be repurposed for other projects, saving a ton of time and effort in the long run.
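One common way to get interchangeable modules is to have calling code depend on a small interface rather than on a concrete implementation. The sketch below uses `typing.Protocol` for this; the `Notifier` interface and both implementations are hypothetical examples.

```python
from typing import Protocol


class Notifier(Protocol):
    """The interface every notification module must satisfy."""

    def send(self, recipient: str, message: str) -> str: ...


class EmailNotifier:
    def send(self, recipient: str, message: str) -> str:
        return f"email to {recipient}: {message}"


class SmsNotifier:
    def send(self, recipient: str, message: str) -> str:
        return f"sms to {recipient}: {message}"


def notify_order_shipped(notifier: Notifier, recipient: str) -> str:
    """Business logic that works with any module matching the interface."""
    return notifier.send(recipient, "Your order has shipped.")
```

Swapping `EmailNotifier` for `SmsNotifier` requires no change to `notify_order_shipped`, which is what allows separate teams to build and fix modules independently.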

In an Agile world, these modules or features are built in short, focused bursts called sprints. A sprint usually lasts between one and four weeks, and the goal is always the same: have a small, working, and shippable piece of the product ready by the end. This iterative cycle allows for constant feedback and keeps the project aligned with what users actually need.

One of the most crucial quality checks in this process is the peer code review. Before any new code gets added to the main project, another developer has to look it over. They hunt for potential bugs, suggest improvements, and make sure everything lines up with the coding standards. It's a simple, collaborative step that does wonders for maintaining high code quality.

The demand for developers who can work within these structured processes is only growing. The U.S. Bureau of Labor Statistics, for instance, projects a 22% increase in software developer jobs between 2019 and 2029. Well-defined SDLC phases like this one create the clarity needed for distributed teams to work together seamlessly across the globe. You can learn more by exploring some detailed insights about the software product lifecycle.

Automating the Assembly Line with Continuous Integration

Imagine trying to manually piece together code changes from a dozen different developers every day. It would be slow, tedious, and a recipe for disaster. That's the problem Continuous Integration (CI) solves. CI is a practice where developers merge their code changes into a central repository several times a day. Each time they do, an automated process kicks off to build and test the application.

A typical CI pipeline looks something like this:

- Code Commit: A developer pushes their latest changes to a shared repository hosted on a platform like GitHub.

- Automated Build: A CI server (tools like Jenkins or GitLab CI) spots the new code and automatically triggers a build.

- Automated Testing: If the build is successful, a battery of automated tests runs to make sure the new code didn't break anything.

If the build fails or a test doesn't pass, the entire team gets an immediate notification. This means integration bugs are caught and fixed in minutes, not days or weeks. By automating this workflow, CI pipelines speed up development and clear the way for a smooth handoff to the next phase: testing.
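The fail-fast behavior described above can be sketched in a few lines. This illustrates the control flow only and is not how Jenkins or GitLab CI are actually configured; `run_pipeline`, `PipelineResult`, and the stage callables are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


@dataclass
class PipelineResult:
    passed: bool
    completed: List[str] = field(default_factory=list)
    failed_stage: Optional[str] = None


def run_pipeline(stages: Sequence[Stage],
                 notify: Callable[[str], None]) -> PipelineResult:
    """Run stages in order; stop and notify the team at the first failure."""
    completed: List[str] = []
    for name, step in stages:
        if not step():
            notify(f"Pipeline failed at stage: {name}")
            return PipelineResult(False, completed, name)
        completed.append(name)
    return PipelineResult(True, completed)
```

Later stages never run once an earlier one fails, so a broken build is reported before any time is spent testing or packaging it.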

4. Ensuring Quality With Rigorous Software Testing

So, the code is written. The features are built. We're done, right? Not even close. Raw code is a long way from a finished product, which brings us to the fourth phase of the SDLC, and one of the most critical: Testing.

Think of this stage as the project's quality control department. It’s a systematic, multi-layered hunt for defects, designed to find and squash bugs before they ever see the light of day. A product isn’t ready until it's been proven to work under pressure.

Shipping untested code is like launching a ship with holes in the hull. You're just asking for a flood of bugs, performance nightmares, and security holes. This stage is all about methodically finding those problems with a real strategy, not just random clicking.

Deconstructing The Testing Pyramid

A rookie mistake is lumping all "testing" into one big bucket. In reality, a smart Quality Assurance (QA) strategy is structured like a pyramid, with different kinds of tests forming distinct layers. This approach is all about optimizing for speed and efficiency.

- Unit Tests (The Foundation): These are the bedrock. They're fast, isolated tests that check the smallest possible pieces of your code, like a single function. Developers write these to make sure each individual "building block" does exactly what it's supposed to do. You'll have tons of these, and they should run in seconds.

- Integration Tests (The Middle Layer): Okay, so the individual blocks work. But do they work together? Integration tests are designed to find out. Does the login module talk to the database correctly? These tests are a bit slower but are absolutely essential for finding cracks where different parts of your application meet.

- End-to-End (E2E) System Tests (The Peak): At the very top, we have E2E tests. These simulate an entire user journey from start to finish—logging in, adding an item to a cart, checking out. They validate the whole workflow, ensuring everything functions as one cohesive system. They're the slowest and most complex, which is why you have fewer of them.
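The difference between the bottom two layers is easier to see in code. Here is a small sketch using Python's standard `unittest` module; `apply_discount` and `Cart` are made-up examples, and a real E2E test would additionally drive the deployed application through its UI or API.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Smallest unit under test: a pure function."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class Cart:
    """A second component that depends on apply_discount."""

    def __init__(self) -> None:
        self._items = []

    def add(self, price_cents: int) -> None:
        self._items.append(price_cents)

    def total(self, discount_percent: int = 0) -> int:
        return apply_discount(sum(self._items), discount_percent)


class ApplyDiscountUnitTest(unittest.TestCase):
    """Unit layer: one function, checked in isolation."""

    def test_ten_percent_off(self) -> None:
        self.assertEqual(apply_discount(1000, 10), 900)

    def test_rejects_invalid_percent(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(1000, 101)


class CartIntegrationTest(unittest.TestCase):
    """Integration layer: do Cart and apply_discount work together?"""

    def test_total_applies_discount(self) -> None:
        cart = Cart()
        cart.add(1000)
        cart.add(500)
        self.assertEqual(cart.total(discount_percent=20), 1200)


def run_suite() -> unittest.TestResult:
    """Load both layers into one suite and run them."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(ApplyDiscountUnitTest))
    suite.addTests(loader.loadTestsFromTestCase(CartIntegrationTest))
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` executes all three tests. In a larger codebase the unit layer would dominate the count, matching the pyramid shape.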

A Spectrum Of Testing Disciplines

Beyond the pyramid's structure, testing involves a whole range of disciplines, each targeting a different facet of software quality. Getting this right is a huge part of mastering software quality assurance processes.

This table breaks down some of the most common testing types you'll encounter.

Comparison of Key Testing Types in SDLC

| Testing Type | Main Objective | Typical Stage | Example Defects Found |
|---|---|---|---|
| Functional Testing | Verifies that each feature works according to the SRS. | Throughout | A "Save" button doesn't save the data. |
| Performance Testing | Measures speed, responsiveness, and stability under load. | Pre-release | The application crashes when 100 users log in at once. |
| Security Testing | Identifies vulnerabilities and weaknesses in the application's defenses. | Pre-release | A user can access another user's private data. |
| Usability Testing | Assesses how easy and intuitive the software is for real users. | Late-stage | Users can't figure out how to complete a core task. |
| User Acceptance Testing (UAT) | The final check where actual stakeholders or clients validate the software. | Pre-deployment | The software works but doesn't solve the business problem it was intended to. |

Each type plays a unique role in ensuring the final product is robust, secure, and user-friendly.

From Bug Reports To Automated Frameworks

The whole testing process churns out a ton of data, mostly in the form of bug reports. A solid bug-tracking workflow is non-negotiable. Using tools like Jira, testers log detailed tickets for every defect, including clear steps to reproduce it, its severity, and screenshots. This gives developers everything they need to find the problem and fix it fast.

Catching bugs early isn't just a nice-to-have; it's a massive cost-saver. Commonly cited industry estimates suggest that fixing a defect in production can be up to 100 times more expensive than fixing it during development.

To keep up with the pace of modern development, teams lean heavily on automation. Manual testing is slow, tedious, and prone to human error. Automation frameworks like Selenium or Cypress let teams write scripts that run repetitive tests over and over, perfectly every time.

This frees up your human testers to do what they do best: creative exploratory testing and deep usability checks that machines just can't handle. Of course, this all hinges on great communication. Mastering the art of giving constructive feedback in code reviews is key to making this iterative cycle of testing and fixing run smoothly.

5. Launching and Maintaining the Final Product

After all the intense cycles of building and testing, we’ve reached the final milestone: getting your software into the hands of real users. This is where the rubber meets the road. The deployment and maintenance phase is the culmination of every previous effort and the true beginning of your product's life in the wild.

Deployment is a lot more than just flipping a switch and hoping for the best. It's a carefully choreographed technical process designed to release new code without causing chaos. Gone are the days of the risky "big bang" launch. Modern teams use sophisticated strategies to minimize downtime and risk, ensuring users barely notice a change—except for the awesome new features, of course.

Advanced Deployment Strategies

Service interruptions can cost a business thousands of dollars per minute, so teams have gotten very clever about avoiding them. These advanced deployment patterns are central to modern DevOps and allow for controlled, safe releases.

- Blue-Green Deployment: Picture two identical production environments, nicknamed "Blue" and "Green." If your live traffic is on Blue, you deploy the new version to the idle Green environment. After a final round of checks, you simply reroute all traffic to Green. If anything goes wrong? No sweat. You can instantly switch traffic back to Blue.

- Canary Deployment: This technique is like sending a canary into a coal mine. You roll out the new version to a tiny subset of users—the "canaries." The team monitors performance and user feedback like a hawk. If all systems are go, the release is gradually rolled out to everyone else. This approach dramatically minimizes the blast radius of any potential bugs.
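Both patterns come down to a routing decision, which can be sketched as follows. This is a simplified model under stated assumptions: real deployments usually make this decision in a load balancer or service mesh, and `in_canary` and `BlueGreenRouter` are hypothetical names. Hashing the user ID is one common way to keep each user's assignment stable between requests.

```python
import hashlib


def in_canary(user_id: str, rollout_percent: int) -> bool:
    """Deterministically decide whether a user gets the new version."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < rollout_percent


class BlueGreenRouter:
    """Tracks which of two identical environments receives live traffic."""

    def __init__(self, live: str = "blue") -> None:
        if live not in ("blue", "green"):
            raise ValueError("live must be 'blue' or 'green'")
        self.live = live

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def switch(self) -> str:
        """Cut traffic over to the idle environment; call again to roll back."""
        self.live = self.idle
        return self.live
```

Because a user's bucket never changes, raising `rollout_percent` only adds users to the canary group, and rolling back a blue-green release is the same `switch()` call that performed it.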

These days, strategies like these are almost always managed by automated Continuous Deployment (CD) pipelines. These pipelines handle the entire release process, from the moment a developer commits code to the final launch in production.

The Cycle Continues with Maintenance

Here’s the thing about software: deployment is a milestone, not the finish line. The second your software goes live, the maintenance phase begins. This is often the longest and most resource-intensive part of the whole lifecycle. The work doesn’t stop; it just shifts focus.

For a deeper look into this stage, explore our guide on mastering the software release lifecycle.

This ongoing phase is all about making sure the software stays stable, secure, and relevant. It breaks down into a few key activities:

- Proactive Monitoring: This means using observability tools to keep a close eye on application performance, infrastructure health, and user activity in real-time. It's about spotting trouble before it turns into a critical failure.

- Efficient Bug Fixing: You need a crystal-clear process for users to report bugs and for developers to prioritize, fix, and deploy patches—fast.

- Planning Feature Updates: The cycle begins anew. You gather user feedback and market data to plan the next round of features and improvements, feeding that information right back into the requirements phase for the next version.
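As a small illustration of proactive monitoring, the sketch below tracks the error rate over the most recent requests and signals when it crosses a threshold. Production teams would normally rely on an observability platform for this; the `ErrorRateMonitor` class, its window size, and its threshold are illustrative assumptions.

```python
from collections import deque


class ErrorRateMonitor:
    """Sliding-window error rate over the last `window_size` requests."""

    def __init__(self, window_size: int = 100, threshold: float = 0.05) -> None:
        if window_size <= 0:
            raise ValueError("window_size must be positive")
        self._outcomes = deque(maxlen=window_size)  # oldest entries drop off
        self.threshold = threshold

    def record(self, is_error: bool) -> None:
        self._outcomes.append(is_error)

    def error_rate(self) -> float:
        if not self._outcomes:
            return 0.0
        return sum(self._outcomes) / len(self._outcomes)

    def should_alert(self) -> bool:
        """True once the recent error rate exceeds the threshold."""
        return self.error_rate() > self.threshold
```

Using a bounded window means the alert reflects recent behavior, so a burst of failures after a release shows up quickly instead of being averaged away by older traffic.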

Maintenance isn't just about fixing what's broken. It's about proactively managing the product's evolution. A well-oiled maintenance phase is what guarantees long-term value and user happiness, right up until the day the product is eventually retired.

Frequently Asked Questions

How Does the 5-Phase SDLC Model Differ from Agile Methodologies?

Think of the five SDLC phases, when run strictly in sequence (the approach usually called the Waterfall model), like building a house from a fixed blueprint. Every step is linear and sequential. You lay the foundation, then build the frame, then the walls, and so on. You can't start roofing before the walls are completely finished, and all decisions are locked in from the start.

Agile, on the other hand, is like building one room perfectly, getting feedback, and then building the next. Methodologies like Scrum break the project into short cycles called "sprints." In each sprint, a small piece of the final product goes through all five phases—requirements, design, build, test, and deploy. The biggest difference is that Agile embraces change, making it far more flexible and adaptive.

What Is the Most Common Reason for Failure in an SDLC Process?

It almost always comes down to one place: the Requirements Gathering phase. Time and time again, industry analysis points to incomplete, fuzzy, or constantly shifting requirements as the number one project killer.

When you get this part wrong, the mistakes snowball. A flawed requirement leads to a flawed design, which means developers waste time building the wrong thing. Then, you spend even more time and money on rework during testing. This is exactly why a rock-solid Software Requirements Specification (SRS) document and getting genuine stakeholder buy-in early on are non-negotiable.

Can Any of the SDLC Phases Be Skipped to Save Time?

That's a tempting shortcut that almost always ends in disaster. Skipping phases doesn't save time or money; it just moves the cost and pain further down the line, where it's much more expensive to fix.

Imagine skipping the Design phase. You might get code written faster, but it will likely be a tangled mess—hard to maintain, difficult to update, and a nightmare to test. And skipping the Testing phase? You're essentially shipping a product with a "good luck!" note to your users, praying they don't find the bugs that will inevitably wreck their experience and your reputation. Each phase is a critical checkpoint for a reason: it manages risk and builds quality into the final product.
