A Practical Guide to SOC 2 Requirements for Engineers

Master SOC 2 requirements with this technical guide. Learn how to translate the Trust Services Criteria into actionable engineering controls for your audit.

When people hear "SOC 2 requirements," they often picture a massive, rigid checklist. But SOC 2 is a flexible framework, not a prescriptive rulebook. It’s built to prove your systems are secure and reliable, based on five core principles known as the Trust Services Criteria.

For anyone just starting out, getting a handle on the basics is key. If you're looking for a good primer, this piece on What Is SOC 2 Compliance is a great place to begin.

The framework, developed by the American Institute of Certified Public Accountants (AICPA), provides customers with verifiable proof that you handle their data responsibly. Instead of forcing a one-size-fits-all model, it allows you to tailor the audit to your specific services and technical architecture.

What Are the Core SOC 2 Requirements?

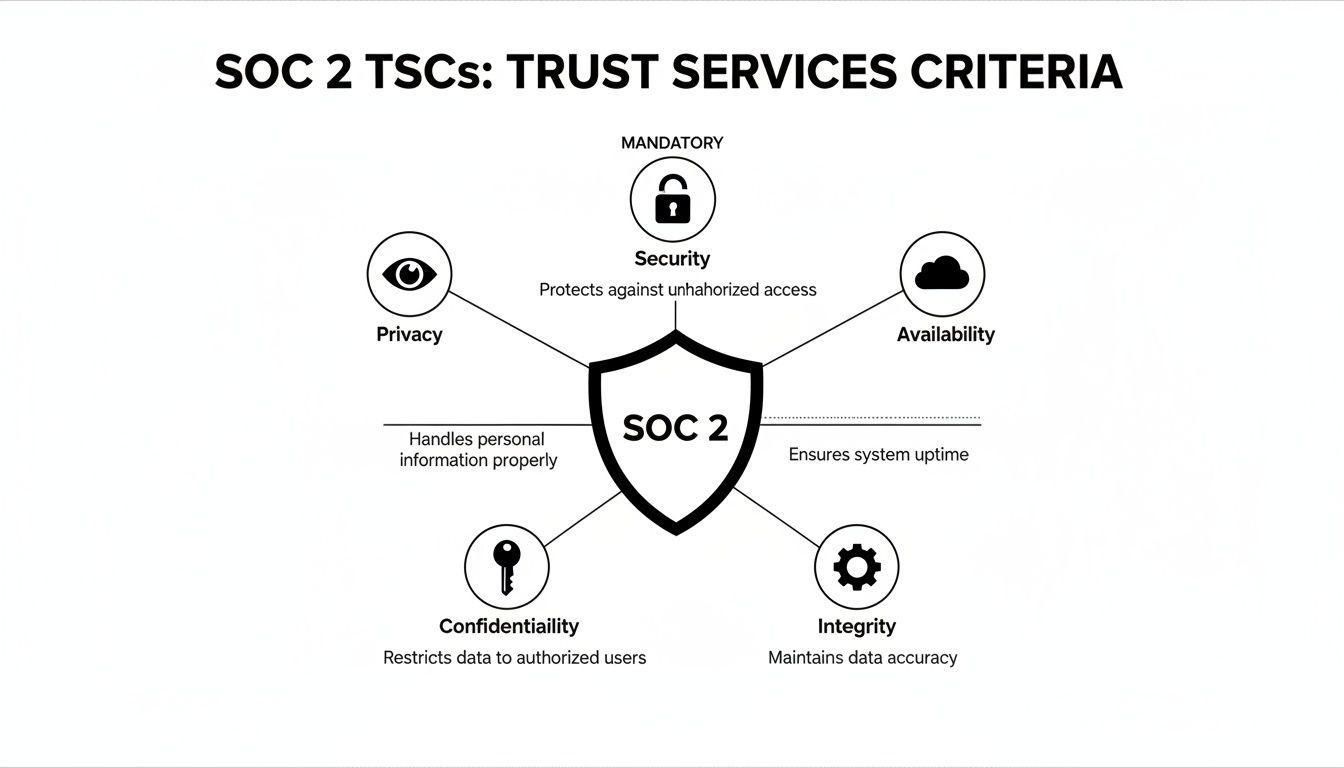

The heart of any SOC 2 audit is the Trust Services Criteria (TSCs). These are the principles your internal controls—both procedural and technical—will be measured against.

The only mandatory requirement is the Security criterion. This is the non-negotiable foundation of every SOC 2 audit. From there, you select additional criteria—Availability, Processing Integrity, Confidentiality, and Privacy—that align with your service commitments and customer contracts.

The Five Trust Services Criteria

The framework is built around one mandatory criterion and four optional ones you can choose from. This structure is what makes SOC 2 so adaptable to different technologies and business models.

Here’s a technical breakdown of each one to give you a clearer picture.

The Five SOC 2 Trust Services Criteria at a Glance

| Trust Services Criterion | Core Objective | Commonly Required For |
|---|---|---|
| Security (Mandatory) | Protect systems and data from unauthorized access, disclosure, and damage. | Every SOC 2 audit, no exceptions. This is the foundation. |
| Availability (Optional) | Ensure systems are available for use as agreed upon in contracts or SLAs. | Services with strict uptime guarantees, like IaaS, PaaS, or critical business apps. |
| Processing Integrity (Optional) | Ensure system processing is complete, accurate, timely, and authorized. | Financial platforms, e-commerce sites, or any app performing critical transactions. |
| Confidentiality (Optional) | Protect sensitive information (e.g., intellectual property, trade secrets) from unauthorized disclosure. | Companies handling proprietary business data, strategic plans, or other restricted info. |
| Privacy (Optional) | Protect Personally Identifiable Information (PII) through its entire lifecycle. | B2C companies, healthcare platforms, or any service collecting personal data from individuals. |

Your choice of TSCs has a huge impact on the scope and technical depth of your audit. This decision should be a direct reflection of your customer contracts, your system architecture, and the specific data flows you're responsible for.

I’ve seen teams make the mistake of trying to tackle all five TSCs to look "more compliant." A strong SOC 2 report isn't about quantity; it's about relevance. Including Processing Integrity for a simple data storage service just adds complexity and cost to the audit without providing any real value. Because an auditor will test controls for every TSC you select, each extra criterion means more controls to build, more evidence to collect, and more chances for exceptions in your report.

Choosing your TSCs wisely ensures the entire audit process stays focused, relevant, and gives a true picture of your security posture. It’s about proving you do what you say you do, where it counts the most for your customers.

Translating the Five Trust Services Criteria into Code

Knowing the theory behind the five Trust Services Criteria (TSCs) is one thing, but actually implementing them is a whole different ball game. This is where the rubber meets the road—where abstract compliance goals have to become real, auditable technical controls baked right into your systems and code.

It's all about mapping those high-level principles to concrete configurations, scripts, and architectural choices. So, let's break down how each of the five TSCs translates into tangible engineering tasks that an auditor can actually test and verify.

This visual shows how the five criteria fit together, with Security serving as the non-negotiable foundation for any SOC 2 report.

While every audit is built on Security, you'll choose the other criteria based on the specific services you offer and the kind of data you handle.

Security: The Mandatory Foundation

Security isn't optional; it's the bedrock of every single SOC 2 report. The entire point is to prove you're protecting your systems against unauthorized access, plain and simple.

From an engineering standpoint, this means building a defense-in-depth strategy.

- Network Segmentation: Implement a multi-VPC architecture, using separate Virtual Private Clouds (VPCs) and fine-grained subnets to isolate your production environment from development and staging. Enforce strict ingress/egress rules with network ACLs and security groups, allowing traffic only on necessary ports (e.g., TCP/443) from trusted sources.

- Intrusion Detection Systems (IDS): Enable a managed threat-detection service like Amazon GuardDuty, which analyzes VPC Flow Logs, DNS query logs, and CloudTrail events for anomalous activity, or deploy an open-source network IDS like Suricata to inspect traffic directly. Configure automated alerts that pipe findings into a dedicated incident response channel in Slack or create a PagerDuty incident for critical threats.

- Vulnerability Management: Integrate security testing directly into your CI/CD pipeline: static and dynamic application security testing (SAST/DAST) for your own code, plus dependency and container scanning for everything you pull in. Use tools like Snyk or Trivy to scan container images and third-party libraries for known CVEs as part of every build. Configure the pipeline to fail if high-severity vulnerabilities are detected.
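To make the "fail on high severity" rule concrete, here is a minimal Python sketch of a CI gate. It assumes a Trivy-style JSON report (a top-level `Results` list whose entries may carry a `Vulnerabilities` list with `Severity`, `VulnerabilityID`, and `PkgName` fields); if you use a different scanner, you would adapt the field names. The set of blocking severities is an example policy, not a SOC 2 requirement.

```python
import json
import sys

# Severities that block a build; this is an example policy, adjust it to yours.
BLOCKING_SEVERITIES = {"HIGH", "CRITICAL"}


def blocking_findings(report: dict) -> list[tuple[str, str, str]]:
    """Return (target, vulnerability ID, package) for every blocking finding.

    Assumes a Trivy-style JSON report: a top-level "Results" list whose entries
    may carry a "Vulnerabilities" list (which can be null or missing).
    """
    findings = []
    for result in report.get("Results") or []:
        for vuln in result.get("Vulnerabilities") or []:
            if str(vuln.get("Severity", "")).upper() in BLOCKING_SEVERITIES:
                findings.append((
                    result.get("Target", "?"),
                    vuln.get("VulnerabilityID", "?"),
                    vuln.get("PkgName", "?"),
                ))
    return findings


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for target, vuln_id, pkg in findings:
        print(f"BLOCKING: {vuln_id} in {pkg} ({target})")
    # A non-zero exit code is what makes the CI job fail.
    return 1 if findings else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a pipeline you might produce the report with `trivy image --format json --output report.json <image>` and run the script on `report.json`. Trivy can also enforce a gate on its own with `--exit-code 1 --severity HIGH,CRITICAL`; a small script like this mainly helps when you want custom filtering or a readable per-finding log to archive as audit evidence.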

Availability: Guaranteeing Uptime

If you promise customers a certain level of performance—usually defined in a Service Level Agreement (SLA)—then the Availability criterion is for you. The goal here is to prove your system is resilient and can handle failures without falling over.

Your technical controls need to reflect that promise:

- Automated Failover Architecture: Design your infrastructure to span multiple availability zones (AZs). Use managed services like AWS Application Load Balancers (ALBs) and auto-scaling groups to automatically reroute traffic and launch new instances if an instance or an entire AZ becomes unhealthy. For data tiers, use managed multi-AZ database services like Amazon RDS.

- Disaster Recovery (DR) Testing: Don't just write a DR plan; automate it. Use Infrastructure as Code to define a recovery environment and write scripts that simulate a full regional failover. Regularly test these scripts to measure your Recovery Time Objective (RTO) and Recovery Point Objective (RPO), ensuring you can restore from backups and meet your SLA commitments.

- Uptime Monitoring: Implement comprehensive monitoring using tools like Prometheus for metrics and alerting and Datadog for log aggregation and APM. Set up alerts on key service-level indicators (SLIs) like latency, error rates, and saturation. Make sure alerts fire well before an SLA breach: a 99.99% uptime guarantee, for example, leaves a budget of only about 4.3 minutes of downtime in a 30-day month.
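The arithmetic behind "alert before an SLA breach" is the error budget. This sketch computes the allowed downtime for an availability target and a burn rate you could alert on. The 14.4 default threshold is borrowed from the burn-rate alerting approach in Google's SRE Workbook (2% of a 30-day budget consumed in one hour) and is only a starting point; the function names are illustrative.

```python
MINUTES_PER_DAY = 24 * 60


def error_budget_minutes(slo: float, window_days: float) -> float:
    """Allowed downtime, in minutes, for an availability SLO over a window."""
    return (1.0 - slo) * window_days * MINUTES_PER_DAY


def burn_rate(error_ratio: float, slo: float) -> float:
    """How fast the error budget is being spent.

    1.0 spends the budget exactly by the end of the window; 10.0 spends it
    ten times faster, meaning the SLA would be breached early.
    """
    return error_ratio / (1.0 - slo)


def should_page(error_ratio: float, slo: float, threshold: float = 14.4) -> bool:
    # 14.4 = burning 2% of a 30-day budget in one hour (0.02 * 720 hours).
    # Treat it as an illustrative fast-burn threshold and tune it to your SLOs.
    return burn_rate(error_ratio, slo) >= threshold
```

For example, `error_budget_minutes(0.9999, 30)` comes out to about 4.32 minutes, which is why a 99.99% commitment leaves almost no room for slow, manual detection.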

Processing Integrity: Accurate and Reliable Transactions

Processing Integrity is all about ensuring that system processing is complete, accurate, and authorized. If you're building a financial platform, an e-commerce site, or anything where transaction correctness is absolutely critical, this one's for you.

Here’s how you build that trust into your code:

- Data Validation Checks: Implement strict server-side data validation using schemas (e.g., JSON Schema) in your APIs and ingestion pipelines. Ensure that any data failing validation is rejected with a clear error code (e.g., HTTP 400) and logged for analysis, preventing malformed data from corrupting your system.

- Robust Error Logging: When a transaction fails, you need to know why—immediately. Implement structured logging (e.g., JSON format) that captures the full context of the error, including a unique transaction ID, user ID, and stack trace. Centralize these logs and create automated alerts for spikes in specific error types.

- Transaction Reconciliation: Implement idempotent APIs to prevent duplicate processing. Set up automated reconciliation jobs that compare checksums or row counts between source and destination databases (e.g., between an operational PostgreSQL DB and a data warehouse) to programmatically identify discrepancies.
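A reconciliation job can be as simple as hashing each row on both sides and comparing by primary key. The sketch below assumes both result sets have already been fetched as lists of dicts with the same column names and normalized value types (for example, amounts as strings); the database queries are left out, and the `id` key is an assumption.

```python
import hashlib
import json


def row_digest(row: dict) -> str:
    """Stable SHA-256 of a row; keys are sorted so column order doesn't matter."""
    canonical = json.dumps(row, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: list[dict], destination: list[dict], key: str = "id") -> dict:
    """Compare two row sets by primary key and report every discrepancy."""
    src = {row[key]: row_digest(row) for row in source}
    dst = {row[key]: row_digest(row) for row in destination}
    return {
        "source_count": len(src),
        "destination_count": len(dst),
        # Rows that never arrived downstream.
        "missing_in_destination": sorted(src.keys() - dst.keys()),
        # Rows downstream that have no source of truth.
        "unexpected_in_destination": sorted(dst.keys() - src.keys()),
        # Rows present on both sides whose contents differ.
        "mismatched": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

Matching row counts alone can hide offsetting errors (one row missing, one duplicated), which is why the sketch compares per-row hashes as well. Storing each run's output gives you a dated record of the control operating.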

Confidentiality: Protecting Sensitive Data

Confidentiality is focused on protecting data that has been designated as, well, confidential. This isn't just customer data; we're talking about your intellectual property, internal financial reports, or secret business plans.

The controls here are all about preventing unauthorized disclosure:

- Encryption Everywhere: Mandate TLS 1.3 for all data in transit by configuring your load balancers and servers to reject older protocols. For data at rest, use a managed key service like AWS KMS to enforce encryption on all S3 buckets (SSE-KMS), EBS volumes, and RDS instances.

- Least-Privilege Access Control: Implement granular, role-based access control (RBAC). Use IAM policies and S3 bucket policies to enforce the principle of least privilege. For example, a service account for a data processing job should only have `s3:GetObject` permission for a specific bucket, not `s3:*`.

- Infrastructure as Code (IaC): Use tools like Terraform or CloudFormation to define and manage your infrastructure. This gives you a clear, version-controlled audit trail of who configured what and when, making it dead simple to prove your security settings are correct. To see what this looks like in practice, check out our guide on how to properly inspect Infrastructure as Code.
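Least privilege is also easy to check automatically. This sketch scans an IAM policy document (already parsed from JSON) for `Allow` statements with wildcard actions such as `s3:*` or a `*` resource. It is deliberately simple: it does not evaluate `NotAction`/`NotResource`, conditions, or partial wildcards like `s3:Get*`, so treat it as a lint step rather than a full policy analyzer.

```python
def _as_list(value) -> list:
    """IAM allows a single string or a list for Action/Resource; normalize it."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def wildcard_findings(policy: dict) -> list[str]:
    """Flag Allow statements that grant wildcard actions or resources."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements narrow access, so wildcards there are fine
        for action in _as_list(stmt.get("Action")):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        for resource in _as_list(stmt.get("Resource")):
            if resource == "*":
                findings.append(f"statement {i}: wildcard resource '*'")
    return findings
```

Running a check like this in CI against exported or Terraform-rendered policies gives you both a guardrail and a log you can hand to an auditor.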

Privacy: Safeguarding Personal Information

While Confidentiality is about protecting company secrets, Privacy is laser-focused on protecting Personally Identifiable Information (PII). This criterion overlaps heavily with the requirements of major regulations like GDPR and CCPA.

The technical implementations get very specific:

- PII Data Mapping: Use automated data discovery and classification tools to scan your databases and object stores to identify and tag columns or files containing PII. Maintain a data inventory that maps each PII element to its physical location, owner, and retention policy.

- Consent Mechanisms: Engineer granular, user-facing consent management features directly into your application's API and UI. Store user consent preferences (e.g., for marketing communications vs. analytics) as distinct boolean flags in your user database with a timestamp.

- Automated DSAR Workflows: Create automated workflows to handle Data Subject Access Requests (DSARs). Build scripts that query all PII-containing data stores for a given user ID and can programmatically export or delete that user's data, generating an audit log of the action.
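A DSAR workflow boils down to fanning one request out to every PII-holding store and recording what happened. This sketch uses a hypothetical `PiiStore` interface and an in-memory `InMemoryStore` stand-in; real adapters for your databases and object stores would implement the same two methods. The audit log records row counts per store rather than the exported data itself, to avoid copying PII into your logs.

```python
from datetime import datetime, timezone
from typing import Protocol


class PiiStore(Protocol):
    """Hypothetical interface that each PII-holding data store adapter implements."""

    name: str

    def export_user(self, user_id: str) -> list[dict]: ...

    def delete_user(self, user_id: str) -> int: ...


class InMemoryStore:
    """Toy adapter standing in for a real database or object store."""

    def __init__(self, name: str, rows: list[dict]):
        self.name = name
        self.rows = rows

    def export_user(self, user_id: str) -> list[dict]:
        return [row for row in self.rows if row.get("user_id") == user_id]

    def delete_user(self, user_id: str) -> int:
        before = len(self.rows)
        self.rows = [row for row in self.rows if row.get("user_id") != user_id]
        return before - len(self.rows)


def handle_dsar(stores: list[PiiStore], user_id: str, action: str, audit_log: list[dict]) -> dict:
    """Run an 'export' or 'delete' request across every store and log the outcome."""
    if action not in ("export", "delete"):
        raise ValueError(f"unsupported DSAR action: {action!r}")
    result, counts = {}, {}
    for store in stores:
        if action == "export":
            rows = store.export_user(user_id)
            result[store.name] = rows
            counts[store.name] = len(rows)
        else:
            deleted = store.delete_user(user_id)
            result[store.name] = deleted
            counts[store.name] = deleted
    # Log who/what/when plus per-store counts; never the PII itself.
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "action": action,
        "records": counts,
    })
    return result
```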

Choosing Between a SOC 2 Type I and Type II Report

Figuring out whether to go for a SOC 2 Type I or Type II report is more than just a compliance checkbox. It’s a strategic call that ripples across your engineering team, customer trust, and even how fast you can close deals.

The difference between them is pretty fundamental, but a simple analogy makes it crystal clear.

A Type I report is a photograph. It's a snapshot, capturing your security controls at one specific moment. An auditor comes in, looks at the design of your controls—your documented policies, your IaC configurations, your access rules—and confirms that, on paper, they look solid enough to meet the SOC 2 criteria you’ve chosen.

On the other hand, a Type II report is a video. Instead of a single snapshot, it records your controls in action over a longer stretch, usually three to twelve months. This report doesn’t just say your controls are designed well; it proves they’ve been working effectively, day in and day out.

The Startup Playbook: Type I as a Baseline

For an early-stage company, a Type I report is often the most pragmatic first move. It’s faster, costs less, and is a great way to unblock those early sales conversations with enterprise customers who need some kind of security validation to move forward.

Think of it as a readiness assessment that comes with an official stamp of approval. The process forces your engineering team to get its house in order by documenting processes, hardening systems, and putting the foundational controls in place for a real security program. It gives you a solid baseline and shows prospects you’re serious, all without the long, drawn-out observation period a Type II demands.

Why Enterprises Demand Type II

As you grow, so do your customers' expectations. A Type I shows you have a good plan, but a Type II proves your plan actually works. Big companies, especially those in financial services, healthcare, or other regulated fields, almost always require a Type II. They need rock-solid assurance that your security controls aren’t just theoretical—they've been consistently enforced over time.

A Type I report might get you past the initial security questionnaire, but a Type II report is what closes the deal. It provides independent, third-party evidence of how your security controls performed over time, making it the gold standard for vendor due diligence.

The engineering lift for a Type II is much heavier, no doubt about it. It means months of meticulous evidence collection—pulling logs from CI/CD pipelines, digging up Jira tickets for change management, and grabbing cloud configuration snapshots to show everything is operating as it should. The audit is more intense and the cost is higher, but the trust it builds is priceless.

A Clear Decision Framework

So, which one is right for you? It really boils down to your company's stage, your resources, and what your customer contracts demand.

A Type I is your best bet for a quick win to establish a security baseline and get sales moving. A Type II is the long-term investment you make to land and keep those big enterprise fish.

Automating Evidence Collection for Your Audit

A successful SOC 2 audit boils down to one thing: rock-solid evidence. You can't just scramble at the last minute to prove your controls were working six months ago. That approach is a recipe for failure.

The real key is to get ahead of the audit. You need to shift from reactive data hunting to proactive, automated evidence collection. It’s about baking compliance right into your daily engineering workflows, not treating it as a once-a-year fire drill.

This journey starts by defining a crystal-clear audit scope. Think of it as drawing a boundary around everything the auditors will examine—every system, piece of infrastructure, and code repo that falls under your chosen Trust Services Criteria. Get this right, and you eliminate surprises down the road.

This proactive stance isn't just a nice-to-have; it's a necessity. Gaps in monitoring evidence are a common source of audit exceptions, and in a Type II audit the auditor samples evidence from across the entire observation period. Controls that only started operating in the weeks before fieldwork will show up as gaps.

Defining Your Audit Scope

Before you can collect a single piece of evidence, you need to know exactly what the auditors are going to look at. This isn't just a quick list of servers; it's a complete inventory of your entire service delivery environment.

- System and Infrastructure Mapping: Use a Configuration Management Database (CMDB) or even a version-controlled YAML file to document all your production servers, databases, cloud services (like AWS S3 buckets or RDS instances), and networking components. Link each asset to the TSCs it supports (e.g., your load balancers are key evidence for Availability).

- Code Repository Identification: Pinpoint the specific Git repositories that house your application code, Infrastructure as Code (IaC), and deployment scripts for any in-scope systems. Use a `CODEOWNERS` file, paired with branch protection rules that require code owner review, to formally define ownership and review requirements for critical repositories.

- Data Flow Diagrams: Create and maintain diagrams (using a tool like Lucidchart or Diagrams.net) that map how sensitive data moves through your systems, including entry points, processing steps, and storage locations. This is critical for proving controls for the Confidentiality and Privacy criteria.
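If you keep the inventory in a version-controlled YAML or JSON file, a small check in CI can keep it honest. This sketch assumes each asset has already been loaded (e.g., with `json.load`, or PyYAML's `yaml.safe_load`) into a dict with hypothetical `name`, `owner`, and `tscs` fields. It verifies that every asset has an owner, is linked to at least one TSC, and references only criteria you have actually put in scope.

```python
VALID_TSCS = {"Security", "Availability", "Processing Integrity", "Confidentiality", "Privacy"}


def validate_inventory(assets: list[dict], selected_tscs: set[str]) -> list[str]:
    """Return human-readable problems with an in-scope asset inventory."""
    problems = []
    unknown = selected_tscs - VALID_TSCS
    if unknown:
        problems.append(f"unknown TSC names in scope: {sorted(unknown)}")
    if "Security" not in selected_tscs:
        problems.append("Security criterion must always be in scope")
    seen = set()
    for asset in assets:
        name = asset.get("name", "<unnamed>")
        if name in seen:
            problems.append(f"{name}: duplicate entry")
        seen.add(name)
        if not asset.get("owner"):
            problems.append(f"{name}: missing owner")
        tscs = set(asset.get("tscs", []))
        if not tscs:
            problems.append(f"{name}: not linked to any TSC")
        # Assets should only point at criteria you have actually chosen to audit.
        for tsc in sorted(tscs - selected_tscs):
            problems.append(f"{name}: references out-of-scope TSC {tsc!r}")
    return problems
```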

Identifying Key Evidence Sources

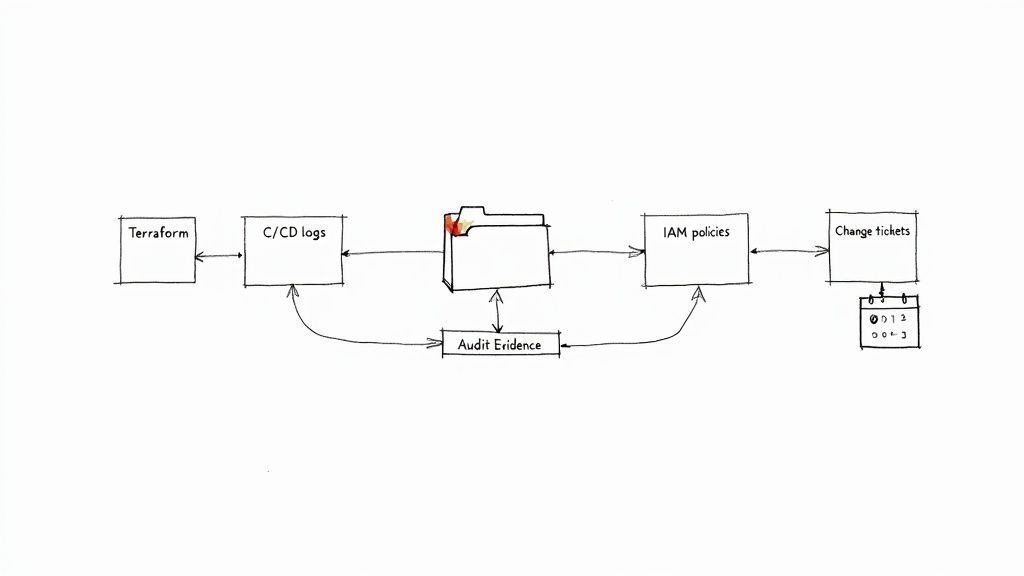

With your scope locked in, the next step is to figure out where your evidence actually lives. For modern engineering teams, this data is generated constantly by the tools you’re already using every single day. The trick is knowing what to grab.

Auditors aren't looking for vague promises; they want concrete proof that your controls are working as designed. This means tangible artifacts, like:

- Infrastructure as Code (IaC) Configurations: Your Terraform or CloudFormation files are pure gold. They provide a version-controlled, declarative record of how your cloud environment is configured, proving your security settings are intentional and consistently applied.

- CI/CD Pipeline Logs: Logs from tools like Jenkins, GitLab CI, or GitHub Actions are a treasure trove. They show that code changes went through automated testing, security scans, and required approvals before ever touching production.

- Cloud IAM Policies: Exported JSON policies from AWS IAM or similar services in GCP and Azure are direct evidence of your access control rules. They're undeniable proof of how you enforce the principle of least privilege.

- Change Management Tickets: Tickets in Jira or Linear that are linked to pull requests tell the human story behind a change. They show the business justification, peer review, and final approval, satisfying crucial change management requirements.
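Because auditors sample changes, it helps to check every merged pull request for the two things they usually look for: a linked ticket and an approval from someone other than the author. The PR records here are hypothetical dicts (`number`, `title`, `branch`, `body`, `author`, `approvals`) that you would assemble from your Git host's API, and the regex assumes Jira-style keys such as `OPS-123`.

```python
import re

# Jira-style issue keys such as "OPS-123" (Linear identifiers use a similar shape).
TICKET_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def change_management_gaps(pull_requests: list[dict]) -> list[str]:
    """Flag PRs with no linked ticket or no approval independent of the author."""
    gaps = []
    for pr in pull_requests:
        label = f"PR #{pr['number']}"
        text = f"{pr.get('title', '')} {pr.get('branch', '')} {pr.get('body', '')}"
        if not TICKET_KEY.search(text):
            gaps.append(f"{label}: no linked ticket")
        # A self-approval does not count as peer review.
        approvers = {a for a in pr.get("approvals", []) if a != pr.get("author")}
        if not approvers:
            gaps.append(f"{label}: no independent approval")
    return gaps
```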

Mapping DevOps Practices to SOC 2 Controls

The good news is that your existing DevOps practices are likely already generating the evidence you need. It's just a matter of connecting the dots. By mapping your CI/CD pipelines, IaC workflows, and monitoring setups to specific SOC 2 controls, you can turn your everyday operations into a compliance machine.

This table shows how some common DevOps activities directly support SOC 2 requirements.

| SOC 2 Common Criteria | DevOps Practice Example | Evidence to Collect |
|---|---|---|
| CC6.1 (Logical Access) | Role-Based Access Control (RBAC) via AWS IAM, managed with Terraform. | Terraform code defining IAM roles and policies; screenshots of IAM console. |
| CC7.1 (Configuration Monitoring) | Infrastructure as Code (IaC) to define and enforce security group rules. | `*.tf` files showing security group configurations; `terraform plan` outputs. |
| CC8.1 (Change Management) | CI/CD Pipeline with required PR approvals and automated security scans. | Pull request history with reviewer approvals; CI pipeline logs (e.g., GitHub Actions). |
| CC7.2 (System Monitoring) | Observability Platform (e.g., Datadog, Grafana) with alerting on critical events. | Alert configurations; logs showing alert triggers and responses. |
| CC8.1 (Change Testing) | Automated Testing in the CI pipeline (unit, integration, vulnerability scans). | Test reports from the CI pipeline (e.g., SonarQube, Snyk); build logs. |

By viewing your DevOps toolchain through a compliance lens, you'll find that you’re already well on your way. The challenge isn't creating new processes from scratch, but rather learning how to capture and present the evidence from the robust processes you already have.

Implementing Automation for Continuous Collection

Trying to gather all this evidence manually is a surefire path to audit fatigue and human error. The goal is to automate this process so that evidence is continuously collected, organized, and ready for auditors the moment they ask for it.

Automation transforms SOC 2 evidence collection from a painful, periodic event into a seamless, background process. It's the difference between frantically digging through archives and simply pointing an auditor to a pre-populated, organized repository of proof.

You can get this done using scripts or specialized compliance platforms that tap into the APIs of your existing tools. Set up scheduled jobs (e.g., cron jobs or Lambda functions) to automatically pull pipeline logs, fetch IAM role configurations from the AWS API, and archive Jira tickets via webhooks. Store all this evidence in a secure, centralized S3 bucket with versioning and locked-down access controls.
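One way to make collected evidence trustworthy is to record a SHA-256 hash of every file at collection time. This sketch builds a `manifest.json` for a local evidence directory and can later re-verify it; the directory layout and control ID label are assumptions, and the upload to your versioned S3 bucket is left out.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_file(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):  # stream large logs
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(evidence_dir: Path, control_id: str) -> dict:
    """Hash every evidence file under a directory (excluding the manifest)."""
    files = sorted(p for p in evidence_dir.rglob("*") if p.is_file() and p.name != "manifest.json")
    return {
        "control_id": control_id,
        "collected_at": datetime.now(timezone.utc).isoformat(),
        "files": {str(p.relative_to(evidence_dir)): sha256_file(p) for p in files},
    }


def write_manifest(evidence_dir: Path, control_id: str) -> Path:
    out = evidence_dir / "manifest.json"
    out.write_text(json.dumps(build_manifest(evidence_dir, control_id), indent=2, sort_keys=True), encoding="utf-8")
    return out


def verify_manifest(evidence_dir: Path) -> list[str]:
    """Return files that are missing or whose hash no longer matches the manifest."""
    manifest = json.loads((evidence_dir / "manifest.json").read_text(encoding="utf-8"))
    changed = []
    for rel, expected in manifest["files"].items():
        path = evidence_dir / rel
        if not path.is_file() or sha256_file(path) != expected:
            changed.append(rel)
    return sorted(changed)
```

Storing the manifest alongside the evidence in a versioned bucket means an auditor can confirm that a log pulled months ago is byte-for-byte what was collected.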

By doing this, you're building an audit trail that accumulates around the clock. It makes the audit itself a simple verification exercise rather than a massive investigative project. Our guide to continuous monitoring dives deeper into how to build these kinds of automated systems.

Embedding SOC 2 Controls in Your DevOps Workflow

Getting SOC 2 compliant shouldn't feel like a separate, soul-crushing task tacked on at the end. The most effective—and frankly, the most sane—way to meet SOC 2 requirements is to stop treating them like an external checklist. Instead, bake the controls directly into the DevOps lifecycle your team already lives and breathes every day.

When you do this, compliance becomes a natural outcome of great engineering, not a disruptive event you have to brace for. It's a mindset shift: security and compliance checks become just another part of the software delivery process, from the first line of code to the final deployment. This way, you build a system where compliance is automated, continuous, and woven right into your engineering culture.

Automating Security in the CI/CD Pipeline

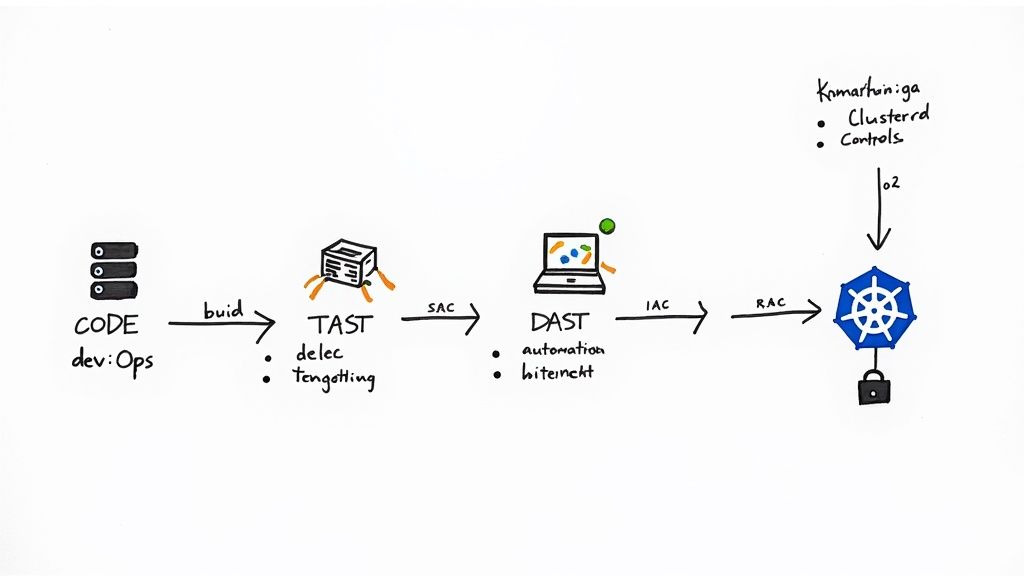

Your CI/CD pipeline is the central nervous system of your entire development process. That makes it the perfect place to automate security controls that would otherwise be a massive manual headache. Instead of just relying on human code reviews, you can integrate automated tools to act as vigilant gatekeepers.

- Static Application Security Testing (SAST): Tools like SonarQube or Snyk Code can be plugged right into your pipeline. They scan your source code for vulnerabilities before it ever gets merged, catching things like SQL injection or insecure configurations at the earliest possible moment. A build should automatically fail if high-severity issues pop up.

- Dynamic Application Security Testing (DAST): After your application is built and humming along in a staging environment, DAST tools like OWASP ZAP can actively poke and prod it for weaknesses. This simulates a real-world attack, uncovering runtime flaws that static analysis might miss.

This "shift-left" approach turns security into a shared responsibility, not just a problem for the security team to clean up later. It gives developers instant feedback, helping them learn and write more secure code from the get-go.

Infrastructure as Code as an Audit Trail

Infrastructure as Code (IaC) is one of your biggest allies for SOC 2 compliance. When you use tools like Terraform or CloudFormation to define your entire cloud environment in version-controlled files, you create an undeniable, time-stamped audit trail for your infrastructure.

Every single change, from tweaking a firewall rule to updating an IAM policy, is captured in a commit. This directly supports critical change management requirements. An auditor can look at your Git history and pull request records to see who made a change, what the change was, and who approved it. What was once a painful audit request becomes a simple matter of record.

IaC transforms your infrastructure from a manually configured, hard-to-track mess into a declarative, auditable, and repeatable asset. It’s not just a DevOps best practice; it's a compliance superpower.
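Git history shows that an IaC file changed; a plan shows what that change will actually do. This sketch reads the JSON form of a Terraform plan (`resource_changes`, each with a `type`, an `address`, and `change.actions`) and flags pending changes to security-sensitive resource types so they can be routed to an extra reviewer. The set of sensitive types and the script name in the comment are examples you would tailor.

```python
import json
import sys

# Example resource types whose changes should get a security reviewer; tailor this set.
SENSITIVE_TYPES = {
    "aws_security_group",
    "aws_security_group_rule",
    "aws_iam_policy",
    "aws_iam_role",
    "aws_iam_role_policy",
    "aws_kms_key",
}

# Plan actions that do not modify infrastructure.
NON_CHANGING = (["no-op"], ["read"])


def sensitive_changes(plan: dict) -> list[str]:
    """Return 'address: actions' for each sensitive resource the plan would modify."""
    flagged = []
    for rc in plan.get("resource_changes", []):
        actions = rc.get("change", {}).get("actions", [])
        if rc.get("type") in SENSITIVE_TYPES and actions and actions not in NON_CHANGING:
            flagged.append(f"{rc['address']}: {'/'.join(actions)}")
    return flagged


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: terraform plan -out=tfplan && terraform show -json tfplan > plan.json
    #        python check_plan.py plan.json   (hypothetical script name)
    with open(sys.argv[1], encoding="utf-8") as fh:
        changes = sensitive_changes(json.load(fh))
    print("\n".join(changes) or "no security-sensitive changes")
    sys.exit(1 if changes else 0)
```

Archiving this output with each pull request ties the "what changed" in Git to the "what it did" in your cloud account.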

Leveraging Observability for Security Monitoring

Modern observability platforms are no longer just for tracking application performance; they're essential for meeting SOC 2’s monitoring and incident response requirements. Tools like Datadog, Prometheus, or Grafana Loki give you the visibility needed to spot and react to security events.

To make these tools truly SOC 2-ready, you need to configure them to:

- Collect Security-Relevant Logs: Make sure you're pulling in logs from your cloud provider (like AWS CloudTrail), your applications, and the underlying operating systems.

- Create Security-Specific Alerts: Set up alerts for suspicious activity. Think multiple failed login attempts, unauthorized API calls, or changes to critical security groups.

- Establish Incident Response Dashboards: Build a single pane of glass for security incidents. This helps your team quickly assess what's happening and respond effectively.

This proactive monitoring proves to auditors that you have real-time controls in place to detect and handle potential security issues. This is absolutely critical, especially since misconfigured controls are a massive source of security failures. In fact, a Gartner analysis found that a staggering 93% of cloud breaches come from these kinds of misconfigurations, showing just how vital robust, automated monitoring really is. You can learn more by reviewing best practices for continuous monitoring.
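As an example of a security-specific alert, here is a sliding-window detector for repeated failed logins over structured JSON logs. The field names (`ts`, `event`, `user`, `outcome`), the five-failures-in-five-minutes threshold, and the assumption that lines arrive in time order are all illustrative; in practice you would usually express this as a query or monitor in your observability platform.

```python
import json
from collections import defaultdict, deque
from datetime import datetime, timedelta


def failed_login_alerts(log_lines, threshold: int = 5, window: timedelta = timedelta(minutes=5)):
    """Return (user, timestamp) each time a user reaches `threshold` failures within `window`."""
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    alerts = []
    for line in log_lines:
        record = json.loads(line)
        if record.get("event") != "login" or record.get("outcome") != "failure":
            continue
        ts = datetime.fromisoformat(record["ts"])
        failures = recent[record["user"]]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while failures and ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            alerts.append((record["user"], ts))
            failures.clear()  # one alert per burst instead of one per extra failure
    return alerts
```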

Implementing Least Privilege with RBAC

The principle of least privilege is a cornerstone of the SOC 2 Security criterion. The idea is simple: grant users only the access they absolutely need to do their jobs, and nothing more. In modern cloud and Kubernetes environments, Role-Based Access Control (RBAC) is how you make this happen.

For example, in Kubernetes, you can create specific Roles and ClusterRoles that grant permissions only to the necessary resources. A developer might be allowed to view pods in a development namespace but be completely blocked from touching production secrets.

By managing these RBAC policies as code and checking them into Git, you create yet another auditable record. It proves your access controls are intentional, reviewed, and consistently enforced.
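Here is a sketch of the developer example above, generated from Python so it can be reviewed and committed like any other code. It emits a namespaced `Role` that can only `get`, `list`, and `watch` pods in a `development` namespace, plus a simple check that flags rules touching `secrets` or using wildcards. The role name is illustrative, and you would still need a `RoleBinding` to attach the role to your developer group.

```python
import json


def read_only_pod_role(namespace: str, name: str = "pod-reader") -> dict:
    """A namespaced Role that can only read pods: no secrets, no writes."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            # "" is the core API group, where pods live.
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]},
        ],
    }


def risky_rules(role: dict) -> list[str]:
    """Flag rules that touch secrets or use wildcards, for review in CI."""
    findings = []
    for i, rule in enumerate(role.get("rules", [])):
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        if "*" in resources or "*" in verbs:
            findings.append(f"rule {i}: wildcard resources or verbs")
        if "secrets" in resources:
            findings.append(f"rule {i}: grants access to secrets")
    return findings


if __name__ == "__main__":
    # kubectl accepts JSON manifests, so this output can be committed and applied.
    print(json.dumps(read_only_pod_role("development"), indent=2))
```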

Your Technical SOC 2 Readiness Checklist

Getting started with a SOC 2 audit can feel like a huge undertaking, especially for an engineering team. The key is to think of it less like chasing a certificate and more like systematically building and proving a culture of security. With a clear roadmap, the whole process transforms from a daunting obstacle into a manageable project with a real finish line.

And this isn't just an internal exercise anymore. The demand for this level of security assurance is fast becoming table stakes. Globally, 65% of organizations say their buyers and partners are flat-out asking for SOC 2 attestation as proof of solid security. You can find more stats on this trend over at marksparksolutions.com. This shift makes a structured readiness plan a must-have to stay competitive.

Phase 1: Scoping and Planning

This first phase is all about laying the groundwork. Getting this right from the jump saves a ton of headaches, scope creep, and wasted effort down the road.

- Define Audit Boundaries: First things first, you need to draw a line around what's "in-scope." This means identifying every single system, app, database, and piece of infrastructure that touches customer data.

- Select Trust Services Criteria (TSCs): Figure out which TSCs actually matter to your business and your customer commitments. The Security criterion is mandatory, but don't just pile on others like Processing Integrity if it doesn't apply to what you do.

- Perform a Gap Analysis: Now, take a hard look at your current controls and measure them against the TSCs you've chosen. This initial pass will quickly shine a light on any missing policies, insecure setups, or gaps in your process that need attention.

A big piece of this phase also involves proactive technical debt management. If you let that stuff fester, it can seriously undermine the security and reliability you're trying to prove.

Phase 2: Control Implementation and Remediation

Once you know where the gaps are, it's time to close them. This is where your engineering team rolls up their sleeves and turns policy into actual, tangible technical fixes.

This is where the real work happens. It’s not about writing documents; it’s about shipping secure code, hardening infrastructure, and creating the automated guardrails that make compliance a default state, not a manual effort.

- Remediate Security Gaps: Start knocking out the issues you found in the gap analysis. This could mean finally rolling out multi-factor authentication (MFA) everywhere, patching those vulnerable libraries you've been putting off, or hardening your container images.

- Document Everything: Create clear, straightforward documentation for every control. Think architectural diagrams, process write-ups, and incident response runbooks. Make it easy for an auditor (and your future self) to understand what you've built.

- Conduct Internal Testing: Before the real auditors show up, be your own toughest critic. Run your own vulnerability scans and review access logs to make sure the controls are actually working as you expect. Our own production readiness checklist is a great place to start for this kind of internal validation.
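Gap remediation is easier to track when the gap is measurable. This sketch parses an AWS IAM credential report (retrieved with `aws iam get-credential-report`, whose `Content` field is base64-encoded CSV) and lists console users without MFA. It assumes the report's `user`, `password_enabled`, and `mfa_active` columns, and it treats the root account, which reports `password_enabled` as `not_supported`, as always requiring MFA.

```python
import csv
import io


def users_missing_mfa(report_csv: str) -> list[str]:
    """Return console users (and root) that have no active MFA device.

    Assumes the "true"/"false" string values used in the AWS IAM credential report.
    """
    missing = []
    for row in csv.DictReader(io.StringIO(report_csv)):
        is_root = row["user"] == "<root_account>"
        # Users without a console password (e.g., CI bots) can't use console MFA.
        console_user = row["password_enabled"] == "true"
        if (is_root or console_user) and row["mfa_active"] != "true":
            missing.append(row["user"])
    return missing
```

Running this before and after remediation, and saving both outputs, gives you a simple record that the MFA gap was found and closed.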

Phase 3: Continuous Monitoring and Audit Preparation

With your controls in place, the focus shifts from one-off fixes to ongoing maintenance and evidence gathering. Now you have to prove that your controls have been working effectively over a period of time.

- Automate Evidence Collection: Don't do this manually. Set up automated jobs to constantly pull logs, configuration snapshots, and other pieces of evidence. Shove it all into a secure, organized place where you can find it easily.

- Schedule Regular Reviews: Put recurring reviews on the calendar for things like access rights, firewall rules, and security policies. This ensures they stay effective and don't get stale.

- Engage Your Auditor: Start a conversation with your chosen audit firm early. You can provide them with your system descriptions and some initial evidence to get their feedback. It’s a great way to streamline the formal audit and avoid any last-minute surprises.

Got Questions About SOC 2 Requirements? We've Got Answers.

When you're trying to square SOC 2 compliance goals with the technical reality of building and running software, a lot of questions pop up. It’s totally normal. Here are some of the most common ones we hear from engineering and leadership teams.

How Long Does a SOC 2 Audit Actually Take?

This is the big one, and the honest answer is: it depends. The timeline for a SOC 2 audit can vary wildly based on where you're at and which type of report you're going for.

A Type I audit is just a snapshot in time. Once you’re prepped and ready, the assessment itself can often be wrapped up in a few weeks. It's the quicker option, for sure.

But a Type II audit is a different beast altogether. This one needs an observation period, usually lasting anywhere from three to twelve months, to prove your controls are actually working day-in and day-out. After that period closes, tack on another four to eight weeks for the auditor to do their thing—testing, documenting, and finally generating the report. Your team's readiness and the complexity of your stack are the biggest swing factors here.

So, is SOC 2 a Certification?

Nope. And this is a really important distinction to understand. SOC 2 is an attestation report, not a certification.

A certification usually means you’ve ticked the boxes on a rigid, one-size-fits-all checklist. An attestation, however, is a formal opinion from an independent CPA firm. They're verifying that your specific controls meet the Trust Services Criteria you've chosen. It's a much more nuanced evaluation of your unique security posture.

Think of it like this: a certification is like passing a multiple-choice exam. A SOC 2 attestation is more like defending a thesis—it’s a deep, comprehensive evaluation of your specific environment, not just a pass/fail grade against a generic list.

Is a SOC 2 Report Enough Anymore?

While SOC 2 is still the gold standard for many, it's increasingly seen as the foundation, not the finish line. The compliance landscape is getting more crowded, and as threats get more sophisticated, just having a SOC 2 report might not be enough to satisfy every customer.

Organizations are now layering multiple frameworks to build deeper trust. In fact, some recent data shows that 92% of organizations now juggle at least two different compliance audits or assessments each year, with a whopping 58% tackling four or more. You can dig into the full story in A-LIGN’s latest benchmark report on compliance standards in 2025. The takeaway is clear: we're moving toward a multi-framework world.

Getting SOC 2 right requires a ton of DevOps and cloud security know-how. OpsMoon brings the engineering talent and strategic guidance to weave compliance right into your daily workflows, turning what feels like a roadblock into a real competitive edge. Let's map out your compliance journey with a free work planning session.