7 Secrets Management Best Practices for DevOps in 2025

Discover 7 essential secrets management best practices. This guide covers policies, tools, and automation to secure credentials in your DevOps workflows.

In modern software development, managing sensitive credentials like API keys, database connection strings, and TLS certificates is a foundational security challenge. The fallout from a single leaked secret can be severe: data breaches, financial losses, and lasting damage to user trust. As development velocity increases with CI/CD and ephemeral environments, the risk of secret sprawl, accidental commits, and unauthorized access grows with every new pipeline, service, and environment.

Simply relying on .env files, configuration management tools, or environment variables is a fundamentally flawed approach that fails to scale and provides minimal security guarantees. This outdated method leaves credentials exposed in source control, build logs, and developer workstations, creating a massive attack surface. A robust security posture demands a more sophisticated and centralized strategy.

This guide provides a comprehensive breakdown of seven critical secrets management best practices designed for modern engineering teams. We will move beyond high-level advice and dive into technical implementation details, specific tooling recommendations, and actionable automation workflows. You will learn how to build a secure, auditable, and scalable secrets management foundation that protects your software delivery lifecycle from end to end. By implementing these practices, you can effectively mitigate credential-based threats, enforce strict access controls, and ensure your applications and infrastructure remain secure in any environment, from local development to large-scale production deployments.

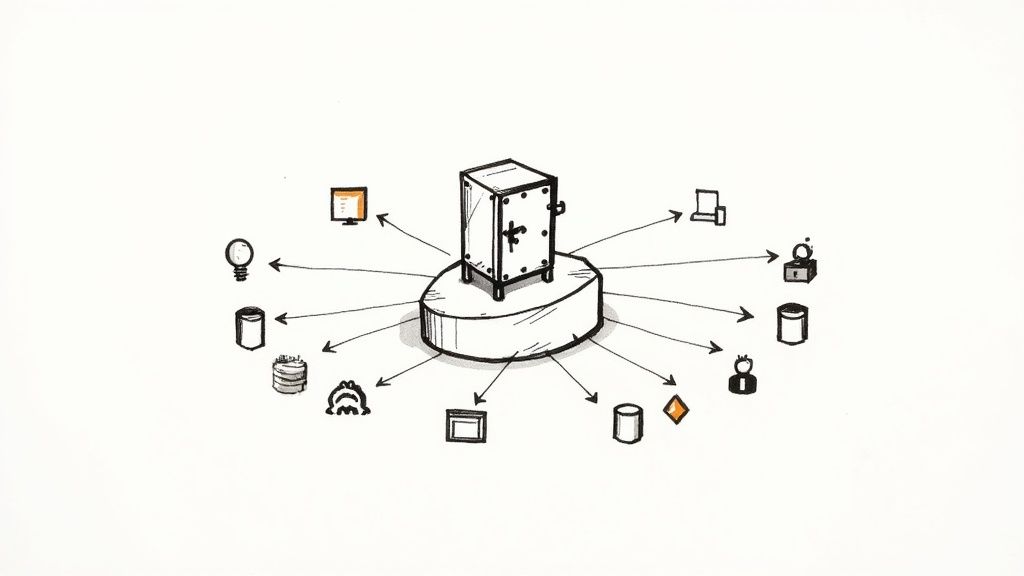

1. Use Dedicated Secrets Management Systems

Moving secrets out of code and simple file stores into a purpose-built platform enforces centralized control, auditing and lifecycle management. Dedicated systems treat API keys, certificates and database credentials as first-class citizens with built-in encryption, dynamic leasing and granular policy engines.

What It Is and How It Works

A dedicated secrets management system provides:

- Central Vaults: A centralized repository for all secrets, encrypted at rest using strong algorithms like AES-256-GCM. The encryption key is often protected by a Hardware Security Module (HSM) or a cloud provider's Key Management Service (KMS).

- Policy-as-Code: Granular access control policies, often defined in a declarative format like HCL (HashiCorp Configuration Language) or JSON. These policies control which identity (user, group, or application) can perform `create`, `read`, `update`, `delete`, or `list` operations on specific secret paths.

- Audit Devices: Immutable audit logs detailing every API request and response. These logs can be streamed to a SIEM for real-time threat detection.

- Dynamic Secrets: The ability to generate on-demand, short-lived credentials for systems like databases (PostgreSQL, MySQL) or cloud providers (AWS IAM, Azure). The system automatically creates and revokes these credentials, ensuring they exist only for the duration needed.

When an application needs a credential, it authenticates to the secrets manager using a trusted identity mechanism (e.g., Kubernetes Service Account JWT, AWS IAM Role, TLS certificate). Upon successful authentication, it receives a short-lived token which it uses to request its required secrets. This eliminates long-lived, hardcoded credentials.
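The authenticate-then-fetch flow described above can be sketched as two HTTP requests. This is a minimal illustration, assuming HashiCorp Vault with the Kubernetes auth method mounted at its default `kubernetes` path and a KV v2 engine mounted at `secret`. The address and the function names are hypothetical, and the requests are only built here, not sent.

```python
import json
import urllib.request

VAULT_ADDR = "https://vault.example.internal:8200"  # hypothetical address


def build_login_request(role: str, jwt: str) -> urllib.request.Request:
    """Build the Kubernetes-auth login call; the response carries auth.client_token."""
    body = json.dumps({"role": role, "jwt": jwt}).encode("utf-8")
    return urllib.request.Request(
        f"{VAULT_ADDR}/v1/auth/kubernetes/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_read_request(client_token: str, path: str) -> urllib.request.Request:
    """Build a secret read; the short-lived token travels in the X-Vault-Token header."""
    return urllib.request.Request(
        f"{VAULT_ADDR}/v1/{path}",
        headers={"X-Vault-Token": client_token},
        method="GET",
    )
```

In a real service the first request would be sent with the pod's service account JWT, and the returned token would be used for the second request until its TTL expires.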

Successful Implementations

- Netflix (HashiCorp Vault):

- Utilizes Vault's dynamic secrets engine to generate ephemeral AWS IAM roles and database credentials for its microservices.

- Integrates Vault with Spinnaker for secure credential delivery during deployment pipelines.

- Pinterest (AWS Secrets Manager):

- Migrated from plaintext config files, using AWS SDKs to fetch secrets at runtime.

- Leverages built-in rotation functions via AWS Lambda to refresh database credentials every 30 days without manual intervention.

- Shopify (CyberArk Conjur):

- Implements machine identity and RBAC policies to secure its Kubernetes-based production environment.

- Uses a sidecar injector pattern to mount secrets directly into application pods, abstracting the retrieval process from developers.

When and Why to Use This Approach

- You operate in a multi-cloud or hybrid environment and need a unified control plane for secrets.

- You need to meet compliance requirements like PCI DSS, SOC 2, or HIPAA, which mandate strict auditing and access control.

- Your architecture is dynamic, using ephemeral infrastructure (e.g., containers, serverless functions) that requires programmatic, just-in-time access to secrets.

Adopting a dedicated system is a cornerstone of secrets management best practices when you require scalability, compliance and zero-trust security.

Actionable Tips for Adoption

- Evaluate Technical Fit:

- HashiCorp Vault: Self-hosted, highly extensible, ideal for hybrid/multi-cloud.

- AWS Secrets Manager: Fully managed, deep integration with the AWS ecosystem.

- Azure Key Vault: Managed service, integrates tightly with Azure AD and other Azure services.

- Plan a Phased Migration:

- Start by onboarding a new, non-critical service to establish patterns.

- Use a script or tool to perform a bulk import of existing secrets from `.env` files or config maps into the new system.

- Implement Automated Sealing/Unsealing:

- For self-hosted solutions like Vault, configure auto-unseal using a cloud KMS (AWS KMS, Azure Key Vault, GCP KMS) to ensure high availability and remove operational bottlenecks.

- Codify Your Configuration:

- Use Terraform or a similar IaC tool to manage the configuration of your secrets manager, including policies, auth methods, and secret engines. This makes your setup repeatable and auditable.
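For the bulk import step in the phased migration, a small parser is often enough to get started. The sketch below assumes simple `KEY=VALUE` lines and a hypothetical `secret/<team>/<app>/<key>` path layout. It only prepares the data; writing it to the secrets manager is left to whichever client you use.

```python
def parse_env_file(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines; skips blank lines and # comments."""
    secrets = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY=VALUE line
        value = value.strip()
        # Strip one pair of matching surrounding quotes.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        secrets[key.strip()] = value
    return secrets


def to_secret_paths(secrets: dict[str, str], team: str, app: str) -> dict[str, str]:
    """Map each key to a path like secret/<team>/<app>/<key> (layout is an assumption)."""
    return {f"secret/{team}/{app}/{k.lower()}": v for k, v in secrets.items()}
```

Multi-line values, escapes, and variable interpolation are not handled here; files that use them need a fuller parser.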

"A dedicated secrets manager transforms credentials from a distributed liability into a centralized, controllable, and auditable asset."

By replacing static storage with a dedicated secrets engine, engineering teams gain visibility, auditability and fine-grained control—all key pillars of modern secrets management best practices.

2. Implement Automatic Secret Rotation

Static, long-lived credentials are a significant security liability. Automating the rotation process systematically changes secrets without manual intervention, dramatically shrinking the window of opportunity for attackers to exploit a compromised credential and eliminating the risk of human error.

What It Is and How It Works

Automatic secret rotation is a process where a secrets management system programmatically revokes an old credential and generates a new one at a predefined interval. This is achieved through integrations with target systems.

The technical workflow typically involves:

- Configuration: You configure a rotation policy on a secret, defining the rotation interval (e.g., `30d` for 30 days) and linking it to a rotation function or plugin.

- Execution: On schedule, the secrets manager triggers the rotation logic. For a database, this could mean executing SQL commands like `ALTER USER 'app_user'@'%' IDENTIFIED BY 'new_strong_password';`. For an API key, it would involve calling the provider's API endpoint to revoke the old key and generate a new one.

- Update: The new credential value is securely stored in the secrets manager, creating a new version of the secret.

- Propagation: Applications are designed to fetch the latest version of the secret. This can be done on startup or by using a client-side agent (like Vault Agent) that monitors for changes and updates the secret on the local filesystem, triggering a graceful application reload.

This mechanism transforms secrets from static liabilities into dynamic, ephemeral assets, a core tenet of modern secrets management best practices.
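The execution and update steps can be sketched with an in-memory stand-in for the secrets manager. `VersionedSecret` and `rotate` are hypothetical names for illustration, and `apply_to_target` represents whatever changes the credential on the target system, such as running the `ALTER USER` statement.

```python
import secrets
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class VersionedSecret:
    """In-memory stand-in for a secrets manager entry that keeps every version."""
    versions: list[str] = field(default_factory=list)

    @property
    def current(self) -> str:
        return self.versions[-1]


def rotate(secret: VersionedSecret, apply_to_target: Callable[[str], None]) -> int:
    """Generate a new value, apply it to the target system, then store a new version."""
    new_value = secrets.token_urlsafe(32)
    # Execution: change the credential on the target first; if this raises, nothing is stored.
    apply_to_target(new_value)
    # Update: record the new value as the latest version.
    secret.versions.append(new_value)
    return len(secret.versions)
```

Applying the change before storing it means a failed rotation leaves the stored secret pointing at the credential that still works, which keeps the previous version usable for rollback.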

Successful Implementations

- Uber (HashiCorp Vault):

- Leverages Vault's database secrets engine for PostgreSQL and Cassandra, which handles the entire lifecycle of dynamic user creation and revocation based on a lease TTL.

- Each microservice gets unique, short-lived credentials, drastically reducing the blast radius.

- Airbnb (Custom Tooling & AWS Secrets Manager):

- Uses AWS Secrets Manager's native rotation capabilities, which invoke a specified AWS Lambda function. The function contains the logic to connect to the third-party service, rotate the API key, and update the secret value back in Secrets Manager.

- Capital One (AWS IAM & Vault):

- Uses Vault's AWS secrets engine to generate short-lived IAM credentials with a TTL as low as 5 minutes for CI/CD pipeline jobs. The pipeline authenticates to Vault, gets a temporary access key, performs its tasks, and the key is automatically revoked upon lease expiration.

When and Why to Use This Approach

- You manage credentials for systems with APIs that support programmatic credential management (e.g., databases, cloud providers, SaaS platforms).

- Your organization must adhere to compliance frameworks like PCI DSS or SOC 2, under which periodic credential changes (e.g., every 90 days) are a common requirement or auditor expectation.

- You want to mitigate the risk of a leaked credential from a developer's machine or log file remaining valid indefinitely.

Automating rotation is critical for scaling security operations. It removes the operational burden from engineers and ensures policies are enforced consistently without fail. For a deeper look at how automation enhances security, explore these CI/CD pipeline best practices.

Actionable Tips for Adoption

- Prioritize by Risk:

- Start with your most critical secrets, such as production database root credentials or cloud provider admin keys.

- Implement Graceful Reloads in Applications:

- Ensure your applications can detect a changed secret (e.g., by watching a file mounted by a sidecar) and reload their configuration or connection pools without requiring a full restart.

- Use Versioning and Rollback:

- Leverage your secrets manager's versioning feature. If a new secret causes an issue, you can quickly revert the application's configuration to use the previous, still-valid version while you troubleshoot.

- Monitor Rotation Health:

- Set up alerts in your monitoring system (e.g., Prometheus, Datadog) to fire if a scheduled rotation fails. A failed rotation is a high-priority incident that could lead to service outages.
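The graceful reload tip can be sketched as a small wrapper around a secret file that a sidecar keeps up to date. `FileSecret` is a hypothetical helper. It polls file metadata on each read instead of using a filesystem notification API, which is an assumption made to keep the example dependency-free.

```python
import os


class FileSecret:
    """Re-reads a secret file only when its size or modification time changes."""

    def __init__(self, path: str) -> None:
        self.path = path
        self._stamp = None
        self._value = ""

    def get(self) -> str:
        st = os.stat(self.path)
        stamp = (st.st_mtime_ns, st.st_size)
        if stamp != self._stamp:
            # The file changed since the last read: load the new credential.
            with open(self.path, encoding="utf-8") as fh:
                self._value = fh.read().strip()
            self._stamp = stamp
        return self._value
```

A connection pool could call `get()` whenever it opens a new connection, so rotated credentials are picked up without restarting the process.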

"A secret that never changes is a permanent vulnerability. A secret that changes every hour is a fleeting risk."

By making secret rotation an automated, programmatic process, you fundamentally reduce credential-based risk and build a more resilient, secure, and compliant infrastructure.

3. Apply Principle of Least Privilege

Granting the minimum level of access necessary for each application, service, or user to perform their required functions is a foundational security principle. Implementing granular permissions and role-based access controls for secrets dramatically reduces the potential blast radius of a compromise, ensuring a breached component cannot access credentials beyond its explicit scope.

What It Is and How It Works

The principle of least privilege (PoLP) is implemented in secrets management through policy-as-code. You define explicit policies that link an identity (who), a resource (what secret path), and capabilities (which actions).

A technical example using HashiCorp Vault's HCL format:

    # Policy for the billing microservice
    path "secret/data/billing/stripe" {
      capabilities = ["read"]
    }
    # This service has no access to other paths like "secret/data/database/*"

This policy grants the identity associated with it read-only access to the Stripe API key. An attempt to access any other path will result in a "permission denied" error. This is enforced by mapping the policy to the service's authentication role (e.g., its Kubernetes service account or AWS IAM role).

This approach moves from a permissive, default-allow model to a restrictive, default-deny model.
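The default-deny behaviour can be illustrated with a simplified evaluator. This is a toy model, not Vault's actual policy engine: it assumes a policy is a mapping from path pattern to capabilities, and that a trailing `*` means prefix match. `is_allowed` and `BILLING_POLICY` are hypothetical names.

```python
def is_allowed(policy: dict[str, list[str]], path: str, capability: str) -> bool:
    """Default-deny check: allow only if a matching rule lists the capability."""
    for pattern, capabilities in policy.items():
        if pattern.endswith("*"):
            matched = path.startswith(pattern[:-1])  # trailing * = prefix match
        else:
            matched = path == pattern
        if matched and capability in capabilities:
            return True
    return False  # no rule matched: deny


BILLING_POLICY = {"secret/data/billing/stripe": ["read"]}
```

With this policy, a read of `secret/data/billing/stripe` is allowed, while an update to the same path or a read of anything under `secret/data/database/` falls through to the final `return False`.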

Successful Implementations

- Google (GCP Secret Manager):

- Uses GCP's native IAM roles at a per-secret level. A Cloud Function can be granted the `roles/secretmanager.secretAccessor` role for a single secret, preventing it from accessing any other secrets in the project.

- Spotify (HashiCorp Vault):

- Automates policy creation via CI/CD. When a new microservice is defined, a corresponding Vault policy is templated and applied via Terraform, ensuring the service is born with least-privilege access.

- LinkedIn (Custom Solution):

- Their internal secrets store uses ACLs tied to service identities. For Kafka, a service principal is granted `read` permission on a specific topic's credentials but denied access to credentials for other topics, preventing data spillage.

When and Why to Use This Approach

- You operate a microservices architecture where hundreds or thousands of services need isolated, programmatic access to secrets.

- You need to demonstrate compliance with security frameworks like NIST CSF, SOC 2, or ISO 27001 that require strong access controls.

- You are adopting a Zero Trust security model, where trust is never assumed and must be explicitly verified for every request.

Applying least privilege is a non-negotiable component of secrets management best practices, as it moves you from a permissive to a deny-by-default security posture. This approach aligns with modern DevOps security best practices by building security directly into the access control layer. To deepen your understanding, you can explore more about securing DevOps workflows.

Actionable Tips for Adoption

- Use Templated and Path-Based Policies:

- Structure your secret paths logically (e.g., `secret/team_name/app_name/key`). This allows you to write policies that use path templating to grant access based on team or application identity.

- Automate Policy Management with IaC:

- Commit your access policies to a Git repository and manage them using Terraform or Pulumi. This provides version control, peer review, and an audit trail for all permission changes.

- Implement Break-Glass Procedures:

- For emergencies, have a documented and highly audited workflow for temporarily elevating permissions. This often involves a tool like PagerDuty or an approval flow that requires multiple senior engineers to authorize.

- Regularly Audit Permissions:

- Use automated tools (e.g., custom scripts, open-source tools like `cloud-custodian`) to periodically scan policies for overly permissive rules like wildcard (`*`) permissions or stale access for decommissioned services.
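A custom audit script of the kind mentioned in the last tip might look like the following sketch. It assumes policies have already been exported into a dictionary keyed by service name, which is a hypothetical format; `audit_policies` is an illustrative name.

```python
def audit_policies(
    policies: dict[str, dict[str, list[str]]],
    active_services: set[str],
) -> list[str]:
    """Flag wildcard paths and policies that belong to services no longer active."""
    findings = []
    for service, rules in sorted(policies.items()):
        if service not in active_services:
            findings.append(f"{service}: stale policy (service not active)")
        for path in sorted(rules):
            if "*" in path:
                findings.append(f"{service}: wildcard path {path}")
    return findings
```

Running this on a schedule and failing the job on any finding is one way to keep permission drift visible.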

"A default-deny policy for secrets ensures that access is a deliberate, audited decision, not an implicit assumption."

By enforcing the principle of least privilege, organizations transform their secrets management from a reactive to a proactive security discipline, significantly limiting the impact of any potential breach.

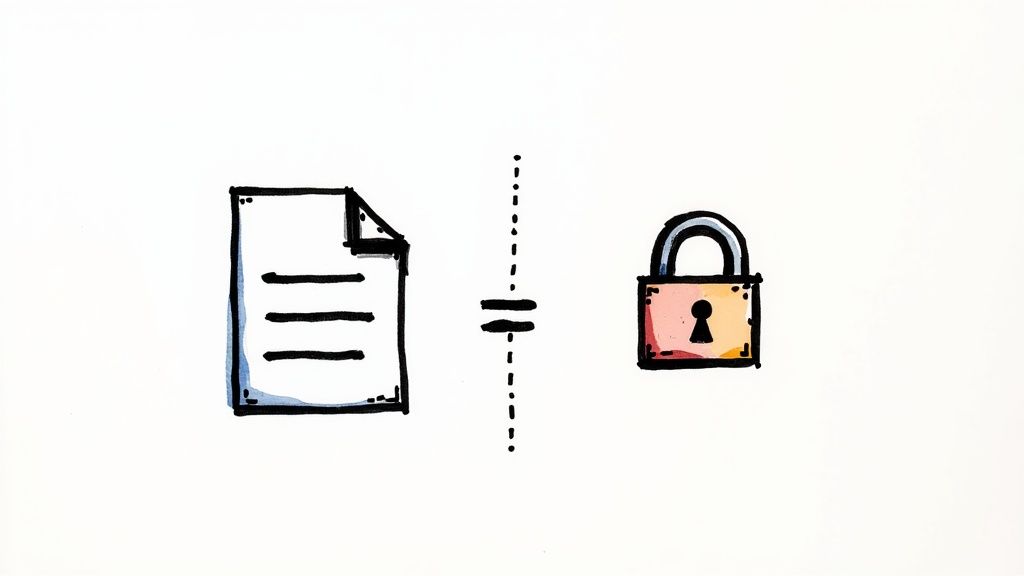

4. Never Store Secrets in Code or Configuration Files

Hardcoding secrets like API keys, database passwords, and private certificates directly into source code or configuration files is one of the most common and dangerous security anti-patterns. This practice makes secrets discoverable by anyone with access to the repository, exposes them in version control history, and complicates rotation and auditing.

What It Is and How It Works

This foundational practice involves completely decoupling sensitive credentials from application artifacts. Secrets should be injected into the application's runtime environment just-in-time.

Technical decoupling mechanisms include:

- Environment Variables: An orchestrator like Kubernetes injects secrets into a container's environment. The application reads them via `os.getenv("API_KEY")`. While simple, this can expose secrets to processes with access to the container's environment.

- Mounted Files/Volumes: A more secure method where a helper component (such as the Vault Agent Injector sidecar or the Secrets Store CSI Driver for Kubernetes) retrieves secrets and writes them to an in-memory filesystem (`tmpfs`) volume mounted into the application pod. The app reads the secret from a local file path (e.g., `/vault/secrets/db-password`).

- Runtime API Calls: The application uses an SDK to authenticate to the secrets manager on startup and fetches its credentials directly. This provides the tightest control but requires adding logic to the application code.

This approach ensures the compiled artifact (e.g., a Docker image) is environment-agnostic and contains no sensitive data.
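The first two mechanisms can be combined in application code. The sketch below prefers a mounted file and falls back to an environment variable. `load_secret` is a hypothetical helper, and the mapping from file name to variable name (uppercase, dashes to underscores) is an assumption.

```python
import os
from pathlib import Path


def load_secret(name: str, mount_dir: str = "/vault/secrets") -> str:
    """Prefer a mounted secret file; fall back to an environment variable."""
    secret_file = Path(mount_dir) / name
    if secret_file.is_file():
        return secret_file.read_text(encoding="utf-8").strip()
    env_name = name.upper().replace("-", "_")
    value = os.getenv(env_name)
    if value is None:
        raise KeyError(f"secret {name!r} not found in {mount_dir} or ${env_name}")
    return value
```

Calling `load_secret("db-password")` would read `/vault/secrets/db-password` inside a pod, or `DB_PASSWORD` on a developer machine where no volume is mounted.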

Successful Implementations

- The Twelve-Factor App Methodology:

- Factor III: "Config" explicitly states that configuration, including credentials, should be stored in the environment and not in the code (https://12factor.net/config). This principle is a cornerstone of modern, cloud-native application development.

- GitHub's Secret Scanning:

- A real-world defense mechanism that uses pattern matching to detect credential formats (e.g., `AKIA...` for AWS keys) in pushed commits. When a match is found, it notifies the issuing provider, which can then revoke the key, mitigating the damage of an accidental commit.

- Kubernetes Secrets and CSI Drivers:

- The Kubernetes Secrets object provides a mechanism to store secrets, but they are only base64 encoded by default in etcd. A stronger pattern is to use the Secrets Store CSI Driver, which allows pods to mount secrets from external providers like Vault, AWS Secrets Manager, or Azure Key Vault directly into the container's filesystem.

When and Why to Use This Approach

- You use version control systems like Git, where a committed secret remains in the history forever unless the history is rewritten.

- You build immutable infrastructure, where the same container image is promoted across dev, staging, and production environments.

- Your CI/CD pipeline needs to be secured, as build logs are a common source of secret leakage if credentials are passed insecurely.

Decoupling secrets from code is a non-negotiable step in achieving effective secrets management best practices, as it immediately reduces the attack surface and prevents accidental leakage.

Actionable Tips for Adoption

- Integrate Pre-Commit Hooks:

- Use tools like `talisman` or `gitleaks` as a pre-commit hook. This scans staged files for potential secrets before a commit is even created, blocking it locally on the developer's machine.

- Implement CI/CD Pipeline Scanning:

- Add a dedicated security scanning step in your CI pipeline using tools like GitGuardian or TruffleHog. This acts as a second line of defense to catch any secrets that bypass local hooks.

- Use Dynamic Templating for Local Development:

- For local development, use a tool like `direnv` or the Vault Agent to populate environment variables from a secure backend. Avoid committing `.env` files, even example ones, to source control. Use a `.env.template` instead.

- Rewrite Git History for Leaked Secrets:

- If a secret is committed, it's not enough to just remove it in a new commit. The old commit must be removed from history using tools like `git-filter-repo` or BFG Repo-Cleaner. Whatever the state of the cleanup, rotate the exposed secret immediately.
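To make the scanning tips concrete, here is a minimal sketch of the pattern matching that such tools perform, limited to AWS access key IDs. Dedicated scanners like `gitleaks` ship many more rules, so treat this as an illustration of the idea, not a replacement. `find_aws_key_ids` is a hypothetical name.

```python
import re

# AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_aws_key_ids(text: str) -> list[tuple[int, str]]:
    """Return (line_number, match) pairs for anything shaped like an AWS access key ID."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in AWS_KEY_ID.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits
```

A pre-commit hook could feed the staged diff into this function and exit non-zero when the returned list is not empty.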

"A secret in your code is a bug. It’s a vulnerability waiting to be discovered, shared, and exploited."

By treating your codebase as inherently untrusted for storing secrets, you enforce a critical security boundary that protects your credentials from ending up in the wrong hands.

5. Enable Comprehensive Audit Logging

Implementing detailed logging and monitoring for all secret-related activities provides an immutable record of access, modifications, and usage. This creates a clear trail for security incident response, forensic analysis, and compliance reporting, turning your secrets management system into a trustworthy source of truth.

What It Is and How It Works

Comprehensive audit logging captures every API request and response to and from the secrets management system. A good audit log entry is a structured JSON object containing:

    {
      "time": "2023-10-27T10:00:00Z",
      "type": "response",
      "auth": {
        "client_token": "hmac-sha256:...",
        "accessor": "...",
        "display_name": "kubernetes-billing-app",
        "policies": ["billing-app-policy"],
        "token_ttl": 3600
      },
      "request": {
        "id": "...",
        "operation": "read",
        "path": "secret/data/billing/stripe",
        "remote_address": "10.1.2.3"
      },
      "response": {
        "status_code": 200
      }
    }

This log shows who (billing-app), did what (read), to which resource (the Stripe secret), and when. These logs are streamed in real-time to a dedicated audit device, such as a file, a syslog endpoint, or directly to a SIEM platform like Splunk, ELK Stack, or Datadog for analysis.
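Because each entry is structured JSON, extracting the who, what, and when takes only a few lines. The sketch below assumes one JSON object per log line with the field names from the example entry; `summarize` and `paths_read_by_identity` are hypothetical helpers.

```python
import json
from collections import defaultdict


def summarize(entry_json: str) -> str:
    """Render one audit entry as: time, identity, operation, path, source, status."""
    entry = json.loads(entry_json)
    auth, request = entry["auth"], entry["request"]
    return (
        f'{entry["time"]} {auth["display_name"]} {request["operation"]} '
        f'{request["path"]} from {request["remote_address"]} '
        f'-> {entry["response"]["status_code"]}'
    )


def paths_read_by_identity(lines: list[str]) -> dict[str, int]:
    """Count the distinct secret paths each identity has read."""
    seen = defaultdict(set)
    for line in lines:
        entry = json.loads(line)
        if entry["request"]["operation"] == "read":
            seen[entry["auth"]["display_name"]].add(entry["request"]["path"])
    return {name: len(paths) for name, paths in seen.items()}
```

An identity whose count suddenly jumps well above its usual range is the kind of signal a SIEM rule would alert on.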

Successful Implementations

- Financial Institutions (SOX Compliance):

- Stream Vault audit logs to Splunk. They build dashboards and alerts that trigger on unauthorized access attempts to secrets tagged as "sox-relevant," providing a real-time compliance monitoring and reporting system.

- Healthcare Organizations (HIPAA Compliance):

- Use AWS CloudTrail logs from AWS Secrets Manager to create a permanent record of every access to secrets guarding Protected Health Information (PHI). This log data is ingested into a data lake for long-term retention and forensic analysis.

- E-commerce Platforms (PCI DSS):

- Configure alerts in their SIEM to detect anomalies in secret access patterns, such as a single client token reading an unusually high number of secrets, or access from an unknown IP range, which could indicate a compromised application token.

When and Why to Use This Approach

- You operate in a regulated industry (finance, healthcare, government) with strict data access auditing requirements.

- You need to perform post-incident forensic analysis to determine the exact scope of a breach (which secrets were accessed, by whom, and when).

- You want to implement proactive threat detection by identifying anomalous access patterns that could signify an active attack or insider threat.

Enabling audit logging is a fundamental component of secrets management best practices, providing the visibility needed to trust and verify your security posture.

Actionable Tips for Adoption

- Stream Logs to a Centralized, Secure Location:

- Configure your secrets manager to send audit logs to a separate, write-only system. This prevents an attacker who compromises the secrets manager from tampering with the audit trail.

- Create High-Fidelity Alerts:

- Focus on actionable alerts. Good candidates include:

- Authentication failures from a production service account.

- Any modification to a root policy or global configuration.

- A user accessing a "break-glass" secret outside of a declared incident.

- Hash Client Tokens in Logs:

- Ensure your audit logging configuration is set to hash sensitive information like client tokens. This allows you to correlate requests from the same token without exposing the token itself in the logs.

- Integrate with User and Entity Behavior Analytics (UEBA):

- Feed your audit logs into a UEBA system. These systems can baseline normal access patterns and automatically flag deviations, helping you detect sophisticated threats that simple rule-based alerts might miss.
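The token hashing tip relies on a keyed hash: the same token always produces the same digest, so requests can be correlated, but the token cannot be read back from the log. A minimal sketch using HMAC-SHA256 follows. The `hmac-sha256:` prefix mirrors the example log entry, and `audit_key` is a hypothetical per-device key.

```python
import hashlib
import hmac


def hash_token(token: str, audit_key: bytes) -> str:
    """Return a keyed, non-reversible fingerprint of a client token for audit logs."""
    digest = hmac.new(audit_key, token.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"hmac-sha256:{digest}"
```

Using a keyed HMAC instead of a plain hash means someone who holds only the logs cannot confirm a guessed token without also holding the key.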

“Without a detailed audit log, you are blind to who is accessing your most sensitive data and why.”

By treating audit logs as a critical security feature, you gain the necessary oversight to enforce policies, respond to threats, and meet compliance obligations effectively.

6. Encrypt Secrets at Rest and in Transit

Encryption is the non-negotiable foundation of secrets security. Ensuring that secrets are unreadable to unauthorized parties, both when stored (at rest) and while being transmitted between services (in transit), prevents them from being intercepted or exfiltrated in a usable format. This dual-layered approach is a fundamental principle of defense-in-depth security.

What It Is and How It Works

This practice involves applying strong, industry-standard cryptographic protocols and algorithms.

- Encryption in Transit: This is achieved by enforcing Transport Layer Security (TLS) 1.2 or higher for all API communication with the secrets manager. This creates a secure channel that protects against eavesdropping and man-in-the-middle (MitM) attacks. The client must verify the server's certificate to ensure it's communicating with the legitimate secrets management endpoint.

- Encryption at Rest: This protects the secret data stored in the backend storage (e.g., a database, file system, or object store). Modern systems use envelope encryption. The process is:

- A high-entropy Data Encryption Key (DEK) is generated for each secret.

- The secret is encrypted with this DEK using an algorithm like AES-256-GCM.

- The DEK itself is then encrypted with a master Key Encryption Key (KEK).

- The encrypted DEK is stored alongside the encrypted secret.

The KEK is the root of trust, managed externally in an HSM or a cloud KMS, and is never stored on disk in plaintext.

Successful Implementations

- AWS Secrets Manager with AWS KMS:

- When using AWS Secrets Manager, secrets are encrypted at rest using a customer-managed or AWS-managed KMS key. This integration is seamless and ensures that even an attacker with direct access to the underlying storage cannot read the secret data.

- HashiCorp Vault:

- Vault's storage backend is always encrypted. The master key used for this is protected by a set of "unseal keys." Using Shamir's Secret Sharing, the master key is split into multiple shards, requiring a quorum of key holders to be present to unseal Vault (bring it online). This prevents a single operator from compromising the entire system.

- Azure Key Vault:

- Provides hardware-level protection by using FIPS 140-2 Level 2 validated Hardware Security Modules (HSMs). Customer keys and secrets are processed within the HSM boundary, providing a very high level of assurance against physical and software-based attacks.
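The quorum property behind Vault's unseal keys comes from Shamir's Secret Sharing. The following is a textbook sketch over a prime field, written for illustration only. It is not Vault's implementation, and the choice of prime and the function names are assumptions.

```python
import secrets

PRIME = 2**127 - 1  # Mersenne prime; the secret must be smaller than this


def split(secret: int, shares: int, threshold: int) -> list[tuple[int, int]]:
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        result = 0
        for c in reversed(coeffs):  # Horner's rule
            result = (result * x + c) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def combine(points: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 to recover the secret."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

With `split(key, shares=5, threshold=3)`, any three of the five shares passed to `combine` reconstruct the key, while fewer than three do not determine it.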

When and Why to Use This Approach

- You are subject to compliance standards like PCI DSS, HIPAA, or SOC 2, which have explicit mandates for data encryption.

- Your threat model includes direct compromise of the storage layer, insider threats with administrative access to servers, or physical theft of hardware.

- You operate in a multi-tenant cloud environment where defense-in-depth is critical.

Applying encryption universally is a core component of secrets management best practices, as it provides a crucial last line of defense. The principles of data protection also extend beyond just secrets; for instance, understanding secure file sharing practices is essential for safeguarding all sensitive company data, as it often relies on the same encryption standards.

Actionable Tips for Adoption

- Enforce TLS 1.2+ with Certificate Pinning:

- Configure all clients to use a minimum TLS version of 1.2. For high-security applications, consider certificate pinning to ensure the client will only trust a specific server certificate, mitigating sophisticated MitM attacks.

- Use a Dedicated KMS for the Master Key:

- Integrate your secrets manager with a cloud KMS (AWS KMS, Azure Key Vault, GCP KMS) or a physical HSM. This offloads the complex and critical task of managing your root encryption key to a purpose-built, highly secure service.

- Automate Root Key Rotation:

- While less frequent than data key rotation, your master encryption key (KEK) should also be rotated periodically (e.g., annually) according to a defined policy. Your KMS should support automated rotation to make this process seamless.

- Use Strong, Standard Algorithms:

- Do not implement custom cryptography. Rely on industry-vetted standards. For symmetric encryption, use AES-256-GCM. For key exchange, use modern TLS cipher suites.
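On the client side, the minimum TLS version from the first tip can be enforced with Python's standard `ssl` module. This is a minimal sketch: `strict_tls_context` is a hypothetical helper, the optional `ca_file` argument assumes you trust a private CA bundle, and certificate pinning is not covered.

```python
import ssl
from typing import Optional


def strict_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client context that verifies the server certificate and refuses TLS below 1.2."""
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context(cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The returned context can be passed to `urllib.request.urlopen(..., context=ctx)` or to any HTTP client that accepts an `ssl.SSLContext`.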

"Unencrypted secrets are a critical failure waiting to happen. Encryption at rest and in transit turns a catastrophic data breach into a non-event."

By systematically encrypting every secret, teams can build resilient systems where the compromise of one layer does not automatically lead to the exposure of sensitive credentials.

7. Implement Environment Separation

Maintaining a strict logical and physical boundary between secrets for development, testing, staging, and production environments prevents credential leakage and contains the blast radius of a breach. Treating each environment as a siloed security domain ensures that a compromise in a lower-trust environment, like development, cannot be leveraged to access high-value production systems.

What It Is and How It Works

Environment separation is an architectural practice that creates isolated contexts for secrets. This can be achieved at multiple levels:

- Logical Separation: Using namespaces or distinct path prefixes within a single secrets manager instance. For example, all production secrets live under `prod/`, while staging secrets are under `staging/`. Access is controlled by policies that bind an environment's identity to its specific path.

- Physical Separation: Deploying completely separate instances (clusters) of your secrets management system for each environment. The production cluster runs in a dedicated, highly restricted network (VPC) and may use a different cloud account or subscription, providing the strongest isolation.

- Identity-Based Separation: Using distinct service principals, IAM roles, or service accounts for each environment. A Kubernetes pod running in the `staging` namespace uses a `staging` service account, which can only authenticate to the `staging` role in the secrets manager.

A compromise of a developer's credentials, which only grant access to the dev environment's secrets, cannot be used to read production database credentials.
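Logical and identity-based separation can be combined in a single check. The sketch below assumes each Kubernetes namespace is named after its environment and that secrets live under a matching path prefix; both are conventions chosen for illustration. The subject format `system:serviceaccount:<namespace>:<name>` is how Kubernetes names service account identities.

```python
def namespace_of(subject: str) -> str:
    """Extract the namespace from 'system:serviceaccount:<namespace>:<name>'."""
    parts = subject.split(":")
    if len(parts) != 4 or parts[:2] != ["system", "serviceaccount"]:
        raise ValueError(f"not a service account subject: {subject!r}")
    return parts[2]


def can_access(subject: str, secret_path: str) -> bool:
    """Allow access only to paths under the prefix matching the caller's namespace."""
    return secret_path.startswith(f"{namespace_of(subject)}/")
```

A pod running as `system:serviceaccount:staging:billing` can read `staging/billing/db-password` but is refused `prod/billing/db-password`.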

Successful Implementations

- Netflix (HashiCorp Vault):

- Employs the physical separation model, running entirely separate Vault clusters per environment, often in different AWS accounts. This provides a hard security boundary that is simple to audit and reason about.

- Spotify (Kubernetes & Internal Tools):

- Uses Kubernetes namespaces for logical separation. A pod's service account token includes a namespace claim. Their secrets manager validates this claim to ensure the pod can only request secrets mapped to its own namespace.

- Atlassian (AWS Secrets Manager):

- Utilizes a multi-account AWS strategy. The production environment runs in its own AWS account with a dedicated instance of Secrets Manager. IAM policies strictly prevent roles from the development account from assuming roles or accessing resources in the production account.

When and Why to Use This Approach

- You manage CI/CD pipelines where artifacts are promoted through multiple environments before reaching production.

- You must adhere to regulatory frameworks like SOC 2 or PCI DSS, which require strict segregation between production and non-production environments.

- Your security model needs to prevent lateral movement, where an attacker who compromises a less secure environment can pivot to a more critical one.

Isolating secrets by environment is a fundamental component of secrets management best practices because it upholds the principle of least privilege at an architectural level.

Actionable Tips for Adoption

- Use Infrastructure as Code (IaC) with Workspaces/Stacks:

- Define your secrets management configuration in Terraform or Pulumi. Use workspaces (Terraform) or stacks (Pulumi) to deploy the same configuration to different environments, substituting environment-specific variables (like IP ranges or IAM role ARNs).

- Prevent Cross-Environment IAM Trust:

- When using a cloud provider, ensure your IAM trust policies are scoped correctly. A role in the dev account should never be able to assume a role in the prod account. Audit these policies regularly.

- Use Environment-Specific Authentication Backends:

- Configure distinct authentication methods for each environment. For example, production services might authenticate using a trusted AWS IAM role, while development environments might use a GitHub OIDC provider for developers.

- Implement Network Policies:

- Use network security groups, firewall rules, or Kubernetes NetworkPolicies to prevent applications in the staging environment from making network calls to the production secrets manager endpoint.
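The trust-policy audit mentioned above can be partly automated. The following is a minimal sketch that inspects an AWS-style role trust policy document (already loaded as a dictionary) and reports every allowed principal that does not belong to an approved account. The function names and the account IDs in the usage note are hypothetical, and the sketch only looks at `Principal.AWS` entries, ignoring conditions, service principals, and federated principals.

```python
import re
from typing import List, Optional, Set

ACCOUNT_ID = re.compile(r"^\d{12}$")


def _account_of(principal: str) -> Optional[str]:
    """Return the 12-digit account ID of a principal, or None if it has none."""
    if ACCOUNT_ID.match(principal):
        return principal  # bare account ID
    parts = principal.split(":")
    # ARN layout: arn:partition:service:region:account-id:resource
    if len(parts) >= 6 and parts[0] == "arn" and ACCOUNT_ID.match(parts[4]):
        return parts[4]
    return None  # wildcard ("*") or an unrecognized format


def foreign_principals(trust_policy: dict, allowed_accounts: Set[str]) -> List[str]:
    """List AWS principals in Allow statements that fall outside allowed_accounts."""
    statements = trust_policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    findings: List[str] = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal", {})
        if isinstance(principal, str):  # e.g. Principal: "*"
            candidates = [principal]
        else:
            value = principal.get("AWS", [])
            candidates = [value] if isinstance(value, str) else list(value)
        for candidate in candidates:
            # Wildcards resolve to None and are therefore always reported.
            if _account_of(candidate) not in allowed_accounts:
                findings.append(candidate)
    return findings
```

Running `foreign_principals(policy, {"222222222222"})` against a production role's trust policy returns any principal from another account, such as a dev CI role, so it can be reviewed or removed.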

"A breach in development should be an incident, not a catastrophe. Proper environment separation makes that distinction possible."

By architecting your systems with strict boundaries from the start, you create a more resilient and defensible posture, a core tenet of modern secrets management best practices.

Secrets Management Best Practices Comparison

| Strategy | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Use Dedicated Secrets Management Systems | High – requires platform setup, policy definition, and client integration. | Moderate to High – licensing/infra costs, operational overhead. | Centralized, auditable secret storage with dynamic capabilities. | Enterprise-grade security, microservices, hybrid-cloud. | Centralized control, strong audit trail, dynamic secrets. |
| Implement Automatic Secret Rotation | Medium to High – requires integration with target systems, app support for hot-reloads. | Moderate – monitoring for rotation failures, client agent overhead. | Reduced risk from compromised credentials; enforces ephemeral secrets. | Databases, cloud credentials, API keys with rotation APIs. | Minimizes secret exposure time, eliminates manual toil. |
| Apply Principle of Least Privilege | Medium – requires careful policy design and ongoing maintenance. | Low to Moderate – relies on policy engine of secrets manager. | Minimized blast radius during a breach; enforces Zero Trust. | Microservices architectures, regulated industries. | Prevents lateral movement, reduces insider threat risk. |
| Never Store Secrets in Code | Low to Medium – requires developer training and CI/CD tooling. | Low – cost of scanning tools (many are open source). | Prevents accidental secret exposure in Git history and build logs. | All software development workflows using version control. | Stops leaks at the source, enables immutable artifacts. |
| Enable Comprehensive Audit Logging | Medium – requires configuring audit devices and log shipping/storage. | Moderate – SIEM licensing, log storage costs. | Full visibility into secret access for forensics and threat detection. | Compliance-driven organizations (PCI, HIPAA, SOX). | Provides immutable proof of access, enables anomaly detection. |
| Encrypt Secrets at Rest and in Transit | Medium – requires TLS configuration and KMS/HSM integration. | Moderate – KMS costs, operational complexity of key management. | Data is confidential even if storage or network layer is compromised. | All use cases; a foundational security requirement. | Provides defense-in-depth, meets compliance mandates. |
| Implement Environment Separation | Medium – requires IaC, network policies, and identity management. | Moderate – may require separate infrastructure for each environment. | Prevents a breach in a lower environment from impacting production. | Multi-environment CI/CD pipelines (dev, stage, prod). | Contains blast radius, enables safer testing. |

Operationalizing Your Secrets Management Strategy

Navigating the landscape of modern application security requires a fundamental shift in how we handle credentials. The days of hardcoded API keys, shared passwords in spreadsheets, and unmonitored access are definitively over. As we've explored, implementing robust secrets management best practices is not merely a compliance checkbox; it is the bedrock of a resilient, secure, and scalable engineering organization. Moving beyond theory to practical application is the critical next step.

The journey begins with acknowledging that secrets management is a continuous, dynamic process, not a "set it and forget it" task. Each practice we've detailed, from centralizing credentials in a dedicated system like HashiCorp Vault or AWS Secrets Manager to enforcing the principle of least privilege, contributes to a powerful defense-in-depth strategy. By weaving these principles together, you create a security posture that is proactive and intelligent, rather than reactive and chaotic.

Synthesizing the Core Pillars of Secure Secrets Management

To truly operationalize these concepts, it's essential to view them as interconnected components of a unified system. Let's distill the primary takeaways into an actionable framework:

- Centralize and Control: The first and most impactful step is migrating all secrets out of disparate, insecure locations (code repositories, config files, developer machines) and into a dedicated secrets management platform. This provides a single source of truth, enabling centralized control, auditing, and policy enforcement.

- Automate and Ephemeralize: Manual processes are prone to error and create security gaps. Automating secret rotation and implementing short-lived, dynamically generated credentials for applications and services drastically reduces the window of opportunity for attackers. This shifts the paradigm from protecting static, long-lived secrets to managing a fluid and temporary access landscape.

- Restrict and Verify: Access control is paramount. The principle of least privilege ensures that any given entity, whether a user or an application, has only the minimum permissions necessary to perform its function. This must be paired with comprehensive audit logging, which provides the visibility needed to detect anomalous behavior, investigate incidents, and prove compliance.

- Isolate and Protect: Strict environment separation (development, staging, production) is non-negotiable. This prevents a compromise in a lower-level environment from cascading into your production systems. Furthermore, ensuring all secrets are encrypted both in transit (using TLS) and at rest (using strong encryption algorithms) protects the data itself, even if the underlying infrastructure is compromised.

From Principles to Production: Your Implementation Roadmap

Adopting these secrets management best practices pays off in breach prevention, operational efficiency, and developer productivity. The initial setup can seem daunting, but a well-implemented system lets developers move quickly and securely, without the friction of manual credential handling.

Your immediate next steps should focus on a phased, methodical rollout. Start by conducting a thorough audit to discover where secrets currently reside. Next, select a secrets management tool that aligns with your existing technology stack and operational maturity. Begin with a single, non-critical application as a pilot project to build expertise and refine your integration workflows before expanding across your entire organization.
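The discovery audit can start with a simple pattern scan. The sketch below is a minimal example under stated assumptions: the rule names and the `scan_text` function are hypothetical, and the three patterns (AWS access key ID prefix, PEM private key header, quoted credential assignments) are a small illustrative set. A dedicated scanner covers far more formats and also inspects Git history.

```python
import re
from typing import List, Tuple

# Illustrative rules only; real scanners ship far larger rule sets.
RULES = [
    ("aws_access_key_id", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("private_key_header", re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----")),
    (
        "hardcoded_credential",
        re.compile(r"(?i)\b(?:password|secret|api_key|token)\s*[:=]\s*[\"'][^\"']{8,}[\"']"),
    ),
]


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every rule that matches a line."""
    findings: List[Tuple[int, str]] = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in RULES:
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

Applying `scan_text` to each file in a repository, for example via `pathlib.Path.rglob`, gives a first inventory of where credentials are hardcoded. Expect false positives, and treat every confirmed finding as a secret that needs rotation, not just removal.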

Key Insight: The ultimate goal is to make the secure path the easiest path. When requesting and using secrets is a seamless, automated part of your CI/CD pipeline, developers will naturally adopt secure practices, eliminating the temptation for insecure workarounds.

Mastering these concepts elevates your organization's security posture from a liability to a competitive advantage. It builds trust with your customers, satisfies stringent regulatory requirements, and ultimately protects your most valuable digital assets from ever-evolving threats. The investment in a robust secrets management strategy is an investment in the long-term viability and integrity of your business.
