A CTO’s Playbook to Outsource DevOps Services

A technical playbook to outsource DevOps services. Learn to assess maturity, vet vendors, define robust SLAs, and manage outsourced teams for peak performance.

Outsourcing DevOps services means engaging an external partner to architect, build, and manage your software delivery lifecycle. This encompasses everything from infrastructure automation with tools like Terraform to orchestrating CI/CD pipelines and managing containerized workloads on platforms like Kubernetes. It's a strategic move to bypass the protracted and costly process of building a specialized in-house team, giving you immediate access to production-grade engineering expertise.

Why Top CTOs Now Outsource DevOps

The rationale for outsourcing DevOps has evolved from pure cost arbitrage to a calculated strategy for gaining a significant technical and operational advantage. Astute CTOs recognize that outsourcing transforms the DevOps function from a capital-intensive cost center into a strategic enabler, accelerating product delivery and enhancing system resilience.

This shift is driven by tangible engineering challenges. The intense competition for scarce, high-salaried specialists in areas like Kubernetes administration and cloud security places immense pressure on hiring pipelines and budgets. Concurrently, the operational burden of maintaining complex CI/CD toolchains and responding to infrastructure incidents diverts senior engineers from their primary mission: architecting and building core product features.

The Strategic Shift from In-House to Outsourced

Engaging a global talent pool provides more than just additional engineering capacity; it injects battle-tested expertise directly into your operations. Instead of your principal engineers debugging a failed deployment pipeline at 2 AM, they can focus on shipping features that drive revenue and competitive differentiation.

Outsourcing converts your DevOps function from a fixed, high-overhead cost center into a flexible, on-demand operational expense. This agility is critical for dynamically scaling infrastructure in response to market demand without the friction of long-term hiring commitments.

The global DevOps Outsourcing market is expanding rapidly for this reason. Projections show a leap from USD 10.9 billion in 2025 to USD 26.8 billion by 2033, reflecting a compound annual growth rate (CAGR) of 10.2%. This isn't a fleeting trend but a market-wide pivot towards specialized, scalable solutions over in-house operational overhead. You can review the complete data in this market growth analysis on OpenPR.com.

The following diagram illustrates the transition from a traditional in-house model to a more agile, outsourced partnership.

This visual highlights the move from a static, capital-intensive internal team to a dynamic, global model engineered for efficiency and deep technical expertise. Of course, this approach has its nuances. For a balanced perspective, explore the pros and cons of offshore outsourcing in our detailed guide.

In-House vs. Outsourced DevOps: A Strategic Comparison

The decision between building an internal DevOps team and partnering with an external provider is a pivotal strategic choice, impacting capital allocation, hiring velocity, and your engineering team's focus. This table provides a technical breakdown of the key differentiators.

| Factor | In-House Model | Outsourced Model |
|---|---|---|
| Cost Structure | High fixed costs: salaries, benefits, training, tools. Significant capital expenditure. | Variable operational costs: pay-for-service or retainer. Predictable monthly expense. |
| Talent Acquisition | Long, competitive, and expensive recruitment cycles for specialized skills. | Immediate access to a vetted pool of senior engineers and subject matter experts. |
| Time-to-Impact | Slow ramp-up time for hiring, onboarding, and team integration. | Rapid onboarding and immediate impact, often within weeks. |
| Expertise & Skills | Limited to the knowledge of current employees. Continuous training is required. | Access to a broad range of specialized skills (e.g., K8s, security, FinOps) across a diverse team. |
| Scalability | Rigid. Scaling up or down requires lengthy hiring or difficult layoff processes. | Highly flexible. Easily scale resources up or down based on project needs or market changes. |
| Focus of Core Team | Internal team often gets bogged down with infrastructure maintenance and support tickets. | Frees up your in-house engineering team to focus on core product development and innovation. |
| Operational Overhead | High. Includes managing payroll, HR, performance reviews, and team dynamics. | Low. The vendor handles all HR, management, and administrative overhead. |
| Risk | High concentration of knowledge in a few key individuals ("key-person dependency"). | Risk is distributed. Knowledge is documented and shared across the provider's team. |

Ultimately, the choice hinges on your specific goals. If you have the resources and a long-term plan to build a deep internal competency, the in-house model can work. However, for most businesses—especially those looking for speed, specialized expertise, and financial flexibility—outsourcing offers a clear strategic advantage.

Know Where You Stand: Assessing Your DevOps Maturity for Outsourcing

Before engaging a DevOps partner, you must perform a rigorous technical audit to establish a baseline of your current capabilities. Entering a partnership without this self-assessment is like attempting to optimize a system without metrics—you'll be directionless.

This internal audit is a data-gathering exercise, not a blame session. It provides the "before" snapshot required to define a precise scope of work, set quantifiable objectives, and ultimately prove the ROI of your investment. Any credible partner will require this baseline to formulate an accurate proposal and deliver tangible results.

How Automated Is Your CI/CD, Really?

Begin by dissecting your CI/CD pipeline, the engine of your development velocity. Its current state will dictate a significant portion of the initial engagement.

Ask targeted, technical questions:

- Deployment Cadence: Are you deploying on-demand, multiple times a day, or is each release a monolithic, manually orchestrated event that requires a change advisory board and a weekend maintenance window?

- Automation Level: What percentage of the path from `git commit` to production is truly zero-touch? Does a merge to the main branch automatically trigger a build, run a full suite of tests (unit, integration, E2E), and deploy to a staging environment, or are there manual handoffs requiring human intervention?

- Rollback Mechanism: When a production deployment fails, is recovery an automated, one-click action that reroutes traffic to the previous stable version? Or is it a high-stress, manual process involving `git revert`, database restores, and frantic server configuration changes?

A low-maturity team might be using Jenkins with manually configured jobs and deploying via shell scripts over SSH. A more advanced team might leverage declarative pipelines in GitLab CI or GitHub Actions but lack critical automated quality gates like static analysis (SAST) or dynamic analysis (DAST). Be brutally honest about your current state.

For a deeper dive into these stages, check out our guide on the different DevOps maturity levels and what they look like in practice.

What’s Your Infrastructure and Container Game Plan?

Next, scrutinize your infrastructure management practices. The transition from manual "click-ops" in a cloud console to version-controlled, declarative infrastructure is a fundamental marker of DevOps maturity.

Your Infrastructure as Code (IaC) maturity can be evaluated by your adoption of tools like Terraform or CloudFormation. Are your VPCs, subnets, security groups, and compute resources defined in version-controlled .tf files? Or are engineers still manually provisioning resources, leading to configuration drift and non-reproducible environments? A lack of IaC is a significant technical debt and a security risk.

Similarly, evaluate your containerization and orchestration strategy using Docker and Kubernetes.

- Are your applications packaged as immutable container images stored in a registry like ECR or Docker Hub, or are you deploying artifacts to mutable virtual machines?

- If you use Kubernetes, are you leveraging a managed service (EKS, GKE, AKS) or self-managing the control plane, which incurs significant operational overhead?

- How are you managing Kubernetes manifests? Are you using Helm charts with a GitOps operator like Argo CD to automate deployments, or are engineers running `kubectl apply -f` from their local machines?

Can You Actually See What’s Happening? Benchmarking Your Observability

Finally, assess your ability to observe and understand your systems' behavior. Without robust monitoring, logging, and tracing—the three pillars of observability—you are operating in the dark, and every incident becomes a prolonged investigation.

A rudimentary setup might involve SSHing into servers to grep log files and relying on basic cloud provider metrics. A truly observable system, however, integrates a suite of specialized, interoperable tools:

- Monitoring: Using Prometheus for time-series metrics collection and Grafana for building dashboards that visualize key service-level indicators (SLIs).

- Logging: Centralizing structured logs (e.g., in JSON format) into a system like the ELK Stack (Elasticsearch, Logstash, Kibana) or a SaaS platform like Datadog for high-cardinality analysis.

- Tracing: Implementing distributed tracing with OpenTelemetry and a backend like Jaeger to trace the lifecycle of a request across multiple microservices.

The ultimate test of your observability is your Mean Time to Resolution (MTTR). If it takes hours or days to diagnose and resolve a production issue, your observability stack is immature, regardless of the tools you use.
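MTTR is straightforward to compute once incidents are logged with timestamps. The following is a minimal sketch, assuming each incident is recorded as a (detected, resolved) pair; the function name and the sample incidents are invented for illustration and do not come from any particular tool.

```python
from datetime import datetime, timedelta

def mean_time_to_resolution(incidents):
    """Return the mean of (resolved - detected) across incidents as a timedelta."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)

# Hypothetical incident log: (detected_at, resolved_at) pairs.
incidents = [
    (datetime(2025, 3, 1, 2, 0), datetime(2025, 3, 1, 2, 45)),     # 45 minutes
    (datetime(2025, 3, 9, 14, 10), datetime(2025, 3, 9, 16, 10)),  # 120 minutes
    (datetime(2025, 3, 20, 8, 30), datetime(2025, 3, 20, 8, 45)),  # 15 minutes
]

print(mean_time_to_resolution(incidents))  # 1:00:00
```

Capturing this number before the engagement starts gives you the "before" snapshot to compare against later.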

Translate these findings into specific, measurable, achievable, relevant, and time-bound (SMART) goals. For example: "Implement a fully automated blue-green deployment strategy in our GitLab CI pipeline for the core API, reducing the Change Failure Rate from 15% to under 2% within Q3." This provides a clear directive for your partner and a tangible benchmark for success.

Choosing Your DevOps Engagement Model

Once you have a clear understanding of your DevOps maturity, the next critical step is selecting the appropriate engagement model. A mismatch between your needs and the partnership structure is a direct path to scope creep, budget overruns, and misaligned expectations.

The decision to outsource DevOps services is about surgically applying the right type of expertise to your specific technical and business challenges. Just as you'd select a specific tool for a specific job, your choice of model must align with the problem you're solving—be it a strategic architectural decision, a well-defined project, or a critical skills gap in your team.

Advisory And Consulting for Strategic Guidance

This model is ideal when you need strategic direction, not just execution. It is best suited for organizations that have a competent engineering team but are facing complex architectural decisions, planning a major technology migration, or needing to validate their technical roadmap against industry best practices.

An advisory engagement provides a senior, third-party perspective to de-risk major initiatives and provide a clear, actionable plan. It's about leveraging external expertise to make better internal decisions.

Consider this model for scenarios such as:

- Architecture Reviews: You are planning a migration from a monolithic architecture to event-driven microservices and require an expert review of the proposed design to identify potential scalability bottlenecks, single points of failure, or security vulnerabilities.

- Technology Roadmapping: Your team needs to select a container orchestration platform (Kubernetes vs. Nomad vs. ECS) or an observability stack. An advisory partner can provide an unbiased, data-driven recommendation based on your specific operational requirements and team skill set.

- Security and Compliance Audits: You are preparing for a SOC 2 Type II or ISO 27001 audit and need a partner to perform a gap analysis of your current infrastructure and provide a detailed remediation plan.

This model is less about outsourcing tasks and more about insourcing expertise. You're buying strategic insights, not just engineering hours. It's a short-term, high-impact engagement designed to set your internal team up for long-term success.

Project-Based Delivery for Defined Outcomes

When you have a specific, well-defined technical objective with a clear start and end, a project-based model is the most efficient choice. This approach is optimal for initiatives where the scope, deliverables, and acceptance criteria can be clearly articulated upfront. The partner assumes full ownership of the project, from design and implementation to final delivery.

This model provides cost and timeline predictability, making it ideal for budget-constrained initiatives. You are purchasing a guaranteed outcome, not just engineering hours.

A project-based engagement is a strong fit for initiatives like:

- Full Kubernetes Migration: Migrating a legacy monolithic application from on-premise virtual machines to a managed Kubernetes service like Amazon EKS, including containerization, Helm chart creation, and CI/CD integration.

- Building a CI/CD Pipeline from Scratch: Designing and implementing a secure, multi-stage CI/CD pipeline using tools like GitLab CI, GitHub Actions, and Argo CD, complete with automated testing, security scanning, and progressive delivery patterns.

- Infrastructure as Code (IaC) Implementation: Converting an existing manually managed cloud environment into fully automated, modular Terraform or Pulumi code, managed in a version control system.

For example, a fintech company might use a project-based model to build its initial PCI DSS-compliant infrastructure on AWS. The scope is clear (e.g., "Deploy a three-tier web application architecture with encrypted data stores and strict network segmentation"), the outcome is measurable, and the partner is accountable for delivering a production-ready, auditable environment.

Staff Augmentation for Specialized Skills

Staff augmentation, or capacity extension, is a tactical model designed to fill specific skill gaps within your existing team. It involves embedding one or more specialized engineers who function as integrated members of your squad, reporting to your engineering managers and adhering to your internal development processes and workflows.

This is the most flexible model for accelerating your roadmap when you need specialized expertise that is difficult or time-consuming to hire for directly. It's about adding targeted engineering firepower to your team to increase velocity.

Here are scenarios where staff augmentation is the optimal solution:

- You require a senior Kubernetes engineer for six months to optimize cluster performance, implement a service mesh like Istio, and mentor your existing engineers on cloud-native best practices.

- Your team excels at application development but lacks deep expertise in Terraform and advanced cloud networking needed to build out a new multi-region architecture.

- You are adopting a GitOps workflow and need an Argo CD specialist to lead the implementation, set up the initial repositories, and train the team on the new deployment paradigm.

This hybrid model allows you to maintain full control over your project roadmap and architecture while accessing elite talent on demand. That same fintech company, after its initial project-based infrastructure build, could transition to a staff augmentation model, bringing in a DevOps engineer to manage daily operations and collaborate with developers on the new platform.

Vetting Vendors and Crafting a Bulletproof SLA

This is the most critical phase of the process, where technical due diligence must align with contractual precision. When you decide to outsource DevOps services, a polished sales presentation is irrelevant if the engineering team lacks the technical depth to manage your production systems.

The vetting process must be a rigorous technical evaluation, not a casual conversation. Ask specific, scenario-based questions that compel candidates to demonstrate their problem-solving methodology and real-world experience.

Probing for Real-World Technical Acumen

Avoid generic questions like "Do you have Kubernetes experience?" Instead, pose technical challenges that reveal their thought process.

- On Infrastructure as Code: "Describe a scenario where you encountered a Terraform state-locking issue in a collaborative environment. Detail the `terraform` commands you used to diagnose it, the steps you took to resolve the lock, and the long-term solution you implemented, such as using a remote backend with DynamoDB locking."

- On Container Orchestration: "Walk me through your preferred GitOps architecture for managing multi-cluster Kubernetes deployments. How do you structure your Git repositories for applications and infrastructure? How do you handle secrets management and progressive delivery strategies like canaries using tools like Argo CD with Argo Rollouts?"

- On CI/CD Pipelines: "Design a CI/CD pipeline for a microservices architecture that enforces security without creating bottlenecks. Where in the pipeline would you place SAST, DAST, and container vulnerability scanning stages? How would you configure quality gates to block a deployment if critical vulnerabilities are found?"

- On Observability: "You receive a PagerDuty alert for a 50% increase in p99 latency for your primary API, correlated with a spike in CPU usage in Grafana. Describe your step-by-step diagnostic process using logs, metrics, and traces to identify the root cause."

The goal is not to find a single "correct" answer but to assess their ability to reason through complex problems, articulate trade-offs, and draw on proven experience from managing production systems. A live whiteboarding session where the candidate designs a scalable and resilient cloud architecture is an invaluable vetting tool. For a more complete look, check out our guide on vendor management best practices.

Defining the Contract Statement of Work and SLA

Once you've identified a technically proficient partner, you must codify the engagement in a meticulous Statement of Work (SOW) and Service Level Agreement (SLA). These documents must be precise, eliminating all ambiguity and leaving no room for misinterpretation.

Global rates for outsourced DevOps can range from $20–$35/hour in some regions to $120–$200/hour in North America, often delivering 40–60% cost savings compared to an in-house hire. A 500-hour project at $35/hour in Eastern Europe might total $17,500—a fraction of a single US-based engineer's annual salary. With these economics, it's imperative that your SLA defines exactly what you receive for your investment.
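To make the arithmetic concrete, here is a small sketch that prices the same 500-hour project across the two rate bands quoted above. The band labels and the function name are illustrative, and real proposals will include costs beyond the hourly rate.

```python
# Hourly rate bands in USD, taken from the figures quoted above.
RATE_BANDS = {
    "lower-cost regions": (20, 35),
    "North America": (120, 200),
}

def project_cost_range(hours, band):
    """Return (low, high) total cost for a project of the given length."""
    low_rate, high_rate = band
    return hours * low_rate, hours * high_rate

for region, band in RATE_BANDS.items():
    low, high = project_cost_range(500, band)
    print(f"{region}: ${low:,} to ${high:,}")
# lower-cost regions: $10,000 to $17,500
# North America: $60,000 to $100,000
```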

Your SLA must be built on specific, measurable, and non-negotiable terms.

A well-architected SLA is your operational insurance policy. It defines success metrics, establishes penalties for non-compliance, and ensures both parties operate from a shared understanding of performance expectations.

Non-Negotiable SLA Components

Every SLA must include these components to protect your business and ensure service quality.

- Uptime Guarantees: Specify a minimum of 99.95% uptime for production environments, calculated monthly and excluding pre-approved maintenance windows.

- Incident Response Tiers: Define clear priority levels and response times. A P1 (critical production outage) requires <15-minute acknowledgment and <1-hour time to begin remediation, 24/7/365. P2 (degraded service) and P3 (minor issue) incidents should have correspondingly longer timeframes.

- Security and Compliance Mandates: Explicitly require vendor compliance with standards like SOC 2 Type II or ISO 27001. Mandate background checks for all personnel and specify data handling protocols.

- Intellectual Property Clause: The contract must unequivocally state that all work product—including all code, scripts, configurations, and documentation—is the exclusive intellectual property of your company.

- Change Management Process: Define a strict change management protocol. Every infrastructure change must be executed via an Infrastructure as Code pull request, which must be reviewed and approved by your internal engineering lead before being merged.

- Exit Strategy and Knowledge Transfer: The contract must outline a comprehensive offboarding process, including a mandatory knowledge transfer period where all documentation, runbooks, credentials, and system access are cleanly transitioned back to your team.
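Two of these terms translate directly into numbers you can check. The sketch below converts an uptime percentage into a monthly downtime budget and tests a P1 incident against the response limits above. It assumes a 30-day month and that both P1 limits are measured from the moment the alert fires; your contract should state both explicitly. All names are illustrative.

```python
from datetime import datetime, timedelta
from decimal import Decimal

def allowed_downtime_minutes(uptime_pct, days_in_month=30):
    """Minutes of downtime permitted per month at the given uptime percentage.

    uptime_pct is passed as a string (e.g. "99.95") to avoid float rounding.
    """
    total_minutes = Decimal(days_in_month * 24 * 60)
    return total_minutes * (Decimal(100) - Decimal(uptime_pct)) / Decimal(100)

# Assumption: both limits start counting when the alert fires.
P1_ACK_LIMIT = timedelta(minutes=15)
P1_REMEDIATION_LIMIT = timedelta(hours=1)

def p1_within_sla(alerted_at, acknowledged_at, remediation_started_at):
    """True if a P1 incident met both the acknowledgment and remediation limits."""
    return (
        acknowledged_at - alerted_at < P1_ACK_LIMIT
        and remediation_started_at - alerted_at < P1_REMEDIATION_LIMIT
    )

# 99.95% of a 30-day month leaves about 21.6 minutes of downtime.
print(allowed_downtime_minutes("99.95"))

alert = datetime(2025, 1, 1, 2, 0)
print(p1_within_sla(alert, alert + timedelta(minutes=10), alert + timedelta(minutes=45)))  # True
```

Seeing that 99.95% leaves only about 21.6 minutes per month makes it easier to judge whether the vendor's on-call coverage can realistically meet the guarantee.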

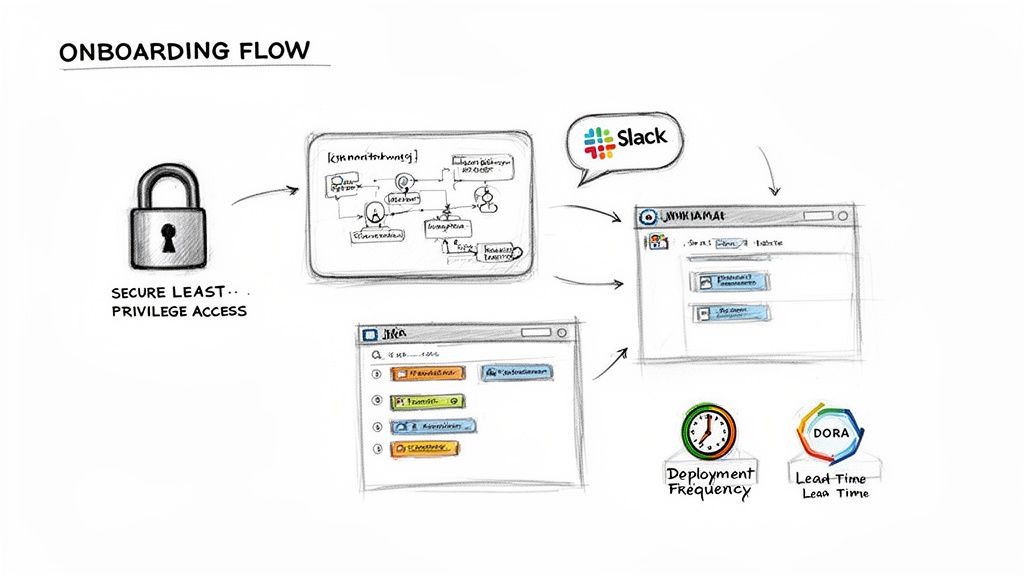

Onboarding and Managing Your Outsourced Team

Signing the contract is merely the beginning. The success of your decision to outsource DevOps services hinges on the effectiveness of your onboarding and integration process. This initial phase sets the operational tempo for the entire engagement.

Onboarding should be a structured, security-first process that embeds your new partners in your daily engineering workflow and gives them the context they need to be effective.

The first priority is access provisioning, governed by the principle of least privilege. Your outsourced engineers must be granted only the minimum permissions required to perform their duties. This means creating specific IAM roles in your cloud environment, granting role-based access control (RBAC) in Kubernetes, and providing access only to necessary code repositories. Never grant broad administrative privileges.
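A quick way to catch overly broad grants before they are attached to a vendor role is to scan the policy document for wildcard actions. The sketch below is a crude heuristic, not a full policy analyzer: it assumes the standard IAM JSON policy layout (`Statement`, `Effect`, `Action`) loaded into a Python dict, and the bucket name and `Sid` values are made up.

```python
def _as_list(value):
    """IAM allows a single string or a list for Action; normalize to a list."""
    return [value] if isinstance(value, str) else list(value)

def overly_broad_statements(policy):
    """Return Allow statements that grant '*' or a service-wide 'service:*' action."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear as an object
        statements = [statements]
    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        if any(a == "*" or a.endswith(":*") for a in actions):
            flagged.append(stmt)
    return flagged

# Hypothetical policy proposed for an outsourced engineer's role.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "ReadArtifacts", "Effect": "Allow",
         "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::example-artifacts/*"},
        {"Sid": "TooBroad", "Effect": "Allow", "Action": "*", "Resource": "*"},
    ],
}

for stmt in overly_broad_statements(policy):
    print("Review before granting:", stmt["Sid"])  # Review before granting: TooBroad
```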

To streamline this process, adopt established remote onboarding best practices. A structured checklist-driven approach ensures consistency and completeness from day one.

Establishing a Communications Framework

Effective management requires a robust communication framework that fosters transparency and collaboration. The objective is to integrate the outsourced team so they function as a genuine extension of your internal team, not as a disconnected third party.

Achieve this with a combination of synchronous and asynchronous tools:

- Shared Slack Channels: Create dedicated channels for specific projects or operational domains (e.g., `#devops-infra`, `#k8s-cluster-prod`). This ensures focused, searchable communication.

- Daily Stand-ups: A mandatory 15-minute daily video call is essential for identifying blockers, aligning on priorities, and building team cohesion.

- Shared Project Boards: Use a single project management tool like Jira or Asana for all work. A unified backlog and Kanban board create a single source of truth for work in progress.

Knowledge transfer must be an active, not passive, process. Schedule live walkthroughs of your architecture using diagrams from tools like Lucidchart or Diagrams.net. Review operational runbooks together, ensuring they detail not just the "how" but also the "why" of a process and provide clear remediation steps.

Measuring Performance with DORA Metrics

Once the team is operational, your focus must shift to objective performance measurement. Gut feelings are insufficient. Use the industry-standard DORA (DevOps Research and Assessment) metrics to get a data-driven view of your software delivery performance.

These four key metrics provide a clear, quantitative assessment of your engineering velocity and stability:

- Deployment Frequency: How often is code successfully deployed to production? Elite teams deploy on-demand, multiple times a day.

- Lead Time for Changes: What is the median time from code commit to production deployment? This measures the efficiency of your entire CI/CD pipeline.

- Change Failure Rate: What percentage of production deployments result in a degraded service or require remediation (e.g., rollback, hotfix)?

- Time to Restore Service: What is the median time to restore service after a production failure? This is a direct measure of your system's resilience.
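All four metrics can be derived from a simple deployment log. The sketch below assumes each deployment record carries a commit time, a deploy time, a failure flag, and a restore time. As a simplification, time to restore is measured from the failed deployment rather than from when the failure was detected. The `Deployment` record and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None

def _median_delta(deltas):
    """Median of a list of timedeltas, computed on seconds."""
    return timedelta(seconds=median(d.total_seconds() for d in deltas))

def dora_summary(deployments, period_days):
    """Compute the four DORA metrics for deployments made within period_days."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time": _median_delta([d.deployed_at - d.committed_at for d in deployments]),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": _median_delta(restores) if restores else None,
    }

# Hypothetical two-day window with four deployments, one of which failed.
day1 = datetime(2025, 6, 2, 9, 0)
day2 = day1 + timedelta(days=1)
deployments = [
    Deployment(day1, day1 + timedelta(hours=1)),
    Deployment(day1, day1 + timedelta(hours=2)),
    Deployment(day2, day2 + timedelta(hours=3), failed=True,
               restored_at=day2 + timedelta(hours=3, minutes=30)),
    Deployment(day2, day2 + timedelta(hours=6)),
]
summary = dora_summary(deployments, period_days=2)
print(summary["change_failure_rate"])  # 0.25
print(summary["median_lead_time"])     # 2:30:00
```

In practice the records would come from your CI/CD system and incident tracker; the calculation itself stays this simple.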

Tracking DORA metrics transforms performance conversations from subjective ("Are you busy?") to objective ("Are we delivering value faster and more reliably?"). It aligns both your internal and outsourced teams around the same measurable outcomes.

This data-driven approach fosters a culture of continuous improvement. In weekly or bi-weekly reviews, use these metrics to identify bottlenecks. A high Change Failure Rate might indicate insufficient automated testing coverage. A long Lead Time for Changes could point to inefficiencies in the code review or QA process. Your outsourced partner's responsibility is not just to maintain the status quo but to proactively identify and implement improvements that positively impact these key metrics.

Common Pitfalls in DevOps Outsourcing

Even with a technically proficient partner, several common pitfalls can derail a DevOps outsourcing engagement, leading to budget overruns and timeline delays. Awareness of these risks is the first step toward mitigating them.

The most common failure mode is treating the outsourced team as a "black box" vendor—providing a high-level requirements document and expecting a perfect solution without further interaction. This hands-off approach guarantees a disconnect. The team lacks the business context and technical nuance needed to make optimal decisions, resulting in a solution that is technically functional but misaligned with business needs.

The solution is deep integration. Include them in daily stand-ups, architectural design sessions, and relevant Slack channels. This fosters a sense of ownership and transforms them from a service provider into a true partner.

Vague Scope and the Slow Burn of Creep

An ambiguous scope is a primary cause of project failure. Vague requirements like "build a CI/CD pipeline" or "manage our Kubernetes cluster" are invitations for scope creep, where a series of small, undocumented requests incrementally derail the project's timeline and budget.

Apply the same rigor to infrastructure tasks as you do to application features.

- Write User Stories for Infrastructure: Frame every task as a user story with a clear outcome. For example: "As a developer, I need a CI pipeline that automatically runs unit and integration tests and deploys my feature branch to a dynamic staging environment so I can get rapid feedback."

- Define Clear Acceptance Criteria: Specify what "done" means in measurable, testable terms. For the pipeline story, acceptance criteria might include: "The pipeline must complete in under 10 minutes," "The deployment must succeed without manual intervention," and "A notification with a link to the staging environment is posted to Slack."

This level of precision eliminates ambiguity and ensures alignment on deliverables for every task.
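Acceptance criteria written this precisely can be checked mechanically. The sketch below encodes the three example criteria as tests against a record of a pipeline run; the `PipelineRun` fields are assumptions about what your CI system can report, not a real API.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional

MAX_DURATION = timedelta(minutes=10)

@dataclass
class PipelineRun:
    duration: timedelta
    manual_interventions: int
    slack_link: Optional[str]  # link to the staging environment posted to Slack, if any

def unmet_criteria(run):
    """Return the acceptance criteria this pipeline run failed to meet."""
    unmet = []
    if run.duration >= MAX_DURATION:
        unmet.append("pipeline must complete in under 10 minutes")
    if run.manual_interventions > 0:
        unmet.append("deployment must succeed without manual intervention")
    if not run.slack_link:
        unmet.append("staging link must be posted to Slack")
    return unmet

# Hypothetical run: fast and fully automated, but no Slack notification.
run = PipelineRun(duration=timedelta(minutes=8), manual_interventions=0, slack_link=None)
print(unmet_criteria(run))  # ['staging link must be posted to Slack']
```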

A vague Statement of Work is an open invitation for budget overruns. Getting crystal clear on deliverables isn't just good practice—it's your best defense against surprise costs and delays.

Forgetting About Security Until It’s an Emergency

Another critical error is treating security as a final-stage gate rather than an integrated part of the development lifecycle. Bolting on security after the fact is invariably more costly, less effective, and often requires significant architectural rework.

This risk is amplified when you outsource DevOps services, as you are granting access to your core infrastructure. The DevOps market is projected to reach $86.16 billion by 2034, with DevSecOps—the integration of security into DevOps practices—being a major driver. Gartner predicts that by 2027, 80% of organizations will have full DevOps toolchains where security is a mandatory, automated component. You can dive deeper into these DevOps market statistics on Programs.com.

Integrate security from day one. Make it a key part of your vendor vetting process and codify requirements in the SLA. Enforce the principle of least privilege for all access. Mandate vulnerability scanning (SAST, DAST, and container scanning) within the CI/CD pipeline. Require that every infrastructure change undergoes a security-focused peer review as part of the pull request process.
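Mandated scanning only helps if the pipeline acts on the results. Here is a minimal sketch of a gate step, assuming the scanners' output has already been normalized into a list of findings with `scanner`, `id`, and `severity` fields. That format, the IDs, and the choice to block only on critical findings are all assumptions to adapt to your own tooling and policy.

```python
# Severities that should stop a deployment; tighten or loosen per your policy.
BLOCKING_SEVERITIES = {"CRITICAL"}

def blocking_findings(findings, blocking=BLOCKING_SEVERITIES):
    """Return the findings whose severity should block the deployment."""
    return [f for f in findings if f["severity"].upper() in blocking]

def gate_exit_code(findings):
    """Print each blocker and return 1 if any exist, else 0."""
    blockers = blocking_findings(findings)
    for f in blockers:
        print(f"BLOCKED by {f['scanner']}: {f['id']} ({f['severity']})")
    return 1 if blockers else 0

# Hypothetical normalized results from SAST and container scans.
findings = [
    {"scanner": "sast", "id": "EXAMPLE-001", "severity": "medium"},
    {"scanner": "container", "id": "EXAMPLE-002", "severity": "critical"},
]
exit_code = gate_exit_code(findings)  # prints one BLOCKED line; exit_code is 1
```

In a CI job, passing the result to `sys.exit` makes the stage fail and halts the deployment.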

DevOps Outsourcing FAQ

Engaging an external DevOps partner raises valid questions around security, cost, and control. Here are direct answers to the most common concerns from engineering leaders.

How Do You Actually Keep Things Secure When Outsourcing?

Security is achieved through a multi-layered strategy of technical controls and contractual obligations, not trust alone. Vetting starts with verifying the vendor's own security posture, such as SOC 2 or ISO 27001 compliance.

Operationally, enforce the principle of least privilege using granular IAM roles and Kubernetes RBAC. All access must be brokered through a VPN with mandatory multi-factor authentication (MFA) using hardware tokens. Secrets must be managed centrally in a tool like HashiCorp Vault or AWS Secrets Manager, not stored in code or environment variables.

All security protocols, data handling requirements, and the incident response plan must be explicitly defined in your Service Level Agreement (SLA). This is a non-negotiable contractual requirement.

Finally, security must be automated within the development lifecycle. Implement automated security scanning (SAST/DAST) and software composition analysis (SCA) as mandatory stages in all CI/CD pipelines to catch vulnerabilities before they reach production.

What’s the Real Cost Structure for Outsourced DevOps?

The cost model depends on the engagement type, typically falling into one of three categories:

- Staff Augmentation: A fixed monthly or hourly rate per engineer. Rates vary based on seniority and geographic location.

- Project-Based Work: A fixed price for a project with a clearly defined scope and deliverables, such as "Implement a production-ready EKS cluster based on our reference architecture."

- Advisory Services: A monthly retainer for strategic guidance, architectural reviews, and high-level planning, not day-to-day execution.

Demand complete pricing transparency. The proposal must clearly itemize all costs and explicitly state what is included (e.g., project management overhead, access to senior architects) to prevent unexpected charges.

How Do I Keep Control Over My Own Infrastructure?

You maintain control through process and technology, not micromanagement. The fundamental rule is: 100% of infrastructure changes must be implemented via Infrastructure as Code (e.g., Terraform, Pulumi) and submitted as a pull request to a Git repository.

This pull request must be reviewed and approved by your internal engineering team before it can be merged and applied. Direct console access for making changes should be forbidden. With branch protection enabled, this GitOps workflow provides a complete, reviewable audit trail of every change to your environment. Combined with shared observability dashboards from tools like Grafana or Datadog, it can give you as much control and real-time visibility as an in-house team would have, and often more.
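The approval rule itself is simple enough to express in a few lines. The sketch below assumes a pull request is represented as a dict with an `author` and a list of `approved_by` usernames. Most Git platforms can enforce the same rule natively through branch protection, so this only illustrates the logic; the usernames are invented.

```python
def can_merge(pull_request, internal_reviewers):
    """True if at least one internal reviewer, other than the author, approved."""
    approvers = set(pull_request["approved_by"]) - {pull_request["author"]}
    return bool(approvers & set(internal_reviewers))

# Hypothetical usernames for your in-house engineering leads.
internal_reviewers = {"alice", "bob"}

pr = {"author": "vendor-eng-1", "approved_by": ["vendor-eng-2"]}
print(can_merge(pr, internal_reviewers))  # False: no internal approval yet

pr["approved_by"].append("alice")
print(can_merge(pr, internal_reviewers))  # True
```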
