A Developer’s Guide to Feature Toggle Management

A technical guide to feature toggle management. Learn to decouple deployment from release, implement advanced patterns, and avoid toggle debt in your projects.

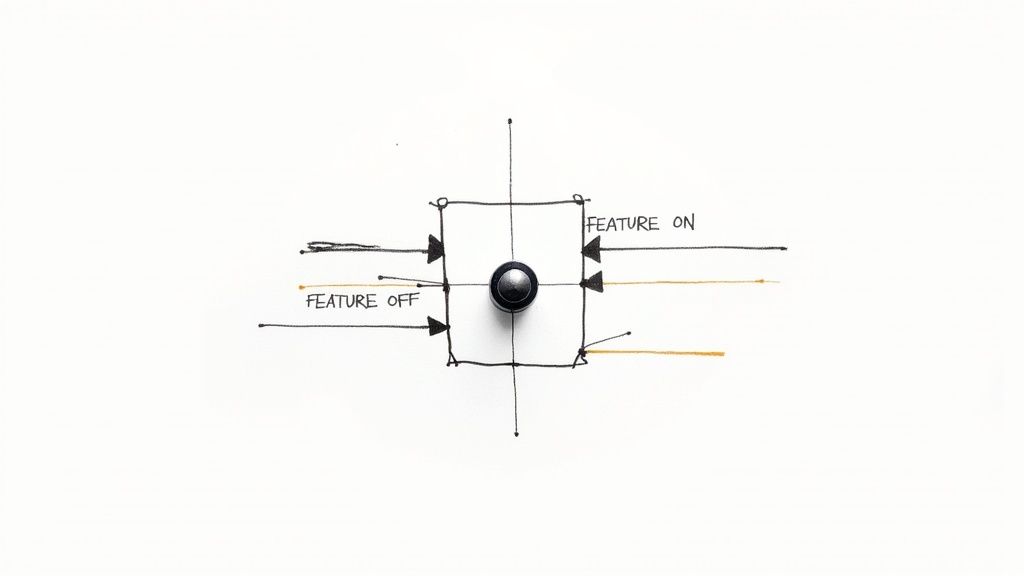

At its core, feature toggle management is a software development technique that allows teams to modify system behavior without changing or deploying code. It uses conditional logic—the feature toggles or flags—to control which code paths are executed at runtime. This provides a crucial safety net and enables granular, strategic control over feature releases.

From Simple Toggles to Strategic Control

In its most basic form, a feature toggle is an if/else statement in the codebase that checks a condition, such as a boolean value in a configuration file or an environment variable. While simple, this approach becomes unmanageable at scale, leading to configuration drift and high maintenance overhead.
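As a minimal sketch of that basic form, the snippet below reads a boolean from an environment variable. The variable name `FEATURE_NEW_CHECKOUT` and the helper `env_flag` are hypothetical, chosen only for illustration.

```python
import os

def env_flag(name: str, default: bool = False) -> bool:
    """Read a boolean toggle from an environment variable (hypothetical helper)."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}

def checkout_flow() -> str:
    # The toggle is a plain if/else around two code paths.
    if env_flag("FEATURE_NEW_CHECKOUT"):
        return "new checkout"
    return "legacy checkout"
```

With this approach, changing the value typically means a restart or redeploy, and every service keeps its own copy of the setting, which is where the drift tends to creep in.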

This is where true feature toggle management comes into play. It elevates these simple conditional statements into a sophisticated, centralized system for managing the entire lifecycle of a feature. It transforms from a developer's convenience into a strategic asset for the entire organization, enabling complex release strategies and operational control.

Decoupling Deployment from Release

The most profound impact of feature toggle management is the decoupling of deployment from release. This is a foundational concept in modern DevOps and continuous delivery that fundamentally alters the software delivery lifecycle.

- Deployment: The technical process of pushing new code commits into a production environment. The new code is live but may be inactive, hidden behind a feature toggle.

- Release: The business decision to activate a feature, making it visible and available to a specific set of users. This is controlled via the toggle management system, not a new code deployment.

With a robust management system, engineers can continuously merge and deploy feature branches wrapped in toggles to the production environment. The code sits "dark"—inactive and isolated—until a product manager or release manager decides to activate it. They can then enable the feature from a central dashboard for specific user segments, often without requiring any engineering intervention.

By splitting these two actions, you completely eliminate the high-stakes, all-or-nothing drama of traditional "release days." Deployment becomes a low-risk, routine event. The actual release transforms into a flexible, controlled business move.

This separation is a cornerstone of modern, agile development. Feature toggles are now critical for shipping software safely and quickly. A recent analysis found that organizations implementing this technique saw an 89% reduction in deployment-related incidents. This highlights the power of this method for mitigating risk and enabling incremental rollouts. You can discover more insights about the benefits of feature flags on NudgeNow.com.

To truly grasp the power of this approach, we need to understand the core principles that separate it from simple if/else blocks.

Core Tenets of Strategic Feature Toggle Management

The table below breaks down the key principles that transform basic flags into a strategic management practice.

| Principle | Technical Implication | Business Impact |
|---|---|---|
| Centralized Control | Toggles are managed from a unified UI/API, not scattered across config files or environment variables. This creates a single source of truth. | Empowers non-technical teams (Product, Marketing) to control feature releases and experiments, reducing developer dependency. |
| Dynamic Targeting | The system evaluates toggles against a user context object (e.g., { "key": "user-id-123", "attributes": { "location": "DE", "plan": "premium" } }) in real-time. | Enables canary releases, phased rollouts, A/B testing, and personalized user experiences based on any user attribute. |
| Kill Switch | An immediate, system-wide mechanism to disable a feature instantly by changing the toggle's state to false. | Drastically reduces Mean Time to Recovery (MTTR) for incidents. It isolates the problematic feature without requiring a code rollback or hotfix deployment. |
| Audit Trail | A complete, immutable log of who changed which flag's state or targeting rules, when, and from what IP address. | Provides governance, accountability, and a crucial debugging history, essential for compliance in regulated industries. |
| Lifecycle Management | A formal process for tracking, managing, and eventually removing stale toggles from the codebase and the management platform. | Prevents technical debt accumulation, reduces codebase complexity, and ensures the system remains maintainable. |

Embracing these tenets is what moves a team from simply using flags to strategically managing features.

The Mixing Board Analogy

I like to think of a good feature toggle management system as a sound engineer's mixing board for your application. Every feature is its own channel on the board, giving you incredibly fine-grained control.

- Adjust levels: You can gradually roll out a feature, starting with just 1% of your user traffic, then ramp it up to 10%, 50%, and finally 100%.

- Mute a channel: If a feature starts causing performance degradation or errors, you can hit the "kill switch" and instantly disable it for everyone, without needing a panicked hotfix or rollback.

- Create special mixes: Want to release a feature only to your internal QA team? Or maybe just to premium subscribers in Europe? You define a specific segment (a "mix") for that audience.

This level of control fundamentally changes how you build and deliver software. It turns what used to be risky, stressful product launches into predictable, data-driven processes.
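The "adjust levels" idea is commonly implemented with deterministic bucketing. Here is a minimal sketch, assuming users are identified by a string key; the function names `bucket` and `in_rollout` are hypothetical and not taken from any particular SDK.

```python
import hashlib

def bucket(flag_key: str, user_key: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag_key}:{user_key}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100

def in_rollout(flag_key: str, user_key: str, percentage: int) -> bool:
    """Return True when the user's bucket falls below the rollout percentage."""
    return bucket(flag_key, user_key) < percentage
```

Because the bucket depends only on the flag and user keys, a user who is included at 10% stays included when you ramp to 50%, and hashing the flag key into the mix keeps different features from always hitting the same early users.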

Anatomy of a Feature Toggle System

If you really want to get a handle on feature toggle management, you have to look under the hood at the technical architecture. It's so much more than a simple if statement. A solid system is a blend of several distinct parts, all working together to give you dynamic control over your application's features.

The first mental shift is to stop thinking about toggles as isolated bits of code and start seeing them as a complete, integrated system. Thinking through successful system integration steps is a great primer here, because every piece of your toggle system needs to communicate flawlessly with the others.

Core Architectural Components

A complete feature toggle system really boils down to four key parts. Each one has a specific job in the process of defining, evaluating, and controlling the flags across your apps.

- Management UI: This is the command center, the human-friendly dashboard. It’s where your developers, product managers, and other teammates can go to create new flags, define targeting rules, and monitor their state—all without needing to write or deploy a single line of code.

- Toggle Configuration Store: Think of this as the "source of truth" for all your flags. It's a high-availability, low-latency database or dedicated configuration service (like etcd or Consul) that holds the definitions, rules, and states for every single toggle.

- Application SDK: This is a small, lightweight library you integrate directly into your application's codebase (e.g., a Maven dependency in Java, an npm package in Node.js). Its job is to efficiently fetch toggle configurations from the store, cache them locally, and provide a simple API for evaluation (e.g., client.isEnabled('my-feature')).

- Evaluation Engine: This is the heart of the whole operation. The engine takes a flag's rules (from the store) and the current user context and makes the final boolean decision: on or off. This logic can run either on the server or the client.

These components aren't just siloed parts; they're in constant conversation. A product manager flips a switch in the Management UI, which immediately saves the new rule to the Configuration Store. The SDK in your live application, often connected via a streaming connection (like Server-Sent Events), picks up this change in milliseconds and passes it to the Evaluation Engine, which then makes the decision that shapes the user's experience in real-time.
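To make that conversation between components concrete, here is a rough in-memory sketch. Everything in it (`ConfigStore`, `ToggleClient`, `evaluate`, and the `plans` rule format) is a hypothetical stand-in; a real platform would use a networked store and a vendor SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Dict

@dataclass
class ConfigStore:
    """Stand-in for the toggle configuration store (source of truth)."""
    flags: Dict[str, Dict[str, Any]] = field(default_factory=dict)

    def save(self, key: str, rule: Dict[str, Any]) -> None:
        # In a real system the management UI would write here.
        self.flags[key] = dict(rule)

    def snapshot(self) -> Dict[str, Dict[str, Any]]:
        return {k: dict(v) for k, v in self.flags.items()}


def evaluate(rule: Dict[str, Any], context: Dict[str, Any]) -> bool:
    """Evaluation engine: combine a flag's rule with the user context."""
    if not rule.get("enabled", False):
        return False
    allowed_plans = rule.get("plans")
    if allowed_plans is None:
        return True  # no targeting rule: on for everyone
    return context.get("attributes", {}).get("plan") in allowed_plans


class ToggleClient:
    """Stand-in for the application SDK: caches rules and exposes is_enabled."""

    def __init__(self, store: ConfigStore) -> None:
        self._store = store
        self._cache: Dict[str, Dict[str, Any]] = {}
        self.refresh()

    def refresh(self) -> None:
        # A real SDK might receive this via polling or a streaming connection.
        self._cache = self._store.snapshot()

    def is_enabled(self, key: str, context: Dict[str, Any], default: bool = False) -> bool:
        rule = self._cache.get(key)
        return default if rule is None else evaluate(rule, context)
```

Note that the client evaluates against its cached copy, so a rule saved to the store only takes effect after the next `refresh()`. That is the gap a streaming connection is meant to shrink.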

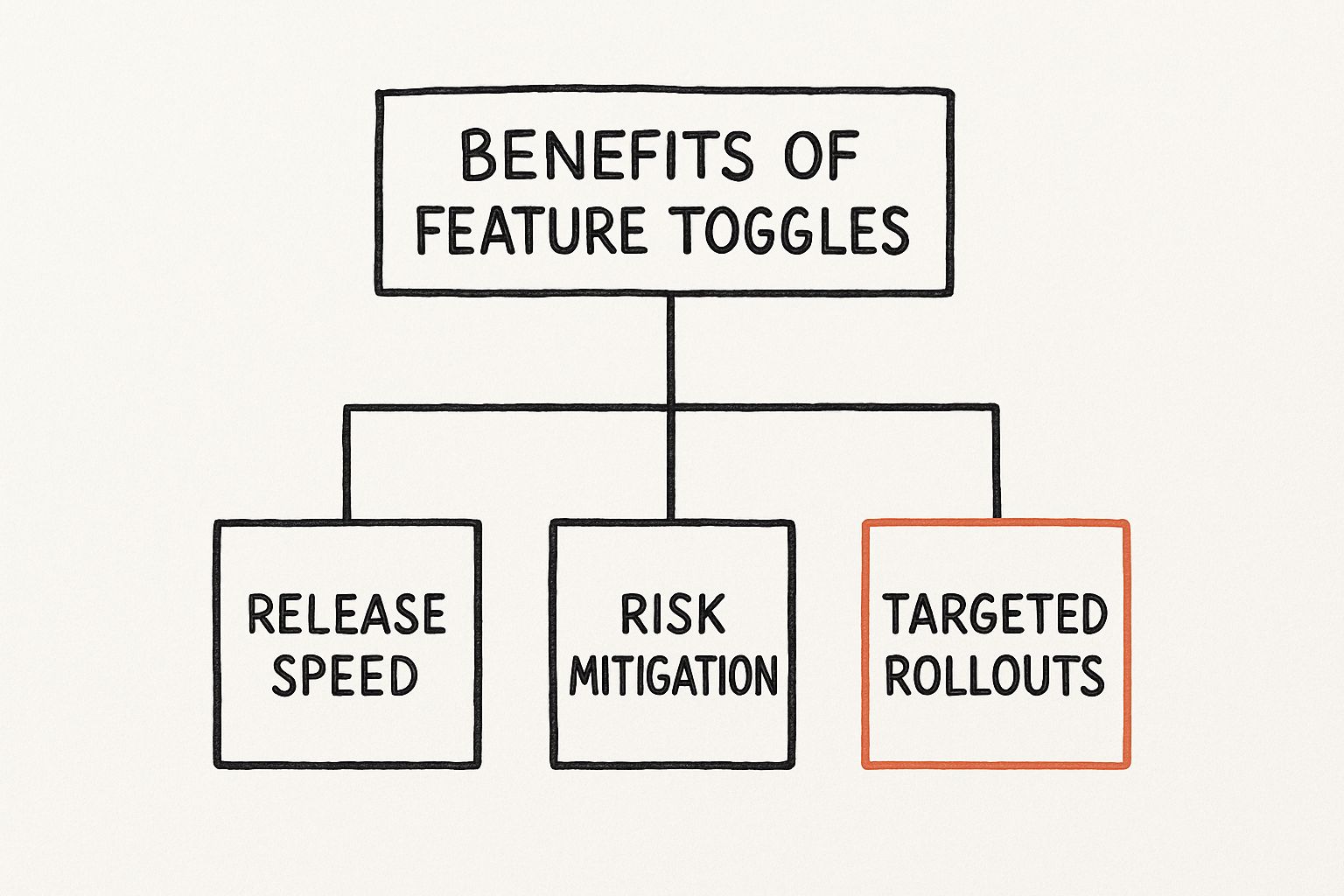

This infographic does a great job of showing how these technical pieces, when working correctly, create tangible business value like faster releases and safer, more targeted rollouts.

When the architecture is sound, you get a clear line from technical capability to direct business wins.

Server-Side vs. Client-Side Evaluation

One of the most critical architectural decisions is where the Evaluation Engine executes. This choice has significant implications for performance, security, and the types of use cases your toggles can support.

The location of your evaluation logic—whether on your servers or in the user's browser—fundamentally dictates the power and security of your feature flagging strategy. It's one of the most important technical choices you'll make when adopting a feature toggle management platform.

Let's break down the two main models.

Server-Side Evaluation

In this model, the decision-making happens on your backend. Your application's SDK communicates with the feature flag service, receives the rules, and evaluates the toggle state before rendering a response to the client.

- Security: This is the most secure model. Since the evaluation logic and all feature variations reside on your trusted server environment, sensitive business rules and configuration data are never exposed to the client. It's the only choice for toggles controlling access to paid features or sensitive data pathways.

- Performance: There can be a minimal latency cost during the request-response cycle as the flag is evaluated. However, modern SDKs mitigate this with in-memory caching, reducing evaluation time to microseconds for subsequent checks.

Client-Side Evaluation

With this approach, the evaluation happens on the user's device—typically within the web browser using a JavaScript SDK or a native mobile SDK. The SDK fetches the relevant rules and makes the decision locally.

- Flexibility: It's ideal for dynamic UI/UX changes that shouldn't require a full page reload, such as toggling React components or altering CSS styles in response to user interaction.

- Security: This is the trade-off. Because the toggle rules are downloaded to the client, a technically savvy user could inspect the browser's network traffic or memory to view them. Therefore, this model is unsuitable for any toggle that gates secure or sensitive functionality.

Most mature engineering organizations use a hybrid approach, selecting the evaluation model based on the specific use case: server-side for security-sensitive logic and client-side for dynamic UI modifications.
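One way to combine the two models, sketched here under assumptions, is to evaluate flags on the server and hand the browser only the resulting booleans. The `RULES` table and `evaluate_for_client` are hypothetical names for illustration, not part of any specific platform.

```python
from typing import Any, Dict

# Hypothetical server-side rules; these never leave the backend.
RULES: Dict[str, Dict[str, Any]] = {
    "advanced-analytics": {"plans": {"premium"}},
    "new-dashboard-layout": {"plans": {"free", "premium"}},
}

def evaluate_for_client(context: Dict[str, Any]) -> Dict[str, bool]:
    """Evaluate every flag on the server and return only the boolean results."""
    plan = context.get("attributes", {}).get("plan")
    return {key: plan in rule["plans"] for key, rule in RULES.items()}
```

The client can still toggle UI components from this payload, but it never sees which plans or attributes unlock a feature. Anything security-sensitive should still be enforced again on the server when the request arrives.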

Implementing Strategic Toggle Patterns

Alright, let's move from theory to implementation. Applying feature toggles effectively isn't about using a one-size-fits-all flag for every problem. It's about implementing specific, well-defined patterns.

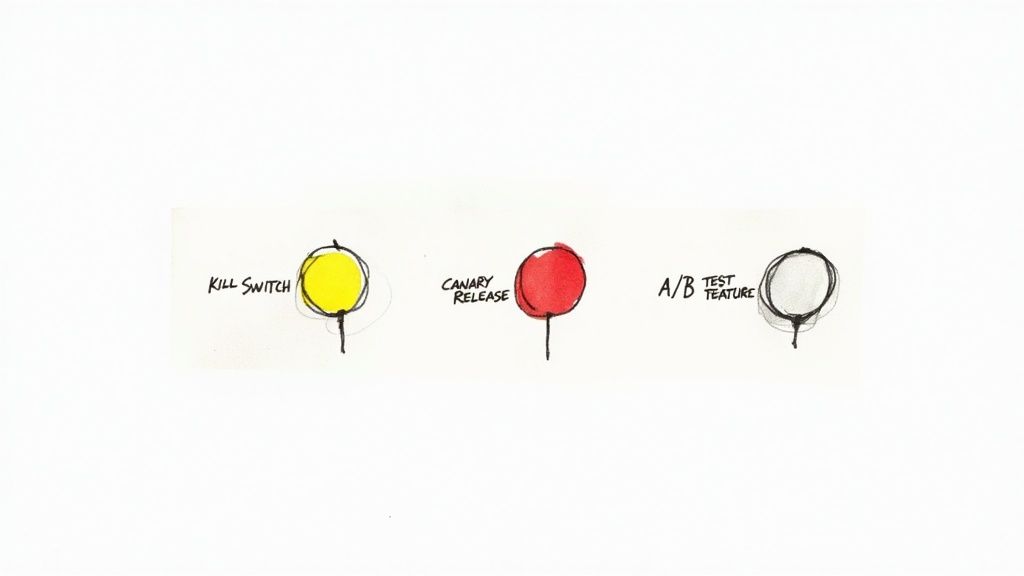

Categorizing your toggles by pattern defines their purpose, expected lifespan, and associated risk. This clarity is essential for maintainability. Let's dissect the four primary patterns with practical code examples.

Release Toggles for Trunk-Based Development

Release Toggles are the workhorses of Continuous Integration and Trunk-Based Development. Their purpose is to hide incomplete or unverified code paths from users, allowing developers to merge feature branches into main frequently without destabilizing the application.

These toggles are, by definition, temporary. Once the feature is fully released and stable, the toggle and its associated dead code path must be removed.

JavaScript Example:

Imagine you’re integrating a new charting library into a dashboard. The code is being merged in pieces but is not ready for production traffic.

```javascript
// './feature-flag-client' stands in for whichever flag SDK wrapper your project uses.
import featureFlags from './feature-flag-client';

function renderDashboard(user) {
  // Render existing dashboard components...

  // 'new-charting-library' is a short-lived Release Toggle.
  // The 'user' object provides context for the evaluation engine.
  if (featureFlags.isEnabled('new-charting-library', { user })) {
    renderNewChartingComponent(); // New code path under development
  } else {
    renderOldChartingComponent(); // Old, stable code path
  }
}
```

This pattern is fundamental to decoupling deployment from release and maintaining a healthy, fast-moving development pipeline.

Experiment Toggles for A/B Testing

Experiment Toggles are designed for data-driven decision-making. They enable you to expose multiple variations of a feature to different user segments simultaneously and measure the impact on key performance indicators (KPIs).

For example, you might want to test if a new checkout flow (variation-b) improves conversion rates compared to the current one (variation-a). An Experiment Toggle would serve each variation to 50% of your user traffic, while you monitor conversion metrics for each group.

Experiment Toggles transform feature releases from guesswork into a scientific process. They provide quantitative data to validate that a new feature not only works but also delivers its intended business value.
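A minimal sketch of the mechanics, assuming a two-way split and a stream of (variation, converted) events; `assign_variation` and `conversion_rates` are hypothetical helpers, and a real experiment would also need a significance test before declaring a winner.

```python
import hashlib
from collections import Counter

VARIATIONS = ["variation-a", "variation-b"]

def assign_variation(experiment_key: str, user_key: str) -> str:
    """Deterministically assign a user to one of two equally weighted variations."""
    digest = hashlib.sha256(f"{experiment_key}:{user_key}".encode("utf-8")).digest()
    return VARIATIONS[digest[0] % len(VARIATIONS)]

def conversion_rates(events):
    """Compute conversion rate per variation from (variation, converted) pairs."""
    exposures, conversions = Counter(), Counter()
    for variation, converted in events:
        exposures[variation] += 1
        conversions[variation] += int(converted)
    return {v: conversions[v] / exposures[v] for v in exposures}
```

Deterministic assignment matters here: a user who bounces between variations on each visit would contaminate both groups.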

Tech giants live and breathe this stuff. Facebook, for instance, reportedly juggles over 10,000 active feature flags to run countless experiments and rollouts simultaneously. Companies like Netflix use the same approach to fine-tune every part of the user experience. It's how they iterate at a massive scale.

Ops Toggles for Operational Control

Ops Toggles, often called "kill switches," are a critical infrastructure safety mechanism. Their purpose is to provide immediate operational control over system behavior, allowing you to disable a feature in production if it's causing issues like high latency, excessive error rates, or infrastructure overload.

Instead of a frantic, middle-of-the-night hotfix or a full rollback, an on-call engineer can simply disable the toggle to mitigate the incident instantly. This has a massive impact on Mean Time to Recovery (MTTR). These toggles are often long-lived, remaining in the codebase to provide ongoing control over high-risk or resource-intensive system components. A guide like this SaaS Operations Management: Your Complete Success Playbook can provide great context on where this fits in.
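In code, an Ops Toggle usually wraps the risky component and falls back to a degraded but safe response. The sketch below assumes a recommendations feature and an in-memory flag dictionary; both are hypothetical placeholders for your own component and SDK.

```python
from typing import Dict, List

# Hypothetical in-memory flag state; a real system would read this from the SDK.
OPS_FLAGS: Dict[str, bool] = {"ops-recommendations-enabled": True}

def expensive_recommendations(user_key: str) -> List[str]:
    # Placeholder for a resource-intensive call (e.g., a downstream service).
    return [f"item-for-{user_key}"]

def get_recommendations(user_key: str) -> List[str]:
    """Serve recommendations unless the ops toggle has been switched off."""
    if not OPS_FLAGS.get("ops-recommendations-enabled", False):
        return []  # degraded but safe response while the incident is handled
    return expensive_recommendations(user_key)
```

The design choice worth copying is the fallback: the page still renders with an empty list, so flipping the switch degrades one widget rather than the whole request.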

Permission Toggles for User Entitlements

Permission Toggles manage access to features based on user attributes, such as subscription tier, role, or beta program membership. They are used to implement tiered pricing plans, grant access to administrative tools, or manage entitlement for specific customer segments.

These toggles are almost always permanent as they are an integral part of the application's business logic and authorization model.

Python Example:

Let's say you have an advanced reporting feature that's only for "premium" plan subscribers. A Permission Toggle handles that logic cleanly.

```python
# 'feature_flags' stands in for whichever flag SDK module your project uses.
import feature_flags

def generate_advanced_report(user_context):
    # 'advanced-analytics' is a long-lived Permission Toggle.
    # The evaluation is based on the 'plan' attribute in the user_context dictionary.
    if feature_flags.is_enabled('advanced-analytics', context=user_context):
        # Logic to generate and return the premium report
        return {"status": "success", "report_data": "..."}
    else:
        # Logic for users without permission, e.g., an upsell message
        return {"status": "error", "message": "Upgrade to a premium plan for access."}

# Example user context passed to the function
premium_user = {"key": "user-123", "attributes": {"plan": "premium"}}
free_user = {"key": "user-456", "attributes": {"plan": "free"}}

generate_advanced_report(premium_user)  # Returns success
generate_advanced_report(free_user)     # Returns error
```

Implementing these distinct patterns is the first step toward building a mature, maintainable, and powerful feature control strategy.

Choosing Your Feature Toggle Solution

Deciding how you'll manage feature toggles is a huge technical call. This choice will directly impact development velocity, system stability, and total cost of ownership (TCO). You have three primary implementation paths, each with distinct trade-offs.

This isn't just about picking a tool. It's about committing to a strategy that actually fits your team's skills, your budget, and where you're headed technically. The options boil down to building it yourself, using a free open-source tool, or paying for a commercial service.

Evaluating the Core Options

Building a feature toggle system from scratch gives you ultimate control, but it's a costly road. You get to dictate every single feature and security rule, tailoring it perfectly to your needs. The catch is the Total Cost of Ownership (TCO), which is almost always higher than you think. It's not just the initial build; it's the endless cycle of maintenance, bug fixes, and scaling the infrastructure. This path needs a dedicated team with some serious expertise.

Open-source solutions are a solid middle ground. Platforms like Unleash or Flipt give you a strong foundation to build on, saving you from reinventing the core components. This can be a really cost-effective way to go, especially if you're comfortable self-hosting and want a high degree of control. The main downside? You're on the hook for everything: setup, scaling, security, and any support issues that pop up.

Commercial (SaaS) platforms like LaunchDarkly or ConfigCat offer the quickest path to getting started. These are managed, battle-tested solutions that come with enterprise-level features, dedicated support, and robust SDKs for just about any language. Yes, they have a subscription fee, but they completely remove the operational headache of running the infrastructure. This frees up your engineers to build your actual product instead of another internal tool.

Your choice of a feature toggle solution is an investment in your development process. An initial assessment of TCO that includes engineering hours for maintenance and support is critical to making a financially and technically sound decision.

To pick the right option, you have to look past the initial setup. Think about the long-term ripple effects across your entire software delivery lifecycle. A good tool just melts into your workflow, but the wrong one will add friction and headaches at every turn. If you want to learn more about how to make these integrations smooth, check out our deep dive on CI/CD pipeline best practices.

Feature Toggle Solution Comparison: In-House vs. Open-Source vs. Commercial

Making an informed choice requires a structured comparison. What works for a five-person startup is going to be a terrible fit for a large enterprise, and vice-versa. The right answer depends entirely on your team's unique situation.

Use this table as a starting point for your own evaluation. Weigh each point against your team's skills, priorities, and business goals to figure out which path makes the most sense for you.

| Evaluation Criteria | In-House (Build) | Open-Source | Commercial (SaaS) |
|---|---|---|---|
| Initial Cost | Very High (Engineering hours) | Low to Medium (Setup time) | Medium (Subscription fee) |
| Total Cost of Ownership | Highest (Ongoing maintenance) | Medium (Hosting & support) | Low to Medium (Predictable fee) |
| Scalability | Team-dependent | Self-managed | High (Managed by vendor) |
| Security | Full control; full responsibility | Self-managed; community-vetted | High (Vendor-managed, often certified) |
| Required Team Skills | Expert-level developers & SREs | Mid-to-Expert DevOps skills | Minimal; focused on SDK usage |
| Support | Internal team only | Community forums; no SLA | Dedicated support; SLAs |
| Time to Value | Slowest | Medium | Fastest |

Ultimately, there's no single "best" answer. The build-it-yourself approach offers unparalleled customization but demands massive investment. Open-source gives you control without starting from zero, and commercial solutions get you to the finish line fastest by handling all the heavy lifting for you.

Best Practices for Managing Toggle Debt

While a handful of feature toggles are easy to track, a system with hundreds—or even thousands—can quickly spiral into chaos. This is what we call toggle debt. It's a specific kind of technical debt where old, forgotten, or badly documented flags pollute your codebase. Left unchecked, this debt increases cognitive load, injects bugs through unexpected interactions, and makes the system difficult to reason about.

Effective feature toggle management isn’t just about flipping new switches on. It’s about being disciplined with their entire lifecycle, from birth to retirement. Adopting strict engineering habits is the only way to keep your toggles as a powerful asset instead of a ticking time bomb.

It's no surprise the global market for this kind of software is booming, especially with Agile and DevOps practices becoming standard. As companies in every sector from retail to finance lean more on these tools, the need for disciplined management has become critical. Cloud-based platforms are leading the charge, thanks to their scalability and easy integration. You can see a full market forecast in this feature toggles software report from Archive Market Research.

Establish Clear Naming Conventions

Your first line of defense against toggle debt is a consistent and machine-parseable naming convention. A flag named new-feature-toggle is useless. A good name should communicate its purpose, scope, and ownership at a glance.

A solid naming scheme usually includes these components, separated by a delimiter like a hyphen or colon:

- Team/Domain: checkout, search, auth

- Toggle Type: release, ops, exp, perm

- Feature Name: new-payment-gateway, elastic-search-reindex

- Creation Date/Ticket: 2024-08-15 or JIRA-123

A flag named checkout:release:paypal-express:JIRA-1234 is instantly understandable. It belongs to the checkout team, it's a temporary release toggle for the PayPal Express feature, and all context can be found in a specific Jira ticket. This structured format also allows for automated tooling to find and flag stale toggles.
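To show what "machine-parseable" can look like, here is a small validator for the colon-delimited scheme. The regular expression and the field names (`team`, `type`, `feature`, `ref`) are assumptions made for this sketch; adapt them to whatever convention your team settles on.

```python
import re
from typing import Dict, Optional

FLAG_NAME = re.compile(
    r"^(?P<team>[a-z0-9-]+):"
    r"(?P<type>release|ops|exp|perm):"
    r"(?P<feature>[a-z0-9-]+):"
    r"(?P<ref>\d{4}-\d{2}-\d{2}|[A-Z]+-\d+)$"
)

def parse_flag_name(name: str) -> Optional[Dict[str, str]]:
    """Return the name's components, or None if it breaks the convention."""
    match = FLAG_NAME.match(name)
    return match.groupdict() if match else None
```

A colon works well as the delimiter in this sketch because feature names and dates already contain hyphens. A check like this could run when a flag is created, rejecting names that do not parse.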

Define a Strict Toggle Lifecycle

Every temporary feature toggle (Release, Experiment) must have a predefined lifecycle. Without a formal process, short-term toggles inevitably become permanent fixtures, creating a complex web of dead code and conditional logic.

A feature toggle without an expiration date is a bug waiting to happen. The default state for any temporary toggle should be "scheduled for removal."

This lifecycle needs to be documented and, wherever you can, automated.

- Creation: When a flag is created, it must have an owner, a link to a ticket (e.g., Jira, Linear), and a target removal date. This should be enforced by the management platform.

- Activation: The flag is live, controlling a feature in production.

- Resolution: The feature is either fully rolled out (100% traffic) or abandoned. The flag is now considered "stale" and enters a cleanup queue.

- Removal: A ticket is automatically generated for the owner to remove the flag from the codebase and archive it in the management platform.

This kind of structured process is a hallmark of a healthy engineering culture. For a deeper dive into taming code complexity, check out our guide on how to manage technical debt.
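The creation and removal rules can be enforced in code rather than by convention. The following is a sketch only: `FlagDefinition` and `needs_removal_ticket` are hypothetical, and a managed platform may offer its own equivalent of these checks.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

TEMPORARY_TYPES = {"release", "exp"}

@dataclass(frozen=True)
class FlagDefinition:
    key: str
    toggle_type: str               # "release", "exp", "ops", or "perm"
    owner: str
    ticket: str
    remove_by: Optional[date] = None

    def __post_init__(self) -> None:
        if not self.owner or not self.ticket:
            raise ValueError("every flag needs an owner and a ticket link")
        if self.toggle_type in TEMPORARY_TYPES and self.remove_by is None:
            raise ValueError("temporary flags need a target removal date")

def needs_removal_ticket(flag: FlagDefinition, today: date) -> bool:
    """True when a temporary flag has reached its target removal date."""
    return flag.remove_by is not None and today >= flag.remove_by
```

Rejecting an incomplete definition at creation time is cheaper than chasing down an ownerless flag a year later.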

Assign Explicit Ownership and Monitor Stale Flags

Accountability is critical. Every toggle needs a designated owner—an individual or a team—responsible for its maintenance and eventual removal. This prevents the "orphan toggle" problem where no one knows why a flag exists or if it's safe to remove.

Integrate toggle monitoring directly into your CI/CD pipeline and project management tools. A static analysis check in your CI pipeline can fail a build if it detects code referencing a toggle that has been marked for removal. A simple dashboard can also provide visibility by highlighting:

- Flags without a designated owner.

- Flags past their target removal date.

- Flags that have been in a static state (100% on or 100% off) for over 90 days.

Set up automated Slack or email alerts to notify owners when their toggles become stale. By making toggle debt visible and actionable, you transform cleanup from a painful manual audit into a routine part of the development workflow.
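The three dashboard checks translate almost directly into code. This sketch assumes you can export each flag's owner, removal date, rollout percentage, and last-change date from your platform; the `FlagStatus` shape and `stale_reasons` function are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

@dataclass
class FlagStatus:
    key: str
    owner: Optional[str]
    remove_by: Optional[date]
    rollout_percentage: int        # 0..100
    last_changed: date

def stale_reasons(flag: FlagStatus, today: date, static_days: int = 90) -> List[str]:
    """List the reasons a flag should appear on the cleanup dashboard."""
    reasons = []
    if not flag.owner:
        reasons.append("no owner")
    if flag.remove_by is not None and today > flag.remove_by:
        reasons.append("past removal date")
    is_static = flag.rollout_percentage in (0, 100)
    if is_static and today - flag.last_changed > timedelta(days=static_days):
        reasons.append(f"static for over {static_days} days")
    return reasons
```

Run on a schedule, a non-empty result is what would feed the Slack or email alert to the flag's owner.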

Once you get the hang of basic feature toggles, you can start exploring some seriously powerful stuff. Think of it as moving beyond simple on/off light switches to building a fully automated smart home. The real future here is in making your feature toggles intelligent, connecting them directly to your observability tools so they can practically manage themselves.

The most exciting development I'm seeing is AI-driven toggle automation. Picture this: you roll out a new feature, and instead of nervously watching dashboards, a machine learning model does it for you. It keeps an eye on all the crucial business and operational metrics in real-time.

If that model spots trouble—maybe error rates are spiking, user engagement plummets, or conversions take a nosedive—it can instantly and automatically flip the feature off. No human panic, no late-night calls. This is a game-changer for reducing your Mean Time to Recovery (MTTR) because the system reacts faster than any person ever could.
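You don't need a machine learning model to try the idea out. The sketch below swaps the model for a plain error-rate threshold over a sliding window, which is a much simpler technique than the one described above; `AutoKillSwitch` and its parameters are hypothetical.

```python
from collections import deque
from typing import Deque

class AutoKillSwitch:
    """Disable a flag when the recent error rate crosses a threshold."""

    def __init__(self, threshold: float, window: int, min_samples: int) -> None:
        self.threshold = threshold
        self.min_samples = min_samples
        self.results: Deque[bool] = deque(maxlen=window)  # True means error
        self.enabled = True

    def record(self, is_error: bool) -> None:
        self.results.append(is_error)
        if len(self.results) < self.min_samples:
            return  # not enough data to judge yet
        error_rate = sum(self.results) / len(self.results)
        if error_rate > self.threshold:
            self.enabled = False  # stays off until a human re-enables it
```

Two choices here are deliberate: the `min_samples` guard stops a single early failure from tripping the switch, and the switch never turns itself back on, so a person reviews the incident before the feature returns.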

Granular Targeting and Progressive Delivery

Advanced toggles also unlock incredibly precise user targeting, which is essential for canary releases or complex beta tests. Forget just rolling out to 10% of users. You can now define super-specific rules based on all sorts of user attributes.

For instance, you could target a new feature only to:

- Users on a "Pro" subscription plan, who are located in Germany, and are using the latest version of your mobile app.

- Internal employees for "dogfooding," but only during peak business hours to see how the feature handles real-world load.

- A specific segment of beta testers, but only if their accounts are more than 90 days old.

This level of detail lets you expose new, potentially risky code to very specific, low-impact groups first. You get targeted feedback and performance data from the exact people you want to hear from, minimizing any potential fallout. This approach also has huge security implications, since controlling who sees what is critical. To go deeper on this, it's worth reading up on DevOps security best practices to make sure your rollouts are buttoned up.
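Rules like these are typically a list of conditions that must all hold for a user to match. Here is one way to sketch that, assuming the context shape used earlier in this guide and app versions stored as tuples; the helper names and the `app_version` attribute are hypothetical.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Condition = Callable[[Context], bool]

def attr_equals(name: str, expected: Any) -> Condition:
    return lambda ctx: ctx.get("attributes", {}).get(name) == expected

def attr_at_least(name: str, minimum: Any) -> Condition:
    def check(ctx: Context) -> bool:
        value = ctx.get("attributes", {}).get(name)
        return value is not None and value >= minimum
    return check

def matches_all(conditions: List[Condition], ctx: Context) -> bool:
    """A segment matches only when every condition holds (logical AND)."""
    return all(condition(ctx) for condition in conditions)

# "Pro" plan, located in Germany, on app version 4.2 or later (versions as tuples).
pro_germany_latest = [
    attr_equals("plan", "pro"),
    attr_equals("location", "DE"),
    attr_at_least("app_version", (4, 2)),
]
```

A user missing any of the attributes simply fails to match, which is the safe default when you are exposing risky code.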

By integrating feature toggles directly with observability platforms, you create a powerful closed-loop system. Toggles report their state and impact, while monitoring tools provide the performance data needed to make automated, intelligent decisions about the feature's lifecycle.

When you connect these dots, your feature flags transform from simple if/else statements into an intelligent, automated release control plane. It's the ultimate expression of decoupling deployment from release, paving the way for safer, faster, and more data-driven software delivery.

Technical FAQ on Feature Toggle Management

When you're first getting your hands dirty with a real feature toggle system, a few technical questions pop up again and again. Let's tackle some of the most common ones I hear from engineers in the field.

Do Feature Toggles Add Performance Overhead?

Yes, but the overhead is almost always negligible if implemented correctly. A server-side evaluation in a modern system typically adds single-digit milliseconds of latency to an initial request. SDKs are highly optimized, using global CDNs and streaming updates to fetch rules, then caching them in-memory for near-instantaneous evaluation (microseconds) on subsequent calls within the same process.

The key is proper implementation:

- Don't re-fetch rules on every check. The SDK handles this.

- Do initialize the SDK once per application lifecycle (e.g., on server start).

- On the client side, load the SDK asynchronously (<script async>) to avoid blocking page render.

How Do Toggles Work in Serverless Environments?

They are an excellent fit for environments like AWS Lambda or Google Cloud Functions. Since functions are often stateless, a feature flagging service acts as an external, dynamic configuration store, allowing you to alter a function's behavior without redeploying code.

The typical flow is:

- Cold Start: The function instance initializes. The feature flag SDK is initialized along with it and fetches the latest flag rules.

- Warm Invocation: For subsequent invocations of the same warm instance, the SDK is already initialized and uses its in-memory cache of rules for microsecond-fast evaluations.

This pattern allows for powerful strategies like canary releasing a new version of a Lambda function to a small percentage of invocations or using a kill switch to disable a problematic function instantly.
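In Python-based functions, the cold/warm split usually comes down to where you create the client. A sketch, with a hypothetical `FlagClient` standing in for a real SDK and a fetch counter added purely to make the behavior visible:

```python
from typing import Any, Dict

class FlagClient:
    """Hypothetical SDK stand-in that counts how often it fetches rules."""
    fetch_count = 0

    def __init__(self) -> None:
        FlagClient.fetch_count += 1          # simulates the network fetch
        self._rules = {"new-resize-algorithm": True}

    def is_enabled(self, key: str, default: bool = False) -> bool:
        return self._rules.get(key, default)

# Module scope runs once per cold start, so the client is reused while warm.
client = FlagClient()

def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda-style entry point; evaluation uses the already-initialized client."""
    if client.is_enabled("new-resize-algorithm"):
        return {"statusCode": 200, "body": "resized with new algorithm"}
    return {"statusCode": 200, "body": "resized with legacy algorithm"}
```

Creating the client inside `handler` instead would repeat the fetch on every invocation, which is exactly the overhead you want to avoid.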

The real risk with feature toggles isn't a few milliseconds of latency—it's letting complexity run wild. A system choked with hundreds of old, forgotten flags creates way more technical debt and unpredictable bugs than any well-managed flag check ever will.

What Happens if the Flag Service Goes Down?

This is a critical resilience concern. Any production-grade feature flagging platform and its SDKs are designed with failure in mind. SDKs have built-in fallback mechanisms.

When an SDK initializes, it fetches the latest flag rules and caches them locally (either in memory or on disk). If the connection to the feature flag service is lost, the SDK will continue to serve evaluations using this last-known good configuration, which keeps your application stable through the outage. As a final layer of defense, you should always provide a default value in your code (for example, client.is_enabled('my-feature', default_value=False) in Python). This ensures predictable behavior even if the SDK fails to initialize entirely.
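Both layers of fallback can be sketched in a few lines. `ResilientClient` is a hypothetical stand-in, and the `fetch` callable represents whatever network call your SDK makes; real SDKs handle this internally.

```python
from typing import Callable, Dict, Optional

class ResilientClient:
    """Hypothetical client that keeps the last-known good rules on fetch failure."""

    def __init__(self, fetch: Callable[[], Dict[str, bool]]) -> None:
        self._fetch = fetch
        self._rules: Optional[Dict[str, bool]] = None
        self.refresh()

    def refresh(self) -> None:
        try:
            self._rules = self._fetch()
        except ConnectionError:
            pass  # keep whatever was cached before (possibly nothing)

    def is_enabled(self, key: str, default_value: bool = False) -> bool:
        if self._rules is None:
            return default_value      # SDK never initialized successfully
        return self._rules.get(key, default_value)
```

If the service is down at startup, every check returns the in-code default. If it goes down later, checks keep returning the cached answers.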
