A Technical Guide to AWS DevOps Consulting: Accelerating Cloud Delivery

Leverage AWS DevOps consulting to accelerate software delivery with proven CI/CD automation, scalable infrastructure, and cloud best practices from experts.

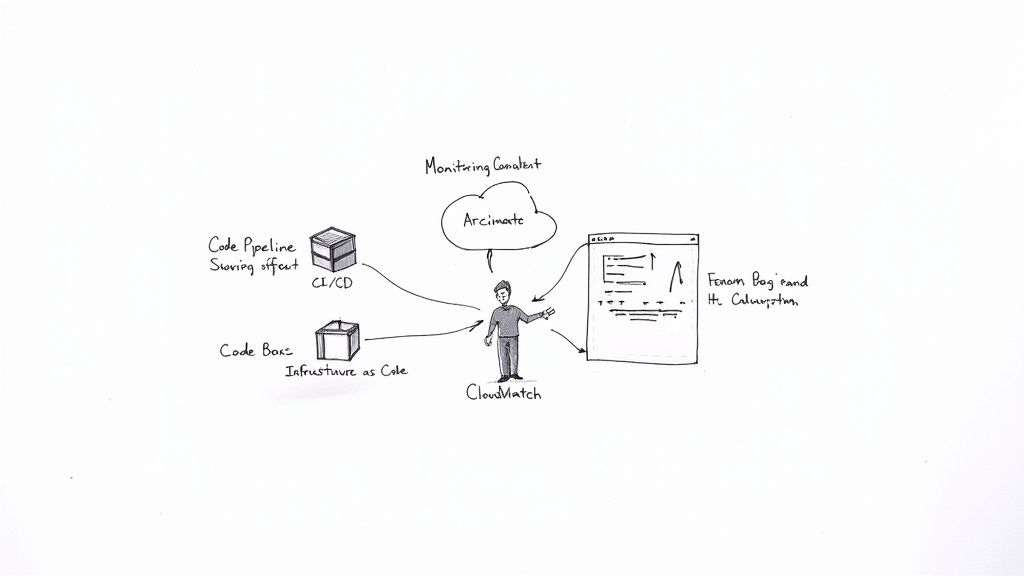

So, what exactly is AWS DevOps consulting? It's the strategic integration of an expert architect into your team, focused on transforming software delivery by engineering automated, resilient pipelines. The work relies on native AWS services for Continuous Integration/Continuous Deployment (CI/CD), Infrastructure as Code (IaC), and comprehensive observability.

The primary objective is to engineer systems that are not only secure and scalable but also capable of self-healing. Consultants function as accelerators, guiding your team to a state of high-performance delivery and operational excellence far more rapidly than internal efforts alone.

How AWS DevOps Consulting Accelerates Delivery

An AWS DevOps consulting partnership begins with a granular analysis of your current CI/CD workflows, existing infrastructure configurations, and your team's technical competencies. From this baseline, these experts architect a fully automated pipeline designed to reliably transition code from a developer's local environment through staging and into production.

They translate core DevOps practices, such as CI/CD, IaC, and continuous monitoring, into production-grade AWS implementations. This isn't theoretical; it's the precise application of specific tools to construct automated guardrails and repeatable deployment processes.

- AWS CodePipeline serves as the orchestrator, defining stages and actions for every build, static analysis scan, integration test, and deployment within a single, version-controlled workflow.

- AWS CloudFormation or Terraform codifies your infrastructure into version-controlled templates, eliminating manual provisioning and preventing configuration drift between environments.

- Amazon CloudWatch acts as the central nervous system for observability, providing the real-time metrics (e.g., CPUUtilization, Latency), logs (from Lambda, EC2, ECS), and alarms needed to maintain operational stability.

“An AWS DevOps consultant bridges the gap between best practices and production-ready pipelines.”

Role of Consultants as Architects

A significant portion of a consultant's role is architecting the end-to-end delivery process. They produce detailed diagrams mapping the flow from source code repositories (like CodeCommit or GitHub) through build stages, static code analysis, and multi-environment deployments. This architectural blueprint ensures that every change is tracked, auditable, and free from manual handoffs that introduce human error.

For example, they might implement a GitFlow branching strategy where feature branches trigger builds and unit tests, while merges to a main branch initiate a full deployment pipeline to production.
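As a minimal sketch of that branching rule, the trigger logic can be written as a small function. The branch prefixes and stage labels below are illustrative assumptions, not names from any AWS API:

```python
from typing import List


def stages_for_branch(branch: str) -> List[str]:
    """Return the pipeline stages a push to `branch` should trigger.

    Branch names and stage labels are hypothetical placeholders.
    """
    if branch == "main":
        # Merges to main run the full path to production.
        return [
            "build",
            "unit-test",
            "integration-test",
            "deploy-staging",
            "deploy-production",
        ]
    if branch.startswith("feature/"):
        # Feature branches only get fast feedback: build plus unit tests.
        return ["build", "unit-test"]
    # Any other branch triggers nothing in this sketch.
    return []
```

In a real pipeline the same rule would normally live in the source trigger or webhook filter configuration rather than in application code; the function form only makes the decision explicit.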

They also leverage Infrastructure as Code to enforce critical policies, embedding security group rules, IAM permissions, and compliance checks directly into CloudFormation or Terraform modules. This proactive approach prevents misconfigurations before they are deployed and simplifies audit trails.

Market Context and Adoption

The demand for this expertise is accelerating. By 2025, the global DevOps market is projected to reach USD 15.06 billion, a substantial increase from USD 10.46 billion in 2024. With enterprise adoption rates exceeding 80% globally, DevOps is now a standard operational model.

Crucially, companies leveraging AWS DevOps consulting report a 94% effectiveness rate in maximizing the platform's capabilities. You can find more details on DevOps market growth over at BayTech Consulting.

Key Benefits of AWS DevOps Consulting

The technical payoff translates into tangible business improvements:

- Faster time-to-market through fully automated, multi-stage deployment pipelines.

- Higher release quality from integrating automated static analysis, unit, and integration tests at every stage.

- Stronger resilience built on self-healing infrastructure defined as code, capable of automated recovery.

- Enhanced security by integrating DevSecOps practices like vulnerability scanning and IAM policy validation directly into the pipeline.

Consultants implement specific safeguards, such as Git pre-commit hooks that trigger linters or security scanners, and blue/green deployment strategies that minimize the blast radius of a failed release. For instance, they configure CloudFormation change sets to require manual approval in the pipeline, allowing your team to review infrastructure modifications before they are applied. This critical step eliminates deployment surprises and builds operational confidence.

When you partner with a platform like OpsMoon, you gain direct access to senior remote engineers who specialize in AWS. It’s a collaborative model that empowers your team with hands-on guidance and includes complimentary architect hours to design your initial roadmap.

The Four Pillars of an AWS DevOps Engagement

A robust AWS DevOps consulting engagement is not a monolithic project but a structured implementation built upon four technical pillars. These pillars represent the foundational components of a modern cloud operation, each addressing a critical stage of the software delivery lifecycle. When integrated, they create a cohesive system engineered for velocity, reliability, and security.

When architected correctly, these pillars transform your development process from a series of manual, error-prone tasks into a highly automated, observable workflow that operates predictably. This structure provides the technical confidence required to ship changes frequently and safely.

1. CI/CD Pipeline Automation

The first pillar is the Continuous Integration and Continuous Deployment (CI/CD) pipeline, the automated workflow that moves code from a developer's IDE to a production environment. An AWS DevOps consultant architects this workflow using a suite of tightly integrated native services.

- AWS CodeCommit functions as the secure, Git-based repository, providing the version-controlled single source of truth for all application and infrastructure code.

- AWS CodeBuild is the build and test engine. Its buildspec.yml file defines the commands to compile source code, run unit tests (e.g., JUnit, PyTest), perform static analysis (e.g., SonarQube), and package software into deployable artifacts like Docker images pushed to ECR.

- AWS CodePipeline serves as the orchestrator, defining the stages (Source, Build, Test, Deploy) and triggering the entire process automatically upon a Git commit to a specific branch.

This automation eliminates manual handoffs, a primary source of deployment failures, and guarantees that every code change undergoes identical quality gates, ensuring consistent and predictable releases.
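To make the build stage concrete, here is a rough sketch of what a buildspec might contain, modelled as a Python dictionary so the phase order can be checked. The commands and the variables $IMAGE_URI, $REGISTRY, and $AWS_REGION are assumptions for illustration; a real project would keep the equivalent structure in buildspec.yml:

```python
import json

# Hypothetical commands and variable names; replace with your real build steps.
buildspec = {
    "version": 0.2,
    "phases": {
        "install": {"commands": ["pip install -r requirements.txt"]},
        "pre_build": {
            "commands": [
                # Run unit tests before anything is packaged.
                "pytest --junitxml=reports/unit.xml",
                # Authenticate Docker against the ECR registry.
                "aws ecr get-login-password --region $AWS_REGION"
                " | docker login --username AWS --password-stdin $REGISTRY",
            ]
        },
        "build": {"commands": ["docker build -t $IMAGE_URI ."]},
        "post_build": {"commands": ["docker push $IMAGE_URI"]},
    },
}

# CodeBuild runs phases in this fixed order.
PHASE_ORDER = ["install", "pre_build", "build", "post_build"]


def ordered_commands(spec: dict) -> list:
    """Flatten the phases into the order in which they would run."""
    commands = []
    for phase in PHASE_ORDER:
        commands.extend(spec["phases"].get(phase, {}).get("commands", []))
    return commands


if __name__ == "__main__":
    print(json.dumps(buildspec, indent=2))
```

Placing the tests in an early phase means a failing test stops the build before any image is produced or pushed.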

2. Infrastructure as Code (IaC) Implementation

The second pillar, Infrastructure as Code (IaC), codifies your cloud environment—VPCs, subnets, EC2 instances, RDS databases, and IAM roles—into declarative templates. Instead of manual configuration via the AWS Console, infrastructure is defined, provisioned, and managed as code, making it repeatable, versionable, and auditable.

With IaC, your infrastructure configuration becomes a version-controlled artifact that can be peer-reviewed via pull requests and audited through Git history. This is the definitive solution to eliminating configuration drift and ensuring parity between development, staging, and production environments.

Consultants typically use AWS CloudFormation or Terraform to implement IaC. A CloudFormation template, for example, can define an entire application stack, including EC2 instances within an Auto Scaling Group, a load balancer, security groups, and an RDS database instance. Deploying this stack becomes a single, atomic, and automated action, drastically reducing provisioning time and eliminating human error.
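As a small illustration of infrastructure defined as code, the sketch below builds a CloudFormation template for a single security group and serializes it to JSON. The logical names (WebSecurityGroup, VpcId), the open CIDR range, and the default port are assumptions chosen for the example; a real stack would add the Auto Scaling Group, load balancer, and database described above:

```python
import json


def security_group_template(app_port: int = 443) -> dict:
    """Build a minimal CloudFormation template as a plain dictionary."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Sketch: security group allowing one inbound port",
        "Parameters": {
            # The VPC is passed in so the template stays environment-agnostic.
            "VpcId": {"Type": "AWS::EC2::VPC::Id"},
        },
        "Resources": {
            "WebSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "Allow inbound application traffic",
                    "VpcId": {"Ref": "VpcId"},
                    "SecurityGroupIngress": [
                        {
                            "IpProtocol": "tcp",
                            "FromPort": app_port,
                            "ToPort": app_port,
                            # Illustrative only; restrict this in practice.
                            "CidrIp": "0.0.0.0/0",
                        }
                    ],
                },
            }
        },
        "Outputs": {
            "SecurityGroupId": {
                "Value": {"Fn::GetAtt": ["WebSecurityGroup", "GroupId"]}
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(security_group_template(), indent=2))
```

Because the template is ordinary data, a change to the port or CIDR range shows up as a reviewable diff in a pull request.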

3. Comprehensive Observability

The third pillar is establishing comprehensive observability to provide deep, actionable insights into application performance and system health. This extends beyond basic monitoring to enable understanding of the why behind system behavior, correlating metrics, logs, and traces.

To build a robust observability stack, an AWS DevOps consultant integrates tools such as:

- Amazon CloudWatch: The central service for collecting metrics, logs (via the CloudWatch Agent), and traces. This includes creating custom metrics, composite alarms, and dashboards to visualize system health.

- AWS X-Ray: A distributed tracing service that follows requests as they travel through microservices, identifying performance bottlenecks and errors in complex, distributed applications.

This setup enables proactive issue detection and automated remediation. For example, a CloudWatch Alarm monitoring the HTTPCode_Target_5XX_Count metric for an Application Load Balancer can trigger an SNS topic that invokes a Lambda function to initiate a deployment rollback via CodeDeploy, minimizing user impact.
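The alarm-to-rollback path can be sketched as a Lambda-style handler that reads the alarm notification delivered through SNS. The stop_deployment parameter is a hypothetical callable injected for illustration; in a real function it might wrap a CodeDeploy call that stops the in-flight deployment with automatic rollback enabled:

```python
import json


def handler(event, context=None, stop_deployment=None):
    """Request a rollback for every alarm in the event that is in ALARM state.

    `stop_deployment` is a hypothetical hook, not an AWS API.
    """
    requested = []
    for record in event.get("Records", []):
        # SNS delivers the CloudWatch alarm payload as a JSON string.
        message = json.loads(record["Sns"]["Message"])
        if message.get("NewStateValue") != "ALARM":
            continue  # Ignore OK and INSUFFICIENT_DATA transitions.
        alarm_name = message.get("AlarmName")
        requested.append(alarm_name)
        if stop_deployment is not None:
            stop_deployment(alarm_name)
    return {"rollback_requested_for": requested}
```

Keeping the rollback action behind an injected callable lets the parsing and decision logic be unit-tested without touching a live deployment.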

4. Automated Security and Compliance

The final pillar integrates security into the delivery pipeline, a practice known as DevSecOps. This approach treats security as an integral component of the development lifecycle rather than a final gate. The goal is to automate security controls at every stage, from code commit to production deployment.

Consultants utilize services like Amazon Inspector to perform continuous vulnerability scanning on EC2 instances and container images stored in ECR. Findings are centralized in AWS Security Hub, which aggregates security alerts from across the AWS environment. This automated "shift-left" approach enforces security standards programmatically without impeding developer velocity, establishing a secure-by-default foundation.
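A pipeline can turn scan results into a quality gate with very little logic. The sketch below assumes a simplified, hypothetical finding shape (a dictionary with id and severity keys) rather than the full format returned by any AWS service:

```python
SEVERITY_RANK = {
    "INFORMATIONAL": 0,
    "LOW": 1,
    "MEDIUM": 2,
    "HIGH": 3,
    "CRITICAL": 4,
}


def blocking_findings(findings, threshold="HIGH"):
    """Return the findings at or above `threshold`.

    Unknown severities are treated as blocking so the gate fails closed.
    """
    limit = SEVERITY_RANK[threshold]
    highest = max(SEVERITY_RANK.values())
    return [
        f for f in findings
        if SEVERITY_RANK.get(f["severity"], highest) >= limit
    ]


def gate_passes(findings, threshold="HIGH"):
    """True when no finding should block the release."""
    return not blocking_findings(findings, threshold)
```

A build step could call gate_passes on the latest scan results and exit with a non-zero status when it returns False, stopping the deployment stage from running.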

Each pillar relies on a specific set of AWS services to achieve its technical outcomes. This table maps the core tools to the services provided in a typical engagement.

Core Services in an AWS DevOps Consulting Engagement

| Service Pillar | Core AWS Tools | Technical Outcome |
|---|---|---|
| CI/CD Automation | CodeCommit, CodeBuild, CodePipeline, CodeDeploy | A fully automated, repeatable pipeline for building, testing, and deploying code, reducing manual errors. |
| Infrastructure as Code | CloudFormation, CDK, Terraform | Version-controlled, auditable, and reproducible infrastructure, eliminating environment drift. |
| Observability | CloudWatch, X-Ray, OpenSearch Service | Deep visibility into application performance and system health for proactive issue detection and faster debugging. |
| DevSecOps | Inspector, Security Hub, IAM, GuardDuty | Automated security checks and compliance enforcement integrated directly into the development lifecycle. |

By architecting these AWS services into the four pillars, consultants build a cohesive, automated platform engineered for both speed and security.

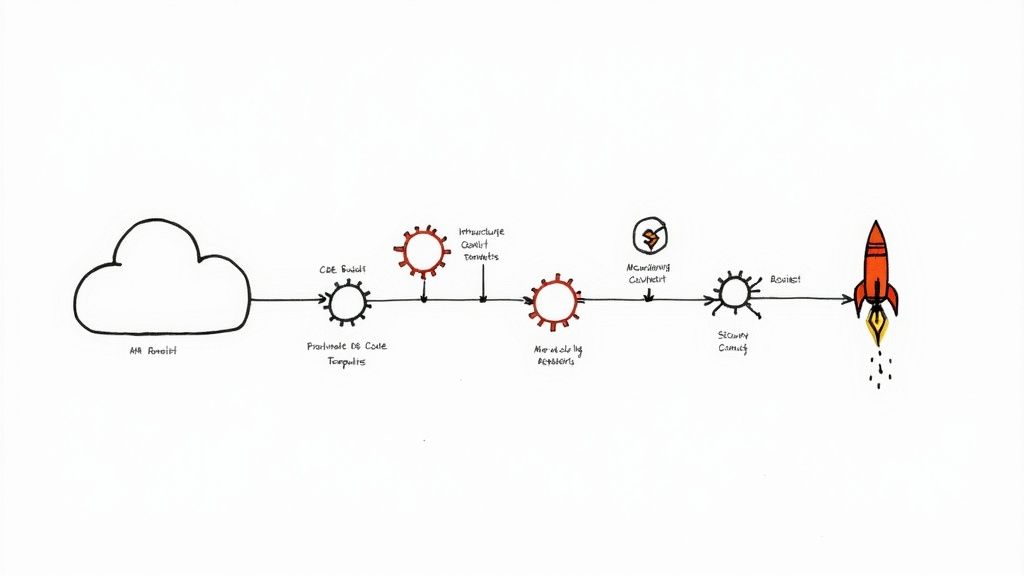

The Engagement Process from Assessment to Handoff

Engaging an AWS DevOps consulting firm is a structured, multi-phase process designed to transition your organization to a high-performing, automated delivery model. It is not a generic solution but a tailored approach that ensures the final architecture aligns precisely with your business objectives and technical requirements.

The process starts with a technical deep dive into your existing environment.

This journey is structured around the core pillars of a modern AWS DevOps practice, creating a logical progression from initial pipeline automation to securing and observing the entire ecosystem.

This flow illustrates how each technical pillar builds upon the last, resulting in a cohesive, resilient system that manages the entire software delivery lifecycle.

Discovery and Assessment

The initial phase is Discovery and Assessment. Consultants embed with your team to perform a thorough analysis of your existing architecture, code repositories, deployment workflows, and operational pain points.

This involves technical workshops, code reviews, and infrastructure audits to identify performance bottlenecks, security vulnerabilities, and opportunities for automation. Key outputs include a current-state architecture diagram and a list of identified risks and blockers.

For guidance on self-evaluation, our article on conducting a DevOps maturity assessment provides a useful framework.

Strategy and Roadmap Design

Following the discovery, the engagement moves into the Strategy and Roadmap Design phase. Here, consultants translate their findings into an actionable technical blueprint. This is a detailed plan including target-state architecture diagrams, a bill of materials for AWS services, and a phased implementation schedule.

Key deliverables from this phase include:

- A target-state architecture diagram illustrating the future CI/CD pipeline, IaC structure, and observability stack.

- Toolchain recommendations, specifying which AWS services (e.g., CodePipeline vs. Jenkins, CloudFormation vs. Terraform) are best suited for your use case.

- A project backlog in a tool like Jira, with epics and user stories prioritized for the implementation phase.

This roadmap serves as the single source of truth, aligning all stakeholders on the technical goals and preventing scope creep.

The roadmap is the most critical artifact of the initial engagement. It becomes the authoritative guide, ensuring that the implementation directly addresses the problems identified during discovery and delivers measurable value.

Implementation and Automation

With a clear roadmap, the Implementation and Automation phase begins. This is the hands-on-keyboard phase where consultants architect and build the CI/CD pipelines, write IaC templates using CloudFormation or Terraform, and configure monitoring dashboards and alarms in Amazon CloudWatch.

This phase is highly collaborative. Consultants typically work alongside your engineers, building the new systems while actively transferring knowledge through pair programming and code reviews. The objective is not just to deliver a system, but to create a fully automated, self-service platform that your developers can operate confidently.

Optimization and Knowledge Transfer

The final phase, Optimization and Knowledge Transfer, focuses on refining the newly built systems. This includes performance tuning, implementing cost controls with tools like AWS Cost Explorer, and ensuring your team is fully equipped to take ownership.

The handoff includes comprehensive documentation, operational runbooks for incident response, and hands-on training sessions. A successful engagement concludes not just with new technology, but with an empowered team capable of managing, maintaining, and continuously improving their automated infrastructure.

How to Choose the Right AWS DevOps Partner

Selecting an AWS DevOps consulting partner is a critical technical decision, not just a procurement exercise. You need a partner who can integrate with your engineering culture, elevate your team's skills, and deliver a well-architected framework you can build upon.

This decision directly impacts your future operational agility. AWS commands 31% of the global cloud infrastructure market, and with capital expenditure on AWS infrastructure projected to exceed USD 100 billion by 2025—driven heavily by AI and automation—a technically proficient partner is essential.

Scrutinize Technical Certifications

Validate credentials beyond surface-level badges. Certifications are a proxy for hands-on, validated experience. Look for advanced, role-specific certifications that demonstrate deep expertise.

- The AWS Certified DevOps Engineer – Professional is the non-negotiable baseline.

- Look for supplementary certifications like AWS Certified Solutions Architect – Professional and specialty certifications in Security, Advanced Networking, or Data Analytics.

These credentials confirm a consultant's ability to architect for high availability, fault tolerance, and self-healing systems that meet the principles of the AWS Well-Architected Framework.

Analyze Their Technical Portfolio

Logos are not proof of expertise. Request detailed, technical case studies that connect specific actions to measurable outcomes. Look for evidence of:

- A concrete reduction in deployment failure rates (e.g., from 15% to <1%), indicating robust CI/CD pipeline design with automated testing and rollback capabilities.

- A documented increase in release frequency (e.g., from quarterly to multiple times per day), demonstrating effective automation.

- A significant reduction in Mean Time to Recovery (MTTR) post-incident, proving the implementation of effective observability and automated failover mechanisms.

Quantifiable metrics demonstrate a history of delivering tangible engineering results.

Assess Their Collaborative Style

A true technical partnership requires transparent, high-bandwidth communication. Avoid "black box" engagements where work is performed in isolation and delivered without context or knowledge transfer.

If the consultant's engagement concludes and your team cannot independently manage, troubleshoot, and extend the infrastructure, the engagement has failed.

During initial discussions, probe their methodology for:

- Documentation and runbooks: Do they provide comprehensive, actionable documentation?

- Interactive training: Do they offer hands-on workshops and pair programming sessions with your engineers?

- Code reviews: Is your team included in the pull request and review process for all IaC and pipeline code?

A partner focused on knowledge transfer ensures you achieve long-term self-sufficiency and can continue to evolve the infrastructure.

Advanced Strategies For AWS DevOps Excellence

Building a functional CI/CD pipeline is just the baseline. Achieving operational excellence requires advanced, fine-tuned strategies that create a resilient, cost-efficient, and continuously improving delivery ecosystem. This is where an expert AWS DevOps consulting partner adds significant value, implementing best practices that proactively manage failure, enforce cost governance, and foster a culture of continuous improvement.

This is about engineering a software delivery lifecycle that anticipates failure modes, optimizes resource consumption, and adapts dynamically.

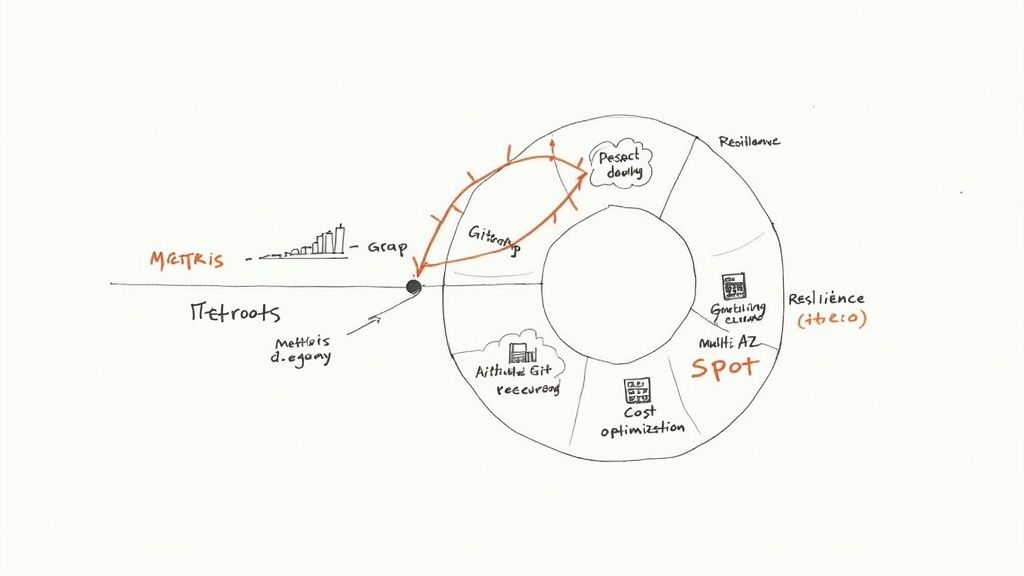

Embracing GitOps For Declarative Management

GitOps establishes a Git repository as the single source of truth for both application and infrastructure state. Every intended change to your environment is initiated as a pull request, which is then peer-reviewed, tested, and automatically applied to the target system.

Tools like Argo CD continuously monitor your repository. When a commit is merged to the main branch, Argo CD detects the drift between the desired state in Git and the actual state running in your Kubernetes cluster on Amazon EKS, automatically reconciling the difference. This declarative approach:

- Eliminates configuration drift by design.

- Simplifies rollbacks to a git revert command.

- Provides a complete, auditable history of every change to your infrastructure.
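The reconciliation idea behind GitOps can be illustrated with a small diff between desired and actual state. This is a conceptual sketch, not how Argo CD is implemented, and the resource names are hypothetical:

```python
def diff_state(desired: dict, actual: dict) -> dict:
    """Return the changes needed to make `actual` match `desired`.

    Both arguments map a resource name to its configuration.
    """
    return {
        # Declared in Git but missing from the cluster.
        "create": sorted(k for k in desired if k not in actual),
        # Present in both, but the running config has drifted.
        "update": sorted(
            k for k in desired if k in actual and desired[k] != actual[k]
        ),
        # Running in the cluster but no longer declared in Git.
        "delete": sorted(k for k in actual if k not in desired),
    }


def in_sync(desired: dict, actual: dict) -> bool:
    """True when there is nothing to reconcile."""
    return not any(diff_state(desired, actual).values())
```

For example, with desired = {"web": {"replicas": 3}} and actual = {"web": {"replicas": 2}}, the only planned change is an update to web. Reverting the commit that raised the replica count changes the desired state back, and the next reconciliation undoes the change in the cluster.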

For a deeper dive, review our guide on Infrastructure as Code best practices.

Architecting For Resilience And Automated Failover

Operational excellence requires systems that are not just fault-tolerant but self-healing. This means architecting for failure and automating the recovery process.

- Multi-AZ Deployments: Deploy applications and databases across a minimum of two, preferably three, Availability Zones (AZs) to ensure high availability. With capacity spread this way, an outage in a single AZ should not take your application offline.

- Automated Failover: Use Amazon Route 53 health checks combined with DNS failover routing policies. If a health check on the primary endpoint fails, Route 53 automatically redirects traffic to a healthy standby endpoint in another region or AZ.
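The failover decision itself is simple to express. The sketch below models an active-passive policy in plain Python; the endpoint names are hypothetical, and the choice to answer with the primary when nothing is healthy is an assumption of this sketch:

```python
def route(primary: str, secondary: str, healthy: dict) -> str:
    """Pick the endpoint that should receive traffic.

    `healthy` maps endpoint name to the latest health-check result.
    """
    if healthy.get(primary, False):
        return primary
    if healthy.get(secondary, False):
        # Primary failed its health check: fail over to the standby.
        return secondary
    # Nothing is healthy: fall back to the primary instead of returning no answer.
    return primary
```

Calling route("eu-west-1.example.com", "us-east-1.example.com", {"eu-west-1.example.com": False, "us-east-1.example.com": True}) selects the standby. How quickly clients actually move over also depends on the health-check interval and the DNS record TTL.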

"A proactive approach to resilience transforms your architecture from fault-tolerant to self-healing, reducing Mean Time to Recovery (MTTR) from hours to minutes."

Advanced Cost Optimization Techniques

Cost management must be an integral part of the DevOps lifecycle, not an afterthought. Go beyond simple instance right-sizing and embed cost-saving strategies directly into your architecture and pipelines.

- AWS Graviton Processors: Migrate workloads to ARM-based Graviton instances to achieve up to 40% better price-performance over comparable x86-based instances.

- EC2 Spot Instances: Utilize Spot Instances for fault-tolerant workloads like CI/CD build agents or batch processing jobs, which can reduce compute costs by up to 90%.

- Proactive Budget Alerts: Configure AWS Budgets alerts to notify a Slack channel (for example, through an Amazon SNS topic) or trigger a Lambda function that throttles resources when actual or forecasted spend exceeds predefined thresholds.

The financial impact of these technical decisions is significant:

| Technique | Savings Potential |
|---|---|
| Graviton-Powered Instances | Up to 40% better price-performance |
| EC2 Spot Instances | Up to 90% lower compute cost |
| Proactive Budget Alerts | Prevent overruns |
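The two percentages are not the same kind of number, and a short calculation shows the difference. The sketch assumes a hypothetical baseline of USD 1,000 per month and reads "40% better price-performance" as 1.4 times the work per dollar; both are assumptions for illustration:

```python
def cost_after_discount(baseline: float, discount: float) -> float:
    """Cost after a direct price reduction (e.g. 0.90 for a 90% discount)."""
    return round(baseline * (1 - discount), 2)


def cost_for_same_work(baseline: float, price_performance_gain: float) -> float:
    """Cost of the same workload when each dollar buys more work.

    A gain of 0.40 means 1.4x the work per dollar, so the same work
    costs baseline / 1.4, not baseline * 0.6.
    """
    return round(baseline / (1 + price_performance_gain), 2)


if __name__ == "__main__":
    baseline = 1000.0  # hypothetical monthly on-demand x86 spend in USD
    print("Spot, best case:", cost_after_discount(baseline, 0.90))
    print("Graviton, best case:", cost_for_same_work(baseline, 0.40))
```

Under these assumptions the best-case Spot figure is a direct 90% cut, while a 40% price-performance gain works out to a saving of roughly 29% for the same workload.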

With the global DevOps platform market projected to reach USD 16.97 billion by 2025 and an astonishing USD 103.21 billion by 2034, these optimizations ensure a sustainable and scalable cloud investment. For more market analysis, see the DevOps Platform Market report on custommarketinsights.com.

Frequently Asked Questions

When organizations consider AWS DevOps consulting, several technical and logistical questions consistently arise. These typically revolve around measuring effectiveness, project timelines, and integrating modern practices with existing systems.

Here are the direct answers to the most common queries regarding ROI, implementation timelines, legacy modernization, and staffing models.

What's the typical ROI on an AWS DevOps engagement?

Return on investment is measured through key DORA metrics: deployment frequency, lead time for changes, change failure rate, and mean time to recovery (MTTR).

We have seen clients increase deployment frequency by 3× or more (for example, moving from monthly to weekly releases is roughly a 4× increase) and reduce MTTR by over 60% by implementing automated failover and robust observability. The ROI is demonstrated by increased development velocity and improved operational stability.
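To baseline these metrics before an engagement, the four values can be computed from a simple deployment log. The record shape below (commit time, deploy time, failure flag, restore time) is a hypothetical minimum, not the export format of any particular tool:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def _mean(deltas: List[timedelta]) -> Optional[timedelta]:
    """Average a list of durations, or None when the list is empty."""
    return sum(deltas, timedelta()) / len(deltas) if deltas else None


def dora_summary(deploys: List[Deployment], period_days: int) -> dict:
    """Compute the four DORA metrics over a reporting period."""
    failures = [d for d in deploys if d.failed]
    return {
        "deployments_per_week": len(deploys) / (period_days / 7),
        "mean_lead_time": _mean(
            [d.deployed_at - d.committed_at for d in deploys]
        ),
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        # Only failures that have been restored contribute to MTTR.
        "mean_time_to_recovery": _mean(
            [d.restored_at - d.deployed_at for d in failures if d.restored_at]
        ),
    }
```

Running the same function on data from before and after the engagement gives a like-for-like comparison for each metric.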

How long does a standard implementation take?

The timeline is scope-dependent. A foundational CI/CD pipeline for a single application can be operational in two weeks.

A comprehensive transformation, including IaC for complex infrastructure and modernization of legacy systems, is typically a 3–6 month engagement. The primary variable is the complexity of the existing architecture and the number of applications being onboarded.

Can consultants help modernize our legacy applications?

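Yes, this is a core competency. The typical strategy begins with containerizing monolithic applications and running them on Amazon ECS or EKS with minimal code changes. This is often loosely called "lift and shift," although replatforming is the more precise term, since a pure lift and shift moves the application unchanged.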

This initial step decouples the application from the underlying OS, enabling it to be managed within a modern CI/CD pipeline. Subsequent phases focus on progressively refactoring the monolith into microservices, allowing for independent development and deployment.

Should we use a consultant or build an in-house team?

This is not a binary choice. A consultant provides specialized, accelerated expertise to establish a best-practice foundation quickly and avoid common architectural pitfalls.

Your in-house team possesses critical domain knowledge and is essential for long-term ownership and evolution. The most effective model is a hybrid approach where consultants lead the initial architecture and implementation while actively training and upskilling your internal team for a seamless handoff.

Additional Insights

The key to a successful engagement is defining clear, quantifiable success metrics and technical milestones from the outset. This ensures a measurable ROI.

The specifics of any project are dictated by its scope. Migrating a legacy application, for instance, requires additional time for dependency analysis and refactoring compared to a greenfield project.

To structure your evaluation process, follow these actionable steps:

- Define success metrics: Establish baseline and target values for deployment frequency, change failure rate, and MTTR.

- Map out the timeline: Create a phased project plan, from initial pipeline implementation to full organizational adoption.

- Assess modernization needs: Conduct a technical audit of legacy applications to determine the feasibility and effort required for containerization.

- Plan your staffing: Define the roles and responsibilities for both consultants and internal staff to ensure effective knowledge transfer.

Follow these technical best practices during the engagement:

- Set clear KPIs and review them in every sprint meeting.

- Schedule regular architecture review sessions with your consultants.

- Insist on automated dashboards that provide real-time visibility into your key deployment and operational metrics.

Custom Scenarios

Every organization has unique technical and regulatory constraints. A financial services company, for example, may require end-to-end encrypted pipelines with immutable audit logs using AWS Config and CloudTrail. This adds complexity and time for compliance validation but is a non-negotiable requirement.

Other common technical scenarios include:

- Multi-region architectures requiring sophisticated global traffic management using Route 53 latency-based routing and inter-region peering with Transit Gateway.

- Targeted upskilling workshops to train internal teams on specific technologies like Terraform, Kubernetes, or serverless architecture.

Ensure your AWS DevOps consulting engagement is explicitly tailored to your industry's specific technical, security, and compliance requirements.

Final Considerations

The selection of an AWS DevOps consulting partner is a critical factor in the success of these initiatives. The goal is to find a partner that can align a robust technical strategy with your core business objectives.

Always verify service level agreements, validate partner certifications, and request detailed, technical references.

- Look for partners whose consultants hold the AWS Certified DevOps Engineer – Professional and AWS Certified Solutions Architect – Professional certifications.

- Demand regular metric-driven reviews to maintain accountability and ensure full visibility into the project's progress.

Adhering to these guidelines will help you establish a more effective and successful technical partnership.

Ready to optimize your software delivery? Contact OpsMoon today for a free work planning session.