10 Cloud cost optimization strategies You Should Know

Discover the top 10 cloud cost optimization strategies strategies and tips. Complete guide with actionable insights.

The allure of the public cloud is its promise of limitless scalability and agility, but this flexibility comes with a significant challenge: managing and controlling costs. As infrastructure scales, cloud bills can quickly spiral out of control, consuming a substantial portion of an organization's budget. This uncontrolled spending, often driven by idle resources, over-provisioning, and suboptimal architectural choices, directly impacts profitability and can hinder innovation by diverting funds from core development initiatives. For technical leaders, from CTOs and IT managers to DevOps engineers and SREs, mastering cloud cost management is no longer a secondary concern; it is a critical business function.

This guide moves beyond generic advice to provide a comprehensive roundup of actionable, technical cloud cost optimization strategies. We will dissect ten distinct approaches, offering specific implementation steps, command-line examples, and practical scenarios to help you take immediate control of your cloud spend. You will learn how to precisely right-size compute instances, develop a sophisticated Reserved Instance and Savings Plans portfolio, and leverage the cost-saving potential of Spot Instances without compromising stability.

We will also explore advanced tactics like implementing intelligent storage tiering, optimizing Kubernetes resource requests, and minimizing expensive data transfer fees. Each strategy is presented as a self-contained module, complete with the tools and metrics needed to measure your success. By implementing these detailed methods, you can transform your cloud infrastructure from a major cost center into a lean, efficient engine for growth, ensuring every dollar spent delivers maximum value. This article is your technical playbook for building a cost-effective and highly scalable cloud environment.

1. Right-sizing Computing Resources

Right-sizing is a fundamental cloud cost optimization strategy focused on aligning your provisioned computing resources with your actual workload requirements. It directly combats the common issue of over-provisioning, where organizations pay for powerful, expensive instances that are chronically underutilized. The process involves systematically analyzing performance metrics like CPU, memory, network I/O, and storage throughput to select the most cost-effective instance type and size that still meets performance targets.

This strategy is not a one-time fix but a continuous process. By regularly monitoring usage data, engineering teams can identify instances that are either too large (and thus wasteful) or too small (risking performance bottlenecks). For example, Airbnb successfully automated its right-sizing process, leading to a significant 25% reduction in their Amazon EC2 costs by dynamically adjusting instance sizes based on real-time demand.

How to Implement Right-sizing

Implementing a successful right-sizing initiative involves a data-driven, iterative approach. It is more than just picking a smaller instance; it's about finding the correct instance.

Actionable Steps:

- Establish a Baseline: Begin by collecting at least two to four weeks of performance data using monitoring tools like Amazon CloudWatch, Azure Monitor, or Google Cloud's operations suite. Focus on metrics such as

CPUUtilization(average and maximum),MemoryUtilization,NetworkIn/NetworkOut, andEBSReadBytes/EBSWriteBytes. - Analyze and Identify Targets: Use native cloud tools like AWS Compute Optimizer or Azure Advisor to get initial recommendations. Manually query metrics for instances with sustained

CPUUtilizationbelow 40% as primary candidates for downsizing. For AWS, you can use the AWS CLI to find underutilized instances:aws ce get-rightsizing-recommendation --service "AmazonEC2" --filter '{"Dimensions": {"Key": "REGION", "Values": ["us-east-1"]}}'. - Test in Non-Production: Start your right-sizing experiments in development or staging environments. Use load testing tools like Apache JMeter or k6 to simulate production traffic and validate the performance of the new instance type.

- Implement and Monitor: Roll out changes gradually to production workloads using a blue-green or canary deployment strategy. Closely monitor application performance metrics (APM) like p95/p99 latency and error rates. Set up automated CloudWatch Alarms or Azure Monitor Alerts to quickly detect performance degradation.

Key Insight: Don't just downsize; consider changing instance families. A workload might be memory-bound but not CPU-intensive. Switching from a general-purpose instance (like AWS's m5) to a memory-optimized one (like r5) can often provide better performance at a lower cost, even if the core count is smaller. For I/O-heavy workloads, consider storage-optimized instances like the I3 or I4i series.

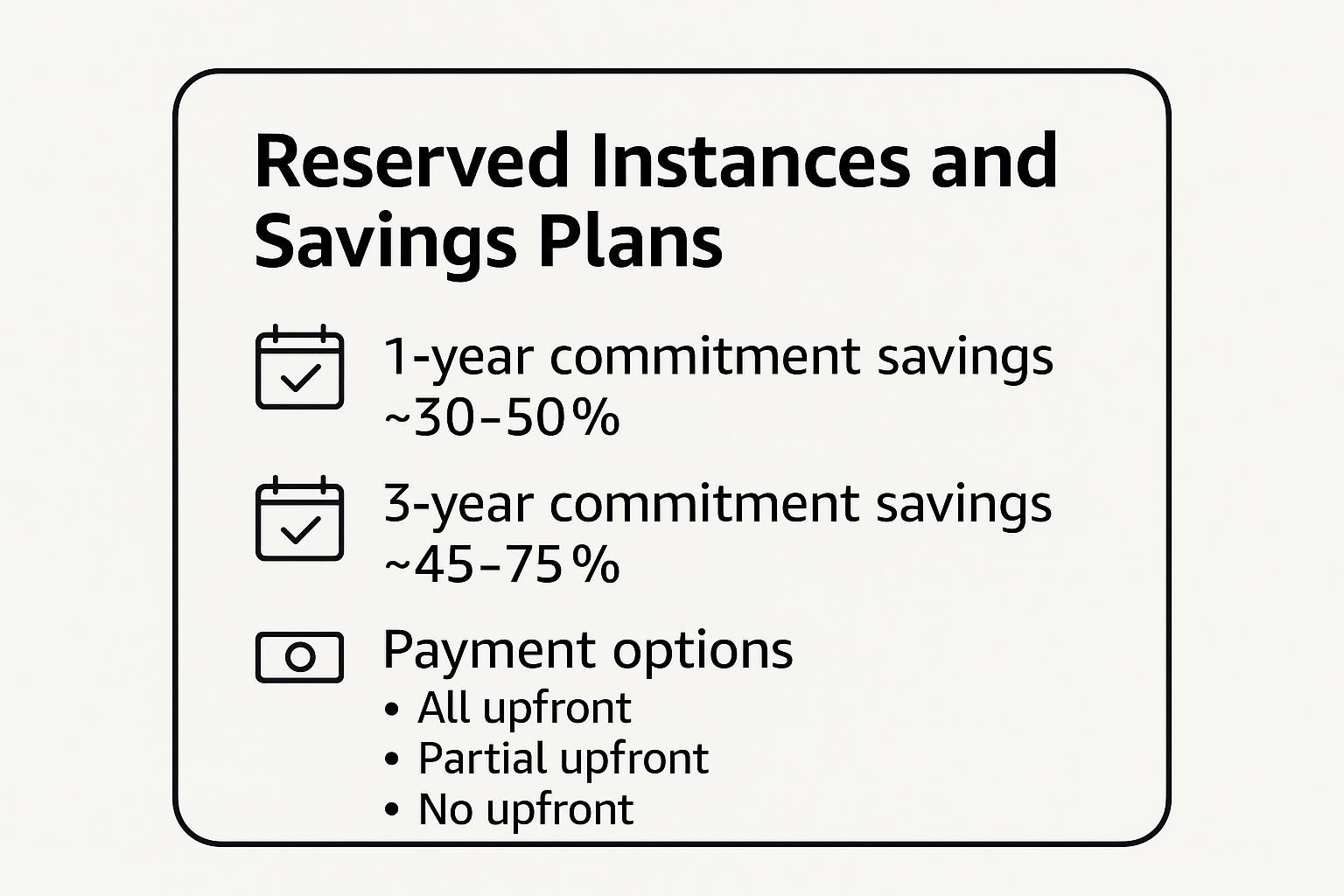

2. Reserved Instance and Savings Plans Strategy

This strategy involves committing to a specific amount of compute usage for a one or three-year term in exchange for a significant discount compared to on-demand pricing. Major cloud providers like AWS, Azure, and Google Cloud offer these commitment-based models, which are ideal for workloads with stable, predictable usage patterns. By forecasting capacity needs, organizations can lock in savings of up to 75%, drastically reducing their overall cloud spend.

The infographic above summarizes the potential savings and payment flexibility these plans offer. As highlighted, committing to a longer term yields deeper discounts, making this one of the most impactful cloud cost optimization strategies for stable infrastructure. For instance, Pinterest leveraged a strategic Reserved Instance (RI) purchasing plan to save an estimated $20 million annually, while Lyft used AWS Savings Plans to cut costs by 40% on its steady-state workloads.

How to Implement a Commitment Strategy

Successfully implementing RIs or Savings Plans requires careful analysis and ongoing management to maximize their value. It is not a "set it and forget it" solution but an active portfolio management process.

Actionable Steps:

- Analyze Usage History: Use cloud-native tools like AWS Cost Explorer or Azure Advisor to analyze at least 30-60 days of usage data. Focus on identifying consistent, always-on workloads like production databases, core application servers, or essential support services. Export the data to a CSV for deeper analysis if needed.

- Start with Stable Workloads: Begin by purchasing commitments for your most predictable resources. Cover a conservative portion of your usage (e.g., 50-60%) to avoid over-committing while you build confidence in your forecasting. A good starting point is to cover the lowest observed hourly usage over the past month.

- Choose the Right Commitment Type: Evaluate the trade-offs. Standard RIs offer the highest discount but lock you into a specific instance family. Convertible RIs (AWS) provide flexibility to change instance families. Savings Plans (AWS) offer a flexible discount based on a dollar-per-hour commitment across instance families and regions. For Azure, evaluate Reserved VM Instances vs. Azure Savings Plans for compute.

- Monitor and Optimize Coverage: Regularly track your RI/Savings Plan utilization and coverage reports in AWS Cost Explorer or Azure Cost Management. Aim for utilization rates above 95%. If you have underutilized RIs, consider selling them on the AWS RI Marketplace or modifying them if your plan allows. Set up budget alerts to notify you when your on-demand spending exceeds a certain threshold, indicating a need to purchase more reservations.

Key Insight: Combine commitment models with right-sizing. Before purchasing a Reserved Instance, first ensure the target instance is right-sized for its workload. Committing to an oversized, underutilized instance for one to three years locks in waste, diminishing the potential savings. Always right-size first, then reserve.

3. Auto-scaling and Dynamic Resource Management

Auto-scaling is one of the most powerful cloud cost optimization strategies, enabling your infrastructure to dynamically adjust its computing capacity in response to real-time demand. This approach ensures you automatically provision enough resources to maintain application performance during traffic spikes, while also scaling down to eliminate waste and reduce costs during quiet periods. It effectively prevents paying for idle resources by precisely matching your compute power to your workload's current needs.

This strategy is crucial for applications with variable or unpredictable traffic patterns. For instance, Snapchat leverages auto-scaling to seamlessly manage fluctuating user activity throughout the day, ensuring a smooth user experience while optimizing costs. Similarly, during its massive launch, Pokémon GO used Google Cloud's auto-scaling to grow from 50 to over 50,000 instances to handle unprecedented player demand, showcasing the immense power of dynamic resource allocation.

How to Implement Auto-scaling

Effective auto-scaling goes beyond simply turning it on; it requires careful configuration of scaling policies and continuous monitoring to achieve optimal results. The goal is to create a resilient and cost-efficient system that reacts intelligently to demand shifts.

Actionable Steps:

- Define Scaling Policies: Use tools like AWS Auto Scaling Groups, Azure Virtual Machine Scale Sets, or the Kubernetes Horizontal Pod Autoscaler. Configure policies based on performance metrics like CPU utilization (

TargetTrackingScaling), request count per target (ApplicationLoadBalancerRequestCountPerTarget), or custom metrics from a message queue (SQSApproximateNumberOfMessagesVisible). For example, set a rule to add a new instance when average CPU utilization exceeds 70% for five consecutive minutes. - Set Cooldown Periods: Implement cooldown periods (e.g., 300 seconds) to prevent the scaling group from launching or terminating additional instances before the previous scaling activity has had time to stabilize. This avoids rapid, unnecessary fluctuations, known as "thrashing."

- Use Predictive Scaling: For workloads with known, recurring traffic patterns (like e-commerce sites during holidays), leverage predictive scaling features offered by AWS. These tools use machine learning on historical CloudWatch data to forecast future demand and schedule capacity changes in advance.

- Integrate Health Checks: Configure robust health checks (e.g., ELB health checks) to ensure that new instances launched by the auto-scaler are fully operational and have passed status checks before being added to the load balancer and serving traffic. This prevents routing traffic to unhealthy instances and maintains application reliability.

Key Insight: Amplify your savings by incorporating Spot Instances into your auto-scaling groups. You can configure the group to request cheaper Spot Instances as its primary capacity source and fall back to more expensive On-Demand Instances only when Spot Instances are unavailable. This multi-tiered approach, using a mixed-instances policy, can reduce compute costs by up to 90% for fault-tolerant workloads.

4. Spot Instance and Preemptible VM Utilization

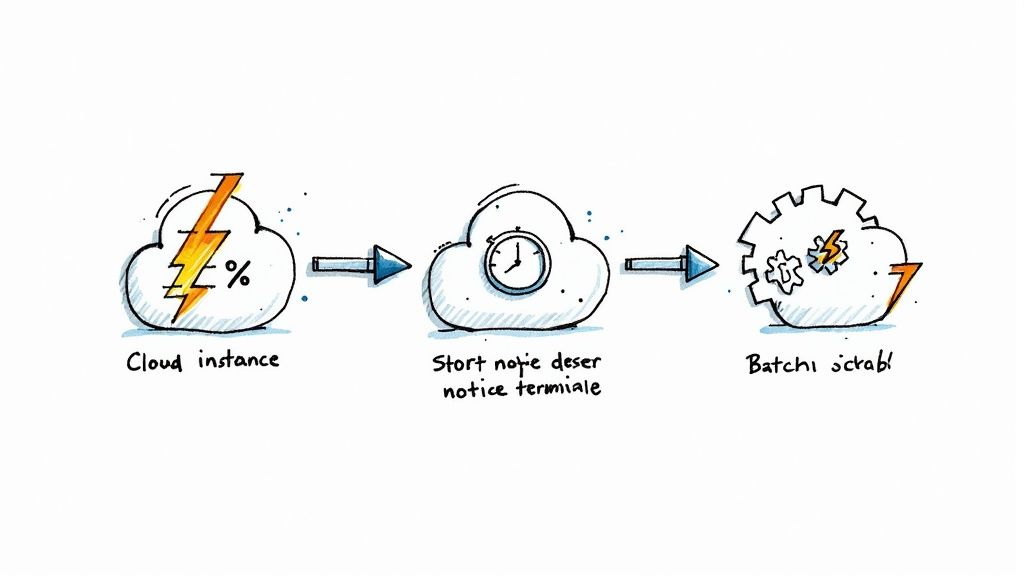

Leveraging spot instances, one of the most powerful cloud cost optimization strategies, involves using a cloud provider's spare compute capacity at a significant discount, often up to 90% off on-demand prices. These resources, known as Spot Instances on AWS, Preemptible VMs on Google Cloud, or Spot Virtual Machines on Azure, can be reclaimed by the provider with short notice, typically a two-minute warning. This model is perfectly suited for workloads that are fault-tolerant, stateless, or can be easily interrupted and resumed.

This strategy unlocks massive savings for the right applications. For example, Lyft processes over 20 billion GPS data points daily using spot instances, cutting compute costs by 75%. Similarly, genomics research firm Benchling uses spot instances for complex data processing, achieving an 80% cost reduction. The key is architecting applications to gracefully handle the inherent volatility of these instances.

How to Implement Spot and Preemptible Instances

Successfully using spot instances requires a shift from treating compute as a stable resource to treating it as a transient commodity. The implementation focuses on automation, flexibility, and fault tolerance.

Actionable Steps:

- Identify Suitable Workloads: Analyze your applications to find ideal candidates. Prime examples include big data processing jobs (EMR, Spark, Hadoop), batch rendering, continuous integration/continuous delivery (CI/CD) pipelines, and development/testing environments. These tasks can typically withstand interruptions.

- Utilize Managed Services: Leverage native cloud services like AWS EC2 Fleet or Auto Scaling Groups with a mixed instances policy or Azure VM Scale Sets with spot priority. These services automatically provision a mix of on-demand and spot instances to meet capacity needs while replacing terminated spot instances based on a defined allocation strategy (e.g.,

lowest-priceorcapacity-optimized). - Implement Checkpointing: For long-running jobs, architect applications to periodically save progress to durable storage like Amazon S3 or Azure Blob Storage. For AWS, handle the

EC2 Spot Instance Interruption Noticeby creating a CloudWatch Event rule to trigger a Lambda function that gracefully saves state before shutdown. - Diversify and Automate: Don't rely on a single spot instance type. Configure your instance groups (like EC2 Fleet) to pull from multiple instance families and sizes (e.g., m5.large, c5.large, r5.large) across different Availability Zones. This diversification significantly reduces the chance of all your instances being terminated simultaneously due to a price spike or capacity demand in one specific pool.

Key Insight: The most advanced spot strategies treat fleets of instances as a single, resilient compute pool. Tools like NetApp's Spot Ocean abstract away the complexity of bidding, provisioning, and replacement. They can automatically fall back to on-demand instances if spot capacity is unavailable, ensuring workload availability while maximizing cost savings.

5. Multi-cloud and Hybrid Cloud Cost Arbitrage

Multi-cloud and hybrid cloud cost arbitrage is an advanced cloud cost optimization strategy that involves strategically distributing workloads across multiple public cloud providers (like AWS, Azure, and GCP) and private, on-premises infrastructure. This approach allows organizations to leverage pricing discrepancies, specialized services, and regional cost variations to achieve the best possible price-to-performance ratio for each specific workload, while simultaneously mitigating vendor lock-in.

This strategy moves beyond single-provider optimization to treat the cloud market as an open ecosystem. For instance, a company might run its primary compute on Azure due to favorable enterprise agreements, use Google Cloud for its powerful BigQuery and AI Platform services, and leverage AWS for its broad Lambda and DynamoDB offerings. Famously, Dropbox saved a reported $75 million over two years by migrating its primary storage workloads from AWS to its own custom-built infrastructure, a prime example of hybrid cloud arbitrage.

How to Implement a Multi-cloud/Hybrid Strategy

Successfully executing a multi-cloud or hybrid strategy requires significant architectural planning and robust management tools. It is not about randomly placing services; it's about intentional, data-driven workload placement.

Actionable Steps:

- Standardize with Agnostic Tooling: Adopt cloud-agnostic tools to ensure portability. Use Terraform or Pulumi for infrastructure as code (IaC) and containerize applications with Docker and orchestrate them with Kubernetes. This abstraction layer makes moving workloads between environments technically feasible.

- Analyze and Model Costs: Before migrating, perform a thorough cost analysis using tools that can model cross-cloud expenses. Factor in not just compute and storage prices but also crucial, often-overlooked expenses like data egress fees. A workload may be cheaper to run in one cloud, but expensive data transfer costs (e.g., >$0.09/GB from AWS to the internet) could negate the savings.

- Start with Stateless and Non-Critical Workloads: Begin your multi-cloud journey with stateless applications or non-critical services like development/testing environments or CI/CD runners. These workloads are less sensitive to latency and have fewer data gravity concerns, making them ideal for initial pilots.

- Implement Centralized Governance and Monitoring: Deploy a multi-cloud management platform (CMP) like CloudHealth by VMware, Flexera One, or an open-source tool like OpenCost. These tools provide a unified view of costs, help enforce security policies using frameworks like Open Policy Agent (OPA), and manage compliance across all your cloud and on-premises environments.

Key Insight: True arbitrage power comes from workload portability. The ability to dynamically shift a workload from one cloud to another based on real-time cost or performance data is the ultimate goal. This requires a sophisticated CI/CD pipeline and Kubernetes-based architecture that can deploy to different clusters (e.g., EKS, GKE, AKS) with minimal configuration changes.

6. Storage Lifecycle Management and Tiering

Storage lifecycle management is a powerful cloud cost optimization strategy that automates the movement of data to more cost-effective storage tiers based on its age, access frequency, and business value. Not all data requires the high-performance, high-cost "hot" storage designed for frequent access. This strategy ensures you only pay premium prices for data that actively needs it, while less-frequently accessed data is transitioned to cheaper "cold" or "archive" tiers.

This approach directly addresses the ever-growing cost of cloud storage by aligning spending with data's actual lifecycle value. For example, Thomson Reuters implemented lifecycle policies for vast archives of legal documents, saving millions by automatically moving older, rarely accessed files to lower-cost tiers. Similarly, Pinterest optimizes image storage costs by using automated tiering based on how often pins are viewed, ensuring popular content remains fast while older content is archived cheaply.

How to Implement Storage Lifecycle Management

Effective implementation requires a clear understanding of your data access patterns and a well-defined policy that balances cost savings with data retrieval needs. It's a strategic process of classifying data and automating its journey through different storage classes.

Actionable Steps:

- Analyze Data Access Patterns: Use tools like Amazon S3 Storage Lens or Azure Storage analytics to understand how your data is accessed. Identify which datasets are frequently requested (hot), infrequently accessed (warm), and rarely touched (cold). The S3 Storage Lens "Activity" dashboard is crucial for this analysis.

- Define and Create Lifecycle Policies: Based on your analysis, create rules within your cloud provider's storage service. For example, a policy in AWS S3, defined in JSON or via the console, could automatically move objects prefixed with

logs/to the Standard-Infrequent Access (S3-IA) tier after 30 days, and then to S3 Glacier Flexible Retrieval after 90 days. Also, include rules to expire incomplete multipart uploads and delete old object versions. - Leverage Intelligent Tiering for Unpredictable Workloads: For data with unknown or changing access patterns, use automated services like AWS S3 Intelligent-Tiering or Azure Blob Storage's lifecycle management with its

last-access-timecondition. These services monitor access at the object level and move data between frequent and infrequent access tiers automatically, optimizing costs without manual analysis for a small monitoring fee. - Tag Data for Granular Control: Implement a robust data tagging strategy. Tagging objects by project, department, or data type (e.g.,

Type:Log,Project:Alpha) allows you to apply different, more specific lifecycle policies to different datasets within the same storage bucket or container. You can define lifecycle rules that apply only to objects with a specific tag.

Key Insight: Always factor in retrieval costs and latency when designing your tiering strategy. Archival tiers like AWS Glacier Deep Archive offer incredibly low storage prices (around $0.00099 per GB-month) but come with higher per-object retrieval fees and longer access times (up to 12 hours). Ensure these retrieval characteristics align with your business SLAs for that specific data. The goal is cost optimization, not making critical data inaccessible.

7. Serverless and Function-as-a-Service (FaaS) Architecture

Adopting a serverless architecture is a powerful cloud cost optimization strategy that shifts the operational paradigm from managing servers to executing code on demand. With FaaS platforms like AWS Lambda, you are billed based on the number of requests and the precise duration your code runs, measured in milliseconds. This pay-per-execution model completely eliminates costs associated with idle server capacity, making it ideal for workloads with intermittent or unpredictable traffic patterns.

This strategy fundamentally changes how you think about infrastructure costs. Instead of provisioning for peak load, the platform scales automatically to handle demand, from zero to thousands of requests per second. For example, iRobot leveraged AWS Lambda for its IoT data processing needs, resulting in an 85% reduction in infrastructure costs. Similarly, Nordstrom migrated its event-driven systems to a serverless model, cutting related expenses by over 60% by paying only for active computation.

How to Implement a Serverless Strategy

Successfully moving to a FaaS model requires rethinking application architecture and focusing on event-driven, stateless functions. It is a strategic choice for microservices, data processing pipelines, and API backends.

Actionable Steps:

- Identify Suitable Workloads: Start by identifying event-driven, short-lived tasks in your application. Good candidates include image resizing upon S3 upload, real-time file processing, data transformation for ETL pipelines (e.g., Lambda triggered by Kinesis), and API endpoints for mobile or web frontends using API Gateway.

- Decompose Monoliths: Break down monolithic applications into smaller, independent functions that perform a single task. Use IaC tools like the Serverless Framework or AWS SAM (Serverless Application Model) to define, deploy, and manage your functions and their required cloud resources (like API Gateway triggers or S3 event notifications) as a single CloudFormation stack.

- Optimize Function Configuration: Profile your functions to determine the optimal memory allocation. Assigning too much memory wastes money, while too little increases execution time and can also increase costs. Use open-source tools like AWS Lambda Power Tuning, a state machine-based utility, to automate this process and find the best cost-performance balance for each function.

- Monitor and Refine: Use observability tools like AWS X-Ray, Datadog, or Lumigo to trace requests and monitor function performance, execution duration, and error rates. Continuously analyze these metrics to identify opportunities for code optimization, such as optimizing database connection management or reducing external API call latency.

Key Insight: Manage cold starts for latency-sensitive applications. A "cold start" occurs when a function is invoked for the first time or after a period of inactivity, adding latency. Use features like AWS Lambda Provisioned Concurrency or Azure Functions Premium plan to keep a specified number of function instances "warm" and ready to respond instantly, ensuring a consistent user experience for a predictable fee.

8. Container Optimization and Kubernetes Resource Management

This advanced cloud cost optimization strategy centers on refining the efficiency of containerized workloads, particularly those orchestrated by Kubernetes. It moves beyond individual virtual machines to optimize at the application and cluster level, maximizing resource density and minimizing waste. The goal is to run more workloads on fewer nodes by tightly managing CPU and memory allocation for each container, a process known as bin packing.

This strategy is highly effective because Kubernetes clusters often suffer from significant resource fragmentation and underutilization without proper management. By leveraging Kubernetes-native features like autoscaling and resource quotas, organizations can create a self-regulating environment that adapts to demand. For example, Spotify famously reduced its infrastructure costs by 40% through extensive Kubernetes optimization and improved resource utilization, demonstrating the immense financial impact of this approach.

How to Implement Kubernetes Resource Management

Effective Kubernetes cost management requires a granular, data-driven approach to resource allocation and cluster scaling. It's about ensuring every container gets what it needs without hoarding resources that others could use.

Actionable Steps:

- Define Resource Requests and Limits: This is the most critical step. For every container in your deployment manifests (

deployment.yaml), set CPU and memoryrequests(the amount guaranteed to a container, influencing scheduling) andlimits(the hard ceiling it can consume). Use tools like Goldilocks or Prometheus to analyze application performance and set realistic baselines. - Implement Horizontal Pod Autoscaler (HPA): Configure HPA to automatically increase or decrease the number of pods in a deployment based on observed metrics like CPU utilization or custom application metrics exposed via Prometheus Adapter. This ensures your application scales with user traffic, not just the underlying infrastructure.

- Enable Cluster Autoscaler: Use the Cluster Autoscaler to dynamically add or remove nodes from your cluster. It works in tandem with the HPA, provisioning new nodes when pods are

Pendingdue to resource constraints and removing underutilized nodes to cut costs. Many businesses explore expert Kubernetes services to correctly implement and manage these complex scaling mechanisms. - Utilize Spot Instances: Integrate spot or preemptible instances into your node groups for fault-tolerant, non-critical workloads. Use taints and tolerations to ensure that only appropriate workloads are scheduled onto these ephemeral nodes. Tools like Karpenter can significantly simplify and optimize this process.

Key Insight: Don't treat all workloads equally. Use Pod Disruption Budgets (PDBs) to protect critical applications from voluntary disruptions (like node draining). At the same time, assign lower priority classes (

PriorityClass) to non-essential batch jobs. This allows the Kubernetes scheduler to preempt lower-priority pods in favor of high-priority services when resources are scarce, maximizing both availability and cost-efficiency.

9. Cloud Cost Monitoring and FinOps Implementation

FinOps, short for Financial Operations, is a cultural and operational practice that brings financial accountability to the variable spending model of the cloud. It is not just a tool, but a cultural shift that unites finance, technology, and business teams to manage cloud costs effectively. This strategy emphasizes real-time visibility, shared ownership, and continuous optimization, transforming cloud spending from a reactive IT expense into a strategic, value-driven business metric.

The core goal of FinOps is to help engineering and finance teams make data-backed spending decisions. By implementing FinOps, companies like HERE Technologies have reduced their cloud costs by 30%. Similarly, Atlassian manages a complex multi-account AWS environment by applying FinOps principles for detailed cost allocation and chargebacks, ensuring every team understands its financial impact. This level of detail is one of the most powerful cloud cost optimization strategies available.

How to Implement FinOps

Implementing FinOps is a journey that starts with visibility and evolves into a mature, organization-wide practice. It requires a commitment to collaboration and data transparency across different departments.

Actionable Steps:

- Establish Granular Visibility: The first step is to see exactly where money is going. Implement a comprehensive and enforced resource tagging and labeling strategy to allocate costs to specific projects, teams, or business units. Use native tools like AWS Cost Explorer and Azure Cost Management + Billing, or dedicated platforms like Cloudability and Apptio. Learn more about how effective observability underpins this process.

- Create Accountability and Ownership: Assign clear ownership for cloud spending. Each engineering team or product owner should have access to a dashboard showing their service's budget and usage. This accountability fosters a cost-conscious mindset directly within the teams that provision resources.

- Implement Regular Review Cycles: Establish a regular cadence for cost review meetings (e.g., weekly or bi-weekly) involving stakeholders from engineering, finance, and product management. Use these sessions to review spending against forecasts, analyze anomalies in the AWS Cost and Usage Report (CUR), and prioritize optimization tasks in a backlog.

- Automate Optimization and Governance: Use automation to enforce cost-saving policies. This can include Lambda functions to shut down non-production instances outside of business hours (

Schedule-Tag), AWS Config rules to detect unattached EBS volumes or idle load balancers, and automated budget alerts via Amazon SNS for potential overruns.

Key Insight: FinOps is not about saving money at all costs; it's about maximizing business value from the cloud. The focus should be on unit economics, such as "cost per customer" or "cost per transaction." This shifts the conversation from "how much are we spending?" to "are we spending efficiently to drive growth?"

10. Data Transfer and Network Optimization

Data transfer and network optimization is a critical cloud cost optimization strategy that focuses on minimizing the egress costs associated with moving data out of a cloud provider's network. These charges, often overlooked during initial architectural design, can accumulate rapidly and become a major, unexpected expense. This strategy involves the strategic placement of resources, the use of Content Delivery Networks (CDNs), and implementing efficient data movement patterns to reduce bandwidth consumption.

This is not just about reducing traffic volume; it's about making intelligent architectural choices to control data flow. For example, Netflix saves millions annually by heavily leveraging its own CDN (Open Connect) and strategically placing servers within ISP networks, bringing content closer to viewers and drastically cutting its data transfer costs. Similarly, Shopify reduced its data transfer costs by 45% through a combination of aggressive CDN optimization and modern image compression formats.

How to Implement Network Optimization

Effective network cost control requires a multi-faceted approach that combines architectural planning with ongoing monitoring and the right technology stack. It's about being deliberate with every byte that leaves your cloud environment.

Actionable Steps:

- Analyze and Baseline Data Transfer: Use cloud-native tools like AWS Cost and Usage Report (CUR) and query it with Athena, Azure Cost Management, or Google Cloud's detailed billing export to identify your top sources of data transfer costs. Look for line items like

DataTransfer-Out-Bytesand group by service, region, and availability zone to find the biggest offenders. - Implement a Content Delivery Network (CDN): For any publicly facing static assets (images, CSS, JavaScript) or streaming media, use a CDN like Amazon CloudFront, Azure CDN, or Cloudflare. A CDN caches your content at edge locations worldwide, serving users from a nearby server instead of your origin, which dramatically reduces costly

DataTransfer-Out-Bytescharges from your primary cloud region. - Keep Traffic Within the Cloud Network: Whenever possible, architect your applications to keep inter-service communication within the same cloud region. Data transfer between services in the same region using private IPs is often free or significantly cheaper than inter-region or internet-bound traffic. Use VPC Endpoints (for AWS services) or Private Link to secure traffic to cloud services without sending it over the public internet.

- Compress and Optimize Data Payloads: Before transferring data, ensure it is compressed. Implement Gzip or Brotli compression for text-based data at the web server (e.g., Nginx, Apache) or load balancer level. For images, use modern, efficient formats like WebP or AVIF and apply lossless or lossy compression where appropriate. This reduces the total bytes transferred, directly lowering costs.

Key Insight: Pay close attention to data transfer between different availability zones (AZs). While traffic within a single AZ is free, traffic between AZs in the same region is not (typically $0.01/GB in each direction). For high-chattiness applications, co-locating dependent services in the same AZ can yield significant savings, though you must balance this cost optimization against high-availability requirements which often necessitate multi-AZ deployments.

Cloud Cost Optimization Strategies Comparison

| Strategy | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |

|---|---|---|---|---|---|

| Right-sizing Computing Resources | Moderate; requires continuous monitoring | Moderate; monitoring tools | Cost savings (20-50%), better efficiency | Workloads with variable usage; cost reduction | Automated recommendations; improved efficiency |

| Reserved Instance & Savings Plans | Moderate; requires forecasting | Low to moderate; upfront costs | Significant cost savings (30-75%), budget predictability | Stable, predictable workloads | Large discounts; budgeting stability |

| Auto-scaling & Dynamic Management | High; complex policy configuration | High; real-time monitoring | Automatic scaling, cost optimization | Applications with fluctuating traffic | Automatic cost control; performance boost |

| Spot Instance & Preemptible VMs | Moderate; requires fault-tolerant design | Low; uses spare capacity | Massive cost savings (up to 90%) | Batch jobs, dev/test, flexible workloads | Very low cost; high performance availability |

| Multi-cloud & Hybrid Arbitrage | High; complex management | High; multiple platform skills | Cost optimization via pricing arbitrage | Multi-cloud or hybrid environments | Avoid vendor lock-in; leverage best pricing |

| Storage Lifecycle Management | Moderate; policy setup | Moderate; storage tiering | Reduced storage costs, automated management | Data with variable access patterns | Automated cost reduction; compliance support |

| Serverless & FaaS Architecture | Moderate; architecture redesign may be needed | Low; pay per execution | Cost savings on variable traffic workloads | Event-driven, variable or unpredictable traffic | No server management; automatic scaling |

| Container Optimization & Kubernetes | High; requires container orchestration expertise | Moderate; cluster resources | Better resource utilization and scaling | Containerized microservices, dynamic workloads | Improved efficiency; automatic scaling |

| Cloud Cost Monitoring & FinOps | High; organizational and cultural change | Low to moderate; tooling needed | Enhanced cost visibility and accountability | Enterprises seeking cross-team cost management | Proactive cost control; collaboration boost |

| Data Transfer & Network Optimization | Moderate; global infrastructure management | Moderate; CDNs and edge nodes | Reduced data transfer costs and improved latency | Applications with heavy data transfer or global users | Cost savings; improved performance |

Final Thoughts

Embarking on the journey of cloud cost optimization is not a one-time project but a continuous, strategic discipline. Throughout this guide, we've explored a comprehensive suite of ten powerful cloud cost optimization strategies, moving far beyond surface-level advice to provide actionable, technical roadmaps. From the foundational practice of right-sizing instances and the strategic procurement of Reserved Instances to the dynamic efficiencies of auto-scaling and the tactical use of Spot Instances, each strategy represents a critical lever you can pull to gain control over your cloud expenditure.

We've delved into the architectural shifts that unlock profound savings, such as adopting serverless functions and optimizing Kubernetes resource management. Furthermore, we highlighted the often-overlooked yet significant impact of storage lifecycle policies and data transfer optimization. The common thread weaving these disparate tactics together is the necessity of a cultural shift towards financial accountability, crystallized in the practice of FinOps. Without robust monitoring, clear visibility, and cross-functional collaboration, even the most brilliant technical optimizations will fall short of their potential.

Synthesizing Strategy into Action

The true power of these concepts is realized when they are integrated into a cohesive, multi-layered approach rather than applied in isolation. A mature cloud financial management practice doesn't just choose one strategy; it artfully combines them.

- Foundation: Start with visibility and right-sizing. You cannot optimize what you cannot see. Implement robust tagging and monitoring to identify waste, then aggressively resize overprovisioned resources. This is your baseline.

- Commitment: Layer on Reserved Instances or Savings Plans for your stable, predictable workloads identified during the foundational stage. This provides a significant discount on the resources you know you'll need.

- Dynamism: For your variable or spiky workloads, implement auto-scaling. This ensures you only pay for the capacity you need, precisely when you need it. For stateless, fault-tolerant workloads, introduce Spot Instances to capture the deepest discounts.

- Architecture: As you evolve, re-architect applications to be more cloud-native. Embrace serverless (FaaS) for event-driven components to eliminate idle costs, and fine-tune your Kubernetes deployments with precise resource requests and limits to maximize container density.

- Data Management: Simultaneously, enforce strict storage lifecycle policies and optimize your network architecture to minimize costly data transfer fees.

This layered model transforms cloud cost optimization from a reactive, cost-cutting chore into a proactive, value-driving engine for your organization.

The Ultimate Goal: Sustainable Cloud Efficiency

Mastering these cloud cost optimization strategies is about more than just lowering your monthly bill from AWS, Azure, or GCP. It's about building a more resilient, efficient, and scalable engineering culture. When your teams are empowered with the tools and knowledge to make cost-aware decisions, you foster an environment of ownership and innovation. The capital you save can be reinvested into core product development, market expansion, or talent acquisition, directly fueling your business's growth.

Ultimately, effective cloud cost management is a hallmark of a mature technology organization. It demonstrates technical excellence, financial discipline, and a strategic understanding of how to leverage the cloud's power without succumbing to its potential for unchecked spending. The journey requires diligence, the right tools, and a commitment to continuous improvement, but the rewards – a lean, powerful, and cost-effective cloud infrastructure – are well worth the effort.

Navigating the complexities of Reserved Instances, Spot fleets, and Kubernetes resource management requires deep expertise and constant vigilance. OpsMoon provides on-demand, expert DevOps and SRE talent to implement these advanced cloud cost optimization strategies for you. Partner with us at OpsMoon to transform your cloud infrastructure into a model of financial efficiency and technical excellence.